Ghanim Mukhtar

Bootstrapping Robotic Ecological Perception from a Limited Set of Hypotheses Through Interactive Perception

Jan 30, 2019

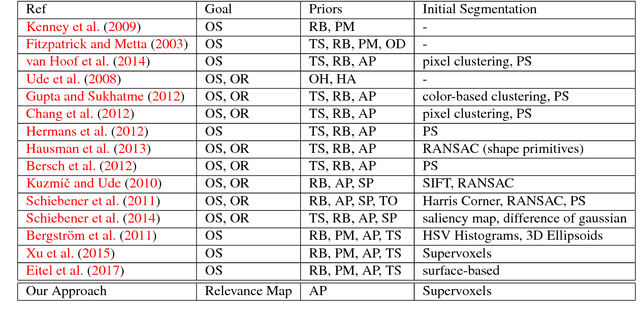

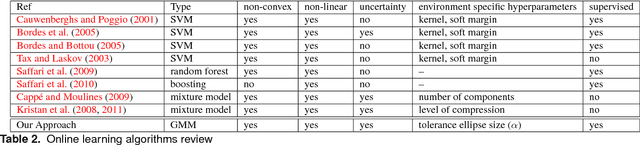

Abstract: To solve its task, a robot needs to be able to interpret its perceptions. In vision, this interpretation is particularly difficult and relies on an understanding of the structure of the scene, at least to the extent required by the robot's task and sensorimotor abilities. A robot able to build and adapt this interpretation process according to its own tasks and capabilities would push back the limits of what robots can achieve in uncontrolled environments. One solution is to provide the robot with processes for building such representations that are not specific to an environment or a situation. Many works focus on object segmentation, recognition and manipulation. Defining an object solely on the basis of its visual appearance is challenging given the wide range of possible objects and environments. Therefore, current approaches make simplifying assumptions about the structure of a scene. Such assumptions restrict the object extraction process to the environments in which those assumptions hold. To limit such assumptions, we introduce an exploration method aimed at identifying moveable elements in a scene without relying on the concept of object. Using the interactive perception framework, we aim to bootstrap the acquisition of a representation of the environment with a minimum of context-specific assumptions. The robotic system builds a perceptual map, called a relevance map, which indicates the moveable parts of the current scene. A classifier is trained online to predict the category of each region (moveable or non-moveable). It is also used to select the region with which to interact, with the goal of minimizing the uncertainty of the classification. A dedicated classifier, the collaborative mixture models classifier, is introduced to meet these needs. The method is tested on a set of scenarios of increasing complexity, using both simulations and a PR2 robot.
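The abstract describes an interactive loop: classify every region, choose the one the classifier is least sure about, interact with it, observe whether it moved, update the classifier, and read the relevance map off the classifier's predictions. The following is a minimal sketch of that loop under stated assumptions. It does **not** implement the collaborative mixture models classifier. Instead it swaps in plain online logistic regression as a stand-in, uses binary predictive entropy as the uncertainty measure, and represents regions as hypothetical feature vectors. The `push_and_observe` callback is hypothetical and stands in for the robot pushing a region and detecting motion.

```python
import math


def _sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineLogisticClassifier:
    """Hypothetical stand-in classifier (NOT the paper's collaborative
    mixture models classifier): logistic regression updated with one
    SGD step per newly labeled region."""

    def __init__(self, n_features, lr=0.5):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        # Probability that the region is moveable.
        return _sigmoid(self.b + sum(wi * xi for wi, xi in zip(self.w, x)))

    def update(self, x, label):
        # Gradient of the log-loss with respect to the logit is (p - y).
        err = self.predict_proba(x) - label
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


def entropy(p):
    # Binary entropy in nats; zero when the prediction is certain.
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def select_region(clf, regions, explored):
    # Pick the unexplored region whose predicted class is most uncertain.
    candidates = [i for i in range(len(regions)) if i not in explored]
    return max(candidates, key=lambda i: entropy(clf.predict_proba(regions[i])))


def explore(regions, push_and_observe, n_interactions):
    """Run the interaction loop; return (relevance_map, interaction order).

    push_and_observe(i) is a hypothetical callback returning 1 if region i
    moved when pushed and 0 otherwise."""
    clf = OnlineLogisticClassifier(n_features=len(regions[0]))
    explored, order = set(), []
    for _ in range(min(n_interactions, len(regions))):
        i = select_region(clf, regions, explored)
        clf.update(regions[i], push_and_observe(i))
        explored.add(i)
        order.append(i)
    # Relevance map: predicted probability of being moveable for each region.
    relevance_map = [clf.predict_proba(x) for x in regions]
    return relevance_map, order
```

As a usage example, with one-dimensional toy features where regions with a positive feature are moveable, `explore(regions, lambda i: 1 if regions[i][0] > 0 else 0, 6)` interacts first with the regions near the decision boundary and ends with a relevance map above 0.5 exactly for the moveable regions. Entropy-based selection is only one common way to "minimize the uncertainty of the classification"; the paper's own selection criterion may differ.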
