Marco Dorigo

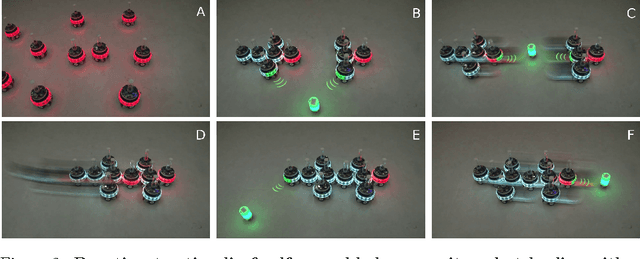

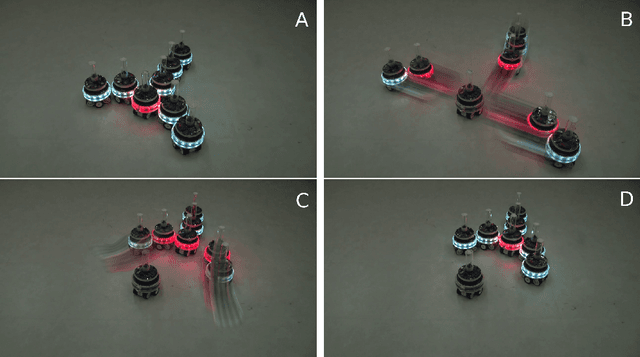

Online automatic code generation for robot swarms: LLMs and self-organizing hierarchy

Oct 06, 2025

Abstract:Our recently introduced self-organizing nervous system (SoNS) provides robot swarms with 1) ease of behavior design and 2) global estimation of the swarm configuration and its collective environment, facilitating the implementation of online automatic code generation for robot swarms. In a demonstration with 6 real robots and simulation trials with >30 robots, we show that when a SoNS-enhanced robot swarm gets stuck, it can automatically solicit and run code generated by an external LLM on the fly, completing its mission with an 85% success rate.

Swarm Oracle: Trustless Blockchain Agreements through Robot Swarms

Sep 19, 2025

Abstract:Blockchain consensus, rooted in the principle "don't trust, verify", limits access to real-world data, which may be ambiguous or inaccessible to some participants. Oracles address this limitation by supplying data to blockchains, but existing solutions may reduce autonomy, transparency, or reintroduce the need for trust. We propose Swarm Oracle: a decentralized network of autonomous robots -- that is, a robot swarm -- that use onboard sensors and peer-to-peer communication to collectively verify real-world data and provide it to smart contracts on public blockchains. Swarm Oracle leverages the built-in decentralization, fault tolerance and mobility of robot swarms, which can flexibly adapt to meet information requests on demand, even in remote locations. Unlike typical cooperative robot swarms, Swarm Oracle integrates robots from multiple stakeholders, protecting the system from single-party biases but also introducing potential adversarial behavior. To ensure the secure, trustless and global consensus required by blockchains, we employ a Byzantine fault-tolerant protocol that enables robots from different stakeholders to operate together, reaching social agreements of higher quality than the estimates of individual robots. Through extensive experiments using both real and simulated robots, we showcase how consensus on uncertain environmental information can be achieved, despite several types of attacks orchestrated by large proportions of the robots, and how a reputation system based on blockchain tokens lets Swarm Oracle autonomously recover from faults and attacks, a requirement for long-term operation.

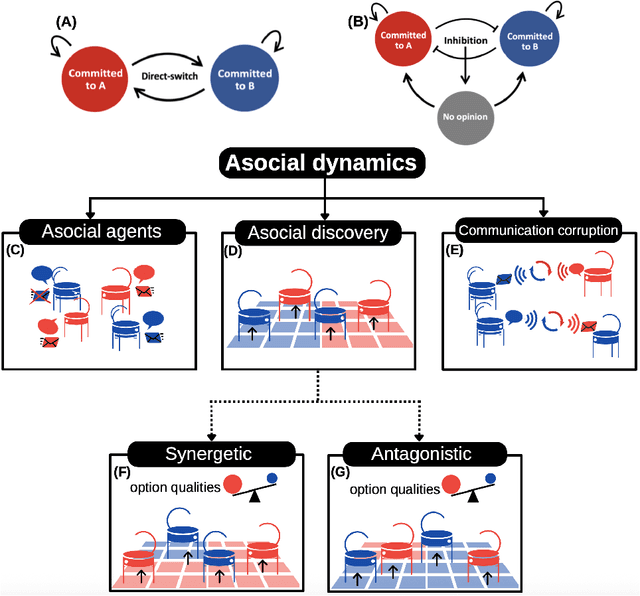

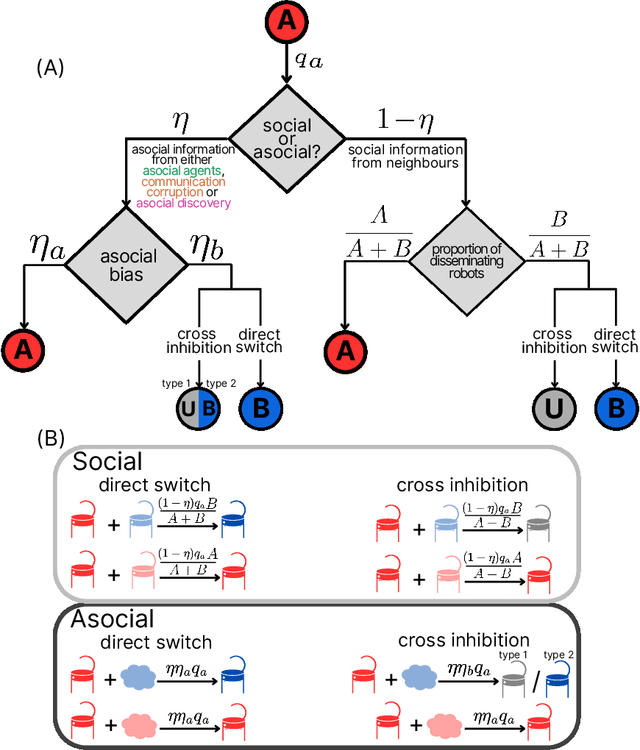

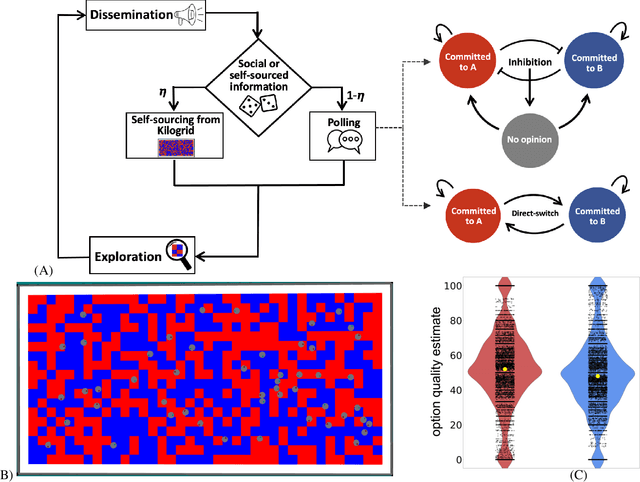

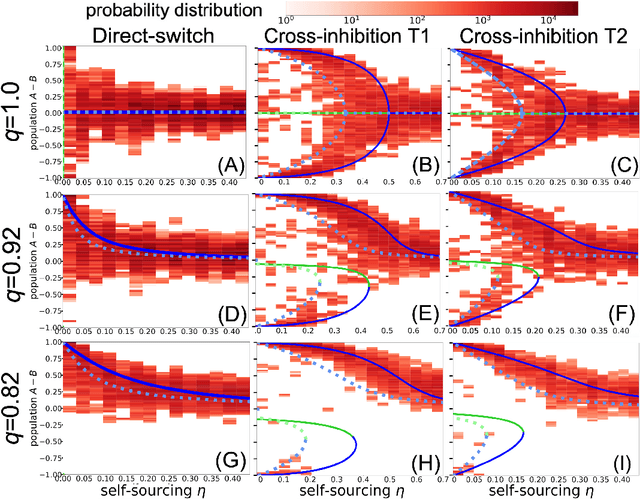

Bio-inspired decision making in swarms under biases from stubborn robots, corrupted communication, and independent discovery

Sep 09, 2025

Abstract:Minimalistic robot swarms offer a scalable, robust, and cost-effective approach to performing complex tasks with the potential to transform applications in healthcare, disaster response, and environmental monitoring. However, coordinating such decentralised systems remains a fundamental challenge, particularly when robots are constrained in communication, computation, and memory. In our study, individual robots frequently make errors when sensing the environment, yet the swarm can rapidly and reliably reach consensus on the best among $n$ discrete options. We compare two canonical mechanisms of opinion dynamics -- direct-switch and cross-inhibition -- which are simple yet effective rules for collective information processing observed in biological systems across scales, from neural populations to insect colonies. We generalise the existing mean-field models by considering asocial biases influencing the opinion dynamics. While swarms using direct-switch reliably select the best option in the absence of asocial dynamics, their performance deteriorates once such biases are introduced, often resulting in decision deadlocks. In contrast, bio-inspired cross-inhibition enables faster, more cohesive, accurate, robust, and scalable decisions across a wide range of biased conditions. Our findings provide theoretical and practical insights into the coordination of minimal swarms that extend to a broad class of decentralised decision-making systems in biology and engineering.
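
As a rough illustration of the two opinion-update rules named in this abstract, the sketch below uses their commonly described form: under direct-switch a robot adopts a differing opinion it hears, while under cross-inhibition a committed robot that hears a differing opinion first becomes uncommitted, and only uncommitted robots adopt what they hear. The function names `update` and `simulate`, the random pairing scheme, and the omission of option quality, sensing errors, and asocial biases are all simplifying assumptions made here, not the paper's model.

```python
import random

UNCOMMITTED = None  # placeholder state for a robot holding no opinion


def update(own, heard, rule):
    """Return the listener's new opinion after hearing one neighbour's opinion."""
    if heard is UNCOMMITTED or heard == own:
        return own  # nothing to react to
    if rule == "direct-switch":
        return heard  # adopt the differing opinion immediately
    if rule == "cross-inhibition":
        # Committed robots are inhibited (become uncommitted);
        # uncommitted robots are recruited to the heard opinion.
        return heard if own is UNCOMMITTED else UNCOMMITTED
    raise ValueError(f"unknown rule: {rule}")


def simulate(n_a, n_b, rule, steps, seed=0):
    """Hypothetical well-mixed swarm: one random listener/speaker pair per step."""
    rng = random.Random(seed)
    opinions = ["A"] * n_a + ["B"] * n_b
    for _ in range(steps):
        listener, speaker = rng.sample(range(len(opinions)), 2)
        opinions[listener] = update(opinions[listener], opinions[speaker], rule)
    return opinions
```

Calling `simulate(6, 4, "cross-inhibition", 200)` returns the final list of opinions, which can be tallied to see how commitment shifts over time.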

LLM2Swarm: Robot Swarms that Responsively Reason, Plan, and Collaborate through LLMs

Oct 15, 2024

Abstract:Robot swarms are composed of many simple robots that communicate and collaborate to fulfill complex tasks. Robot controllers usually need to be specified by experts on a case-by-case basis via programming code. This process is time-consuming, prone to errors, and unable to take into account all situations that may be encountered during deployment. On the other hand, recent Large Language Models (LLMs) have demonstrated reasoning and planning capabilities, introduced new ways to interact with and program machines, and represent domain and commonsense knowledge. Hence, we propose to address the aforementioned challenges by integrating LLMs with robot swarms and show the potential in proofs of concept (showcases). For this integration, we explore two approaches. The first approach is 'indirect integration,' where LLMs are used to synthesize and validate the robot controllers. This approach may reduce development time and human error before deployment. Moreover, during deployment, it could be used for on-the-fly creation of new robot behaviors. The second approach is 'direct integration,' where each robot locally executes a separate LLM instance during deployment for robot-robot collaboration and human-swarm interaction. These local LLM instances enable each robot to reason, plan, and collaborate using natural language. To enable further research on our mainly conceptual contribution, we release the software and videos for our LLM2Swarm system: https://github.com/Pold87/LLM2Swarm.

Securing Federated Learning in Robot Swarms using Blockchain Technology

Sep 03, 2024

Abstract:Federated learning is a new approach to distributed machine learning that offers potential advantages such as reducing communication requirements and distributing the costs of training algorithms. Therefore, it could hold great promise in swarm robotics applications. However, federated learning usually requires a centralized server for the aggregation of the models. In this paper, we present a proof-of-concept implementation of federated learning in a robot swarm that does not compromise decentralization. To do so, we use blockchain technology to enable our robot swarm to securely synchronize a shared model that is the aggregation of the individual models without relying on a central server. We then show that introducing a single malfunctioning robot can, however, heavily disrupt the training process. To prevent such situations, we devise protection mechanisms that are implemented through secure and tamper-proof blockchain smart contracts. Our experiments are conducted in ARGoS, a physics-based simulator for swarm robotics, using the Ethereum blockchain protocol which is executed by each simulated robot.
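
To make the aggregation step and its fragility concrete, here is a minimal sketch that assumes the shared model is a plain element-wise average of the robots' weight vectors. The abstract does not state the actual aggregation rule or the protection mechanisms, so the `aggregate` function and the numbers below are purely illustrative, and the blockchain layer is left out entirely.

```python
def aggregate(models):
    """Element-wise mean of equally weighted weight vectors (assumed rule)."""
    n = len(models)
    return [sum(weights) / n for weights in zip(*models)]


# Three hypothetical well-behaved robots with similar local models.
honest = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
# One hypothetical malfunctioning robot submitting extreme values.
faulty = [1000.0, -1000.0]

clean = aggregate(honest)                # [2.0, 3.0]
disrupted = aggregate(honest + [faulty])  # [251.5, -247.75]
```

A single extreme submission drags an unweighted mean far from the honest consensus, which is the kind of disruption the abstract says the smart-contract protections are meant to prevent.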

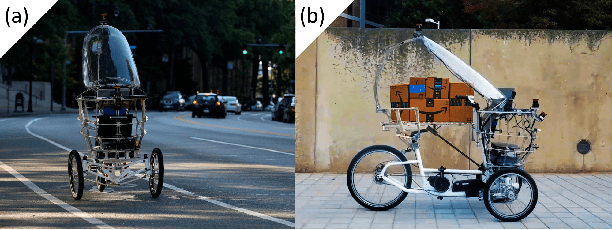

Centralization vs. decentralization in multi-robot coverage: Ground robots under UAV supervision

Aug 13, 2024

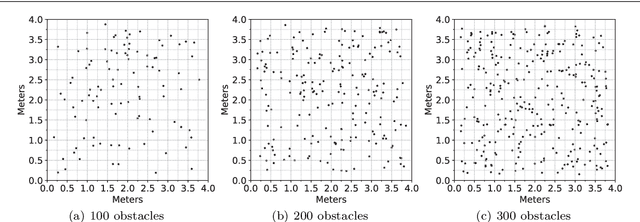

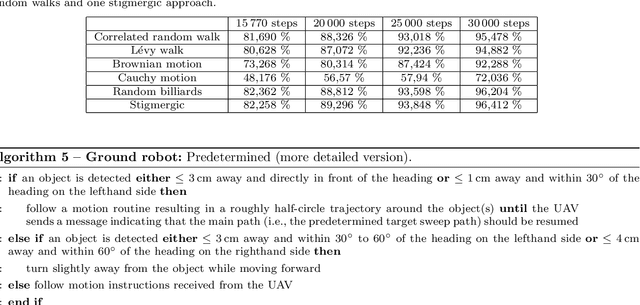

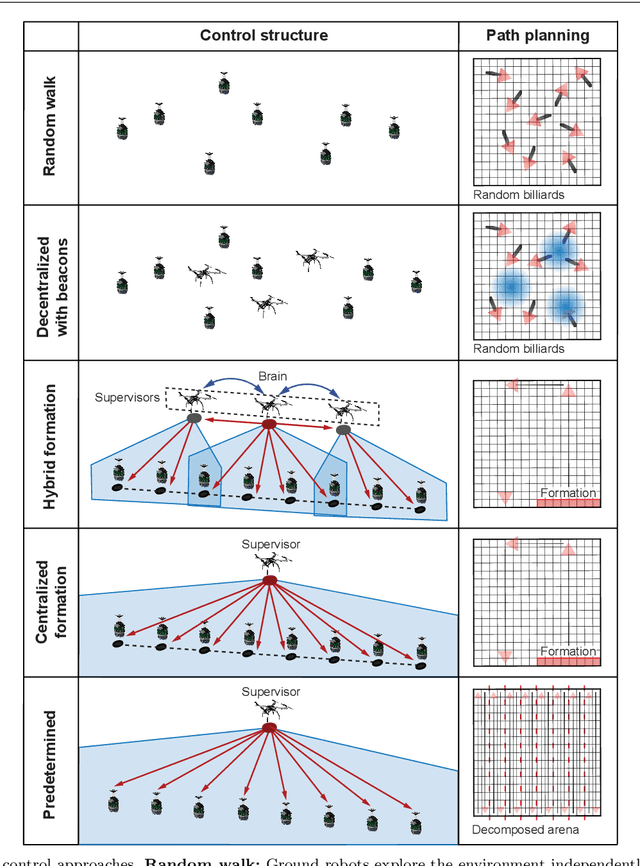

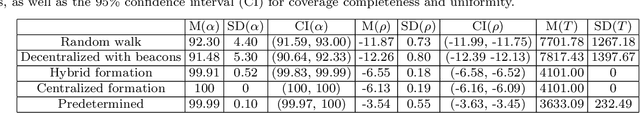

Abstract:In swarm robotics, decentralized control is often proposed as a more scalable and fault-tolerant alternative to centralized control. However, centralized behaviors are often faster and more efficient than their decentralized counterparts. In any given application, the goals and constraints of the task being solved should guide the choice to use centralized control, decentralized control, or a combination of the two. Currently, the tradeoffs that exist between centralization and decentralization have not been thoroughly studied. In this paper, we investigate these tradeoffs for multi-robot coverage, and find that they are more nuanced than expected. For instance, our findings reinforce the expectation that more decentralized control will provide better scalability, but contradict the expectation that more decentralized control will perform better in environments with randomized obstacles. Beginning with a group of fully independent ground robots executing coverage, we add unmanned aerial vehicles as supervisors and progressively increase the degree to which the supervisors use centralized control, in terms of access to global information and a central coordinating entity. We compare, using the multi-robot physics-based simulation environment ARGoS, the following four control approaches: decentralized control, hybrid control, centralized control, and predetermined control. In comparing the ground robots performing the coverage task, we assess the speed and efficiency advantages of centralization -- in terms of coverage completeness and coverage uniformity -- and we assess the scalability and fault tolerance advantages of decentralization. We also assess the energy expenditure disadvantages of centralization due to different energy consumption rates of ground robots and unmanned aerial vehicles, according to the specifications of robots available off-the-shelf.

Toychain: A Simple Blockchain for Research in Swarm Robotics

Jul 09, 2024

Abstract:This technical report describes the implementation of Toychain: a simple, lightweight blockchain implemented in Python, designed for ease of deployment and practicality in robotics research. It can be integrated with various software and simulation tools used in robotics (we have integrated it with ARGoS, Gazebo, and ROS2), and can also be deployed on real robots capable of Wi-Fi communications. The Toychain package supports the deployment of smart contracts written in Python (computer programs that can be executed by and synchronized across a distributed network). The nodes in the blockchain can execute smart contract functions by broadcasting transactions, which update the state of the blockchain upon agreement by all other nodes. The conditions for this agreement are established by a consensus protocol. The Toychain package allows for custom implementations of the consensus protocol, which can be useful for research or meeting specific application requirements. Currently, Proof-of-Work and Proof-of-Authority are implemented.
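
For readers unfamiliar with Proof-of-Work, the following generic sketch shows the basic idea of hash-linked blocks whose hashes must meet a difficulty target. It does not use or reproduce the Toychain API: the block layout and the names `block_hash`, `mine`, and `is_valid` are assumptions chosen for illustration, and networking, transaction broadcasting, and smart contracts are omitted.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 hex digest of a block's canonical JSON encoding."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def mine(prev_hash, transactions, difficulty=3):
    """Search for a nonce whose block hash starts with `difficulty` zero hex digits."""
    nonce = 0
    while True:
        block = {"prev": prev_hash, "txs": transactions, "nonce": nonce}
        if block_hash(block).startswith("0" * difficulty):
            return block
        nonce += 1


def is_valid(chain, difficulty=3):
    """Check that every block links to its predecessor and meets the target."""
    prev = "0" * 64  # conventional all-zero parent for the first block
    for block in chain:
        if block["prev"] != prev:
            return False
        current = block_hash(block)
        if not current.startswith("0" * difficulty):
            return False
        prev = current
    return True


genesis = mine("0" * 64, ["robot_1 reports x=3"])
second = mine(block_hash(genesis), ["robot_2 reports x=4"])
chain = [genesis, second]
```

Altering any transaction in an earlier block changes its hash, so later blocks no longer link to it and `is_valid` rejects the chain unless the work is redone.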

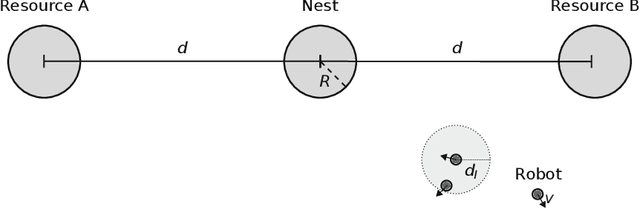

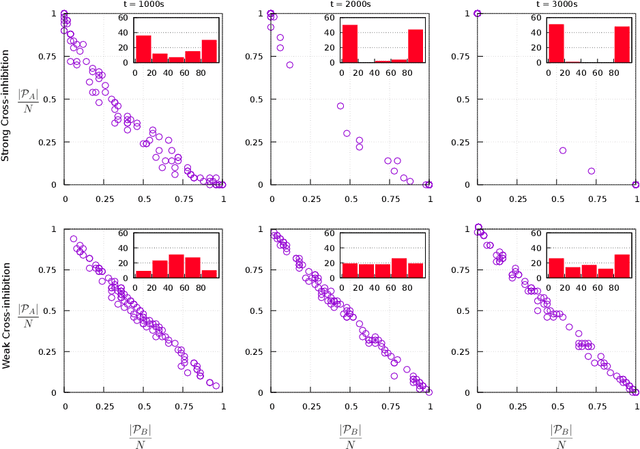

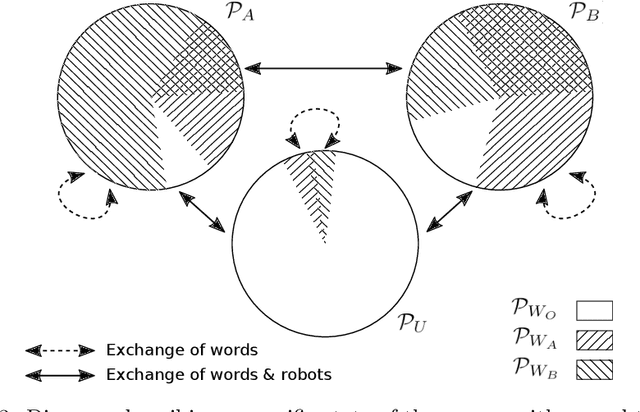

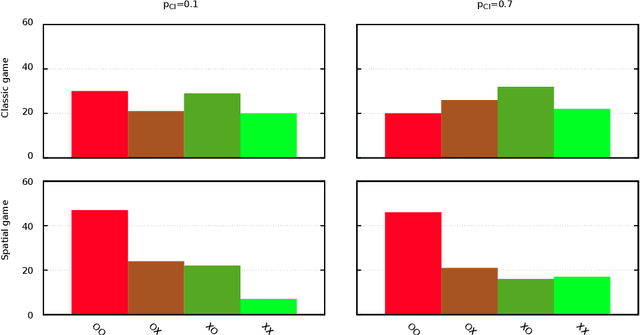

Emergent naming of resources in a foraging robot swarm

Oct 05, 2019

Abstract:We investigate the emergence of language convention within a swarm of robots foraging in an open environment from two identical resources. While foraging, the swarm needs to explore and decide which resource to exploit, moving through complex transitory dynamics towards different possible equilibria, such as selection of a single resource or spread across the two. Our point of interest is the understanding of possible correlations between the emergent, evolving, task-induced interaction network and the language dynamics. In particular, our goal is to determine whether the dynamics of the interaction network are sufficient to determine emergent naming conventions that represent features of the task execution (e.g., choice of one or the other resource) and of the environment. In other words, we look for an emergent vocabulary that is both complete (a word for each resource) and correct (no misnomer) for as long as each resource is relevant to the swarm. In this study, robots play two variants of the minimal language game: the classic one, where words are created when needed, and a new variant we introduce in this article, the spatial minimal naming game, where the creation of words is linked with the discovery of resources by exploring robots. We end the article by proposing a proof-of-concept extension of the spatial minimal naming game that assures the completeness and correctness of the swarm's vocabulary.

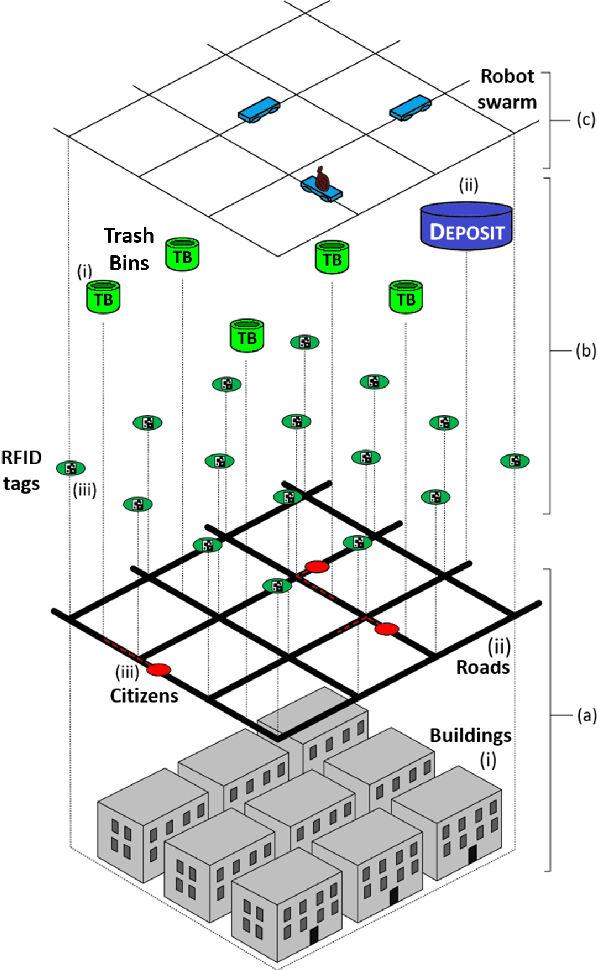

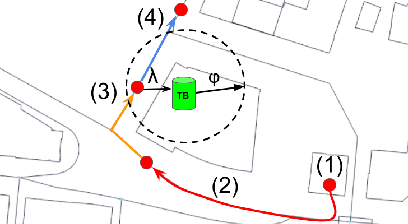

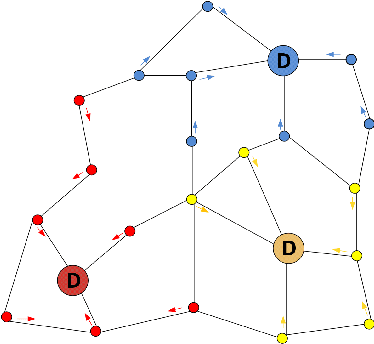

Urban Swarms: A new approach for autonomous waste management

Mar 01, 2019

Abstract:Modern cities are growing ecosystems that face new challenges due to the increasing population demands. One of the many problems they face nowadays is waste management, which has become a pressing issue requiring new solutions. Swarm robotics systems have been attracting an increasing amount of attention in the past years and they are expected to become one of the main driving factors for innovation in the field of robotics. The research presented in this paper explores the feasibility of a swarm robotics system in an urban environment. By using bio-inspired foraging methods such as multi-place foraging and stigmergy-based navigation, a swarm of robots is able to improve the efficiency and autonomy of the urban waste management system in a realistic scenario. To achieve this, a diverse set of simulation experiments was conducted using real-world GIS data and implementing different garbage collection scenarios driven by robot swarms. Results presented in this research show that the proposed system outperforms current approaches. Moreover, results not only show the efficiency of our solution, but also give insights about how to design and customize these systems.

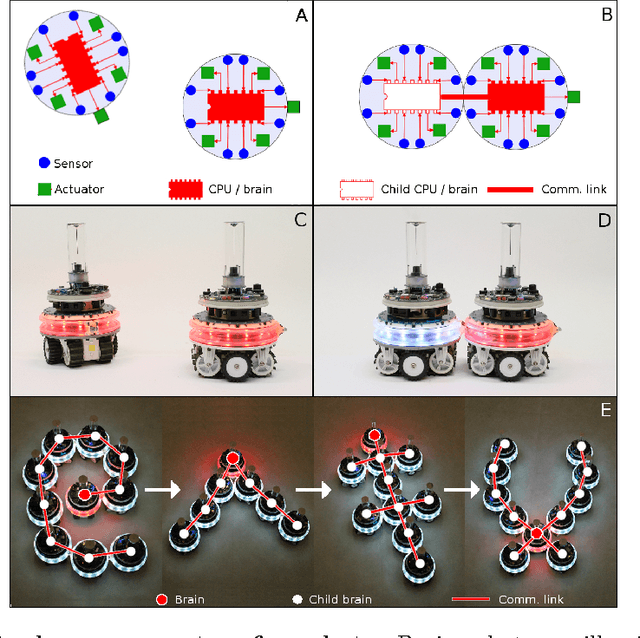

Virtual Nervous Systems for Self-Assembling Robots - A preliminary report

May 26, 2015

Abstract:We define the nervous system of a robot as the processing unit responsible for controlling the robot body, together with the links between the processing unit and the sensorimotor hardware of the robot - i.e., the equivalent of the central nervous system in biological organisms. We present autonomous robots that can merge their nervous systems when they physically connect to each other, creating a "virtual nervous system" (VNS). We show that robots with a VNS have capabilities beyond those found in any existing robotic system or biological organism: they can merge into larger bodies with a single brain (i.e., processing unit), split into separate bodies with independent brains, and temporarily acquire sensing and actuating capabilities of specialized peer robots. VNS-based robots can also self-heal by removing or replacing malfunctioning body parts, including the brain.
