Mostafa Wahby

Centralization vs. decentralization in multi-robot coverage: Ground robots under UAV supervision

Aug 13, 2024

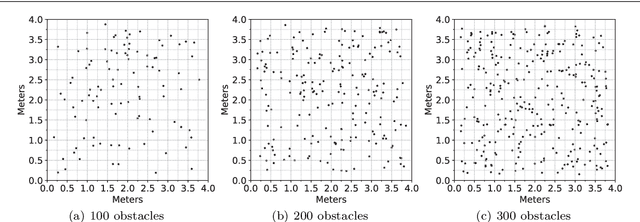

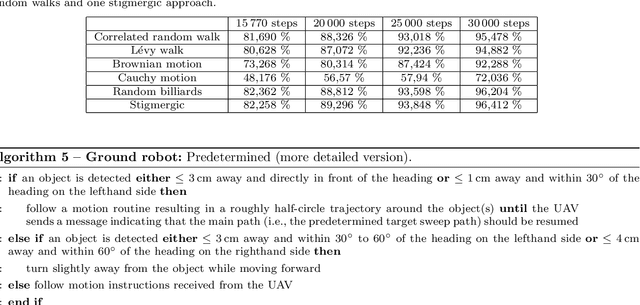

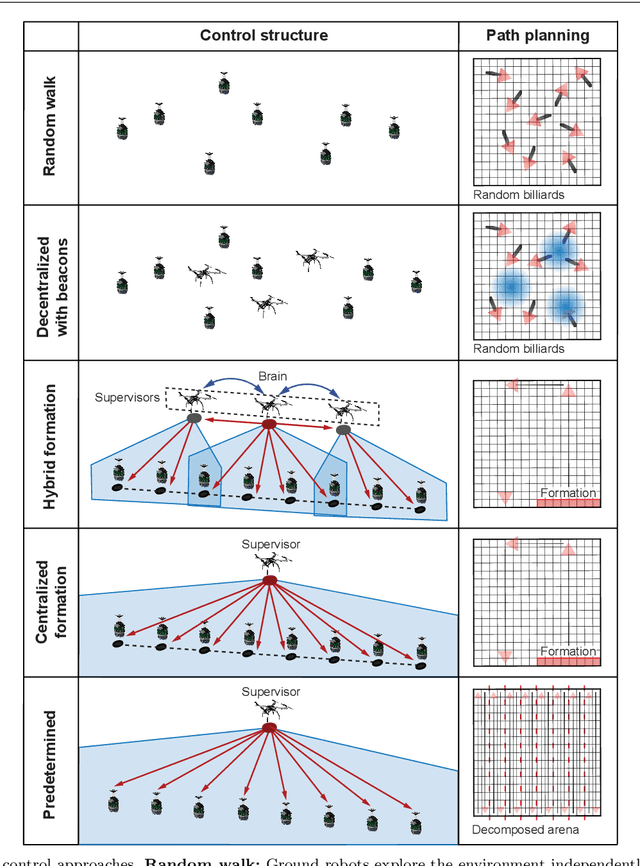

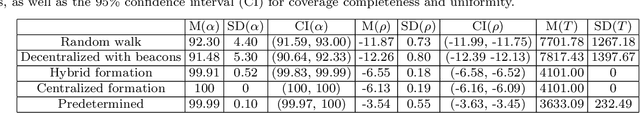

Abstract: In swarm robotics, decentralized control is often proposed as a more scalable and fault-tolerant alternative to centralized control. However, centralized behaviors are often faster and more efficient than their decentralized counterparts. In any given application, the goals and constraints of the task being solved should guide the choice to use centralized control, decentralized control, or a combination of the two. Currently, the tradeoffs that exist between centralization and decentralization have not been thoroughly studied. In this paper, we investigate these tradeoffs for multi-robot coverage, and find that they are more nuanced than expected. For instance, our findings reinforce the expectation that more decentralized control will provide better scalability, but contradict the expectation that more decentralized control will perform better in environments with randomized obstacles. Beginning with a group of fully independent ground robots executing coverage, we add unmanned aerial vehicles as supervisors and progressively increase the degree to which the supervisors use centralized control, in terms of access to global information and a central coordinating entity. We compare, using the multi-robot physics-based simulation environment ARGoS, the following four control approaches: decentralized control, hybrid control, centralized control, and predetermined control. In comparing the ground robots performing the coverage task, we assess the speed and efficiency advantages of centralization, in terms of coverage completeness and coverage uniformity, and we assess the scalability and fault tolerance advantages of decentralization. We also assess the energy expenditure disadvantages of centralization due to different energy consumption rates of ground robots and unmanned aerial vehicles, according to the specifications of robots available off-the-shelf.

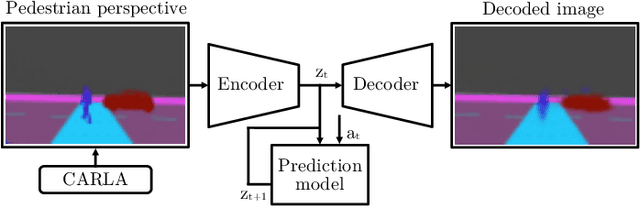

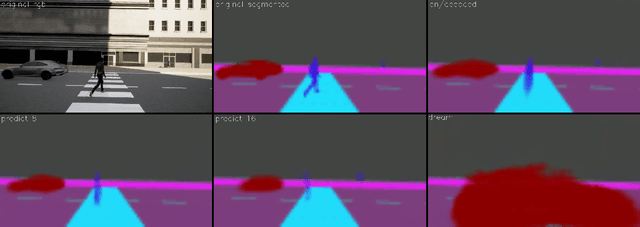

On the Road to Clarity: Exploring Explainable AI for World Models in a Driver Assistance System

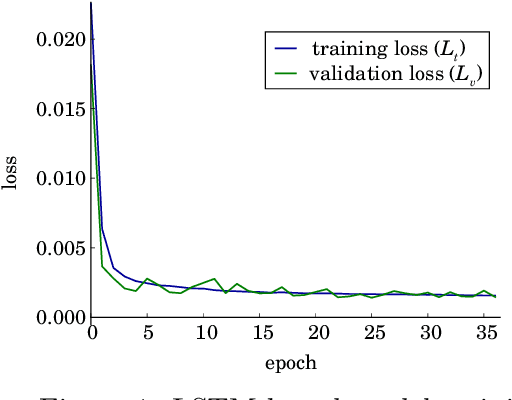

Apr 26, 2024

Abstract: In Autonomous Driving (AD), transparency and safety are paramount, as mistakes are costly. However, neural networks used in AD systems are generally considered black boxes. As a countermeasure, we have methods of explainable AI (XAI), such as feature relevance estimation and dimensionality reduction. Coarse graining techniques can also help reduce dimensionality and find interpretable global patterns. A specific coarse graining method is Renormalization Groups from statistical physics. It has previously been applied to Restricted Boltzmann Machines (RBMs) to interpret unsupervised learning. We refine this technique by building a transparent backbone model for convolutional variational autoencoders (VAE) that allows mapping latent values to input features and has performance comparable to trained black box VAEs. Moreover, we propose a custom feature map visualization technique to analyze the internal convolutional layers in the VAE to explain internal causes of poor reconstruction that may lead to dangerous traffic scenarios in AD applications. In a second key contribution, we propose explanation and evaluation techniques for the internal dynamics and feature relevance of prediction networks. We test a long short-term memory (LSTM) network in the computer vision domain to evaluate the predictability and, in future applications, potentially the safety of prediction models. We showcase our methods by analyzing a VAE-LSTM world model that predicts pedestrian perception in an urban traffic situation.

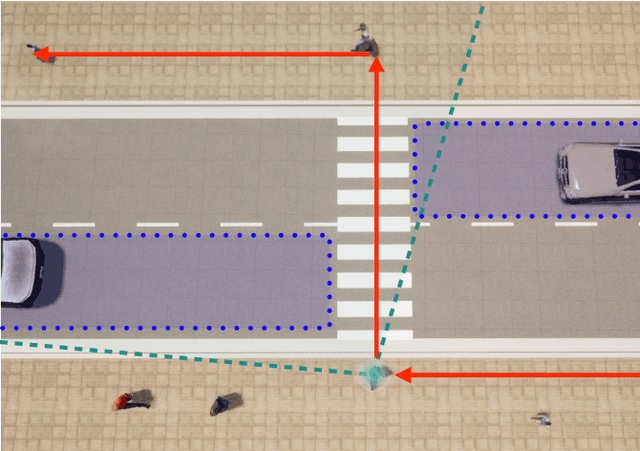

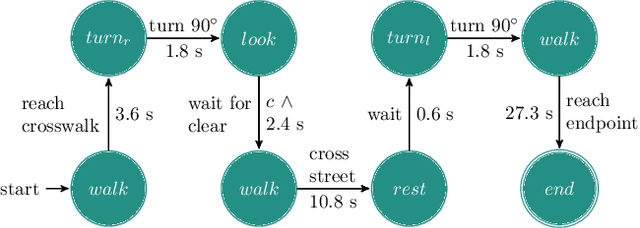

"If you could see me through my eyes": Predicting Pedestrian Perception

Mar 22, 2022

Abstract: Pedestrians are particularly vulnerable road users in urban traffic. With the arrival of autonomous driving, novel technologies can be developed specifically to protect pedestrians. We propose a machine learning toolchain to train artificial neural networks as models of pedestrian behavior. In a preliminary study, we use synthetic data from simulations of a specific pedestrian crossing scenario to train a variational autoencoder and a long short-term memory network to predict a pedestrian's future visual perception. We can accurately predict a pedestrian's future perceptions within relevant time horizons. By iteratively feeding these predicted frames into these networks, they can be used as simulations of pedestrians as indicated by our results. Such trained networks can later be used to predict pedestrian behaviors even from the perspective of the autonomous car. Another future extension will be to re-train these networks with real-world video data.
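The iterative feeding of predicted frames described in this abstract can be illustrated with a minimal sketch of an autoregressive rollout. This is not the authors' implementation: `rollout`, `toy_encode`, `toy_predict_next`, and `toy_decode` are hypothetical names, and the toy functions are simple arithmetic stand-ins for a trained variational autoencoder and LSTM, used only so that the loop structure can be run and inspected.

```python
from typing import Callable, List, Sequence

Frame = List[float]   # a flattened image (assumption: a plain list of pixel values)
Latent = List[float]  # a latent code, as an encoder might produce


def rollout(
    encode: Callable[[Frame], Latent],
    predict_next: Callable[[Sequence[Latent]], Latent],
    decode: Callable[[Latent], Frame],
    seed_frames: Sequence[Frame],
    horizon: int,
) -> List[Frame]:
    """Predict `horizon` future frames by feeding each prediction back in."""
    # Encode the observed frames to start the latent history.
    history = [encode(frame) for frame in seed_frames]
    predicted: List[Frame] = []
    for _ in range(horizon):
        next_latent = predict_next(history)  # sequence-model step
        frame = decode(next_latent)          # back to image space
        predicted.append(frame)
        # Re-encode the predicted frame so it becomes the next input.
        history.append(encode(frame))
    return predicted


# Hypothetical stand-ins for trained networks (NOT a real VAE or LSTM).
def toy_encode(frame: Frame) -> Latent:
    return [sum(frame) / len(frame)]  # mean brightness as a 1-D latent


def toy_predict_next(history: Sequence[Latent]) -> Latent:
    last, prev = history[-1][0], history[-2][0]
    return [last + (last - prev)]     # linear extrapolation of the latent


def toy_decode(latent: Latent) -> Frame:
    return [latent[0]] * 4            # uniform 4-pixel frame


seed = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
future = rollout(toy_encode, toy_predict_next, toy_decode, seed, horizon=3)
```

In this sketch each predicted frame is re-encoded before the next step, which mirrors the abstract's description of feeding predicted frames back into the networks; with real models, prediction errors would be expected to accumulate over longer horizons.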

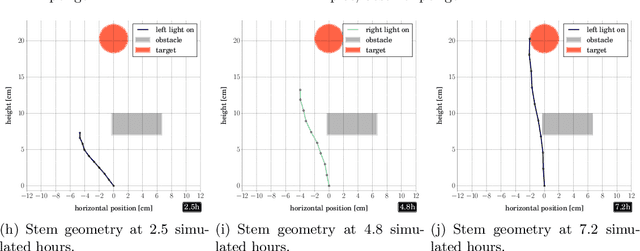

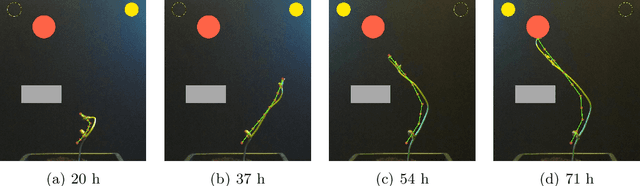

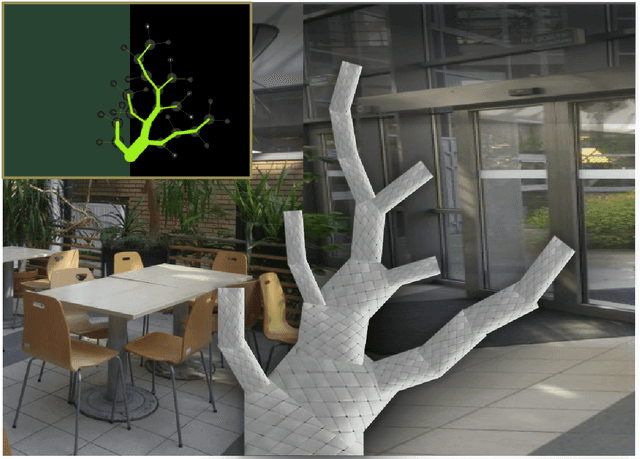

A Robot to Shape your Natural Plant: The Machine Learning Approach to Model and Control Bio-Hybrid Systems

Apr 19, 2018

Abstract: Bio-hybrid systems, close couplings of natural organisms with technology, have high potential and are still underexplored. In existing work, robots have mostly influenced group behaviors of animals. We explore the possibilities of mixing robots with natural plants, merging useful attributes. Significant synergies arise by combining the plants' ability to efficiently produce shaped material and the robots' ability to extend sensing and decision-making behaviors. However, programming robots to control plant motion and shape requires good knowledge of complex plant behaviors. Therefore, we use machine learning to create a holistic plant model and evolve robot controllers. As a benchmark task we choose obstacle avoidance. We use computer vision to construct a model of plant stem stiffening and motion dynamics by training an LSTM network. The LSTM network acts as a forward model predicting change in the plant, driving the evolution of neural network robot controllers. The evolved controllers augment the plants' natural light-finding and tissue-stiffening behaviors to avoid obstacles and grow desired shapes. We successfully verify the robot controllers and bio-hybrid behavior in reality, with a physical setup and actual plants.

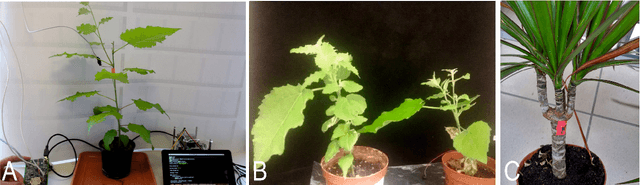

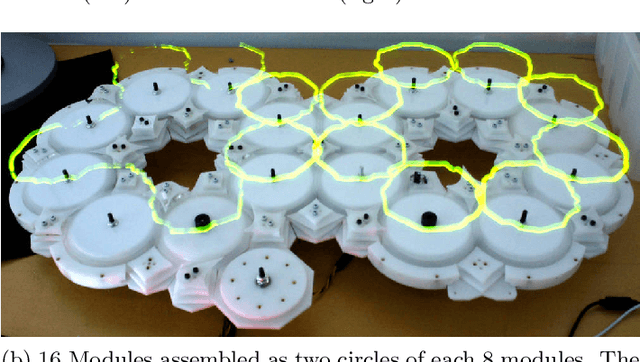

Flora robotica -- An Architectural System Combining Living Natural Plants and Distributed Robots

Sep 13, 2017

Abstract: Key to our project flora robotica is the idea of creating a bio-hybrid system of tightly coupled natural plants and distributed robots to grow architectural artifacts and spaces. Our motivation with this ground research project is to lay a principled foundation towards the design and implementation of living architectural systems that provide functionalities beyond those of orthodox building practice, such as self-repair, material accumulation and self-organization. Plants and robots work together to create a living organism that is inhabited by human beings. User-defined design objectives help to steer the directional growth of the plants, but also the system's interactions with its inhabitants determine locations where growth is prohibited or desired (e.g., partitions, windows, occupiable space). We report our plant species selection process and aspects of living architecture. A leitmotif of our project is the rich concept of braiding: braids are produced by robots from continuous material and serve as both scaffolds and initial architectural artifacts before plants take over and grow the desired architecture. We use light and hormones as attraction stimuli and far-red light as repelling stimulus to influence the plants. Applied sensors range from simple proximity sensing to detect the presence of plants to sophisticated sensing technology, such as electrophysiology and measurements of sap flow. We conclude by discussing our anticipated final demonstrator that integrates key features of flora robotica, such as the continuous growth process of architectural artifacts and self-repair of living architecture.
