Julian Petzold

On the Road to Clarity: Exploring Explainable AI for World Models in a Driver Assistance System

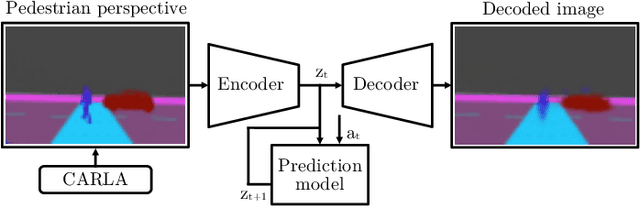

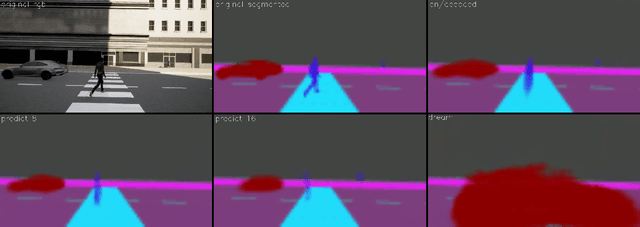

Apr 26, 2024

Abstract: In Autonomous Driving (AD), transparency and safety are paramount, as mistakes are costly. However, neural networks used in AD systems are generally considered black boxes. As a countermeasure, we have methods of explainable AI (XAI), such as feature relevance estimation and dimensionality reduction. Coarse graining techniques can also help reduce dimensionality and find interpretable global patterns. A specific coarse graining method is Renormalization Groups from statistical physics. It has previously been applied to Restricted Boltzmann Machines (RBMs) to interpret unsupervised learning. We refine this technique by building a transparent backbone model for convolutional variational autoencoders (VAEs) that allows mapping latent values to input features and has performance comparable to trained black box VAEs. Moreover, we propose a custom feature map visualization technique to analyze the internal convolutional layers in the VAE and to explain internal causes of poor reconstruction that may lead to dangerous traffic scenarios in AD applications. In a second key contribution, we propose explanation and evaluation techniques for the internal dynamics and feature relevance of prediction networks. We test a long short-term memory (LSTM) network in the computer vision domain to evaluate the predictability and, in future applications, potentially the safety of prediction models. We showcase our methods by analyzing a VAE-LSTM world model that predicts pedestrian perception in an urban traffic situation.

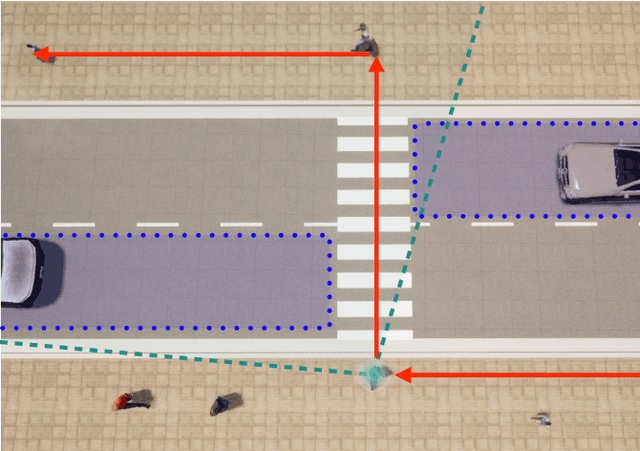

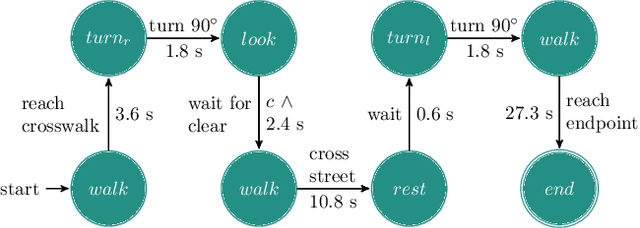

"If you could see me through my eyes": Predicting Pedestrian Perception

Mar 22, 2022

Abstract: Pedestrians are particularly vulnerable road users in urban traffic. With the arrival of autonomous driving, novel technologies can be developed specifically to protect pedestrians. We propose a machine learning toolchain to train artificial neural networks as models of pedestrian behavior. In a preliminary study, we use synthetic data from simulations of a specific pedestrian crossing scenario to train a variational autoencoder and a long short-term memory network to predict a pedestrian's future visual perception. We can accurately predict a pedestrian's future perceptions within relevant time horizons. Our results indicate that, by iteratively feeding the predicted frames back into these networks, the networks can be used as simulations of pedestrians. Such trained networks can later be used to predict pedestrian behaviors even from the perspective of the autonomous car. Another future extension will be to re-train these networks with real-world video data.
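The iterative feeding of predicted frames described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: `rollout`, `toy_encode`, `toy_predict`, and `toy_decode` are hypothetical names, and the toy functions are simple arithmetic stand-ins for a trained VAE encoder, LSTM predictor, and VAE decoder, chosen only so the loop can be run without any trained model.

```python
from typing import Callable, List

Frame = List[float]   # stand-in for an image; a real frame would be a pixel array
Latent = List[float]  # stand-in for a VAE latent vector


def rollout(
    frames: List[Frame],
    encode: Callable[[Frame], Latent],
    predict: Callable[[List[Latent]], Latent],
    decode: Callable[[Latent], Frame],
    steps: int,
) -> List[Frame]:
    """Autoregressive rollout: each predicted frame is fed back as input."""
    history = [encode(f) for f in frames]
    predicted: List[Frame] = []
    for _ in range(steps):
        z_next = predict(history)      # predictor proposes the next latent
        frame_next = decode(z_next)    # decoder turns it into a frame
        predicted.append(frame_next)
        # Re-encode the model's own output so later steps build on it.
        history.append(encode(frame_next))
    return predicted


# Hypothetical stand-ins (assumptions, not trained networks).
def toy_encode(frame: Frame) -> Latent:
    return [x / 2.0 for x in frame]


def toy_decode(z: Latent) -> Frame:
    return [x * 2.0 for x in z]


def toy_predict(history: List[Latent]) -> Latent:
    # Linear extrapolation from the last two latents.
    prev, last = history[-2], history[-1]
    return [b + (b - a) for a, b in zip(prev, last)]


if __name__ == "__main__":
    observed = [[0.0, 1.0], [1.0, 2.0]]
    for frame in rollout(observed, toy_encode, toy_predict, toy_decode, steps=3):
        print(frame)
```

With trained networks in place of the stand-ins, prediction errors would be expected to accumulate over the rollout, since every step consumes earlier predictions. This is one reason the abstract qualifies its accuracy claim with "within relevant time horizons".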
