Luyu Qiu

Dissecting Multiplication in Transformers: Insights into LLMs

Jul 22, 2024

Abstract: Transformer-based large language models have achieved remarkable performance across various natural language processing tasks. However, they often struggle with seemingly easy tasks like arithmetic despite their vast capabilities. This stark disparity raises concerns about their safe and ethical use and hinders their widespread adoption. In this paper, we focus on a typical arithmetic task, integer multiplication, to explore and explain the imperfection of transformers in this domain. We provide a comprehensive analysis of a vanilla transformer trained to perform n-digit integer multiplication. Our observations indicate that the model decomposes the multiplication task into multiple parallel subtasks, sequentially optimizing each subtask for each digit to complete the final multiplication. Based on observation and analysis, we infer that the reason for transformers' deficiencies in multiplication tasks lies in their difficulty in calculating successive carryovers and caching intermediate results, and we confirm this inference through experiments. Guided by these findings, we propose improvements to enhance transformers' performance on multiplication tasks. These enhancements are validated through rigorous testing and mathematical modeling; they not only enhance the transformer's interpretability but also improve its performance, e.g., we achieve over 99.9% accuracy on 5-digit integer multiplication with a tiny transformer, outperforming the LLM GPT-4. Our method contributes to the broader fields of model understanding and interpretability, paving the way for analyzing more complex tasks and Transformer models. This work underscores the importance of explainable AI, helping to build trust in large language models and promoting their adoption in critical applications.
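To make the per-digit subtasks and successive carryovers mentioned in the abstract concrete, here is a minimal sketch of ordinary schoolbook multiplication, written so that the raw per-position sums and the carry chain are visible. This is an illustration only, not the paper's model or analysis; the function names `digit_products_and_carries` and `digits_to_int` are hypothetical.

```python
def digit_products_and_carries(a: int, b: int):
    """Schoolbook multiplication, least significant digit first.

    Returns (raw, carries, digits):
      raw[k]     - sum of all digit products x_i * y_j with i + j == k
      carries[k] - carry passed from position k to position k + 1
      digits[k]  - final output digit at position k
    """
    xs = [int(d) for d in str(a)][::-1]
    ys = [int(d) for d in str(b)][::-1]
    raw = [0] * (len(xs) + len(ys))

    # Each output position can be accumulated independently of the others.
    for i, x in enumerate(xs):
        for j, y in enumerate(ys):
            raw[i + j] += x * y

    # The carry chain is sequential: position k needs the carry from k - 1.
    digits, carries, carry = [], [], 0
    for s in raw:
        total = s + carry
        digits.append(total % 10)
        carry = total // 10
        carries.append(carry)
    return raw, carries, digits


def digits_to_int(digits):
    """Rebuild an integer from least-significant-first digits."""
    return sum(d * 10 ** k for k, d in enumerate(digits))


raw, carries, digits = digit_products_and_carries(47, 86)
print(raw, carries, digits, digits_to_int(digits))
```

The raw sums can be computed in parallel, while each output digit depends on every carry to its right, which is one way to picture why successive carryovers and cached intermediate results are the hard part.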

XPose: eXplainable Human Pose Estimation

Mar 19, 2024

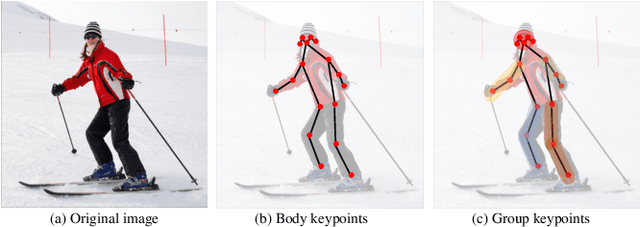

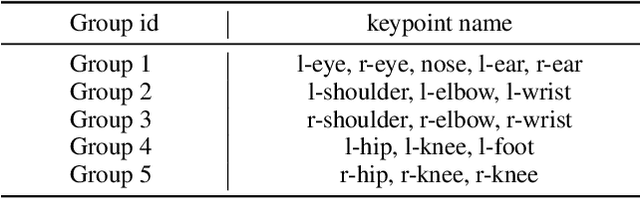

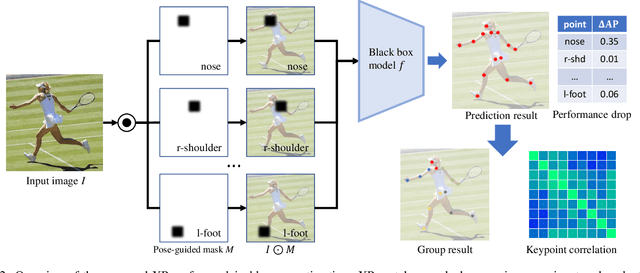

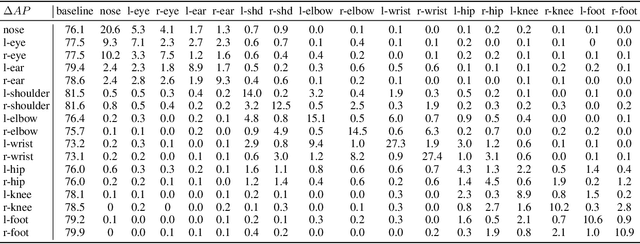

Abstract: Current approaches in pose estimation primarily concentrate on enhancing model architectures, often overlooking the importance of comprehensively understanding the rationale behind model decisions. In this paper, we propose XPose, a novel framework that incorporates Explainable AI (XAI) principles into pose estimation. This integration aims to elucidate the individual contribution of each keypoint to the final prediction, thereby improving the model's transparency and interpretability. Conventional XAI techniques have predominantly addressed single-target tasks like classification. Additionally, the application of the Shapley value, a common measure in XAI, to pose estimation has been hindered by prohibitive computational demands. To address these challenges, this work introduces a concept called Group Shapley Value (GSV). This approach organizes keypoints into clusters based on their interdependencies. Within a cluster, GSV calculates the Shapley value for individual keypoints, while for keypoints in other clusters it uses a coarser group-level valuation. This dual-level computation assesses keypoint contributions to the final outcome while keeping the computation efficient. Building on the insights into keypoint interactions, we devise a novel data augmentation technique known as Group-based Keypoint Removal (GKR). This method removes individual keypoints during training while deliberately preserving those with strong mutual connections, thereby improving the model's predictions for non-visible keypoints. Empirical validation of GKR across a range of standard approaches attests to its efficacy. GKR's success demonstrates how using Explainable AI (XAI) can directly enhance pose estimation models.
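The dual-level idea can be illustrated with a small sketch. Exact Shapley values require evaluating every coalition of players, which grows exponentially with the number of keypoints; treating each other cluster as a single player shrinks the player set. The code below is one possible reading of the abstract, not the paper's GSV algorithm: the helper names (`shapley_values`, `grouped_players`, `toy_score`), the keypoint names, and the toy value function are all assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values. `value` maps a frozenset of players to a float."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            # Standard Shapley weight for coalitions of size k.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


def grouped_players(clusters, focus):
    """Keypoints in cluster `focus` stay individual players;
    every other cluster is merged into a single player."""
    players = [frozenset([k]) for k in clusters[focus]]
    players += [frozenset(c) for name, c in clusters.items() if name != focus]
    return players


# Hypothetical per-keypoint scores plus one synergy term inside the left arm.
WEIGHTS = {"l_wrist": 1.0, "l_elbow": 2.0, "r_knee": 3.0, "r_ankle": 4.0}


def toy_score(coalition):
    """Toy value function: each player is a frozenset of keypoint names."""
    present = set().union(*coalition)
    score = sum(WEIGHTS[k] for k in present)
    if {"l_wrist", "l_elbow"} <= present:
        score += 1.0  # assumed interaction between the two arm keypoints
    return score


clusters = {"left_arm": ["l_wrist", "l_elbow"], "right_leg": ["r_knee", "r_ankle"]}
players = grouped_players(clusters, focus="left_arm")
phi = shapley_values(players, toy_score)
for player, contribution in phi.items():
    print(sorted(player), round(contribution, 3))
```

With four keypoints the saving is small (three players instead of four), but because the number of coalitions doubles with each added player, merging distant clusters matters quickly as the keypoint count grows.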

Towards Fine-Grained Explainability for Heterogeneous Graph Neural Network

Dec 23, 2023

Abstract: Heterogeneous graph neural networks (HGNs) are prominent approaches to node classification tasks on heterogeneous graphs. Despite the superior performance, insights about the predictions made from HGNs are obscure to humans. Existing explainability techniques are mainly proposed for GNNs on homogeneous graphs. They focus on highlighting salient graph objects to the predictions whereas the problem of how these objects affect the predictions remains unsolved. Given heterogeneous graphs with complex structures and rich semantics, it is imperative that salient objects can be accompanied with their influence paths to the predictions, unveiling the reasoning process of HGNs. In this paper, we develop xPath, a new framework that provides fine-grained explanations for black-box HGNs specifying a cause node with its influence path to the target node. In xPath, we differentiate the influence of a node on the prediction w.r.t. every individual influence path, and measure the influence by perturbing graph structure via a novel graph rewiring algorithm. Furthermore, we introduce a greedy search algorithm to find the most influential fine-grained explanations efficiently. Empirical results on various HGNs and heterogeneous graphs show that xPath yields faithful explanations efficiently, outperforming the adaptations of advanced GNN explanation approaches.

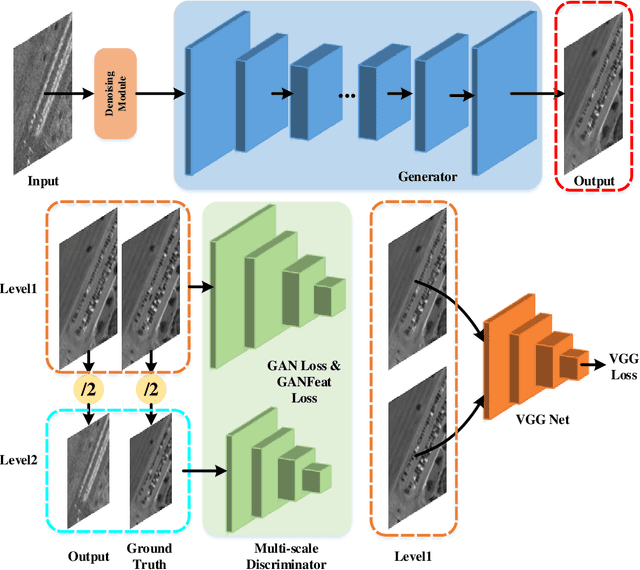

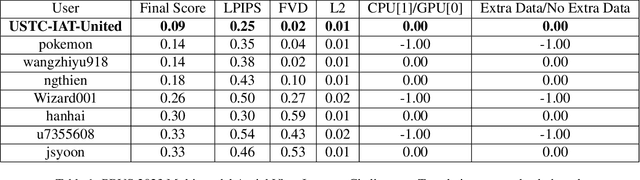

SAR2EO: A High-resolution Image Translation Framework with Denoising Enhancement

Apr 08, 2023

Abstract: Synthetic Aperture Radar (SAR) to electro-optical (EO) image translation is a fundamental task in remote sensing that can enrich the dataset by fusing information from different sources. Recently, many methods have been proposed to tackle this task, but they still struggle to convert low-resolution images into high-resolution images. Thus, we propose a framework, SAR2EO, aimed at addressing this challenge. Firstly, to generate high-quality EO images, we adopt the coarse-to-fine generator, multi-scale discriminators, and improved adversarial loss in the pix2pixHD model to increase the synthesis quality. Secondly, we introduce a denoising module to remove the noise in SAR images, which helps to suppress the noise while preserving the structural information of the images. To validate the effectiveness of the proposed framework, we conduct experiments on the dataset of the Multi-modal Aerial View Imagery Challenge (MAVIC), which consists of large-scale SAR and EO image pairs. The experimental results demonstrate the superiority of our proposed framework, and we won first place in the MAVIC held in CVPR PBVS 2023.

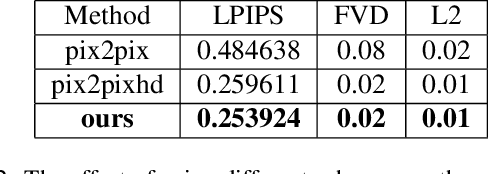

Resisting Out-of-Distribution Data Problem in Perturbation of XAI

Jul 27, 2021

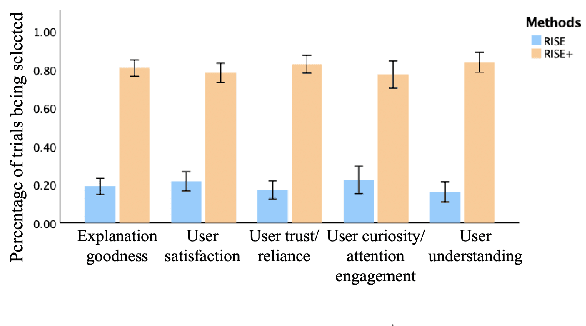

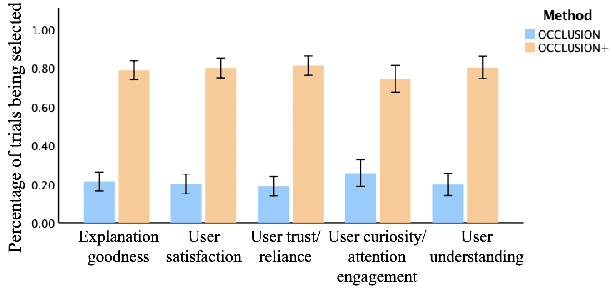

Abstract: With the rapid development of eXplainable Artificial Intelligence (XAI), perturbation-based XAI algorithms have become quite popular due to their effectiveness and ease of implementation. The vast majority of perturbation-based XAI techniques face the challenge of Out-of-Distribution (OoD) data: an artifact of randomly perturbed data becoming inconsistent with the original dataset. OoD data leads to the over-confidence problem in model predictions, making the existing XAI approaches unreliable. To the best of our knowledge, the OoD data problem in perturbation-based XAI algorithms has not been adequately addressed in the literature. In this work, we address this OoD data problem by designing an additional module that quantifies the affinity between the perturbed data and the original dataset distribution and is integrated into the aggregation process. Our solution is shown to be compatible with the most popular perturbation-based XAI algorithms, such as RISE, OCCLUSION, and LIME. Experiments have confirmed that our methods demonstrate a significant improvement in general cases using both computational and cognitive metrics. Especially in the case of degradation, our proposed approach demonstrates outstanding performance compared to baselines. In addition, our solution resolves a fundamental problem with the faithfulness indicator, a commonly used evaluation metric of XAI algorithms that appears to be sensitive to the OoD issue.
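As a rough illustration of integrating an affinity score into aggregation, the sketch below runs a random-masking attribution loop in the spirit of RISE on a plain feature vector and weights each perturbed sample's score by an affinity value before averaging. It is a sketch under stated assumptions, not the paper's module: the function `weighted_mask_attribution`, the toy model, and the affinity function (here simply the fraction of features kept) are hypothetical stand-ins.

```python
import random


def weighted_mask_attribution(x, model, affinity, n_masks=500, keep_prob=0.5, seed=0):
    """Random-mask attribution with affinity-weighted aggregation.

    x        - list of feature values
    model    - callable: perturbed features -> scalar score
    affinity - callable: perturbed features -> weight in [0, 1]; an assumed
               stand-in for "how close is this sample to the data distribution"
    """
    rng = random.Random(seed)
    num = [0.0] * len(x)
    den = [0.0] * len(x)
    for _ in range(n_masks):
        mask = [1 if rng.random() < keep_prob else 0 for _ in x]
        perturbed = [xi * mi for xi, mi in zip(x, mask)]
        w = affinity(perturbed)      # down-weights samples judged out-of-distribution
        score = model(perturbed)
        for i, mi in enumerate(mask):
            if mi:                   # credit only the features that were kept
                num[i] += w * score
                den[i] += w
    return [n / d if d > 0 else 0.0 for n, d in zip(num, den)]


def toy_model(v):
    """Hypothetical model: depends strongly on feature 0, weakly on feature 1."""
    return v[0] + 0.1 * v[1]


def toy_affinity(v):
    """Hypothetical affinity: fraction of features left unmasked."""
    return sum(1 for vi in v if vi != 0) / len(v)


attribution = weighted_mask_attribution([1.0, 1.0, 1.0], toy_model, toy_affinity)
print([round(a, 3) for a in attribution])
```

Setting `affinity` to a constant recovers plain unweighted averaging over masks, which makes it easy to compare the two aggregation schemes on the same samples.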
