Louis Thiry

DI-ENS

Classification-Denoising Networks

Oct 04, 2024

Abstract: Image classification and denoising suffer from complementary issues of lack of robustness or partially ignoring conditioning information. We argue that they can be alleviated by unifying both tasks through a model of the joint probability of (noisy) images and class labels. Classification is performed with a forward pass followed by conditioning. Using the Tweedie-Miyasawa formula, we evaluate the denoising function with the score, which can be computed by marginalization and back-propagation. The training objective is then a combination of cross-entropy loss and denoising score matching loss integrated over noise levels. Numerical experiments on CIFAR-10 and ImageNet show competitive classification and denoising performance compared to reference deep convolutional classifiers/denoisers, and significantly improved efficiency compared to previous joint approaches. Our model shows an increased robustness to adversarial perturbations compared to a standard discriminative classifier, and allows for a novel interpretation of adversarial gradients as a difference of denoisers.
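To make the mechanics of this abstract concrete, here is a minimal sketch on a toy problem, not the authors' network. It assumes a hypothetical 1D Gaussian mixture as the joint model of noisy observation `y` and class `c`, so that log p(y, c) is available in closed form. Classification conditions the joint on `y`, the marginal is obtained by summing over classes, and the denoiser follows from the Tweedie-Miyasawa formula, E[x | y] = y + sigma^2 * d/dy log p(y). A central finite difference stands in for back-propagation; all function and parameter names are illustrative.

```python
import math

def log_joint(y, c, sigma, priors, means, stds):
    """log p(y, c) for a toy mixture: x | c ~ N(means[c], stds[c]^2), y = x + sigma * noise."""
    var = stds[c] ** 2 + sigma ** 2
    return (math.log(priors[c])
            - 0.5 * math.log(2.0 * math.pi * var)
            - 0.5 * (y - means[c]) ** 2 / var)

def log_marginal(y, sigma, priors, means, stds):
    """log p(y), obtained by marginalizing the joint over classes (log-sum-exp)."""
    logs = [log_joint(y, c, sigma, priors, means, stds) for c in range(len(priors))]
    m = max(logs)
    return m + math.log(sum(math.exp(v - m) for v in logs))

def class_posterior(y, sigma, priors, means, stds):
    """p(c | y): classification by conditioning the joint on y."""
    lm = log_marginal(y, sigma, priors, means, stds)
    return [math.exp(log_joint(y, c, sigma, priors, means, stds) - lm)
            for c in range(len(priors))]

def score(y, sigma, priors, means, stds, eps=1e-5):
    """d/dy log p(y), via a central finite difference (a stand-in for back-propagation)."""
    hi = log_marginal(y + eps, sigma, priors, means, stds)
    lo = log_marginal(y - eps, sigma, priors, means, stds)
    return (hi - lo) / (2.0 * eps)

def denoise(y, sigma, priors, means, stds):
    """Tweedie-Miyasawa denoiser: y + sigma^2 * score(y)."""
    return y + sigma ** 2 * score(y, sigma, priors, means, stds)

def posterior_mean(y, sigma, priors, means, stds):
    """Closed-form E[x | y] for the toy mixture, used only as a reference."""
    post = class_posterior(y, sigma, priors, means, stds)
    total = 0.0
    for c, w in enumerate(post):
        var = stds[c] ** 2 + sigma ** 2
        total += w * (stds[c] ** 2 * y + sigma ** 2 * means[c]) / var
    return total
```

On this toy model, `denoise` and `posterior_mean` agree up to finite-difference error, which is the identity the formula states. In a deep network the closed-form reference is unavailable and the score would be obtained by differentiating the learned log-marginal.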

The Unreasonable Effectiveness of Patches in Deep Convolutional Kernels Methods

Jan 19, 2021

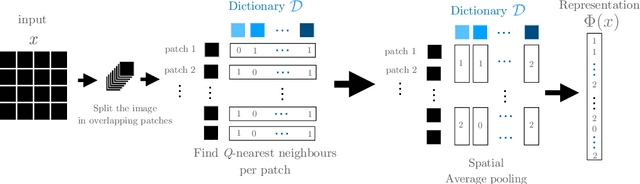

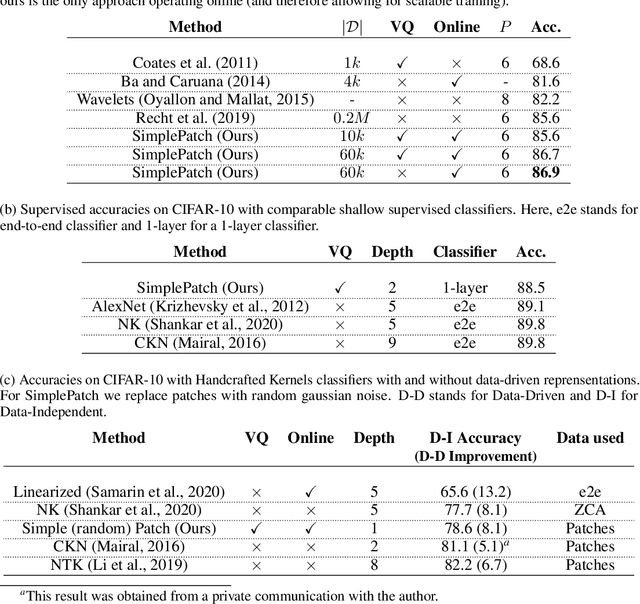

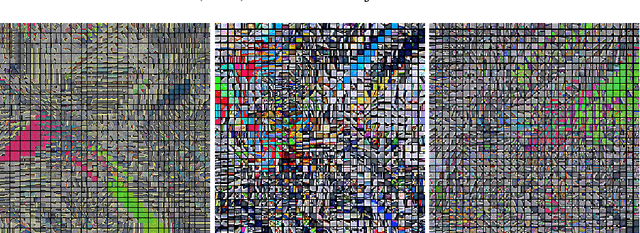

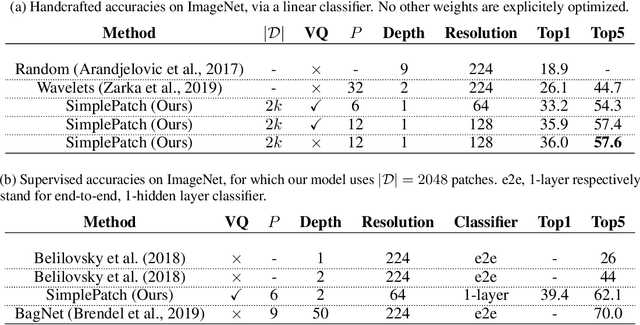

Abstract: A recent line of work showed that various forms of convolutional kernel methods can be competitive with standard supervised deep convolutional networks on datasets like CIFAR-10, obtaining accuracies in the range of 87-90% while being more amenable to theoretical analysis. In this work, we highlight the importance of a data-dependent feature extraction step that is key to obtaining good performance in convolutional kernel methods. This step typically corresponds to a whitened dictionary of patches, and gives rise to data-driven convolutional kernel methods. We extensively study its effect, demonstrating it is the key ingredient for high performance of these methods. Specifically, we show that one of the simplest instances of such kernel methods, based on a single layer of image patches followed by a linear classifier, already obtains classification accuracies on CIFAR-10 in the same range as previous more sophisticated convolutional kernel methods. We scale this method to the challenging ImageNet dataset, showing such a simple approach can exceed all existing non-learned representation methods. This is a new baseline for object recognition without representation learning methods, that initiates the investigation of convolutional kernel models on ImageNet. We conduct experiments to analyze the dictionary that we used; our ablations show that it exhibits low-dimensional properties.

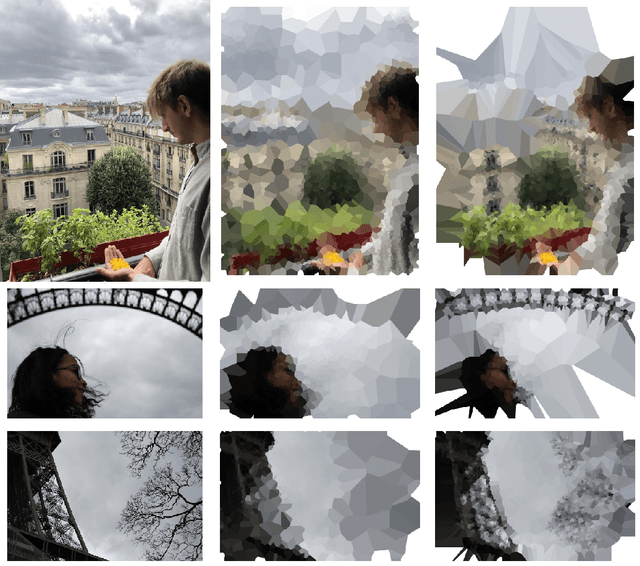

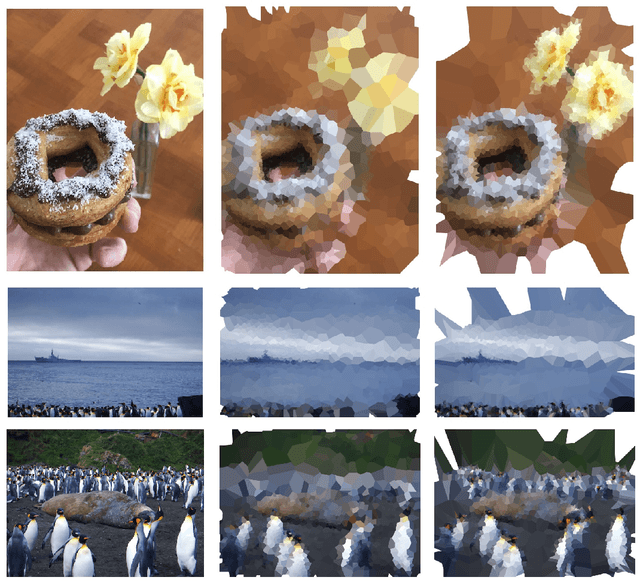

Diptychs of human and machine perceptions

Oct 12, 2020

Abstract: We propose visual creations that put differences in algorithms' and humans' *perceptions* into perspective. We exploit saliency maps of neural networks and visual focus of humans to create diptychs that are reinterpretations of an original image according to both machine and human attentions. Using those diptychs as a qualitative evaluation of perception, we discuss some crucial issues of current *task-oriented* artificial intelligence.

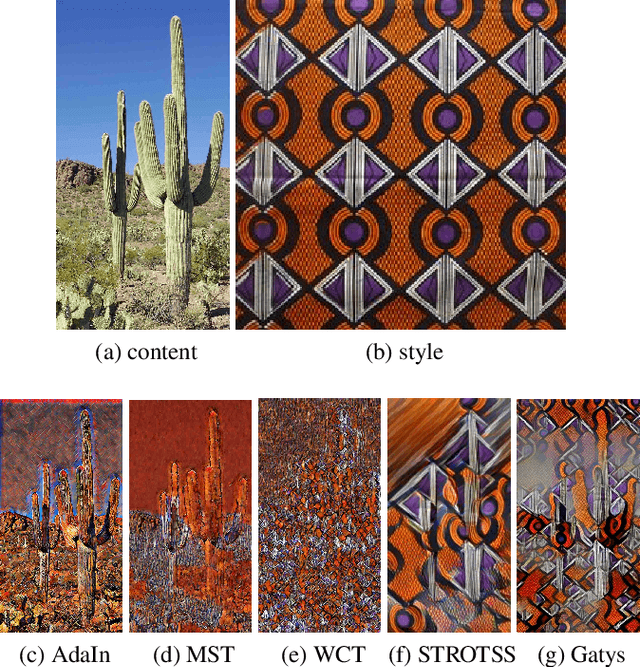

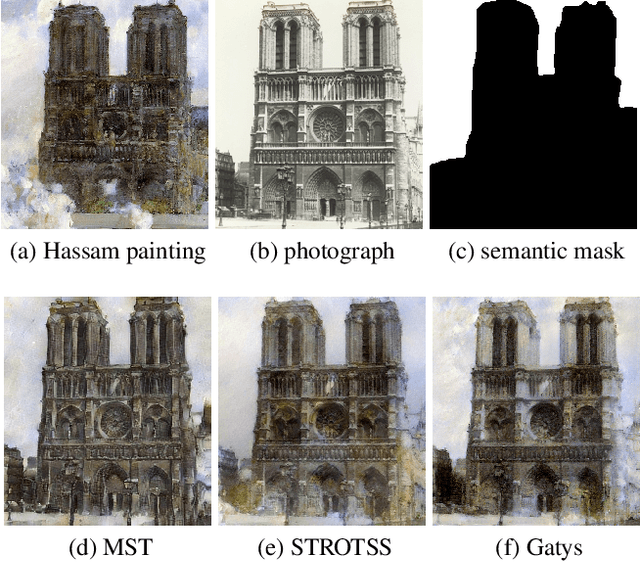

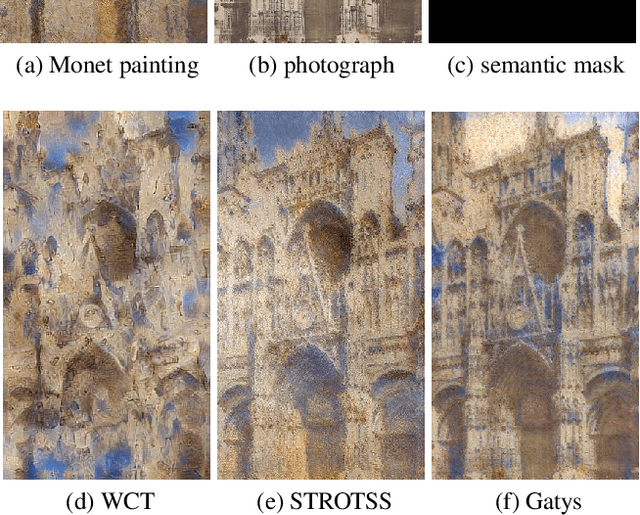

Interactive Neural Style Transfer with Artists

Mar 14, 2020

Abstract: We present interactive painting processes in which a painter and various neural style transfer algorithms interact on a real canvas. Understanding what these algorithms' outputs achieve is then paramount to describe the creative agency in our interactive experiments. We gather a set of paired painting-picture images and present a new evaluation methodology based on the predictivity of neural style transfer algorithms. We point out some algorithms' instabilities and show that they can be used to enlarge the diversity and pleasing oddity of the images synthesized by the numerous existing neural style transfer algorithms. This diversity of images was perceived as a source of inspiration for human painters, portraying the machine as a computational catalyst.

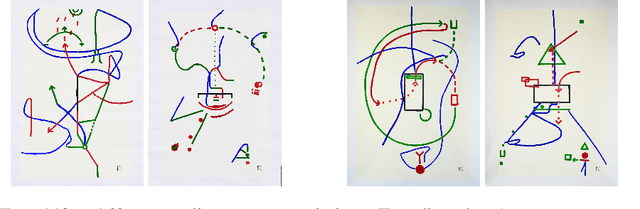

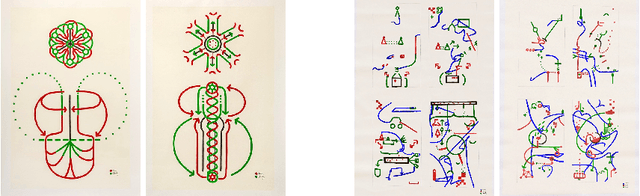

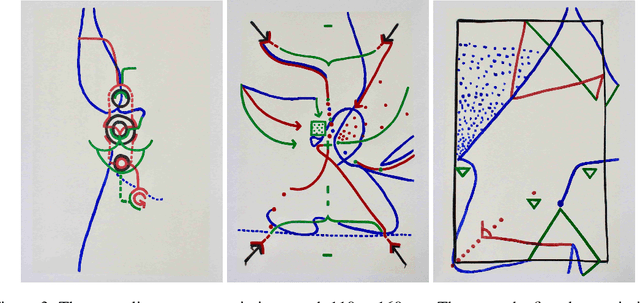

Dialog on a canvas with a machine

Oct 13, 2019

Abstract: We propose a new form of human-machine interaction. It is a pictorial game consisting of interactive rounds of creation between artists and a machine. They repeatedly paint one after the other. In its rounds, the computer partially completes the drawing using machine learning algorithms, and projects its additions directly on the canvas, which the artists are free to insert or modify. Alongside fostering creativity, the process is designed to question the growing interaction between humans and machines.

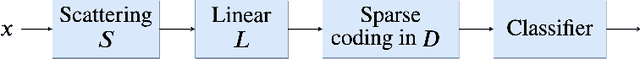

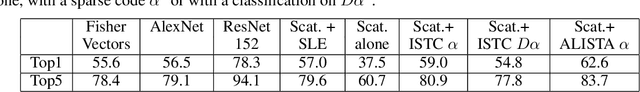

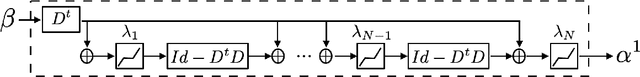

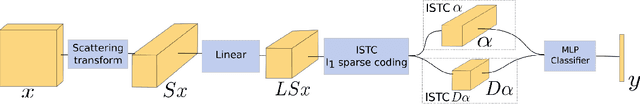

Deep Network classification by Scattering and Homotopy dictionary learning

Oct 08, 2019

Abstract: We introduce a sparse scattering deep convolutional neural network, which provides a simple model to analyze properties of deep representation learning for classification. Learning a single dictionary matrix with a classifier yields a higher classification accuracy than AlexNet over the ImageNet ILSVRC2012 dataset. The network first applies a scattering transform which linearizes variabilities due to geometric transformations such as translations and small deformations. A sparse l1 dictionary coding reduces intra-class variability while preserving class separation through projections over unions of linear spaces. It is implemented in a deep convolutional network with a homotopy algorithm having an exponential convergence. A convergence proof is given in a general framework including ALISTA. Classification results are analyzed over ImageNet.
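As a rough illustration of sparse l1 coding with a homotopy schedule, the sketch below runs iterative soft thresholding while shrinking the threshold geometrically toward its final value. It is a generic textbook-style scheme under stated assumptions, not the network or the exact algorithm of the paper: the dictionary is fixed rather than learned, the decay factor and iteration count are arbitrary, and the function names are hypothetical. The step size is assumed to be at most the inverse of the squared spectral norm of the dictionary.

```python
import math

def soft_threshold(v, lam):
    """Shrink each entry of v toward zero by lam (proximal operator of the l1 norm)."""
    return [math.copysign(max(abs(a) - lam, 0.0), a) for a in v]

def homotopy_ista(x, D, lam_final, lam_start=None, decay=0.5, n_iter=30, step=1.0):
    """Approximately minimize 0.5 * ||x - D z||^2 + lam_final * ||z||_1.

    D is a list of rows (d x k). The threshold starts large and decays
    geometrically to lam_final (the homotopy schedule assumed here).
    """
    d, k = len(D), len(D[0])
    if lam_start is None:
        # Largest correlation between x and a dictionary column: above it, z = 0 is optimal.
        lam_start = max(abs(sum(D[i][j] * x[i] for i in range(d))) for j in range(k))
    lam = max(lam_start, lam_final)
    z = [0.0] * k
    for _ in range(n_iter):
        residual = [x[i] - sum(D[i][j] * z[j] for j in range(k)) for i in range(d)]
        # Gradient step on the quadratic term, then soft thresholding.
        grad_step = [z[j] + step * sum(D[i][j] * residual[i] for i in range(d))
                     for j in range(k)]
        z = soft_threshold(grad_step, step * lam)
        lam = max(lam_final, lam * decay)
    return z
```

With an identity dictionary the fixed point is simply the soft-thresholded input, which makes a convenient sanity check: `homotopy_ista([3.0, 0.5], [[1.0, 0.0], [0.0, 1.0]], lam_final=1.0)` keeps the large coefficient (shrunk by the threshold) and zeroes the small one.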

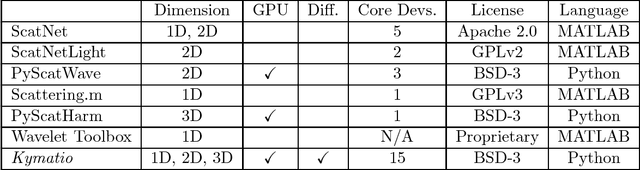

Kymatio: Scattering Transforms in Python

Dec 28, 2018

Abstract: The wavelet scattering transform is an invariant signal representation suitable for many signal processing and machine learning applications. We present the Kymatio software package, an easy-to-use, high-performance Python implementation of the scattering transform in 1D, 2D, and 3D that is compatible with modern deep learning frameworks. All transforms may be executed on a GPU (in addition to CPU), offering a considerable speed up over CPU implementations. The package also has a small memory footprint, resulting in efficient memory usage. The source code, documentation, and examples are available under a BSD license at https://www.kymat.io/

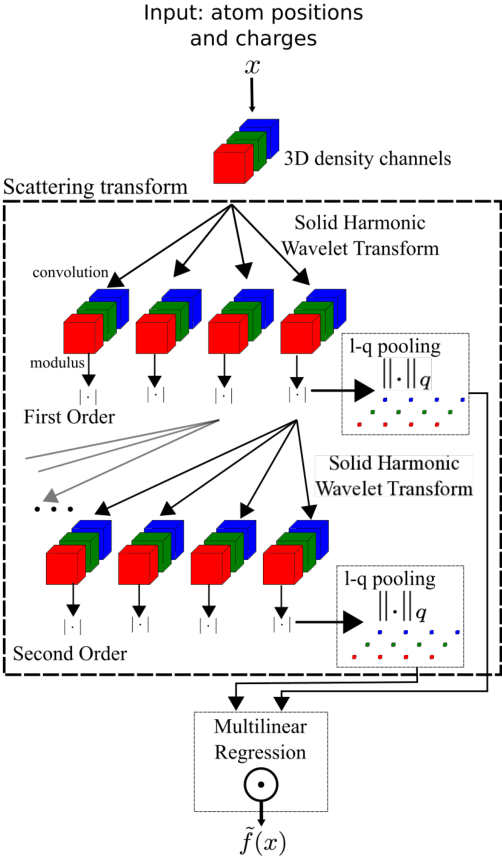

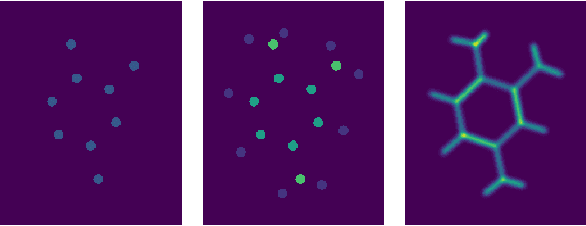

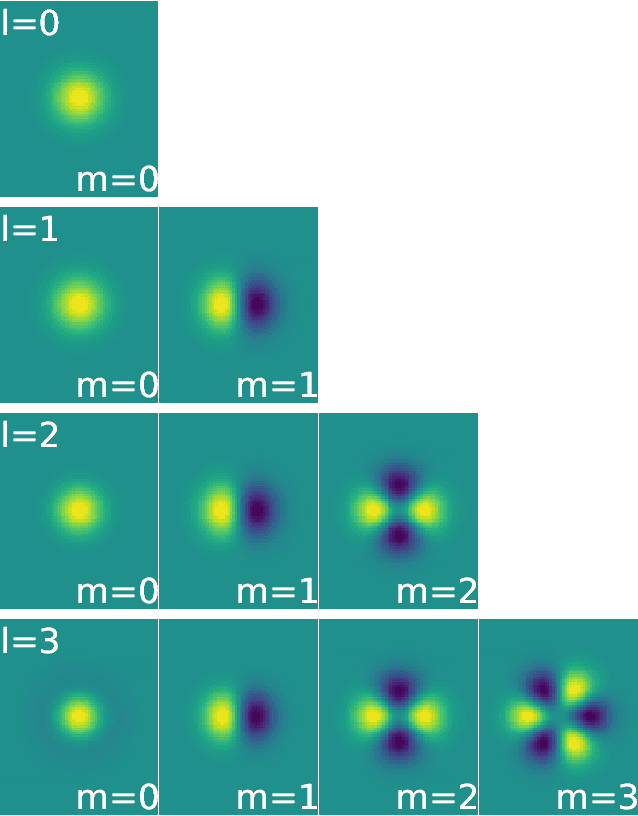

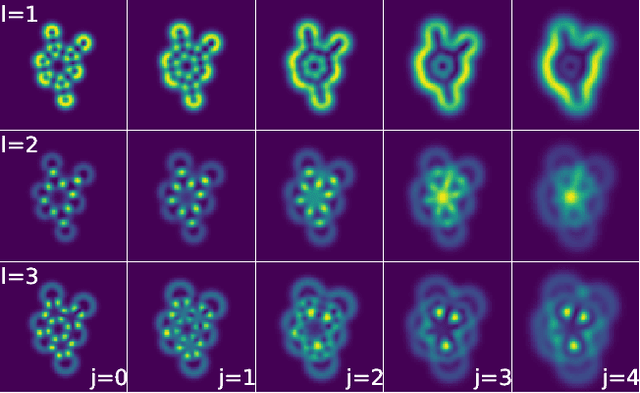

Solid Harmonic Wavelet Scattering for Predictions of Molecule Properties

May 01, 2018

Abstract: We present a machine learning algorithm for the prediction of molecule properties inspired by ideas from density functional theory. Using Gaussian-type orbital functions, we create surrogate electronic densities of the molecule from which we compute invariant "solid harmonic scattering coefficients" that account for different types of interactions at different scales. Multi-linear regressions of various physical properties of molecules are computed from these invariant coefficients. Numerical experiments show that these regressions have near state-of-the-art performance, even with relatively few training examples. Predictions over small sets of scattering coefficients can reach a DFT precision while being interpretable.
