Liang Han

CountDiffusion: Text-to-Image Synthesis with Training-Free Counting-Guidance Diffusion

May 07, 2025

Abstract:Stable Diffusion has advanced text-to-image synthesis, but training models to generate images with accurate object quantity is still difficult due to the high computational cost and the challenge of teaching models the abstract concept of quantity. In this paper, we propose CountDiffusion, a training-free framework aiming at generating images with correct object quantity from textual descriptions. CountDiffusion consists of two stages. In the first stage, an intermediate denoising result is generated by the diffusion model to predict the final synthesized image with one-step denoising, and a counting model is used to count the number of objects in this image. In the second stage, a correction module is used to correct the object quantity by changing the attention map of the object with universal guidance. The proposed CountDiffusion can be plugged into any diffusion-based text-to-image (T2I) generation models without further training. Experiment results demonstrate the superiority of our proposed CountDiffusion, which improves the accurate object quantity generation ability of T2I models by a large margin.
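The first stage described above predicts the final image from an intermediate result in a single step. The abstract does not give the formula, so the following is only a minimal sketch that assumes the standard DDPM identity x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε; the function names `predict_x0` and `count_error` are hypothetical, and the counting model itself is not shown.

```python
import math

def predict_x0(x_t, eps_pred, alpha_bar_t):
    """Estimate the clean sample from a noisy one in a single step.

    Assumes the standard DDPM forward process
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps,
    solved for x_0. This is an assumption, not the paper's stated formula.
    """
    signal_scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1.0 - alpha_bar_t)
    return [(x - noise_scale * e) / signal_scale for x, e in zip(x_t, eps_pred)]

def count_error(counted, requested):
    """Hypothetical helper: positive means objects are missing, negative means too many."""
    return requested - counted
```

In this sketch, a counting model would be run on the decoded `predict_x0` output, and the sign of `count_error` would tell a correction step whether to add or remove objects.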

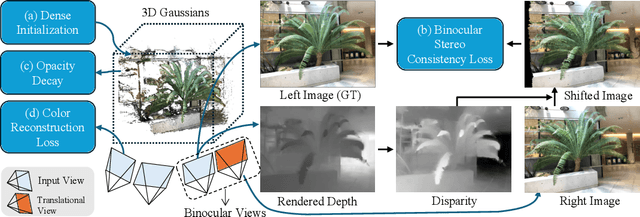

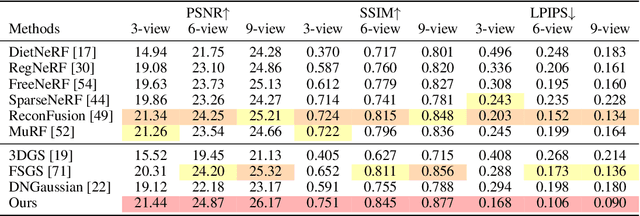

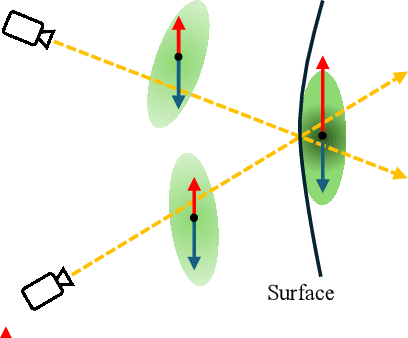

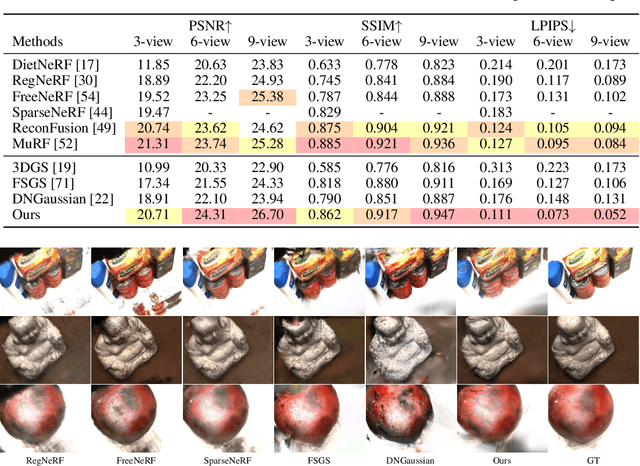

Binocular-Guided 3D Gaussian Splatting with View Consistency for Sparse View Synthesis

Oct 24, 2024

Abstract:Novel view synthesis from sparse inputs is a vital yet challenging task in 3D computer vision. Previous methods explore 3D Gaussian Splatting with neural priors (e.g. depth priors) as an additional supervision, demonstrating promising quality and efficiency compared to the NeRF-based methods. However, the neural priors from 2D pretrained models are often noisy and blurry, and they struggle to precisely guide the learning of radiance fields. In this paper, we propose a novel method for synthesizing novel views from sparse views with Gaussian Splatting that does not require external priors as supervision. Our key idea lies in exploring the self-supervisions inherent in the binocular stereo consistency between each pair of binocular images constructed with disparity-guided image warping. To this end, we additionally introduce a Gaussian opacity constraint which regularizes the Gaussian locations and avoids Gaussian redundancy for improving the robustness and efficiency of inferring 3D Gaussians from sparse views. Extensive experiments on the LLFF, DTU, and Blender datasets demonstrate that our method significantly outperforms the state-of-the-art methods.
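Disparity-guided warping can be illustrated on a single scanline. The sketch below assumes a rectified stereo pair, the textbook relation disparity = focal length × baseline / depth, and nearest-neighbour sampling; the function names and the sign convention (a pixel at column x in the left view appears at x − d in the right view) are assumptions for illustration, not details taken from the abstract.

```python
def disparity_from_depth(focal_px, baseline, depth):
    """Disparity in pixels for a rectified pair (assumed pinhole model)."""
    return focal_px * baseline / depth

def warp_right_to_left(right_row, disparity_row):
    """Rebuild a left-view scanline by sampling the right view at x - d.

    Uses nearest-neighbour sampling; columns that fall outside the
    right image are returned as None (no valid correspondence).
    """
    width = len(right_row)
    left_row = []
    for x, d in enumerate(disparity_row):
        src = x - int(round(d))
        left_row.append(right_row[src] if 0 <= src < width else None)
    return left_row
```

A consistency loss would then compare the warped scanline against the actual left view wherever the warped value is not `None`.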

Potato: A Data-Oriented Programming 3D Simulator for Large-Scale Heterogeneous Swarm Robotics

Aug 24, 2023

Abstract:Large-scale simulation with realistic nonlinear dynamic models is crucial for algorithm development in swarm robotics. However, existing platforms are mainly developed based on Object-Oriented Programming (OOP) and either use simple kinematic models to pursue a large number of simulated nodes or implement realistic dynamic models with a limited number of simulated nodes. In this paper, we develop a simulator based on Data-Oriented Programming (DOP) that utilizes GPU parallel computing to achieve large-scale swarm robotic simulations. Specifically, we use a multi-process approach to simulate heterogeneous agents and leverage PyTorch with GPU to simulate large numbers of homogeneous agents. We test our approach using a nonlinear quadrotor model and demonstrate that this DOP approach can maintain almost the same computational speed when the number of quadrotors is below 5,000. We also provide two examples to present the functionality of the platform.
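The data-oriented layout can be sketched by storing each state field for all agents in one array and updating every agent in a single pass, instead of keeping one object per agent. The example below is a deliberately simplified assumption: plain Python lists stand in for the GPU tensors the abstract mentions, and a vertical point mass stands in for the nonlinear quadrotor model; `step_swarm` is a hypothetical name.

```python
def step_swarm(pos_z, vel_z, thrust_acc, dt, g=9.81):
    """Advance all agents by one semi-implicit Euler step.

    Each argument list holds one field for every agent (struct of arrays),
    so the same update is applied uniformly across the whole swarm.
    """
    new_vel = [v + (a - g) * dt for v, a in zip(vel_z, thrust_acc)]
    new_pos = [p + v * dt for p, v in zip(pos_z, new_vel)]
    return new_pos, new_vel
```

With a tensor library, the two list comprehensions would become single elementwise operations over the whole batch, which is what makes the per-step cost nearly independent of the agent count up to the hardware's parallel capacity.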

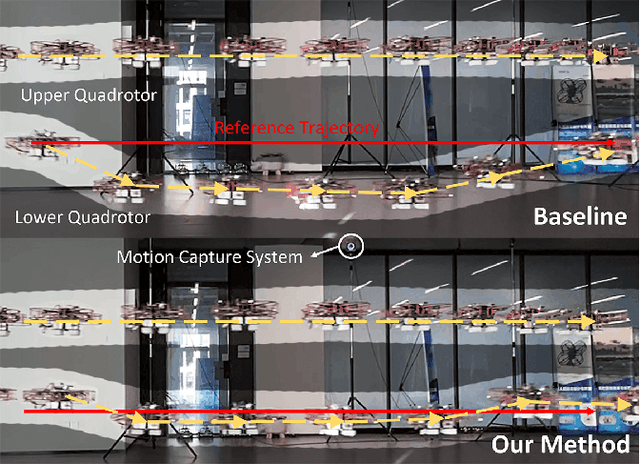

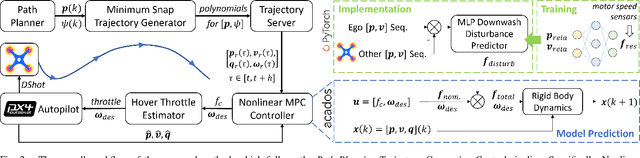

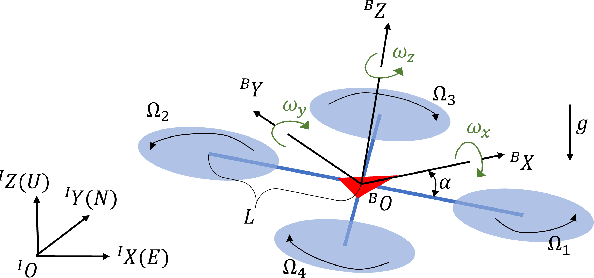

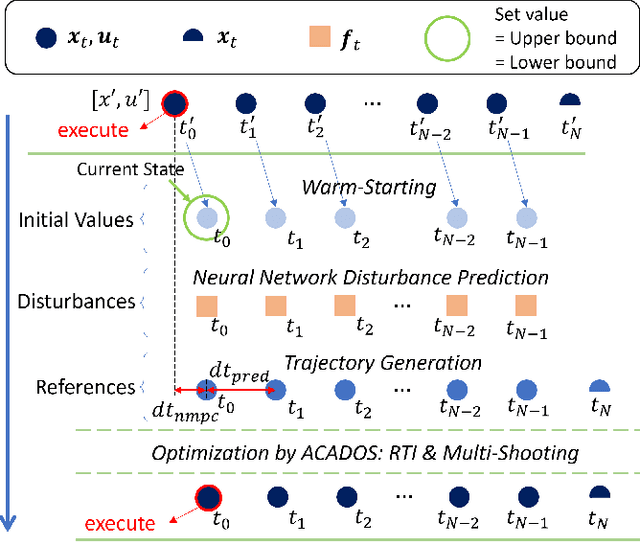

Nonlinear MPC for Quadrotors in Close-Proximity Flight with Neural Network Downwash Prediction

Apr 16, 2023

Abstract:Swarm aerial robots are required to maintain close proximity to successfully traverse narrow areas in cluttered environments. However, this movement is affected by the downwash effect generated by the other quadrotors in the swarm. This aerodynamic effect is highly nonlinear and hard to model by classic mathematical methods. In addition, the motor speeds of quadrotors risk reaching their limits when resisting the effect. To solve these problems, we integrate a Neural network Downwash Predictor with Nonlinear Model Predictive Control (NDP-NMPC) to propose a trajectory-tracking approach. The network is trained with spectral normalization to ensure robustness and safety on uncollected cases. The predicted disturbances are then incorporated into the optimization scheme in NMPC, which handles constraints to ensure that the motor speed remains within safe limits. We also design a quadrotor system, identify its parameters, and implement the proposed method onboard. Finally, we conduct an open-loop prediction experiment to verify the safety and effectiveness of the network, and a real-time closed-loop trajectory tracking experiment which demonstrates a 75.37% reduction of tracking error in height under the downwash effect.
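Spectral normalization rescales a weight matrix by its largest singular value, which bounds how much a linear layer can amplify its input. The abstract only states that the network is trained with spectral normalization, so the sketch below is a generic illustration using power iteration on a small dense matrix; the helper names are hypothetical, and it assumes a nonzero matrix and a start vector that is not orthogonal to the dominant singular direction.

```python
import math

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose(m):
    return [list(col) for col in zip(*m)]

def spectral_norm(w, iters=50):
    """Estimate the largest singular value of w by power iteration on w^T w."""
    wt = transpose(w)
    v = [1.0] * len(w[0])
    for _ in range(iters):
        v = matvec(wt, matvec(w, v))
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    u = matvec(w, v)
    return math.sqrt(sum(x * x for x in u))

def spectrally_normalize(w, iters=50):
    """Return w divided by its estimated spectral norm."""
    sigma = spectral_norm(w, iters)
    return [[a / sigma for a in row] for row in w]
```

After normalization each layer has spectral norm close to 1, so a stack of such layers with 1-Lipschitz activations has a bounded Lipschitz constant, which is the usual argument for predictable behaviour on inputs outside the training data.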

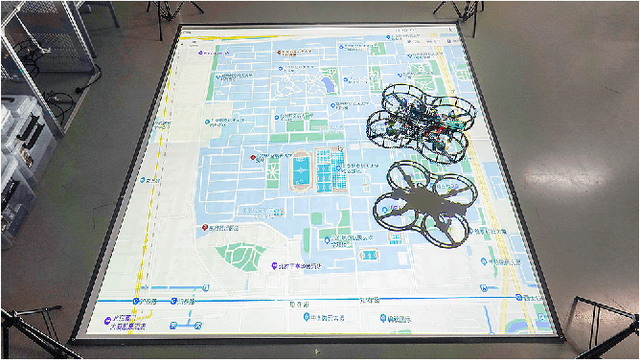

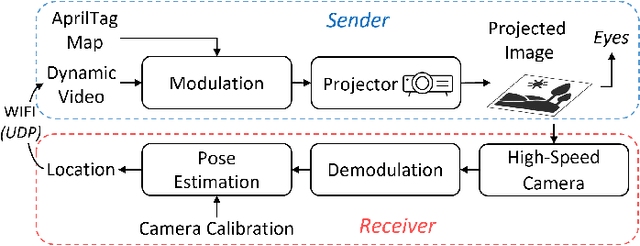

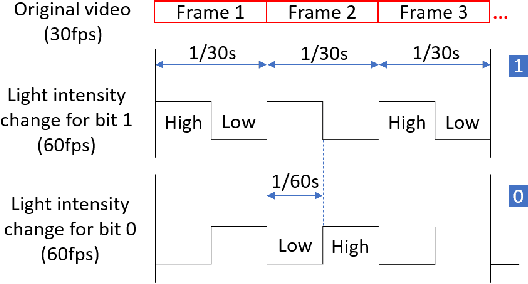

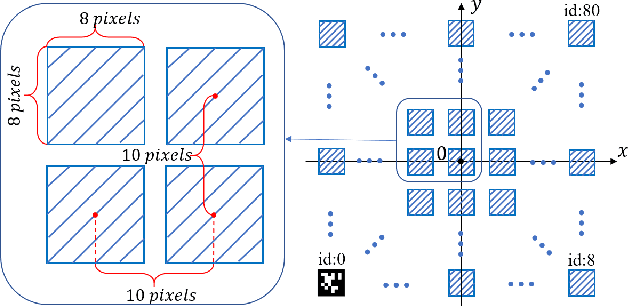

Indoor Localization for Quadrotors using Invisible Projected Tags

Mar 13, 2022

Abstract:Augmented reality (AR) technology has been introduced into the robotics field to narrow the visual gap between indoor and outdoor environments. However, without signals from satellite navigation systems, flight experiments in these indoor AR scenarios need other accurate localization approaches. This work proposes a real-time centimeter-level indoor localization method based on psycho-visually invisible projected tags (IPT), requiring a projector as the sender and quadrotors with high-speed cameras as the receiver. The method includes a modulation process for the sender, as well as demodulation and pose estimation steps for the receiver, where screen-camera communication technology is applied to hide fiducial tags by exploiting properties of human vision. Experiments have demonstrated that IPT can achieve accuracy within ten centimeters and a speed of about ten FPS. Compared with other localization methods for AR robotics platforms, IPT is affordable, requiring only a projector and high-speed cameras as hardware, and convenient, since it omits a coordinate alignment step. To the authors' best knowledge, this is the first time screen-camera communication is utilized for AR robot localization.

Vision-based Price Suggestion for Online Second-hand Items

Dec 10, 2020

Abstract:Different from shopping in physical stores, where people have the opportunity to closely check a product (e.g., touching the surface of a T-shirt or smelling the scent of perfume) before making a purchase decision, online shoppers rely greatly on the uploaded product images to make any purchase decision. The decision-making is challenging when selling or purchasing second-hand items online since estimating the items' prices is not trivial. In this work, we present a vision-based price suggestion system for the online second-hand item shopping platform. The goal of vision-based price suggestion is to help sellers set effective prices for their second-hand listings with the images uploaded to the online platforms. First, we propose to better extract representative visual features from the images with the aid of some other image-based item information (e.g., category, brand). Then, we design a vision-based price suggestion module which takes the extracted visual features along with some statistical item features from the shopping platform as the inputs to determine whether an uploaded item image is qualified for price suggestion by a binary classification model, and provide price suggestions for items with qualified images by a regression model. According to two demands from the platform, two different objective functions are proposed to jointly optimize the classification model and the regression model. For better model training, we also propose a warm-up training strategy for the joint optimization. Extensive experiments on a large real-world dataset demonstrate the effectiveness of our vision-based price prediction system.
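The two-stage flow described above, a binary classifier that gates a regression model, can be sketched as follows. The function `suggest_price` and its two callable arguments are hypothetical stand-ins; the abstract does not specify the models, features, or thresholds.

```python
def suggest_price(features, is_qualified, estimate_price):
    """Return a price suggestion only for listings that pass the gate.

    is_qualified: callable standing in for the binary classification model.
    estimate_price: callable standing in for the regression model.
    Returns None when the listing is not qualified for a suggestion.
    """
    if not is_qualified(features):
        return None
    return estimate_price(features)
```

Keeping the gate and the regressor as separate callables mirrors the abstract's split between deciding whether to suggest a price and deciding what price to suggest, even though the paper trains the two jointly.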

Price Suggestion for Online Second-hand Items with Texts and Images

Dec 10, 2020

Abstract:This paper presents an intelligent price suggestion system for online second-hand listings based on their uploaded images and text descriptions. The goal of price prediction is to help sellers set effective and reasonable prices for their second-hand items with the images and text descriptions uploaded to the online platforms. Specifically, we design a multi-modal price suggestion system which takes as input the extracted visual and textual features along with some statistical item features collected from the second-hand item shopping platform to determine whether the image and text of an uploaded second-hand item are qualified for reasonable price suggestion with a binary classification model, and provide price suggestions for second-hand items with qualified images and text descriptions with a regression model. To satisfy different demands, two different constraints are added into the joint training of the classification model and the regression model. Moreover, a customized loss function is designed for optimizing the regression model to provide price suggestions for second-hand items, which can not only maximize the gain of the sellers but also facilitate the online transaction. We also derive a set of metrics to better evaluate the proposed price suggestion system. Extensive experiments on a large real-world dataset demonstrate the effectiveness of the proposed multi-modal price suggestion system.
