Laurel D. Riek

Robot Navigation in Risky, Crowded Environments: Understanding Human Preferences

Mar 15, 2023

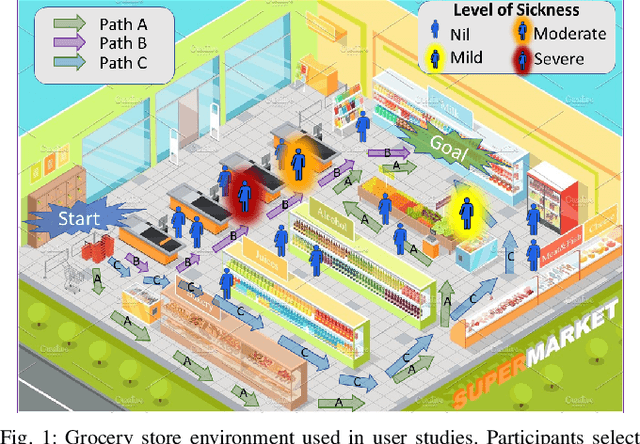

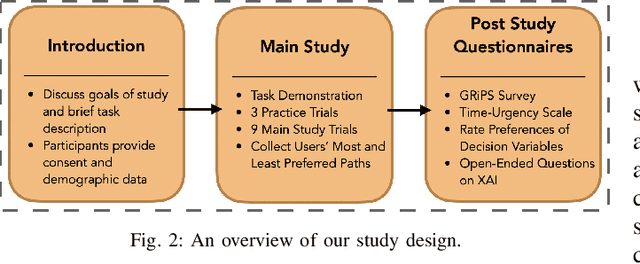

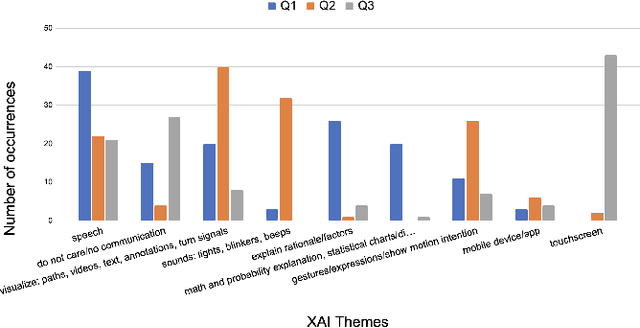

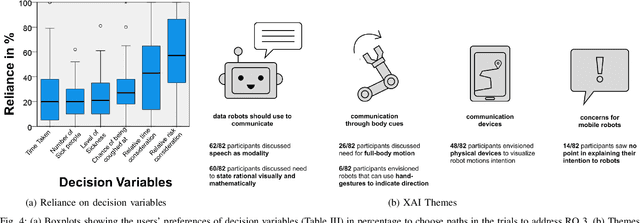

Abstract: Risky and crowded environments (RCEs) contain abstract sources of risk and uncertainty, which are perceived differently by humans, leading to a variety of behaviors. Thus, robots deployed in RCEs need to exhibit diverse perception and planning capabilities in order to interpret other human agents' behavior and act accordingly in such environments. To understand this problem domain, we conducted a study to explore human path choices in RCEs, enabling better robotic navigational explainable AI (XAI) designs. We created a novel COVID-19 pandemic grocery shopping scenario which had time-risk tradeoffs, and acquired users' path preferences. We found that participants showcase a variety of path preferences: from risky and urgent to safe and relaxed. To model users' decision making, we evaluated three popular risk models: Cumulative Prospect Theory (CPT), Conditional Value at Risk (CVaR), and Expected Risk (ER). We found that CPT captured people's decision making more accurately than CVaR and ER, corroborating theoretical results that CPT is more expressive and inclusive than CVaR and ER. We also found that people's self-assessments of risk and time-urgency do not correlate with their path preferences in RCEs. Finally, we conducted thematic analysis of open-ended questions, providing crucial design insights for robots in RCEs. Thus, through this study, we provide novel and critical insights about human behavior and perception to help design better navigational explainable AI (XAI) in RCEs.
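As a rough illustration of how the three risk models named in this abstract can score the same path differently, the sketch below evaluates a discrete distribution over path costs. It is not the paper's implementation: the function names, the treatment of every outcome as a loss, the Tversky-Kahneman weighting form, and the default parameters (`beta=0.88`, `lam=2.25`, `gamma=0.69`, the commonly cited 1992 estimates for losses) are all assumptions made for illustration.

```python
def expected_risk(costs, probs):
    """Expected Risk (ER): plain expectation of the cost distribution."""
    return sum(c * p for c, p in zip(costs, probs))


def cvar(costs, probs, alpha):
    """CVaR at level alpha in [0, 1): mean cost over the worst (1 - alpha) tail."""
    tail = 1.0 - alpha
    remaining = tail
    total = 0.0
    # Walk outcomes from the highest cost downward, consuming tail mass.
    for c, p in sorted(zip(costs, probs), reverse=True):
        take = min(p, remaining)
        total += c * take
        remaining -= take
        if remaining <= 1e-12:
            break
    return total / tail


def tk_weight(p, gamma):
    """Tversky-Kahneman probability weighting (assumed functional form)."""
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    num = p ** gamma
    return num / (num + (1.0 - p) ** gamma) ** (1.0 / gamma)


def cpt_cost(costs, probs, beta=0.88, lam=2.25, gamma=0.69):
    """CPT-style subjective cost, treating all (non-negative) costs as losses.

    Decision weights are differences of the weighted cumulative probability,
    accumulated from the worst outcome toward the best.
    """
    total, cum, prev_w = 0.0, 0.0, 0.0
    for c, p in sorted(zip(costs, probs), reverse=True):
        cum = min(1.0, cum + p)  # clamp to guard against float drift above 1
        w = tk_weight(cum, gamma)
        total += (w - prev_w) * lam * c ** beta
        prev_w = w
    return total
```

For a hypothetical risky path costing 10 with probability 0.9 and 100 with probability 0.1, compared against a safe path costing 30 for certain, `expected_risk` prefers the risky path (19 versus 30) while `cvar` at `alpha=0.9` prefers the safe one (100 versus 30). Setting `beta`, `lam`, and `gamma` to 1 makes `cpt_cost` coincide with `expected_risk`, which is one way to see why CPT is described as the more expressive model.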

Shared Control in Human Robot Teaming: Toward Context-Aware Communication

Mar 19, 2022

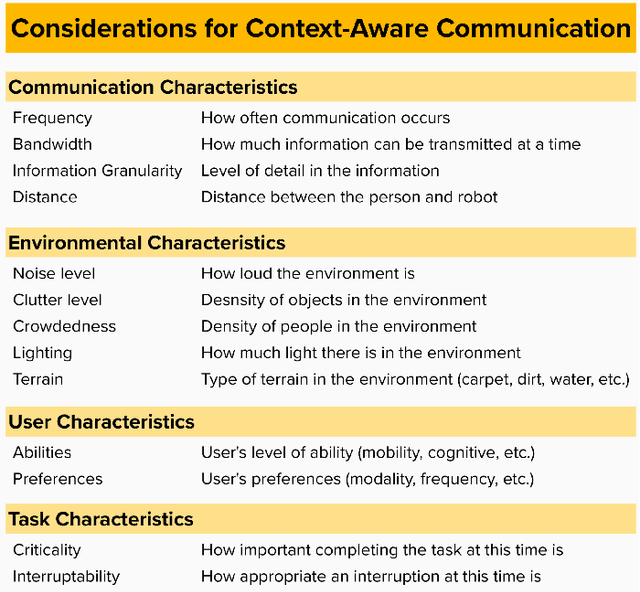

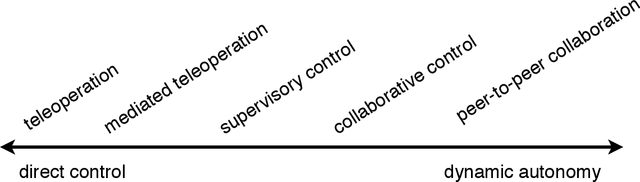

Abstract: In the field of Human-Robot Interaction (HRI), many researchers study shared control systems. Shared control is when a person and agent both contribute to the performance of a task in a collaborative way, often by providing control inputs for a robot. A central element of shared control is the nature of the communication between the person and robot, which could help the human-robot team handle a variety of challenging situations. In this paper, we identify key challenges in shared control where better communication design could be useful, including encountering novel situations and contexts, resolving tensions between preferences and performance, and alleviating cognitive burden and interruptions. Through the use of four exemplar shared control scenarios, we explore how well-designed human-robot communication strategies could help address each challenge. We hope this paper will draw attention to these challenges and assist researchers in advancing the development of shared control systems.

Multitask Bandit Learning through Heterogeneous Feedback Aggregation

Oct 29, 2020

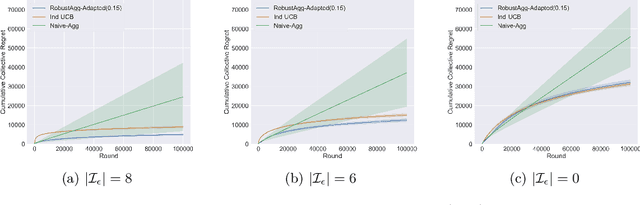

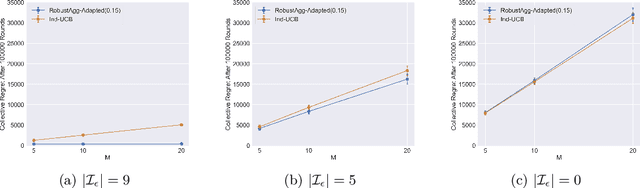

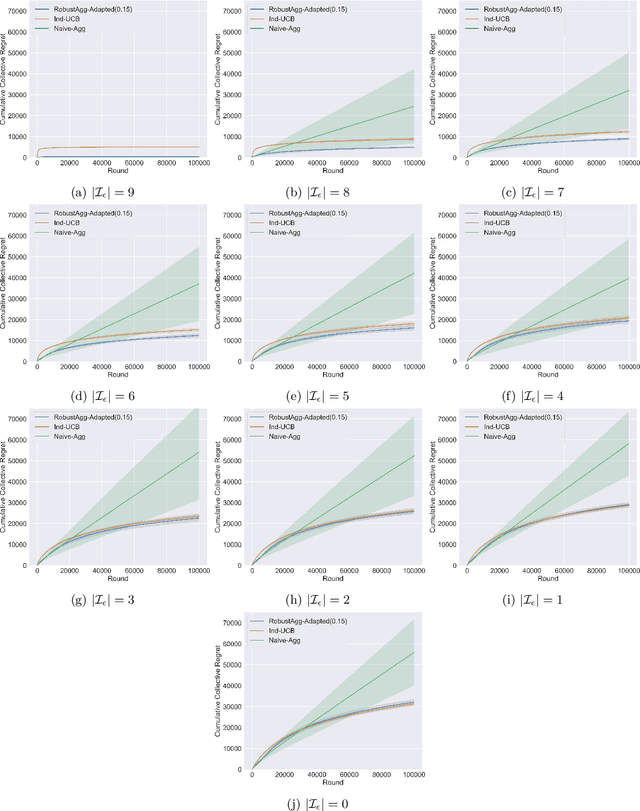

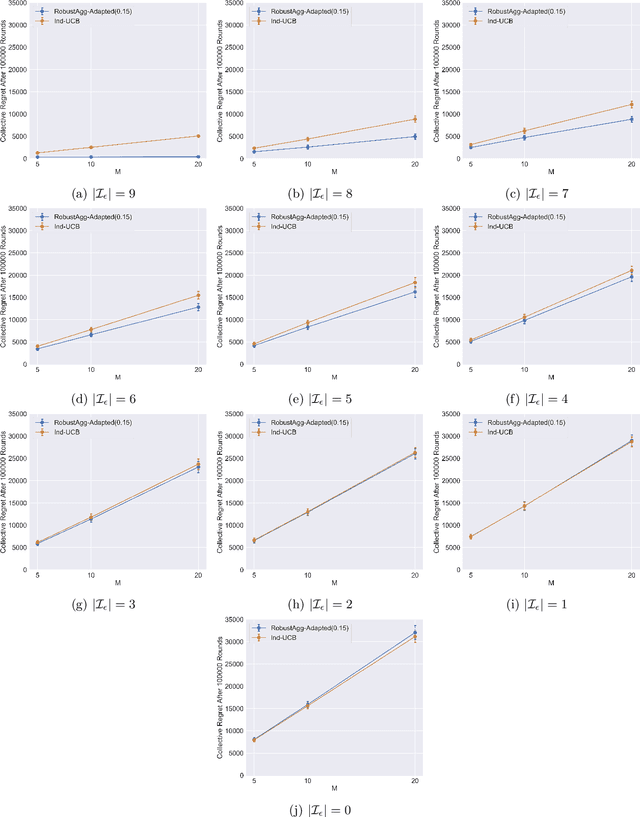

Abstract: In many real-world applications, multiple agents seek to learn how to perform highly related yet slightly different tasks in an online bandit learning protocol. We formulate this problem as the $\epsilon$-multi-player multi-armed bandit problem, in which a set of players concurrently interact with a set of arms, and for each arm, the reward distributions for all players are similar but not necessarily identical. We develop an upper confidence bound-based algorithm, RobustAgg$(\epsilon)$, that adaptively aggregates rewards collected by different players. In the setting where an upper bound on the pairwise similarities of reward distributions between players is known, we achieve instance-dependent regret guarantees that depend on the amenability of information sharing across players. We complement these upper bounds with nearly matching lower bounds. In the setting where pairwise similarities are unknown, we provide a lower bound, as well as an algorithm that trades off minimax regret guarantees for adaptivity to unknown similarity structure.
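To make the idea of aggregating rewards across similar players concrete, here is a minimal sketch of an upper-confidence index that chooses between a player's own samples and samples pooled over all players. It is not the paper's RobustAgg$(\epsilon)$ algorithm: the function name `ucb_index`, the UCB1-style bonus, and the way the similarity bound `eps` is added as a bias allowance are assumptions chosen for illustration.

```python
import math


def ucb_index(own_sum, own_n, pooled_sum, pooled_n, t, eps):
    """Optimistic index for one (player, arm) pair at round t >= 1.

    own_*    : reward sum and pull count from this player only.
    pooled_* : reward sum and pull count over all players (this one included).
    eps      : assumed bound on how far other players' mean rewards for this
               arm can differ from this player's mean.
    """
    if own_n == 0 and pooled_n == 0:
        return math.inf  # never pulled by anyone: force exploration

    def bonus(n):
        # UCB1-style exploration bonus; shrinks as the sample count n grows.
        return math.sqrt(2.0 * math.log(t) / n)

    # Bound built from this player's own data: unbiased but based on few samples.
    own_ucb = own_sum / own_n + bonus(own_n) if own_n > 0 else math.inf
    # Bound built from pooled data: more samples, but biased by up to eps.
    pooled_ucb = (
        pooled_sum / pooled_n + bonus(pooled_n) + eps if pooled_n > 0 else math.inf
    )
    # Both are valid upper bounds under the assumption, so keep the tighter one.
    return min(own_ucb, pooled_ucb)
```

When `eps` is small and other players have pulled the arm often, the pooled bound is tighter and sharing helps; when `eps` is large, the index falls back to the player's own data, which mirrors the abstract's point that the benefit depends on how amenable the arm is to information sharing.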

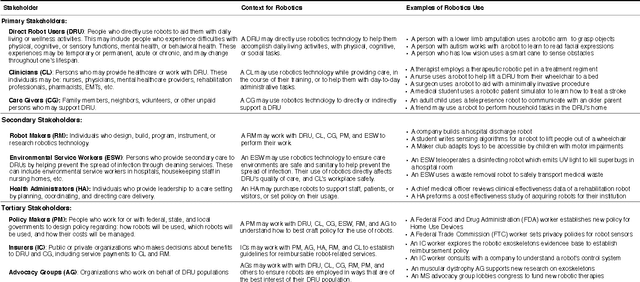

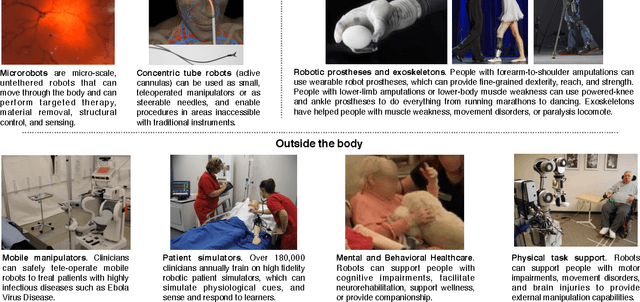

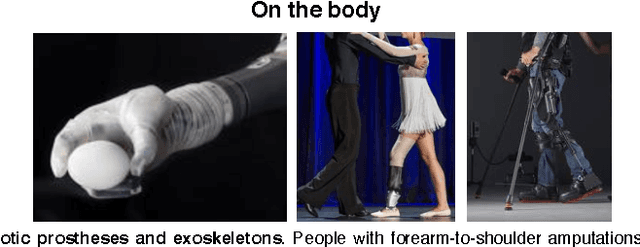

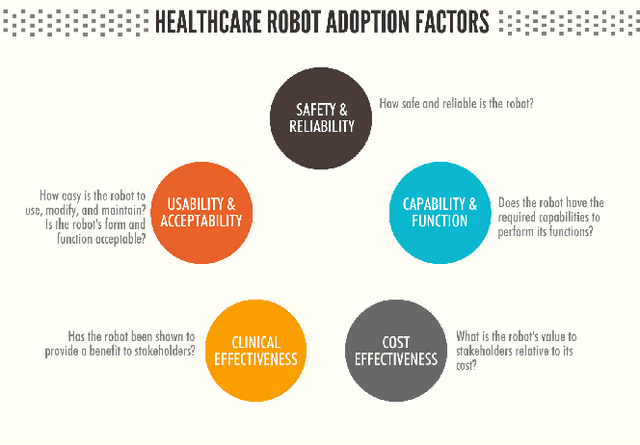

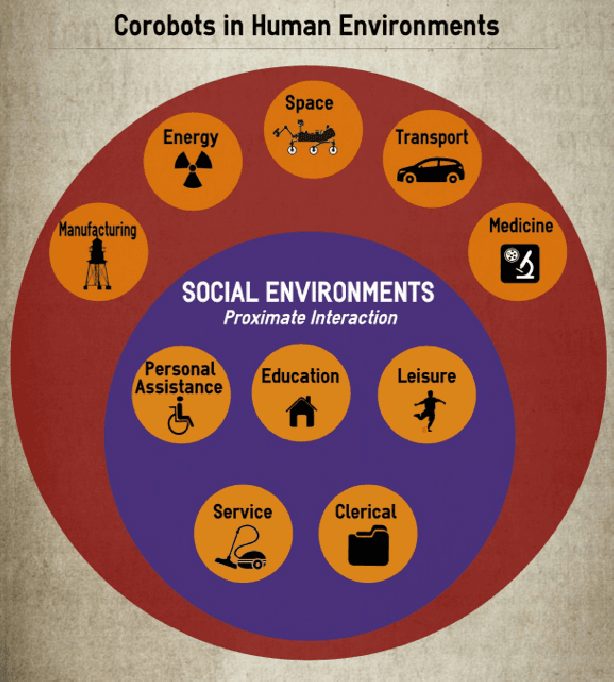

Healthcare Robotics

Apr 12, 2017

Abstract: Robots have the potential to be a game changer in healthcare: improving health and well-being, filling care gaps, supporting caregivers, and aiding healthcare workers. However, before robots are able to be widely deployed, it is crucial that both the research and industrial communities work together to establish a strong evidence base for healthcare robotics, and surmount likely adoption barriers. This article presents a broad contextualization of robots in healthcare by identifying key stakeholders, care settings, and tasks; reviewing recent advances in healthcare robotics; and outlining major challenges and opportunities to their adoption.

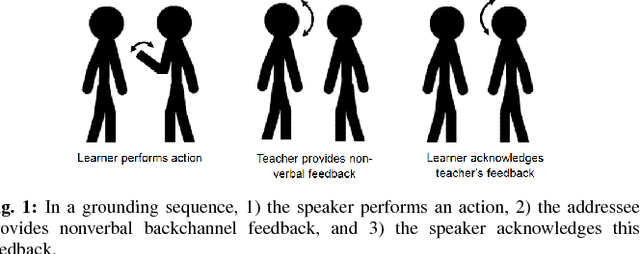

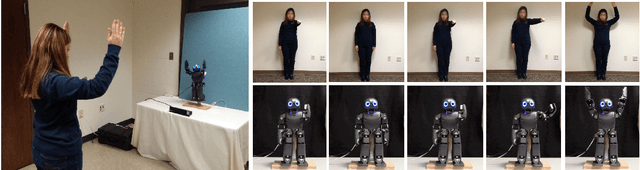

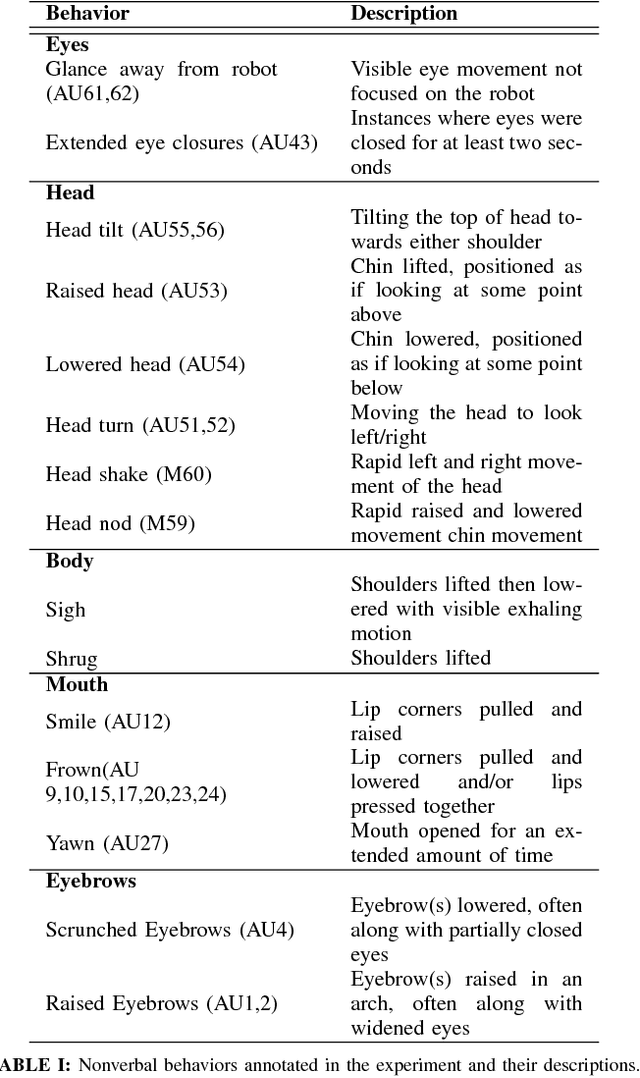

Exploring Implicit Human Responses to Robot Mistakes in a Learning from Demonstration Task

Jun 08, 2016

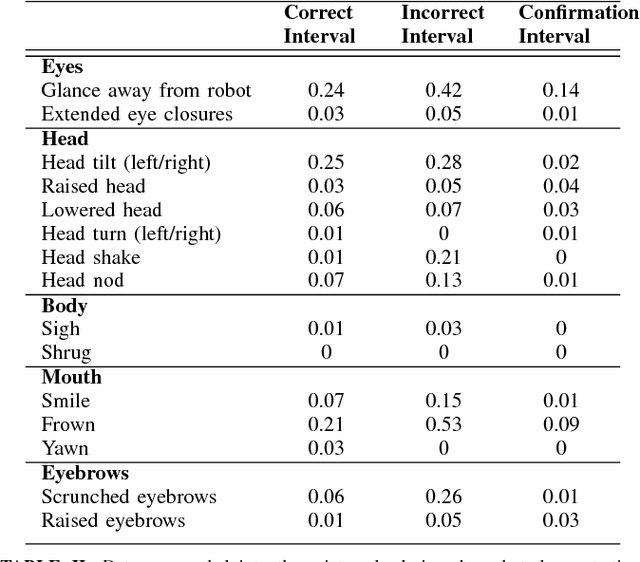

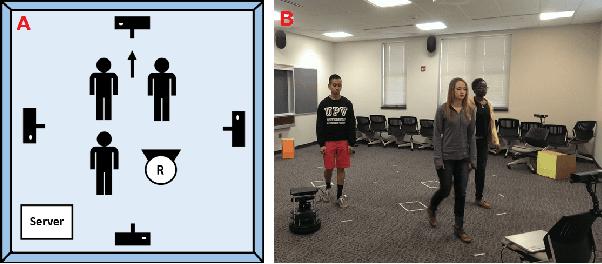

Abstract: As robots enter human environments, they will be expected to accomplish a tremendous range of tasks. It is not feasible for robot designers to pre-program these behaviors or know them in advance, so one way to address this is through end-user programming, such as via learning from demonstration (LfD). While significant work has been done on the mechanics of enabling robot learning from human teachers, one unexplored aspect is enabling mutual feedback between the human teacher and the robot during the learning process, i.e., implicit learning. In this paper, we explore one aspect of this mutual understanding, grounding sequences, where both a human and robot provide non-verbal feedback to signify their mutual understanding during interaction. We conducted a study where people taught an autonomous humanoid robot a dance, and performed gesture analysis to measure people's responses to the robot during correct and incorrect demonstrations.

Movement Coordination in Human-Robot Teams: A Dynamical Systems Approach

May 04, 2016

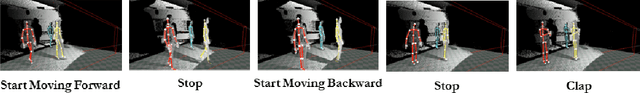

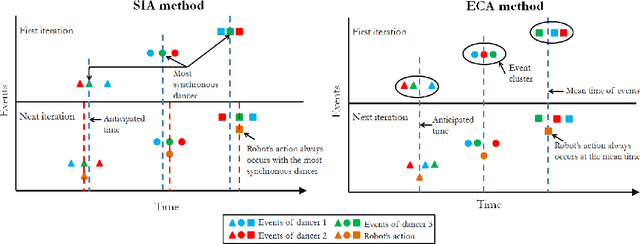

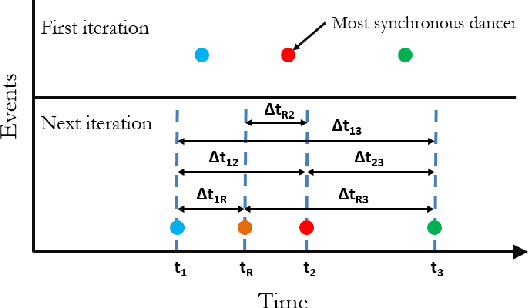

Abstract: In order to be effective teammates, robots need to be able to understand high-level human behavior to recognize, anticipate, and adapt to human motion. We have designed a new approach to enable robots to perceive human group motion in real-time, anticipate future actions, and synthesize their own motion accordingly. We explore this within the context of joint action, where humans and robots move together synchronously. In this paper, we present an anticipation method which takes high-level group behavior into account. We validate the method within a human-robot interaction scenario, where an autonomous mobile robot observes a team of human dancers, and then successfully and contingently coordinates its movements to "join the dance". We compared our anticipation method for moving the robot against another method that did not rely on high-level group behavior, and found that our method performed better, both in synchronizing the robot's motion more closely to the team and in exhibiting more contingent and fluent motion. These findings suggest that the robot performs better when it has an understanding of high-level group behavior than when it does not. This work will help enable others in the robotics community to build more fluent and adaptable robots in the future.

Robotics Technology in Mental Health Care

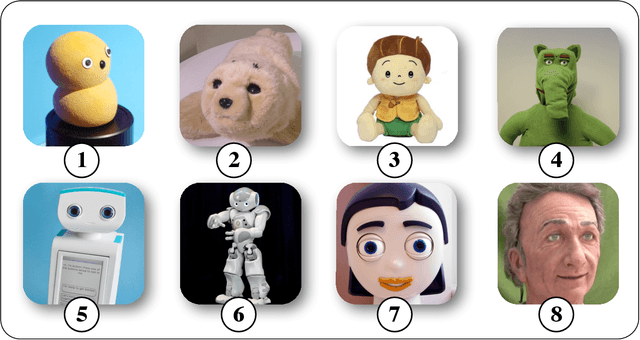

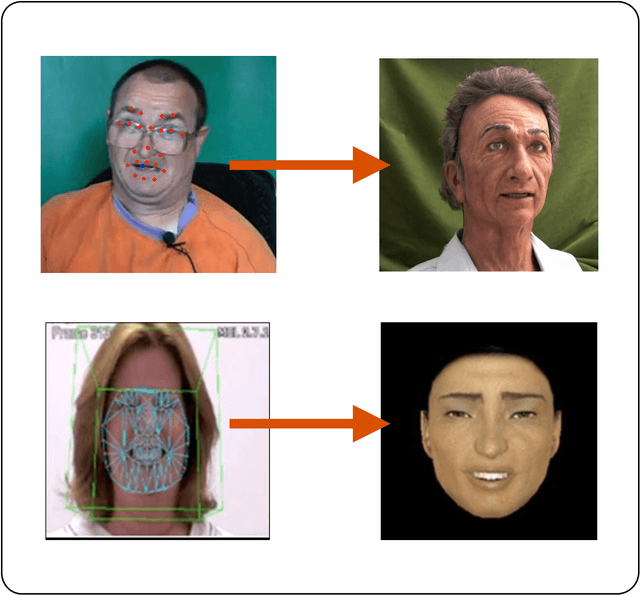

Nov 07, 2015

Abstract: This chapter discusses the existing and future use of robotics and intelligent sensing technology in mental health care. While the use of this technology is nascent in mental health care, it represents a potentially useful tool in the practitioner's toolbox. The goal of this chapter is to provide a brief overview of the field, discuss the recent use of robotics technology in mental health care practice, explore some of the design issues and ethical issues of using robots in this space, and finally to explore the potential of emerging technology.

Proceedings of the 1st Workshop on Robotics Challenges and Vision

Feb 13, 2014

Abstract: Proceedings of the 1st Workshop on Robotics Challenges and Vision (RCV2013)
