Kun Qin

Wuhan University

Research on Foundation Model for Spatial Data Intelligence: China's 2024 White Paper on Strategic Development of Spatial Data Intelligence

May 30, 2024

Abstract: This report focuses on spatial data intelligent large models, delving into the principles, methods, and cutting-edge applications of these models. It provides an in-depth discussion on the definition, development history, current status, and trends of spatial data intelligent large models, as well as the challenges they face. The report systematically elucidates the key technologies of spatial data intelligent large models and their applications in urban environments, aerospace remote sensing, geography, transportation, and other scenarios. Additionally, it summarizes the latest application cases of spatial data intelligent large models in themes such as urban development, multimodal systems, remote sensing, smart transportation, and resource environments. Finally, the report concludes with an overview and outlook on the development prospects of spatial data intelligent large models.

All Grains, One Scheme: Learning Multi-grain Instance Representation for Aerial Scene Classification

May 06, 2022

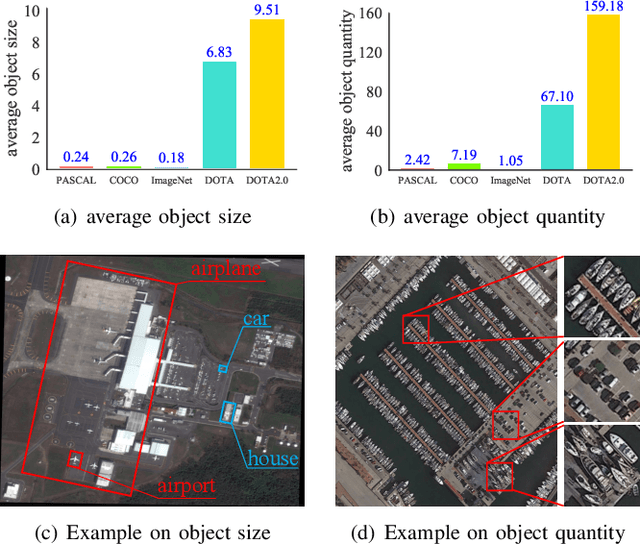

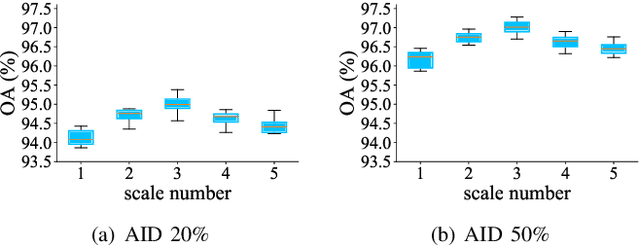

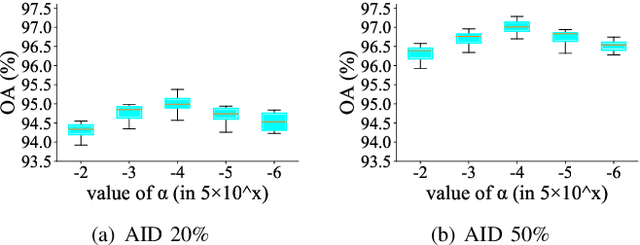

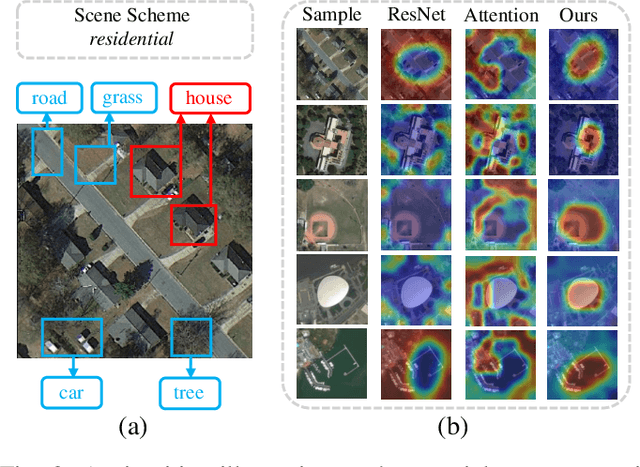

Abstract: Aerial scene classification remains challenging because: 1) the size of the key objects that determine the scene scheme varies greatly; 2) the image is often flooded with objects irrelevant to the scene scheme. Hence, effectively perceiving the regions of interest (RoIs) across a variety of sizes and building a more discriminative representation from such a complicated object distribution is vital to understanding an aerial scene. In this paper, we propose a novel all grains, one scheme (AGOS) framework to tackle these challenges. To the best of our knowledge, it is the first work to extend classic multiple instance learning (MIL) into a multi-grain formulation. Specifically, it consists of a multi-grain perception (MGP) module, a multi-branch multi-instance representation (MBMIR) module and a self-aligned semantic fusion (SSF) module. First, our MGP preserves the differential dilated convolutional features from the backbone, which magnifies the discriminative information from multiple grains. Then, our MBMIR highlights the key instances in the multi-grain representation under the MIL formulation. Finally, our SSF allows our framework to learn the same scene scheme from multi-grain instance representations and fuses them, so that the entire framework is optimized as a whole. Notably, our AGOS is flexible and can be easily adapted to existing CNNs in a plug-and-play manner. Extensive experiments on the UCM, AID and NWPU benchmarks demonstrate that our AGOS achieves performance comparable to state-of-the-art methods.
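As a rough illustration of what "differential dilated convolutional features" could look like, the sketch below applies the same kernel at several dilation rates and keeps the differences between adjacent rates. It is a 1D, pure-Python toy under stated assumptions: the function names `dilated_conv1d` and `differential_grains`, the zero padding, and the plain subtraction between adjacent rates are all illustrative choices, not the AGOS implementation, which operates on 2D CNN feature maps.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Same-length 1D dilated convolution (cross-correlation) with zero padding.

    Assumes an odd-length kernel so the output stays centered on each input position.
    """
    half = (len(kernel) // 2) * dilation
    output = []
    for i in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + k * dilation - half  # input index sampled by this kernel tap
            if 0 <= j < len(signal):     # positions outside the signal count as zero
                acc += weight * signal[j]
        output.append(acc)
    return output


def differential_grains(signal, kernel, dilations):
    """Return the differences between responses at adjacent dilation rates.

    Each difference isolates what a larger receptive field adds over a smaller
    one (an assumed reading of "differential" features, not the paper's code).
    """
    responses = [dilated_conv1d(signal, kernel, d) for d in dilations]
    return [
        [wide - narrow for narrow, wide in zip(lower, higher)]
        for lower, higher in zip(responses, responses[1:])
    ]


impulse = [0, 0, 0, 1, 0, 0, 0]
grains = differential_grains(impulse, kernel=[1, 1, 1], dilations=[1, 2])
```

With an impulse input, the dilation-1 response covers the three positions around the impulse while the dilation-2 response samples every other position, so the difference highlights the context that only the wider grain sees.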

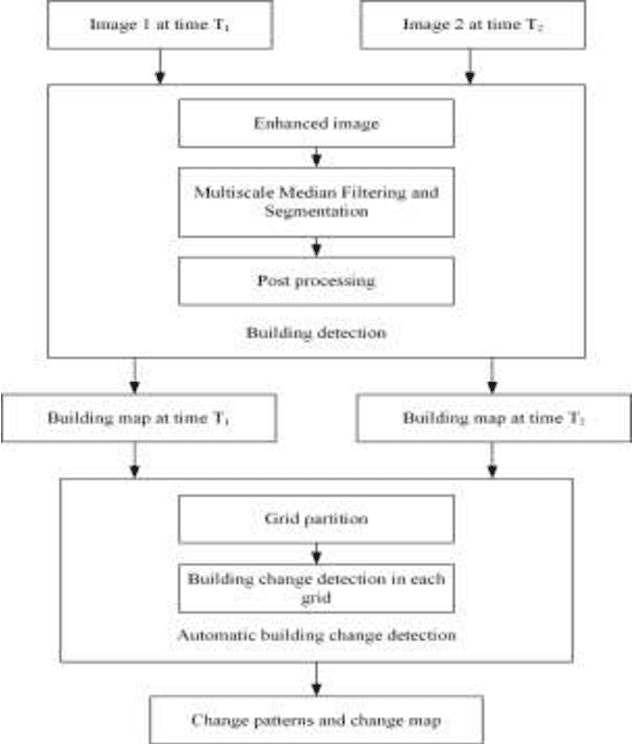

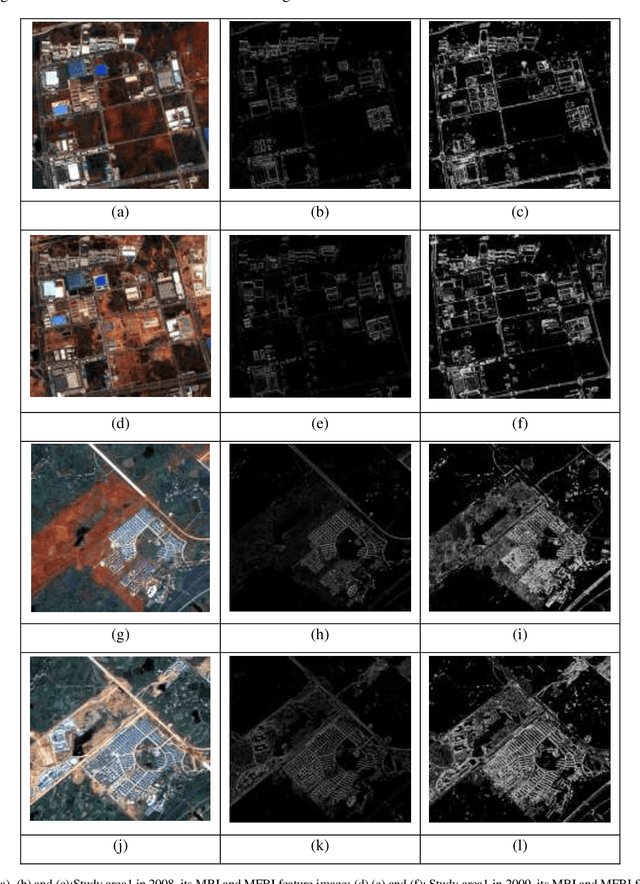

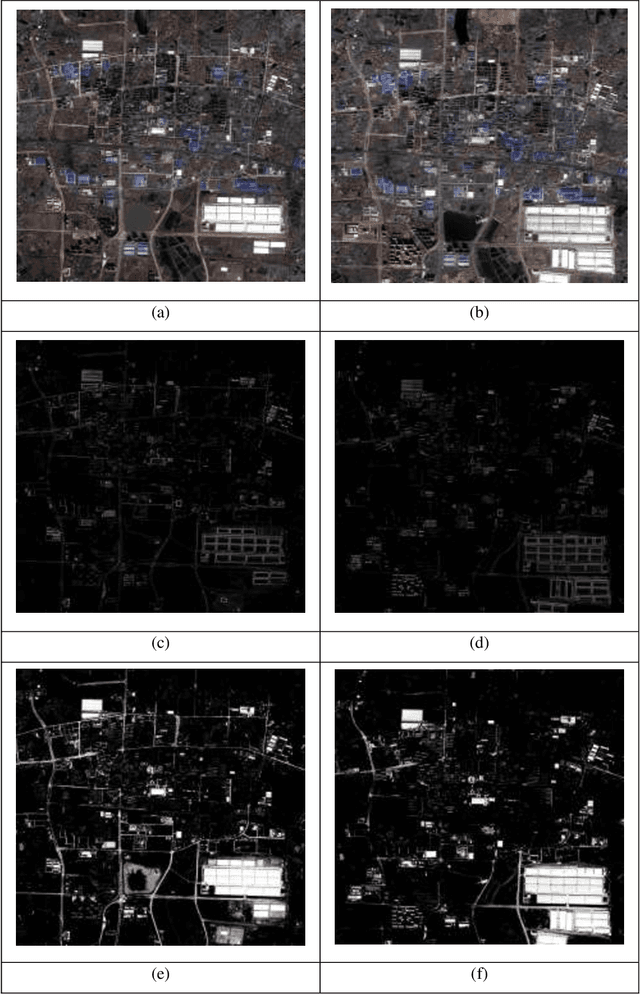

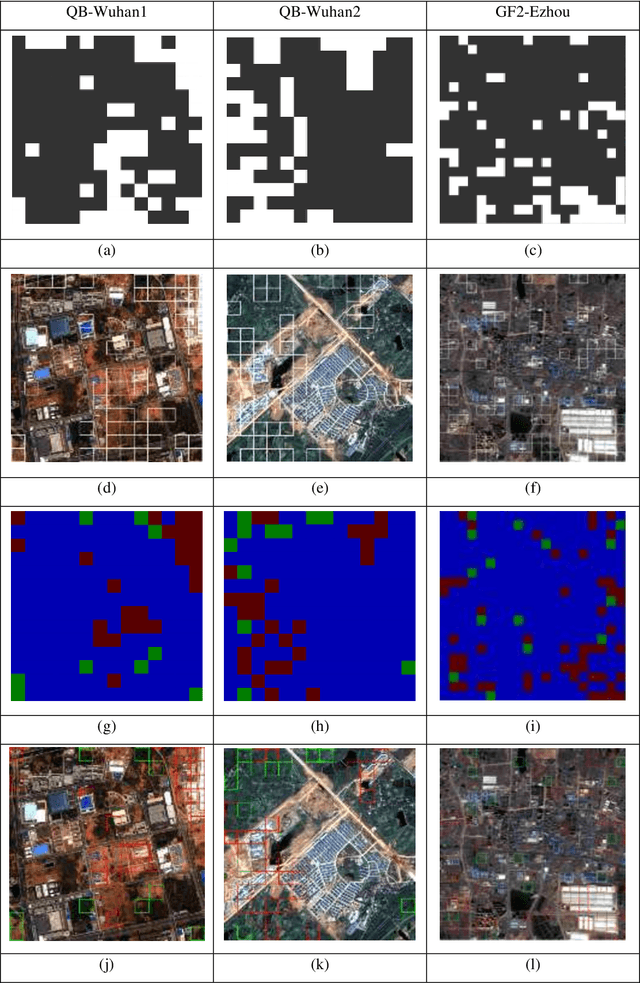

Building change detection based on multi-scale filtering and grid partition

Aug 22, 2019

Abstract: Building change detection is of great significance in high-resolution remote sensing applications. Multi-index learning, one of the state-of-the-art building change detection methods, still has drawbacks such as an inability to identify change types directly and the heavy computational cost of MBI. In this paper, a two-stage building change detection method is proposed to address these problems. In the first stage, a multi-scale filtering building index (MFBI) is calculated to detect building areas in each temporal image with fast speed and moderate accuracy. In the second stage, the images and the corresponding building maps are partitioned into grids. In each grid, the ratio of the building area at time T2 to that at time T1 is calculated. Each grid is then classified into one of three change patterns: significant increase, significant decrease, or approximately unchanged. Exhaustive experiments indicate that the proposed method can detect building change types directly and outperforms the current multi-index learning method.

* 8 pages, 6 figures, conference paper
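The second, grid-based stage described in the abstract above can be sketched as follows. This is a minimal pure-Python sketch, assuming binary building masks as nested lists; the function name `classify_grid_changes`, the `ratio_threshold` of 1.5, and the smoothing term `eps` (added to avoid division by zero in empty cells) are hypothetical choices, since the abstract does not state the actual thresholds.

```python
def classify_grid_changes(mask_t1, mask_t2, grid_size, ratio_threshold=1.5, eps=1.0):
    """Label each grid cell as 'increase', 'decrease', or 'unchanged'.

    mask_t1, mask_t2: equally sized 2D lists of 0/1 building labels at T1 and T2.
    ratio_threshold and eps are illustrative assumptions, not values from the paper.
    """
    rows, cols = len(mask_t1), len(mask_t1[0])
    labels = []
    for r0 in range(0, rows, grid_size):
        row_labels = []
        for c0 in range(0, cols, grid_size):
            area_t1 = area_t2 = 0
            # Count building pixels inside this grid cell at both times.
            for r in range(r0, min(r0 + grid_size, rows)):
                for c in range(c0, min(c0 + grid_size, cols)):
                    area_t1 += mask_t1[r][c]
                    area_t2 += mask_t2[r][c]
            # Smoothed ratio of building area at T2 to building area at T1.
            ratio = (area_t2 + eps) / (area_t1 + eps)
            if ratio >= ratio_threshold:
                row_labels.append("increase")
            elif ratio <= 1.0 / ratio_threshold:
                row_labels.append("decrease")
            else:
                row_labels.append("unchanged")
        labels.append(row_labels)
    return labels


mask_t1 = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
mask_t2 = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1]]
change_map = classify_grid_changes(mask_t1, mask_t2, grid_size=2)
```

Because each cell gets a direction rather than a generic "changed" flag, the change type is read off directly from the ratio, which is the property the abstract emphasizes.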

Multiple instance dense connected convolution neural network for aerial image scene classification

Aug 22, 2019

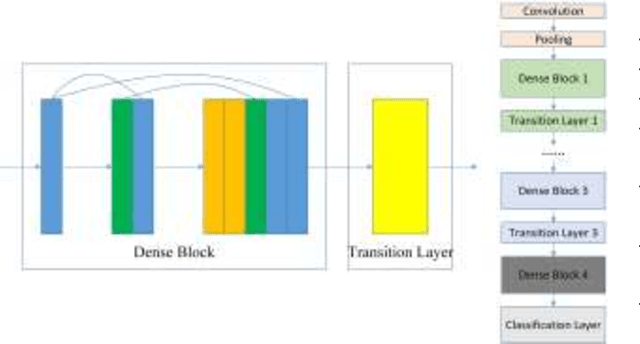

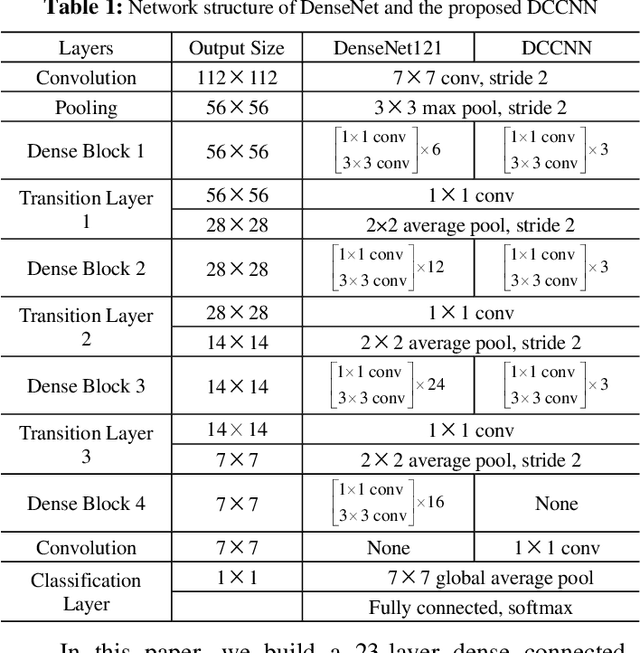

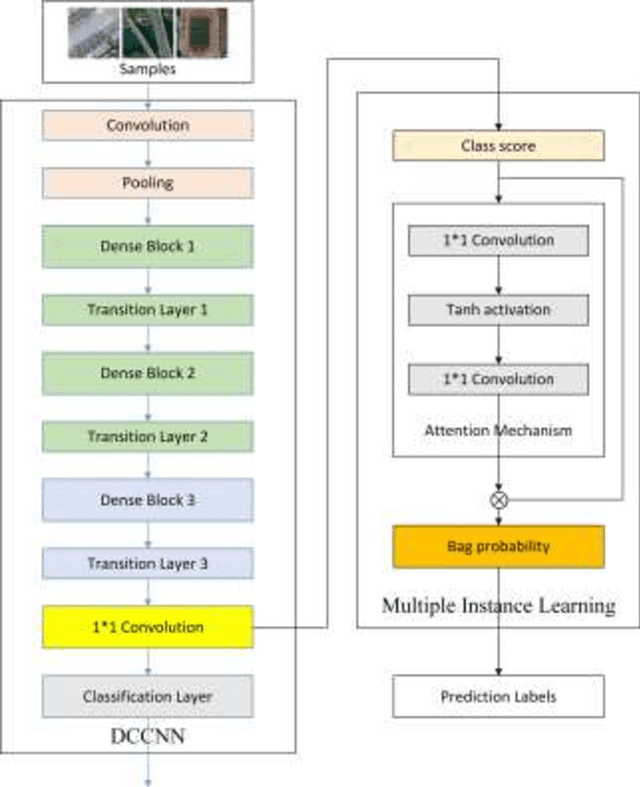

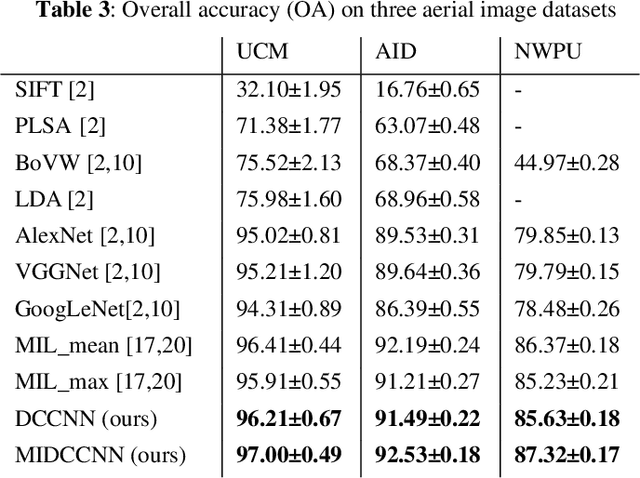

Abstract: With the development of deep learning, many state-of-the-art natural image scene classification methods have demonstrated impressive performance. While current convolution neural networks tend to extract global features and global semantic information in a scene, geo-spatial objects can be located anywhere in an aerial image scene and their spatial arrangement tends to be more complicated. One possible solution is to preserve more local semantic information and enhance feature propagation. In this paper, an end-to-end multiple instance dense connected convolution neural network (MIDCCNN) is proposed for aerial image scene classification. First, a 23-layer dense connected convolution neural network (DCCNN) is built to serve as a backbone for extracting convolution features. It is capable of preserving middle- and low-level convolution features. Then, an attention-based multiple instance pooling is proposed to highlight the local semantics in an aerial image scene. Finally, we minimize the loss between the bag-level predictions and the ground truth labels so that the whole framework can be trained directly. Experiments on three aerial image datasets demonstrate that our proposed method can outperform current baselines by a large margin.
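To make the attention-based multiple instance pooling step more concrete, here is a minimal pure-Python sketch of the general idea: score each instance, normalize the scores with a softmax, and take the weighted sum as the bag-level representation. The function name `attention_mil_pooling` and the linear scoring vector `score_weights` are assumptions for illustration; the abstract does not specify how MIDCCNN computes its attention scores.

```python
import math


def attention_mil_pooling(instances, score_weights):
    """Pool instance feature vectors into one bag-level vector.

    instances: list of equal-length feature vectors (one per local region).
    score_weights: hypothetical linear scoring vector; in a trained network
    this would be learned, here it is simply supplied by the caller.
    Returns (bag_vector, attention_weights).
    """
    # Unnormalized attention score for each instance (dot product).
    scores = [sum(w * x for w, x in zip(score_weights, inst)) for inst in instances]
    # Numerically stable softmax over the instances.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    attention = [e / total for e in exps]
    # Bag representation: attention-weighted sum of the instance features.
    dim = len(instances[0])
    bag = [sum(a * inst[d] for a, inst in zip(attention, instances)) for d in range(dim)]
    return bag, attention


instances = [[1.0, 0.0], [0.0, 1.0]]
uniform_bag, uniform_attention = attention_mil_pooling(instances, [0.0, 0.0])
focused_bag, focused_attention = attention_mil_pooling(instances, [10.0, 0.0])
```

With a zero scoring vector every instance receives equal weight and the pooling reduces to an average; a scoring vector that favors the first feature shifts nearly all the weight onto the first instance, which is how key local regions come to dominate the bag-level prediction.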
