Kin Gwn Lore

ReeFRAME: Reeb Graph based Trajectory Analysis Framework to Capture Top-Down and Bottom-Up Patterns of Life

Oct 19, 2024

Abstract:In this paper, we present ReeFRAME, a scalable Reeb graph-based framework designed to analyze vast volumes of GPS-enabled human trajectory data generated at 1Hz frequency. ReeFRAME models Patterns-of-life (PoL) at both the population and individual levels, utilizing Multi-Agent Reeb Graphs (MARGs) for population-level patterns and Temporal Reeb Graphs (TERGs) for individual trajectories. The framework's linear algorithmic complexity relative to the number of time points ensures scalability for anomaly detection. We validate ReeFRAME on six large-scale anomaly detection datasets, simulating real-time patterns with up to 500,000 agents over two months.

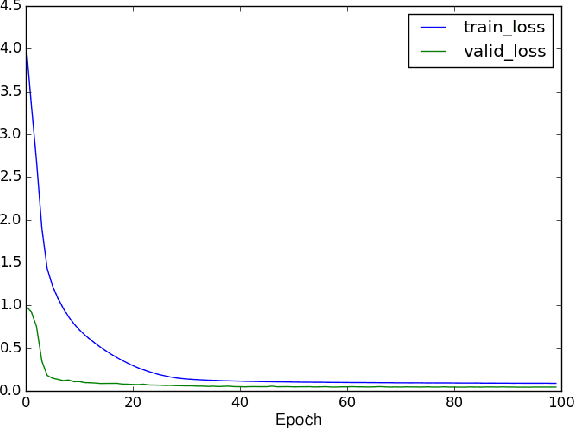

Implicit Context-aware Learning and Discovery for Streaming Data Analytics

Oct 18, 2019

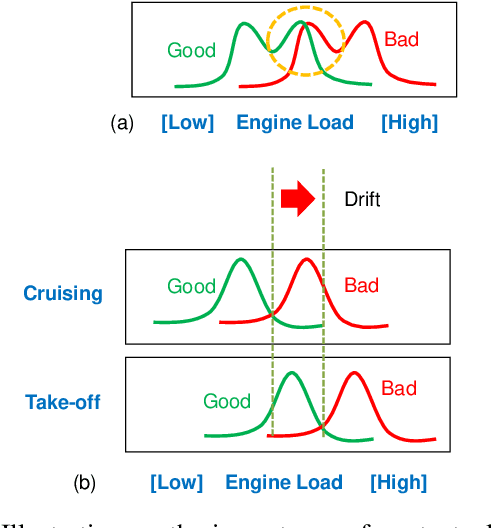

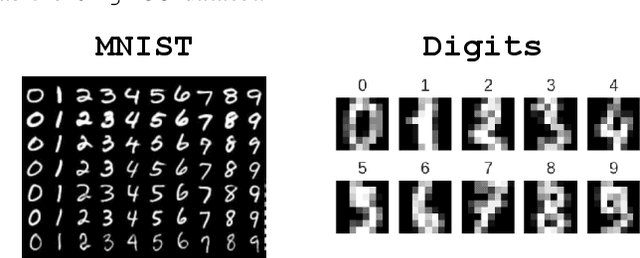

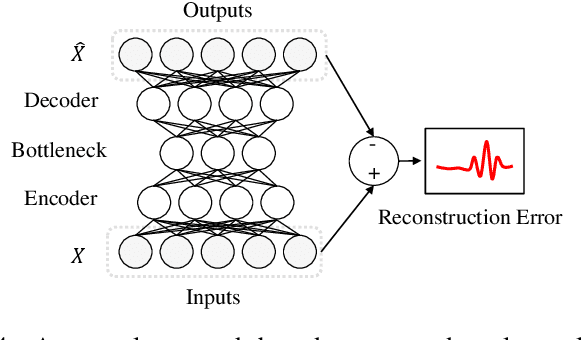

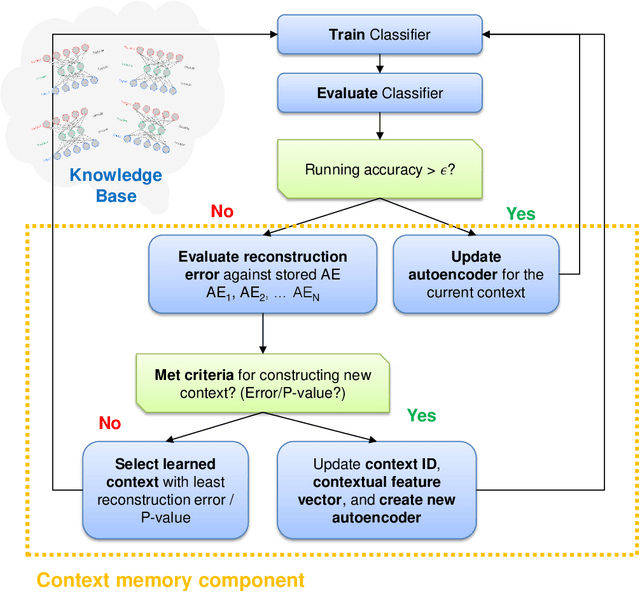

Abstract:The performance of a machine learning model can be further improved if contextual cues are provided as input along with base features that are directly related to an inference task. In offline learning, one can inspect historical training data to identify contextual clusters, either through feature clustering or by hand-crafting additional features to describe a context. While offline training enjoys the privilege of learning reliable models based on already-defined contextual features, online training for streaming data may be more challenging: the data is streamed through time, and the underlying context during the data generation process may change. Furthermore, the problem is exacerbated when the number of possible contexts is not known. In this study, we propose an online-learning algorithm that uses a neural network-based autoencoder to identify contextual changes during training and then compares the currently inferred context to a knowledge base of learned contexts as training advances. Results show that classifier training benefits from the automatically discovered contexts, demonstrating quicker learning convergence during contextual changes compared to current methods.

Root-cause Analysis for Time-series Anomalies via Spatiotemporal Graphical Modeling in Distributed Complex Systems

May 31, 2018

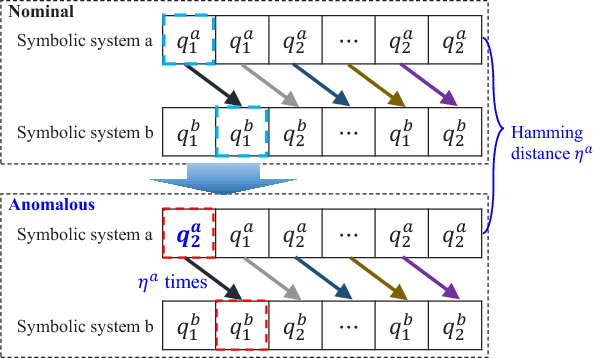

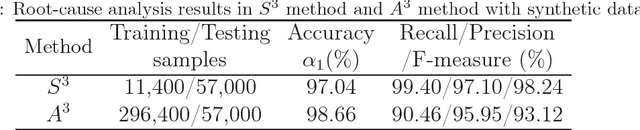

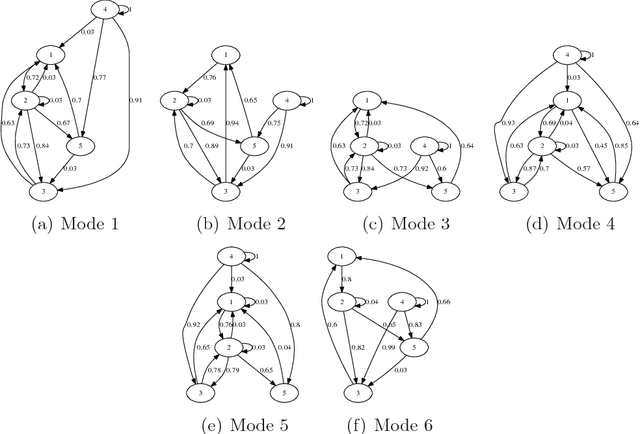

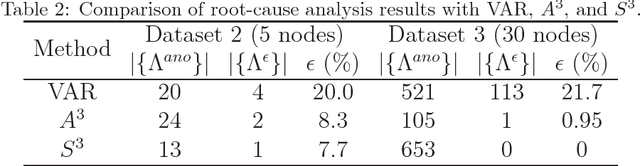

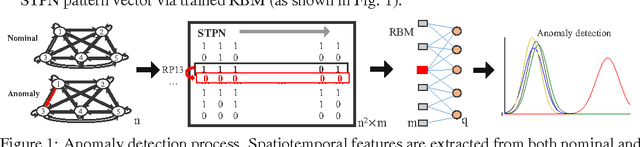

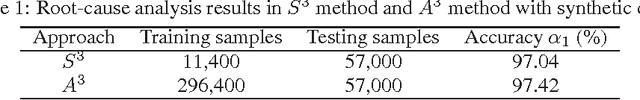

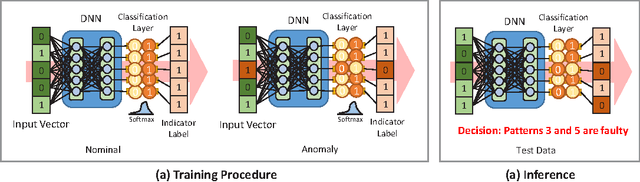

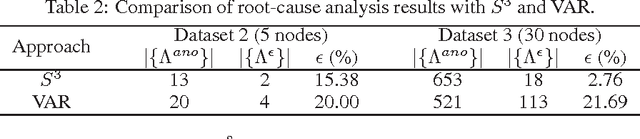

Abstract:Performance monitoring, anomaly detection, and root-cause analysis in complex cyber-physical systems (CPSs) are often highly intractable due to widely diverse operational modes, disparate data types, and complex fault propagation mechanisms. This paper presents a new data-driven framework for root-cause analysis, based on a spatiotemporal graphical modeling approach built on the concept of symbolic dynamics for discovering and representing causal interactions among sub-systems of complex CPSs. We formulate the root-cause analysis problem as a minimization problem via the proposed inference-based metric and present two approximate approaches for root-cause analysis, namely sequential state switching ($S^3$, based on the free energy concept of a restricted Boltzmann machine, RBM) and artificial anomaly association ($A^3$, a classification framework using deep neural networks, DNN). Synthetic data from cases with failed pattern(s) and anomalous node(s) are simulated to validate the proposed approaches. A real dataset based on the Tennessee Eastman process (TEP) is also used for comparison with other approaches. The results show that: (1) the $S^3$ and $A^3$ approaches obtain high accuracy in root-cause analysis under both pattern-based and node-based fault scenarios, in addition to successfully handling multiple nominal operating modes, (2) the proposed tool-chain is scalable while maintaining high accuracy, and (3) the proposed framework is robust and adaptive in different fault conditions and performs better than state-of-the-art methods.
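For reference, the free energy that $S^3$ is said to build on has a well-known closed form for a standard binary RBM. The sketch below computes it with NumPy. The parameter names (`W`, `b_visible`, `c_hidden`) are illustrative assumptions, and the code does not reproduce the paper's sequential state switching procedure.

```python
import numpy as np

def rbm_free_energy(v, W, b_visible, c_hidden):
    """Free energy of a binary RBM: F(v) = -b.v - sum_j log(1 + exp(c_j + (vW)_j)).

    v: visible vector, shape (n_visible,)
    W: weights, shape (n_visible, n_hidden)  (names are illustrative assumptions)
    """
    v = np.asarray(v, dtype=float)
    pre_activation = c_hidden + v @ W              # shape (n_hidden,)
    softplus = np.logaddexp(0.0, pre_activation)   # numerically stable log(1 + exp(x))
    return float(-(v @ b_visible) - softplus.sum())
```

Under this definition the model probability of a visible state is proportional to `exp(-F(v))`, so a lower free energy corresponds to a state the RBM considers more likely.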

Data-driven root-cause analysis for distributed system anomalies

May 31, 2018

Abstract:Modern distributed cyber-physical systems encounter a large variety of anomalies, and in many cases they are vulnerable to catastrophic fault propagation scenarios due to strong connectivity among the sub-systems. In this regard, root-cause analysis becomes highly intractable due to complex fault propagation mechanisms in combination with diverse operating modes. This paper presents a new data-driven framework for root-cause analysis that addresses such issues. The framework is based on a spatiotemporal feature extraction scheme for distributed cyber-physical systems, built on the concept of symbolic dynamics for discovering and representing causal interactions among subsystems of a complex system. We present two approaches for root-cause analysis, namely sequential state switching ($S^3$, based on the free energy concept of a Restricted Boltzmann Machine, RBM) and artificial anomaly association ($A^3$, a multi-class classification framework using deep neural networks, DNN). Synthetic data from cases with failed pattern(s) and an anomalous node are simulated to validate the proposed approaches, which are then compared with vector autoregressive (VAR) model-based root-cause analysis. A real dataset based on the Tennessee Eastman process (TEP) is also used for validation. The results show that: (1) the $S^3$ and $A^3$ approaches obtain high accuracy in root-cause analysis and successfully handle multiple nominal operation modes, and (2) the proposed tool-chain is scalable while maintaining high accuracy.

Learning Localized Geometric Features Using 3D-CNN: An Application to Manufacturability Analysis of Drilled Holes

Jun 22, 2017

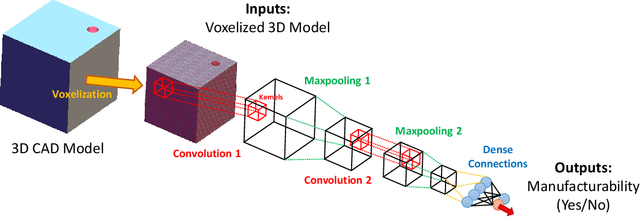

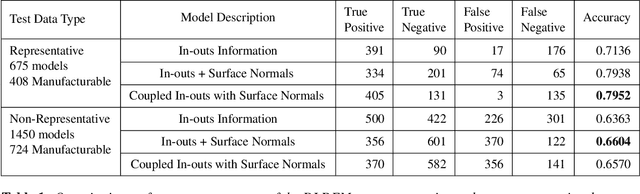

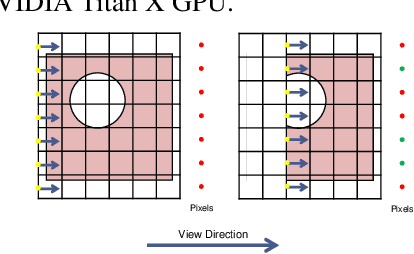

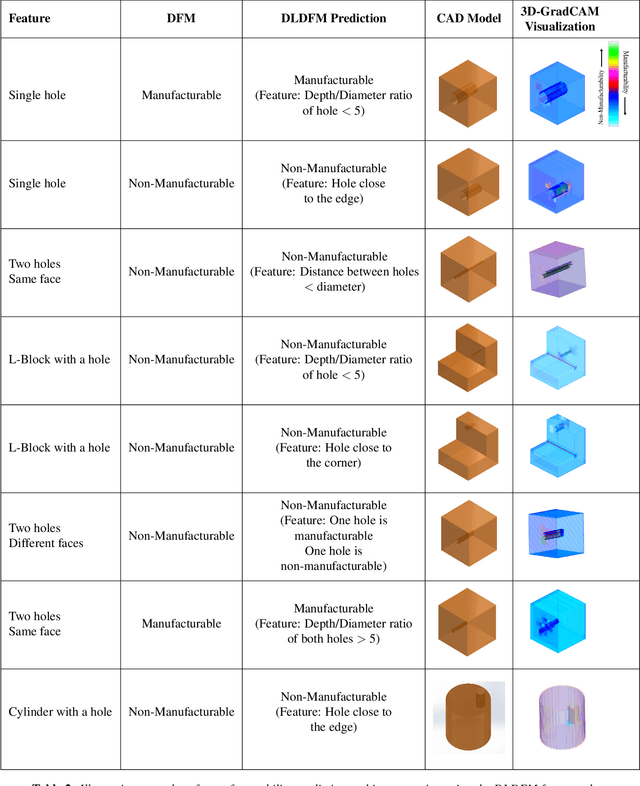

Abstract:3D convolutional neural networks (3D-CNN) have been used for object recognition based on the voxelized shape of an object. In this paper, we present a 3D-CNN based method to learn distinct local geometric features of interest within an object. In this context, the voxelized representation may not be sufficient to capture the distinguishing information about such local features. To enable efficient learning, we augment the voxel data with surface normals of the object boundary. We then train a 3D-CNN with this augmented data and identify the local features critical for decision-making using 3D gradient-weighted class activation maps. An application of this feature identification framework is to recognize difficult-to-manufacture drilled hole features in a complex CAD geometry. The framework can be extended to identify difficult-to-manufacture features at multiple spatial scales leading to a real-time decision support system for design for manufacturability.
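The idea of augmenting voxel data with surface normals can be sketched as follows. This is a minimal illustration under stated assumptions: the normals here are approximated from finite-difference gradients of the occupancy grid, which may differ from how the paper derives them from the object boundary, and the function name `augment_with_normals` is hypothetical.

```python
import numpy as np

def augment_with_normals(voxels):
    """Stack occupancy with approximate outward normals into a (4, D, H, W) array.

    Assumption: normals are estimated from the occupancy gradient; the paper
    may compute them differently (for example, directly from the CAD surface).
    """
    occ = np.asarray(voxels, dtype=float)
    # The occupancy gradient points from empty space into the solid,
    # so its negation approximates the outward surface normal.
    gx, gy, gz = np.gradient(occ)
    normals = -np.stack([gx, gy, gz])
    norm = np.linalg.norm(normals, axis=0, keepdims=True)
    # Normalize to unit length near the boundary; leave zeros elsewhere.
    normals = np.divide(normals, norm, out=np.zeros_like(normals), where=norm > 0)
    return np.concatenate([occ[None], normals], axis=0)
```

The resulting four-channel volume (occupancy plus three normal components) is the kind of input a 3D-CNN could consume in place of the plain occupancy grid.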

Deep Action Sequence Learning for Causal Shape Transformation

Nov 08, 2016

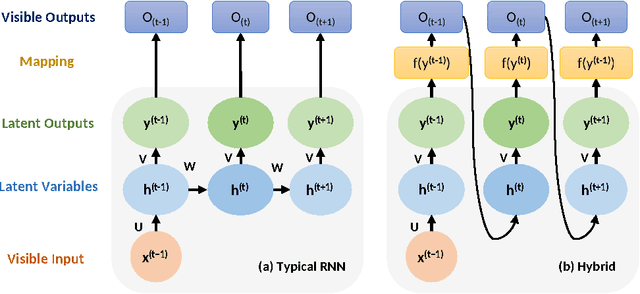

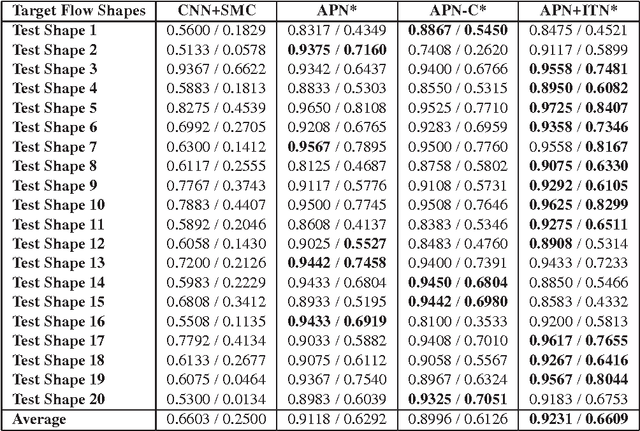

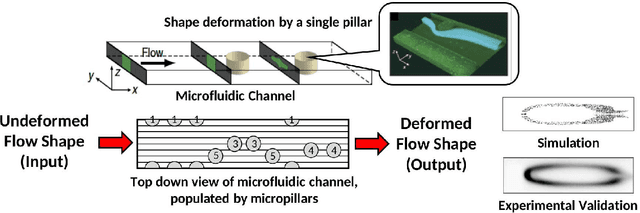

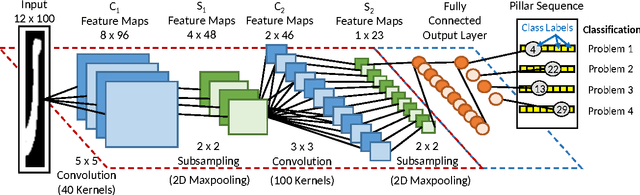

Abstract:Deep learning has become the method of choice in recent years for solving a wide variety of predictive analytics tasks. For sequence prediction, recurrent neural networks (RNN) are often the go-to architecture for exploiting sequential information where the output is dependent on previous computation. However, the dependencies of the computation lie in the latent domain, which may not be suitable for certain applications involving the prediction of a step-wise transformation sequence that is dependent on the previous computation only in the visible domain. We propose that a hybrid architecture of convolutional neural networks (CNN) and stacked autoencoders (SAE) is sufficient to learn a sequence of actions that nonlinearly transforms an input shape or distribution into a target shape or distribution with the same support. While such a framework can be useful in a variety of problems such as robotic path planning, sequential decision-making in games, and identifying material processing pathways to achieve desired microstructures, the application of the framework is exemplified by the control of fluid deformations in a microfluidic channel by deliberately placing a sequence of pillars. Learning of a multistep topological transform has significant implications for rapid advances in material science and biomedical applications.

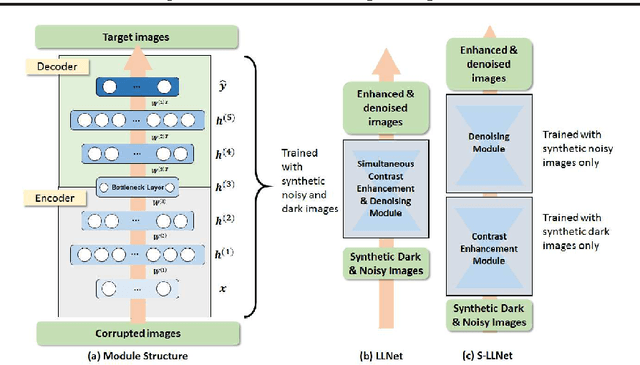

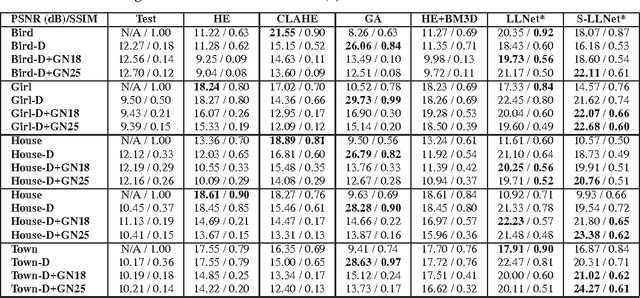

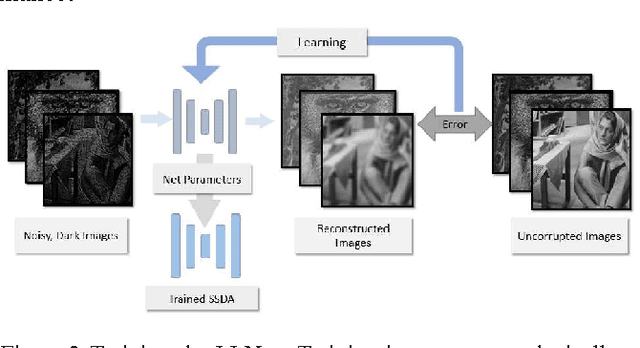

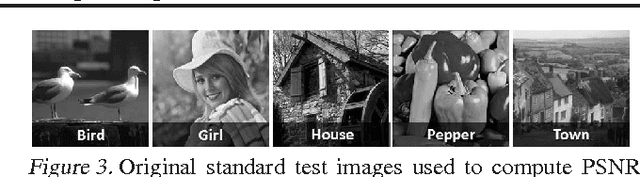

LLNet: A Deep Autoencoder Approach to Natural Low-light Image Enhancement

Apr 15, 2016

Abstract:In surveillance, monitoring and tactical reconnaissance, gathering the right visual information from a dynamic environment and accurately processing such data are essential ingredients in making informed decisions that determine the success of an operation. Camera sensors are often cost-limited in their ability to clearly capture objects without defects from images or videos taken in a poorly-lit environment. The goal in many applications is to enhance the brightness and contrast and to reduce the noise content of such images in an on-board, real-time manner. We propose a deep autoencoder-based approach to identify signal features from low-light images without handcrafting and to adaptively brighten images without over-amplifying the lighter parts in images (i.e., without saturation of image pixels) in high dynamic range. We show that a variant of the recently proposed stacked-sparse denoising autoencoder can learn to adaptively enhance and denoise from synthetically darkened and noisy training examples. The network can then be successfully applied to images from naturally low-light environments and/or hardware-degraded images. Results show significant credibility of deep learning based approaches both visually and by quantitative comparison with various popular enhancing, state-of-the-art denoising and hybrid enhancing-denoising techniques.
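One plausible way to produce synthetically darkened and noisy training examples is sketched below. The abstract does not state the exact degradation model, so the use of a gamma curve and additive Gaussian noise, the default parameter values, and the function name `darken_and_add_noise` are all assumptions made for illustration.

```python
import numpy as np

def darken_and_add_noise(image, gamma=3.0, noise_std=0.05, rng=None):
    """Return a darkened, noisy copy of `image` (floats in [0, 1]).

    Assumptions: darkening via a gamma curve (gamma > 1 darkens) and
    additive Gaussian noise; the paper's actual settings may differ.
    """
    rng = np.random.default_rng() if rng is None else rng
    img = np.clip(np.asarray(image, dtype=float), 0.0, 1.0)
    dark = img ** gamma
    noisy = dark + rng.normal(0.0, noise_std, size=dark.shape)
    return np.clip(noisy, 0.0, 1.0)
```

Pairs of `(darken_and_add_noise(x), x)` could then serve as input and target for a denoising autoencoder, which is the general training setup the abstract describes.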

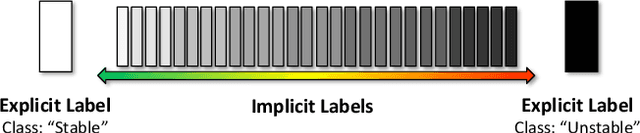

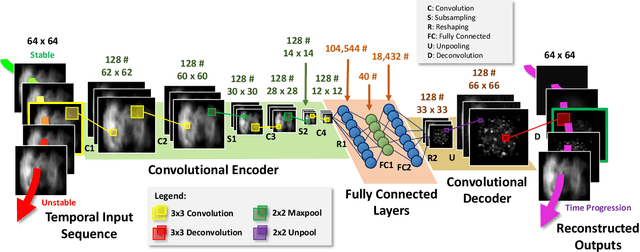

Early Detection of Combustion Instabilities using Deep Convolutional Selective Autoencoders on Hi-speed Flame Video

Mar 25, 2016

Abstract:This paper proposes an end-to-end convolutional selective autoencoder approach for early detection of combustion instabilities using rapidly arriving flame image frames. The instabilities arising in combustion processes cause significant deterioration and safety issues in various human-engineered systems such as land- and air-based gas turbine engines. These instabilities are characterized by self-sustaining, large-amplitude pressure oscillations and periodic shedding of coherent vortex structures at varying spatial scales. However, such instability is extremely difficult to detect before a combustion process becomes completely unstable due to its sudden (bifurcation-type) nature. In this context, an autoencoder is trained to selectively mask stable flame image frames and allow unstable ones. In that process, the model learns to identify and extract rich descriptive and explanatory flame shape features. With such a training scheme, the selective autoencoder is shown to be able to detect subtle instability features as a combustion process transitions from a stable to an unstable region. As a consequence, the deep learning tool-chain can perform as an early detection framework for combustion instabilities that will have a transformative impact on the safety and performance of modern engines.
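The selective training scheme can be illustrated by how the reconstruction targets might be built. This sketch assumes that "masking" a stable frame means asking the autoencoder to output an all-zero image, while unstable frames are reconstructed as-is. The abstract does not spell this out, and the function name `selective_targets` is hypothetical.

```python
import numpy as np

def selective_targets(frames, is_unstable):
    """Build autoencoder targets: zeros for stable frames, the frame itself otherwise.

    frames: array of shape (N, H, W); is_unstable: length-N booleans.
    Assumption: an all-zero target stands in for a "masked" stable frame.
    """
    frames = np.asarray(frames, dtype=float)
    mask = np.asarray(is_unstable, dtype=float).reshape(-1, 1, 1)
    return frames * mask
```

With targets built this way, the magnitude of the network output on a new frame could be read as a rough indicator of how unstable the flame appears.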
