Kashif Ahmad

Stylometry Analysis of Human and Machine Text for Academic Integrity

Jan 03, 2026

Abstract:This work addresses critical challenges to academic integrity, including plagiarism, fabrication, and verification of authorship of educational content, by proposing a Natural Language Processing (NLP)-based framework for authenticating students' content through author attribution and style change detection. Despite some initial efforts, several aspects of the topic are yet to be explored. In contrast to existing solutions, the paper provides a comprehensive analysis of the topic by targeting four relevant tasks: (i) classification of human and machine text, (ii) differentiating between single- and multi-authored documents, (iii) author change detection within multi-authored documents, and (iv) author recognition in collaboratively produced documents. The solutions proposed for the tasks are evaluated on two datasets generated with Gemini using two different prompts, namely a normal and a strict set of instructions. During experiments, some reduction in the performance of the proposed solutions is observed on the dataset generated through the strict prompt, demonstrating the complexities involved in detecting machine-generated text produced with cleverly crafted prompts. The generated datasets, code, and other relevant materials are publicly available on GitHub and are expected to provide a baseline for future research in the domain.

Social Media Informatics for Sustainable Cities and Societies: An Overview of the Applications, associated Challenges, and Potential Solutions

Dec 03, 2024

Abstract:In the modern world, our cities and societies face several technological and societal challenges, such as rapid urbanization, global warming & climate change, the digital divide, and social inequalities, increasing the need for more sustainable cities and societies. Addressing these challenges requires a multifaceted approach involving all the stakeholders, sustainable planning, efficient resource management, innovative solutions, and modern technologies. Like other modern technologies, social media informatics also plays its part in developing more sustainable and resilient cities and societies. Despite its limitations, social media informatics has proven very effective in various sustainable cities and society applications. In this paper, we review and analyze the role of social media informatics in sustainable cities and society by providing a detailed overview of its applications, associated challenges, and potential solutions. This work is expected to provide a baseline for future research in the domain.

Explainable AI-based Intrusion Detection System for Industry 5.0: An Overview of the Literature, associated Challenges, the existing Solutions, and Potential Research Directions

Jul 21, 2024

Abstract:Industry 5.0, which focuses on human and Artificial Intelligence (AI) collaboration for performing different tasks in manufacturing, involves a higher number of robots, Internet of Things (IoT) devices and interconnections, Augmented/Virtual Reality (AR/VR), and other smart devices. The heavy involvement of these devices and interconnections in various critical areas, such as economy, health, education, and defense systems, introduces several types of potential security flaws. AI itself has proven to be a very effective and powerful tool in different areas of cybersecurity, such as intrusion detection, malware detection, and phishing detection, among others. As in many other application areas, cybersecurity professionals have been reluctant to accept black-box ML solutions for cybersecurity applications. This reluctance pushed forward the adoption of eXplainable Artificial Intelligence (XAI) as a tool that helps explain how decisions are made in ML-based systems. In this survey, we present a comprehensive study of different XAI-based intrusion detection systems for Industry 5.0, and we also examine the impact of explainability and interpretability on cybersecurity practices through the lens of Adversarial XIDS (Adv-XIDS) approaches. Furthermore, we analyze the possible opportunities and challenges in XAI cybersecurity systems for Industry 5.0 that elicit future research toward XAI-based solutions to be adopted by high-stakes Industry 5.0 applications. We believe this rigorous analysis will establish a foundational framework for subsequent research endeavors within the specified domain.

A Named Entity Recognition and Topic Modeling-based Solution for Locating and Better Assessment of Natural Disasters in Social Media

May 01, 2024

Abstract:Over the last decade, as in other application domains, social media content has proven very effective in disaster informatics. However, due to the unstructured nature of the data, several challenges are associated with disaster analysis in social media content. To fully explore the potential of social media content in disaster informatics, access to relevant content and correct geo-location information is critical. In this paper, we propose a three-step solution to tackle these challenges. Firstly, the proposed solution classifies social media posts into relevant and irrelevant posts, followed by the automatic extraction of location information from the posts' text through Named Entity Recognition (NER) analysis. Finally, to quickly analyze the topics covered in large volumes of social media posts, we perform topic modeling, resulting in a list of top keywords that highlight the issues discussed in the tweets. For the Relevance Classification of Twitter Posts (RCTP), we propose a merit-based fusion framework combining the capabilities of four different models, namely BERT, RoBERTa, DistilBERT, and ALBERT, obtaining the highest F1-score of 0.933 on a benchmark dataset. For the Location Extraction from Twitter Text (LETT), we evaluated four models, namely BERT, RoBERTa, DistilBERT, and ELECTRA, in an NER framework, obtaining the highest F1-score of 0.960. For topic modeling, we used the BERTopic library to discover the hidden topic patterns in the relevant tweets. The experimental results of all the components of the proposed end-to-end solution are very encouraging and hint at the potential of social media content and NLP in disaster management.

Social Media and Artificial Intelligence for Sustainable Cities and Societies: A Water Quality Analysis Use-case

Apr 23, 2024

Abstract:This paper focuses on water quality analysis, a very important societal challenge. Because water is one of the key factors in the economic and social development of society, providing it and ensuring its quality have always been among the top priorities of public authorities. To ensure the quality of water, different methods for monitoring and assessing the water networks, such as offline and online surveys, are used. However, these surveys have several limitations, such as the limited number of participants and low frequency due to the labor involved in conducting them. In this paper, we propose a Natural Language Processing (NLP) framework to automatically collect and analyze water-related posts from social media for data-driven decisions. The proposed framework is composed of two components, namely (i) text classification, and (ii) topic modeling. For text classification, we propose a merit-fusion-based framework incorporating several Large Language Models (LLMs) where different weight selection and optimization methods are employed to assign weights to the LLMs. In topic modeling, we employed the BERTopic library to discover the hidden topic patterns in the water-related tweets. We also analyzed relevant tweets originating from different regions and countries to explore global, regional, and country-specific issues and water-related concerns. We also collected and manually annotated a large-scale dataset, which is expected to facilitate future research on the topic.

Stylometry Analysis of Multi-authored Documents for Authorship and Author Style Change Detection

Jan 12, 2024

Abstract:In recent years, the increasing use of Artificial Intelligence based text generation tools has posed new challenges in document provenance, authentication, and authorship detection. However, advancements in stylometry have provided opportunities for automatic authorship and author change detection in multi-authored documents using style analysis techniques. Style analysis can serve as a primary step toward document provenance and authentication through authorship detection. This paper investigates three key tasks of style analysis: (i) classification of single and multi-authored documents, (ii) single change detection, which involves identifying the point where the author switches, and (iii) multiple author-switching detection in multi-authored documents. We formulate all three tasks as classification problems and propose a merit-based fusion framework that integrates several state-of-the-art natural language processing (NLP) algorithms and weight optimization techniques. We also explore the potential of special characters, which are typically removed during pre-processing in NLP applications, on the performance of the proposed methods for these tasks by conducting extensive experiments on both cleaned and raw datasets. Experimental results demonstrate significant improvements over existing solutions for all three tasks on a benchmark dataset.

Document Provenance and Authentication through Authorship Classification

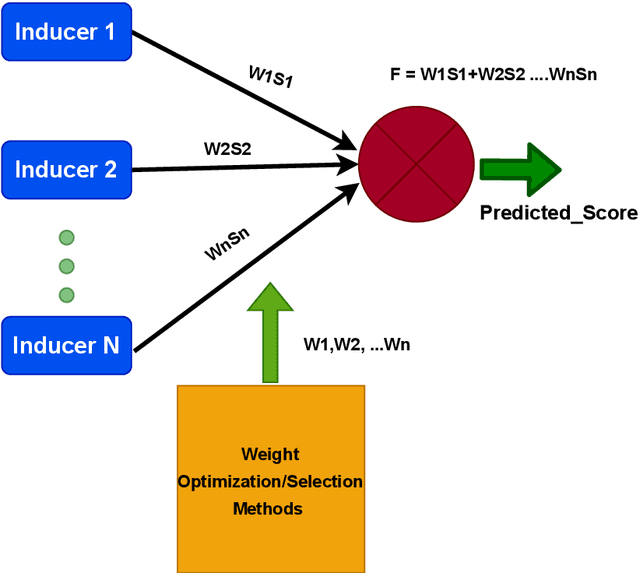

Mar 02, 2023

Abstract:Style analysis, a relatively less explored topic, enables several interesting applications. For instance, it allows authors to adjust their writing style to produce a more coherent document in collaboration. Similarly, style analysis can also be used as a primary step for document provenance and authentication. In this paper, we propose an ensemble-based text-processing framework for the classification of single and multi-authored documents, which is one of the key tasks in style analysis. The proposed framework incorporates several state-of-the-art text classification algorithms, including classical Machine Learning (ML) algorithms, transformers, and deep learning algorithms, both individually and in merit-based late fusion. For the merit-based late fusion, we employed several weight optimization and selection methods to assign merit-based weights to the individual text classification algorithms. We also analyze the impact on the task of characters that are usually excluded during pre-processing in NLP applications by conducting experiments on both cleaned and raw data. The proposed framework is evaluated on a large-scale benchmark dataset, significantly improving performance over the existing solutions.

* 7 pages; 3 tables; 1 figure

Floods Relevancy and Identification of Location from Twitter Posts using NLP Techniques

Jan 01, 2023

Abstract:This paper presents our solutions for the MediaEval 2022 task on DisasterMM. The task is composed of two subtasks, namely (i) Relevance Classification of Twitter Posts (RCTP), and (ii) Location Extraction from Twitter Texts (LETT). The RCTP subtask aims at differentiating flood-related and non-relevant social media posts, while LETT is a Named Entity Recognition (NER) task that aims at extracting location information from the text. For RCTP, we proposed four different solutions based on BERT, RoBERTa, DistilBERT, and ALBERT, obtaining F1-scores of 0.7934, 0.7970, 0.7613, and 0.7924, respectively. For LETT, we used three models, namely BERT, RoBERTa, and DistilBERT, obtaining F1-scores of 0.6256, 0.6744, and 0.6723, respectively.

Relevance Classification of Flood-related Twitter Posts via Multiple Transformers

Jan 01, 2023

Abstract:In recent years, social media has been widely explored as a potential source of communication and information in disasters and emergency situations. Several interesting works and case studies of disaster analytics exploring different aspects of natural disasters have already been conducted. Along with its great potential, disaster analytics comes with several challenges, mainly due to the nature of social media content. In this paper, we explore one such challenge and propose a text classification framework to deal with noisy Twitter data. More specifically, we employed several transformers, both individually and in combination, to differentiate between relevant and non-relevant Twitter posts, achieving the highest F1-score of 0.87.

A Late Fusion Framework with Multiple Optimization Methods for Media Interestingness

Jul 11, 2022

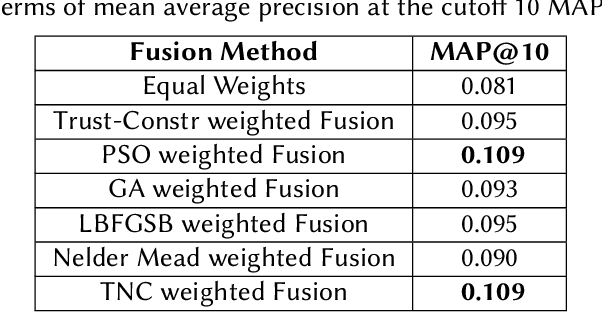

Abstract:Recent advances in multimedia analytics, Computer Vision (CV), and Artificial Intelligence (AI) algorithms have resulted in several interesting tools allowing automatic analysis and retrieval of multimedia content of users' interest. However, retrieving the content of interest generally involves analysis and extraction of semantic features, such as emotions and interestingness level. The extraction of such meaningful information is a complex task, and the performance of individual algorithms is generally very low. One way to enhance the performance of the individual algorithms is to combine the predictive capabilities of multiple algorithms using fusion schemes. This allows the individual algorithms to complement each other, leading to improved performance. This paper proposes several fusion methods for the media interestingness score prediction task introduced in CLEF Fusion 2022. The proposed methods include both a naive fusion scheme, where all the inducers are treated equally, and a merit-based fusion scheme, where multiple weight optimization methods are employed to assign weights to the individual inducers. In total, we used six optimization methods: Particle Swarm Optimization (PSO), a Genetic Algorithm (GA), Nelder-Mead, Trust Region Constrained (TRC), the Limited-memory Broyden-Fletcher-Goldfarb-Shanno Algorithm (LBFGSA), and the Truncated Newton Algorithm (TNA). Overall, better results are obtained with PSO and TNA, achieving a mean average precision at 10 of 0.109. The task is complex, and scores are generally low. We believe the presented analysis will provide a baseline for future research in the domain.
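
The difference between the naive and merit-based fusion schemes mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the toy scores, the mean-squared-error objective, and the plain random search are all assumptions made for illustration. The random search merely stands in for the optimizers named in the abstract (PSO, GA, Nelder-Mead, and so on), and the paper itself reports mean average precision at 10 rather than squared error.

```python
import random

def fuse(scores, weights):
    """Weighted average of the inducers' scores for each sample.

    scores[k][i] is the score inducer k assigns to sample i.
    Equal weights give the naive fusion scheme.
    """
    total = sum(weights)
    n_samples = len(scores[0])
    return [
        sum(w * s[i] for w, s in zip(weights, scores)) / total
        for i in range(n_samples)
    ]

def mse(pred, target):
    """Mean squared error (an assumed objective for this sketch)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

def random_search_weights(scores, target, trials=2000, seed=0):
    """Hypothetical stand-in for a weight optimizer: keep the best
    randomly drawn weight vector, starting from equal weights."""
    rng = random.Random(seed)
    n = len(scores)
    best_w = [1.0] * n                      # naive fusion as the baseline
    best_err = mse(fuse(scores, best_w), target)
    for _ in range(trials):
        w = [rng.random() for _ in range(n)]
        if sum(w) == 0:                     # guard against division by zero
            continue
        err = mse(fuse(scores, w), target)
        if err < best_err:
            best_w, best_err = w, err
    return best_w, best_err

# Toy validation data (made up): three inducers of differing quality.
target = [0.1, 0.4, 0.8, 0.3]
scores = [
    [0.12, 0.38, 0.79, 0.33],  # close to the target
    [0.50, 0.10, 0.40, 0.90],  # far from the target
    [0.20, 0.50, 0.70, 0.20],  # in between
]

naive_err = mse(fuse(scores, [1.0, 1.0, 1.0]), target)
merit_w, merit_err = random_search_weights(scores, target)
print("naive error:", naive_err)
print("merit-based weights:", merit_w, "error:", merit_err)
```

Because the search starts from equal weights, the merit-based error on the validation data can never exceed the naive one; whether the learned weights generalize to unseen data is a separate question that this sketch does not address.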
