Jun Lang

Test-Time Code-Switching for Cross-lingual Aspect Sentiment Triplet Extraction

Jan 24, 2025

Abstract: Aspect Sentiment Triplet Extraction (ASTE) is a thriving research area, with impressive results achieved on high-resource languages. However, cross-lingual transfer for the ASTE task remains relatively unexplored, and current code-switching methods still suffer from term boundary detection issues and out-of-dictionary problems. In this study, we introduce a novel Test-Time Code-SWitching (TT-CSW) framework, which bridges the gap between the bilingual training phase and monolingual test-time prediction. During training, a generative model is developed on bilingual code-switched training data and can produce bilingual ASTE triplets for bilingual inputs. At test time, we employ an alignment-based code-switching technique for test-time augmentation. Extensive experiments on cross-lingual ASTE datasets validate the effectiveness of our proposed method. We achieve an average improvement of 3.7% in weighted-average F1 across four datasets in different languages. Additionally, we set a benchmark using ChatGPT and GPT-4, and demonstrate that even smaller generative models fine-tuned with our proposed TT-CSW framework surpass ChatGPT and GPT-4 by 14.2% and 5.0%, respectively.

Improving LLM-based Machine Translation with Systematic Self-Correction

Mar 04, 2024

Abstract: Large Language Models (LLMs) have achieved impressive results in Machine Translation (MT). However, careful human evaluations reveal that the translations produced by LLMs still contain multiple errors. Importantly, feeding such error information back into the LLMs can lead to self-correction and improved translation performance. Motivated by these insights, we introduce a systematic LLM-based self-correcting translation framework, named TER, which stands for Translate, Estimate, and Refine. Our findings demonstrate that 1) our self-correction framework successfully helps LLMs improve their translation quality across a wide range of languages, whether translating from high-resource to low-resource languages or whether the language pairs are English-centric or centered on other languages; 2) TER exhibits superior systematicity and interpretability compared to previous methods; 3) different estimation strategies yield varied impacts on AI feedback, directly affecting the effectiveness of the final corrections. We further compare different LLMs and conduct experiments involving self-correction and cross-model correction to investigate the potential relationship between the translation and evaluation capabilities of LLMs. Our code and data are available at https://github.com/fzp0424/self_correct_mt

DMBGN: Deep Multi-Behavior Graph Networks for Voucher Redemption Rate Prediction

Jun 07, 2021

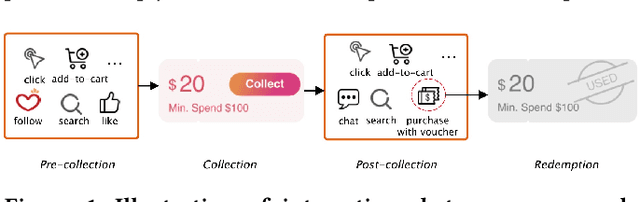

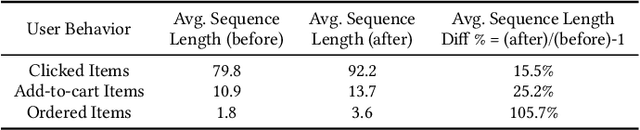

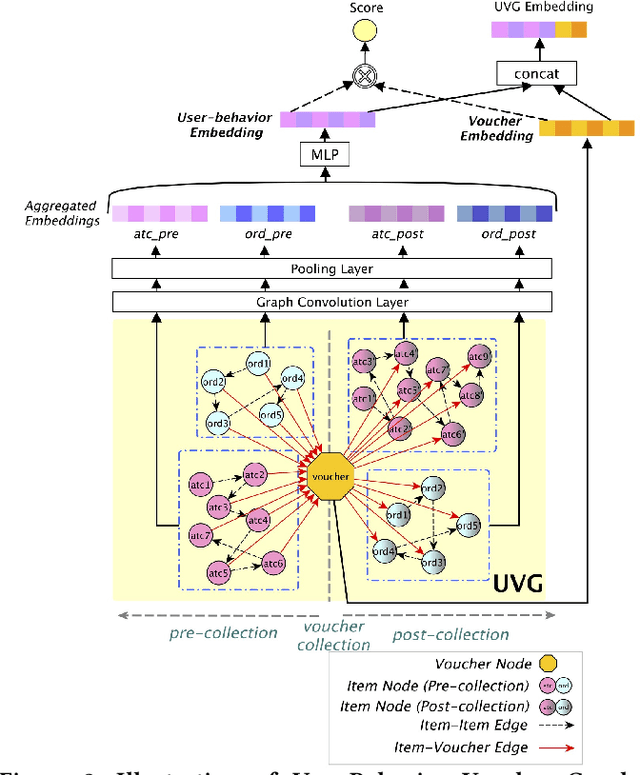

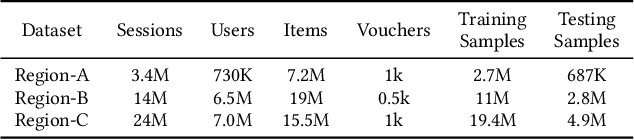

Abstract: In E-commerce, vouchers are important marketing tools for enhancing user engagement and boosting sales and revenue. The likelihood that a user redeems a voucher is a key factor in voucher distribution decisions. User-item Click-Through-Rate (CTR) models are often applied to predict the user-voucher redemption rate. However, the voucher scenario involves more complicated relations among users, items and vouchers. A user's historical behavior in a voucher collection activity reflects that user's voucher usage patterns, yet it is overlooked by CTR-based solutions. In this paper, we propose Deep Multi-behavior Graph Networks (DMBGN) for voucher redemption rate prediction. The complex structural user-voucher-item relationships are captured by a User-Behavior Voucher Graph (UVG). User behavior both before and after voucher collection is taken into consideration, and a high-level representation is extracted by Higher-order Graph Neural Networks. On top of a sequence of UVGs, an attention network is built to learn users' long-term voucher redemption preferences. Extensive experiments on three large-scale production datasets demonstrate that the proposed DMBGN model is effective, with 10% to 16% relative AUC improvement over Deep Neural Networks (DNN), and 2% to 4% AUC improvement over Deep Interest Network (DIN). Source code and a sample dataset are made publicly available to facilitate future research.

Deep Interest with Hierarchical Attention Network for Click-Through Rate Prediction

May 22, 2020

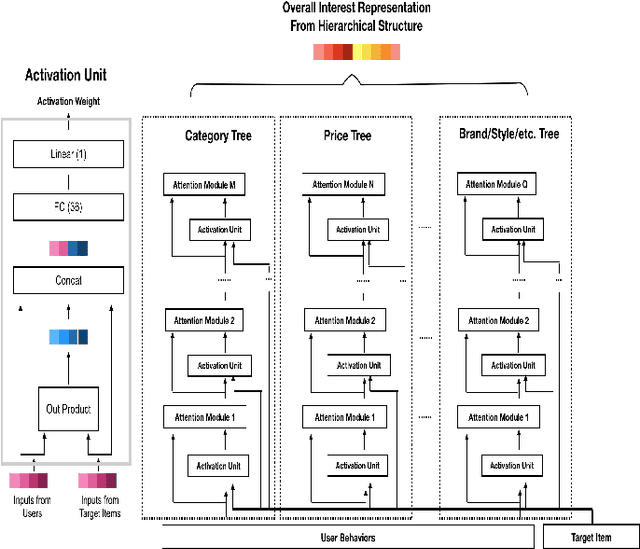

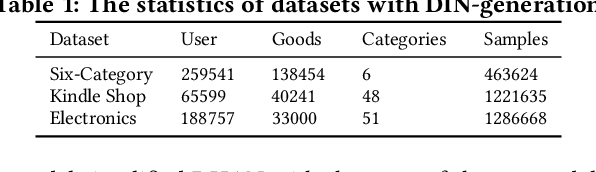

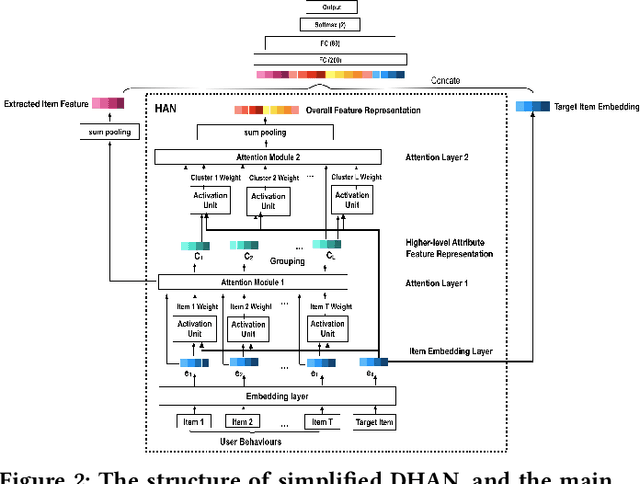

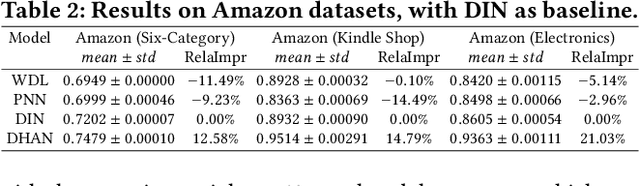

Abstract: Deep Interest Network (DIN) is a state-of-the-art model that uses an attention mechanism to capture user interests from historical behaviors. User interests intuitively follow a hierarchical pattern: users generally show interest first at a higher level and then at a lower level of abstraction. Modeling such an interest hierarchy in an attention network can fundamentally improve the representation of user behaviors. We therefore propose an improvement over DIN to model arbitrary interest hierarchies: Deep Interest with Hierarchical Attention Network (DHAN). In this model, a multi-dimensional hierarchical structure is introduced on the first attention layer, which attends to individual items, and the subsequent attention layers in the same dimension attend to higher-level hierarchies built on top of the corresponding lower layers. To enable modeling of hierarchies along multiple dimensions, an expanding mechanism is introduced to capture one-to-many hierarchies. This design enables DHAN to assign different importance to different hierarchical abstractions and thus to capture user interests along different dimensions (e.g. category, price, or brand). To validate our model, a simplified DHAN, using only a two-level, one-dimensional hierarchy by category, is applied to Click-Through Rate (CTR) prediction on three public datasets. The experimental results show the superiority of DHAN, with a significant AUC uplift of 12% to 21% over DIN. DHAN is also compared with another state-of-the-art model, Deep Interest Evolution Network (DIEN), which models temporal interest. The simplified DHAN also achieves a slight AUC uplift of 1.0% to 1.7% over DIEN. A potential direction for future work is to combine DHAN and DIEN to model both temporal and hierarchical interests.

A Multi-task Learning Approach for Improving Product Title Compression with User Search Log Data

Jan 05, 2018

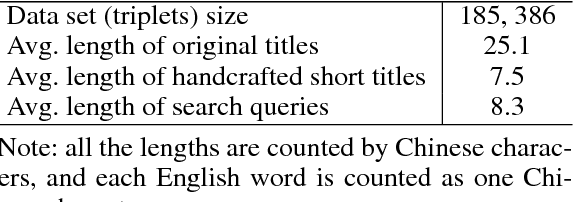

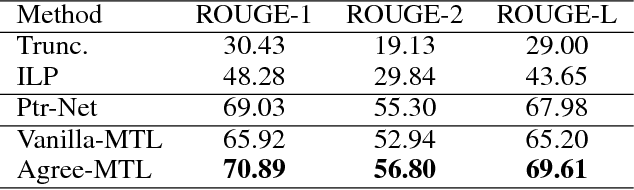

Abstract: Effectively compressing lengthy product titles for E-commerce is a challenging and practical research problem. It is particularly important as more and more users browse mobile E-commerce apps and more merchants make the original product titles redundant and lengthy for Search Engine Optimization. Traditional text summarization approaches often incur substantial preprocessing costs and do not capture the important issue of conversion rate in E-commerce. This paper proposes a novel multi-task learning approach for improving product title compression with user search log data. In particular, a pointer network-based sequence-to-sequence approach with an attentive mechanism is used as an extractive method for title compression, and an attentive encoder-decoder approach is used for generating user search queries. The encoding parameters (i.e., semantic embedding of original titles) are shared between the two tasks, and the attention distributions are jointly optimized. An extensive set of experiments with both human-annotated data and online deployment demonstrates the advantage of the proposed approach for both compression quality and online business value.
