John Taylor

PAUNet: Precipitation Attention-based U-Net for rain prediction from satellite radiance data

Nov 30, 2023

Abstract: This paper introduces Precipitation Attention-based U-Net (PAUNet), a deep learning architecture for predicting precipitation from satellite radiance data, addressing the challenges of the Weather4cast 2023 competition. PAUNet is a variant of U-Net and ResNet, designed to capture the large-scale contextual information of multi-band satellite images in visible, water vapor, and infrared bands through encoder convolutional layers with center cropping and attention mechanisms. We build upon the Focal Precipitation Loss by adding an exponential component (e-FPL), which further increases the weight given to the different precipitation categories, particularly medium and heavy rain. Trained on a substantial dataset from various European regions, PAUNet achieves a higher Critical Success Index (CSI) score than the baseline model in predicting rainfall over multiple time slots. PAUNet's architecture and training methodology showcase improvements in precipitation forecasting, crucial for sectors like emergency services and retail and supply chain management.
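The Critical Success Index mentioned in the abstract is the standard ratio of hits to the sum of hits, misses, and false alarms. The following is a minimal sketch of that metric, not the competition's scoring code: the function name, the 1.0 mm/h threshold, and the toy rain-rate values are assumptions made for illustration.

```python
import numpy as np

def critical_success_index(pred, obs, threshold):
    """CSI = hits / (hits + misses + false alarms) for events >= threshold."""
    pred_event = np.asarray(pred) >= threshold
    obs_event = np.asarray(obs) >= threshold
    hits = np.sum(pred_event & obs_event)           # predicted and observed
    misses = np.sum(~pred_event & obs_event)        # observed but not predicted
    false_alarms = np.sum(pred_event & ~obs_event)  # predicted but not observed
    denom = hits + misses + false_alarms
    # CSI is undefined when no event is predicted or observed.
    return float(hits / denom) if denom > 0 else float("nan")

# Toy rain-rate fields in mm/h (made-up values, for illustration only).
obs = np.array([[0.0, 0.5, 2.0], [5.0, 0.0, 1.0]])
pred = np.array([[0.0, 1.5, 2.5], [0.2, 0.0, 3.0]])
csi = critical_success_index(pred, obs, threshold=1.0)
print(csi)  # 2 hits, 1 miss, 1 false alarm -> 0.5
```

Correct negatives (no rain predicted, none observed) do not enter the score, which is why CSI is commonly used for rare events such as heavy rain.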

SSG2: A new modelling paradigm for semantic segmentation

Oct 12, 2023

Abstract: State-of-the-art models in semantic segmentation primarily operate on single, static images, generating corresponding segmentation masks. This one-shot approach leaves little room for error correction, as the models lack the capability to integrate multiple observations for enhanced accuracy. Inspired by work on semantic change detection, we address this limitation by introducing a methodology that leverages a sequence of observables generated for each static input image. By adding this "temporal" dimension, we exploit strong signal correlations between successive observations in the sequence to reduce error rates. Our framework, dubbed SSG2 (Semantic Segmentation Generation 2), employs a dual-encoder, single-decoder base network augmented with a sequence model. The base model learns to predict the set intersection, union, and difference of labels from dual-input images. Given a fixed target input image and a set of support images, the sequence model builds the predicted mask of the target by synthesizing the partial views from each sequence step and filtering out noise. We evaluate SSG2 across three diverse datasets: UrbanMonitor, featuring orthoimage tiles from Darwin, Australia with five spectral bands and 0.2 m spatial resolution; ISPRS Potsdam, which includes true orthophoto images with multiple spectral bands and a 5 cm ground sampling distance; and ISIC2018, a medical dataset focused on skin lesion segmentation, particularly melanoma. The SSG2 model demonstrates rapid convergence within the first few tens of epochs and significantly outperforms UNet-like baseline models with the same number of gradient updates. However, the addition of the temporal dimension results in an increased memory footprint. While this could be a limitation, it is offset by the advent of higher-memory GPUs and coding optimizations.
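To make the set-operation targets concrete, here is a minimal sketch of how intersection, union, and difference labels could be derived from the binary masks of a target image and one support image. It is not the SSG2 implementation: the helper name `set_targets`, the toy masks, and the direction of the difference (target minus support) are assumptions, since the abstract does not specify them.

```python
import numpy as np

def set_targets(target_mask, support_mask):
    """Build set-operation labels from two binary masks of the same class."""
    t = np.asarray(target_mask, dtype=bool)
    s = np.asarray(support_mask, dtype=bool)
    return {
        "intersection": t & s,   # class present in both images
        "union": t | s,          # class present in either image
        "difference": t & ~s,    # assumed direction: in target, not in support
    }

# Toy 2x3 masks for a single class (made-up values).
target = np.array([[1, 1, 0], [0, 1, 0]])
support = np.array([[1, 0, 0], [0, 1, 1]])
targets = set_targets(target, support)

# With this choice of difference, the target mask is recoverable from one
# step as intersection OR difference, which is a plain set identity.
recovered = targets["intersection"] | targets["difference"]
print(np.array_equal(recovered, target.astype(bool)))  # True
```

Each support image gives one such partial view of the target; the sequence model described in the abstract combines these views across steps.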

Earth Virtualization Engines -- A Technical Perspective

Sep 16, 2023

Abstract: Participants of the Berlin Summit on Earth Virtualization Engines (EVEs) discussed ideas and concepts to improve our ability to cope with climate change. EVEs aim to provide interactive and accessible climate simulations and data for a wide range of users. They combine high-resolution physics-based models with machine learning techniques to improve the fidelity, efficiency, and interpretability of climate projections. At their core, EVEs offer a federated data layer that enables simple and fast access to exabyte-sized climate data through simple interfaces. In this article, we summarize the technical challenges and opportunities for developing EVEs, and argue that they are essential for addressing the consequences of climate change.

Machine Learning based Parameter Sensitivity of Regional Climate Models -- A Case Study of the WRF Model for Heat Extremes over Southeast Australia

Jul 27, 2023

Abstract: Heatwaves and bushfires cause substantial impacts on society and ecosystems across the globe. Accurate information on heat extremes is needed to support the development of actionable mitigation and adaptation strategies. Regional climate models are commonly used to better understand the dynamics of these events. These models have very large input parameter sets, and the parameters within the physics schemes substantially influence the model's performance. However, parameter sensitivity analysis (SA) of regional models for heat extremes is largely unexplored. Here, we focus on the southeast Australian region, one of the global hotspots of heat extremes. In southeast Australia, the Weather Research and Forecasting (WRF) model is the most widely used regional model for simulating extreme weather events. Hence, in this study we focus on the sensitivity of surface meteorological variables such as temperature, relative humidity, and wind speed to WRF model parameters during two extreme heat events over southeast Australia. Due to the presence of multiple parameters and their complex relationship with output variables, a machine learning (ML) surrogate-based global sensitivity analysis method is considered for the SA. The ML surrogate-based Sobol SA is used to identify the sensitivity of 24 adjustable parameters in seven different physics schemes of the WRF model. Results show that out of these 24, only three parameters, namely the scattering tuning parameter, multiplier of saturated soil water content, and profile shape exponent in the momentum diffusivity coefficient, are important for the considered meteorological variables. These SA results are consistent for the two different extreme heat events. Further, we investigated the physical significance of sensitive parameters. This study's results will help in further optimising WRF parameters to improve model simulation.

Movement Analytics: Current Status, Application to Manufacturing, and Future Prospects from an AI Perspective

Oct 04, 2022

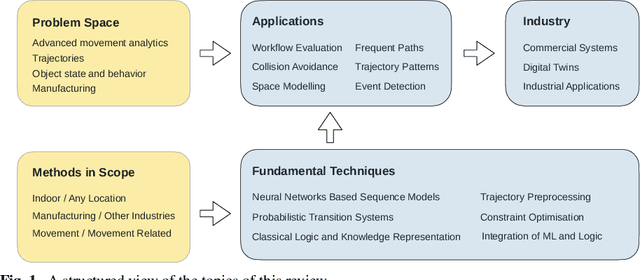

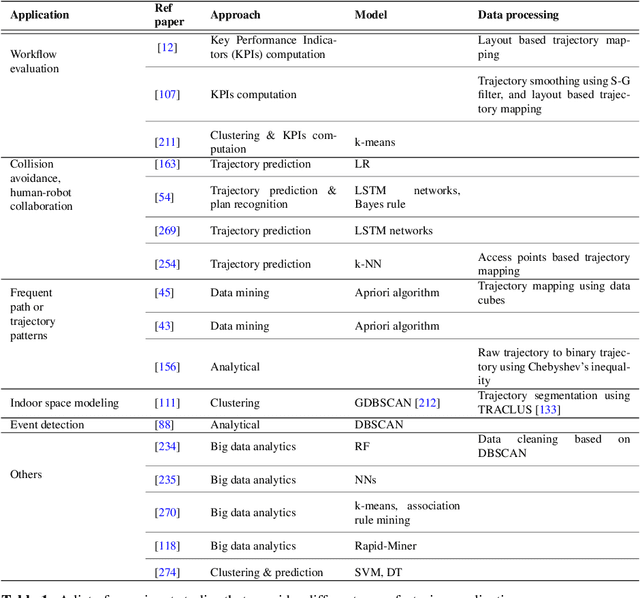

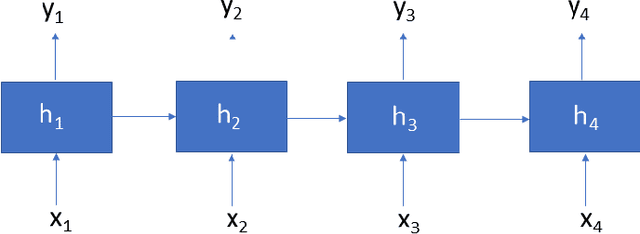

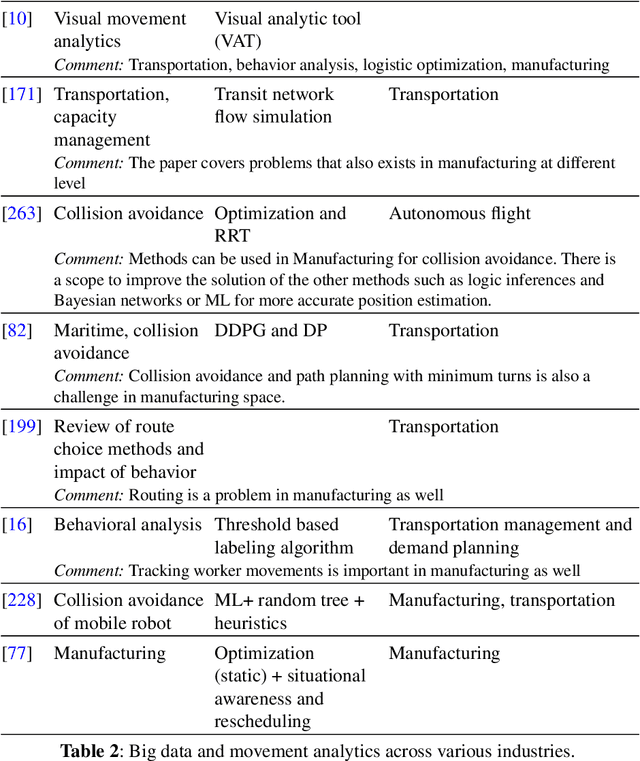

Abstract: Data-driven decision making is becoming an integral part of manufacturing companies. Data is collected and commonly used to improve efficiency and produce high-quality items for customers. IoT-based and other forms of object tracking are emerging tools for collecting movement data of objects and entities (e.g. human workers, moving vehicles, trolleys) over space and time. Movement data can provide valuable insights, such as process bottlenecks, resource utilization, and effective working time, that can be used for decision making and improving efficiency. Turning movement data into valuable information for industrial management and decision making requires analysis methods. We refer to this process as movement analytics. The purpose of this document is to review the current state of work for movement analytics both in manufacturing and more broadly. We survey relevant work from both a theoretical perspective and an application perspective. From the theoretical perspective, we put an emphasis on useful methods from two research areas: machine learning, and logic-based knowledge representation. We also review their combinations in view of movement analytics, and we discuss promising areas for future development and application. Furthermore, we touch on constraint optimization. From an application perspective, we review applications of these methods to movement analytics in a general sense and across various industries. We also describe currently available commercial off-the-shelf products for tracking in manufacturing, and we give an overview of the main concepts of digital twins and their applications.

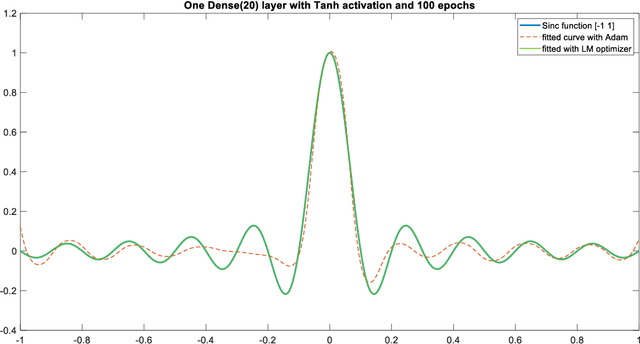

Optimizing the optimizer for data driven deep neural networks and physics informed neural networks

May 16, 2022

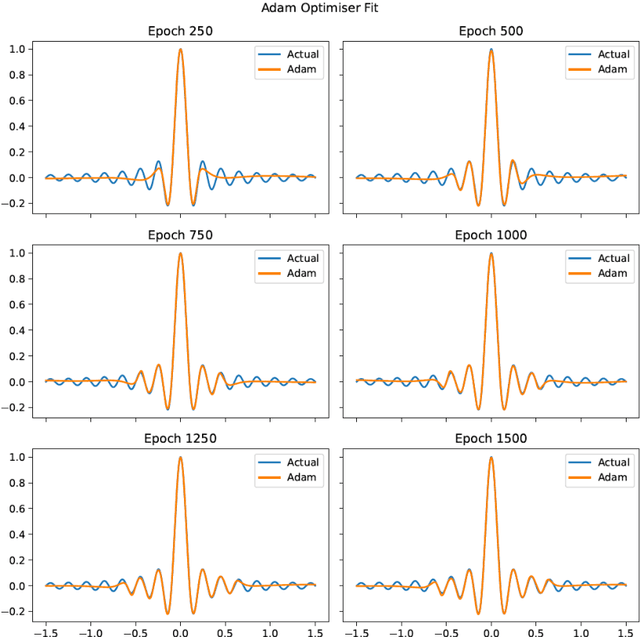

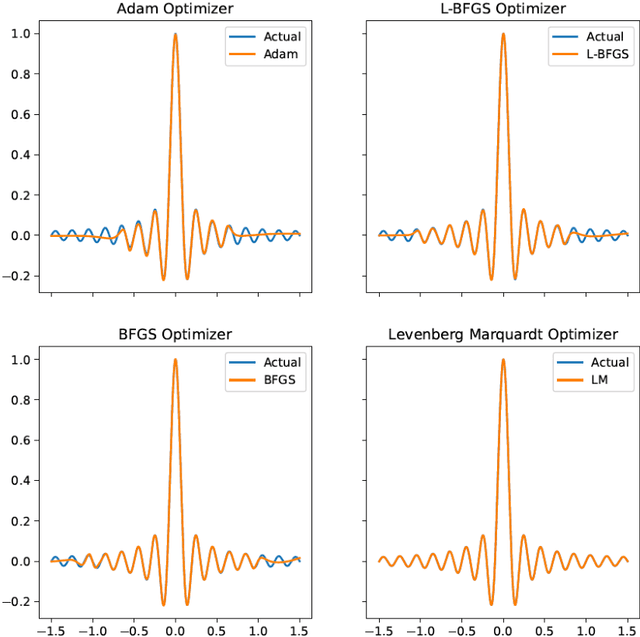

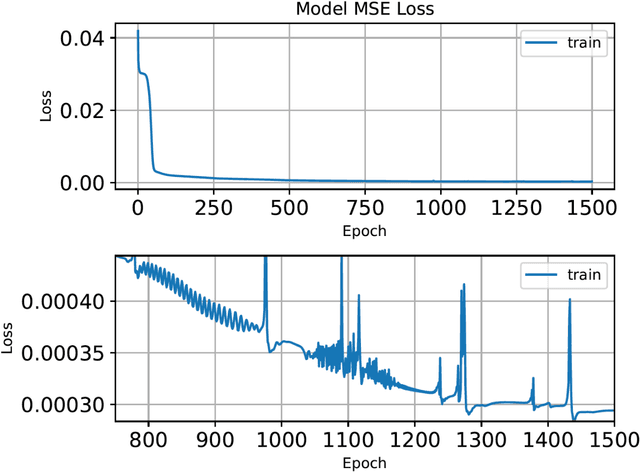

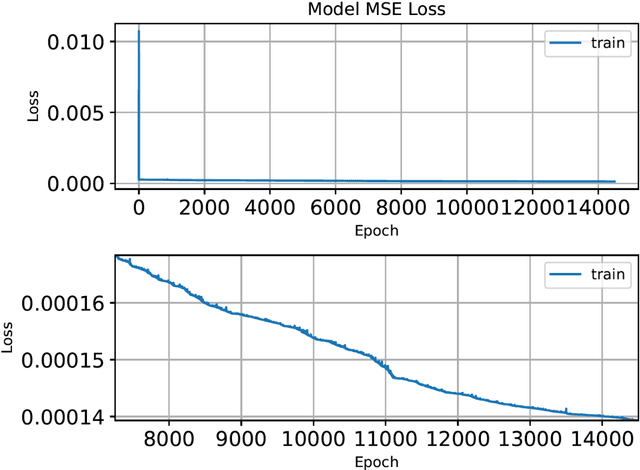

Abstract: We investigate the role of the optimizer in determining the quality of the model fit for neural networks with a small to medium number of parameters. We study the performance of Adam, an algorithm for first-order gradient-based optimization that uses adaptive momentum; the Levenberg-Marquardt (LM) algorithm, a second-order method; the Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm, a second-order method; and LBFGS, a low-memory version of BFGS. Using these optimizers we fit the function y = sinc(10x) using a neural network with a few parameters. This function has a variable amplitude and a constant frequency. We observe that the higher amplitude components of the function are fitted first and that Adam, BFGS and LBFGS struggle to fit the lower amplitude components of the function. We also solve the Burgers equation using a physics informed neural network (PINN) with the BFGS and LM optimizers. For our example problems with a small to medium number of weights, we find that the LM algorithm is able to rapidly converge to machine precision, offering significant benefits over other optimizers. We further investigated the Adam optimizer with a range of models and found that the Adam optimizer requires much deeper models with large numbers of hidden units, containing up to 26x more parameters, in order to achieve a model fit close to that achieved by the LM optimizer. The LM optimizer results illustrate that it may be possible to build models with far fewer parameters. We have implemented all our methods in Keras and TensorFlow 2.
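For readers unfamiliar with the LM algorithm, the sketch below shows its core damped Gauss-Newton step, which solves (JᵀJ + λI)δ = −Jᵀr and adapts λ depending on whether the step reduces the loss. It is a plain NumPy illustration on a two-parameter exponential model, not the authors' Keras/TensorFlow 2 implementation: the model, the starting point, the factor-of-10 damping schedule, and all function names are assumptions chosen to keep the example small.

```python
import numpy as np

def levenberg_marquardt(residual, jacobian, p0, n_iter=100, lam=1e-2):
    """Minimise 0.5 * ||residual(p)||^2 with a basic LM damping schedule."""
    p = np.asarray(p0, dtype=float)
    r = residual(p)
    loss = 0.5 * r @ r
    for _ in range(n_iter):
        J = jacobian(p)
        # Damped normal equations: (J^T J + lam * I) step = -J^T r
        A = J.T @ J + lam * np.eye(p.size)
        step = np.linalg.solve(A, -J.T @ r)
        r_new = residual(p + step)
        loss_new = 0.5 * r_new @ r_new
        if loss_new < loss:
            # Accept the step and move toward Gauss-Newton behaviour.
            p, r, loss = p + step, r_new, loss_new
            lam = max(lam / 10.0, 1e-12)
        else:
            # Reject the step and move toward small gradient-descent steps.
            lam = min(lam * 10.0, 1e12)
    return p, loss

# Hypothetical toy problem: recover a=2.0, b=1.5 in y = a * exp(-b * x).
x = np.linspace(0.0, 2.0, 50)
y = 2.0 * np.exp(-1.5 * x)

def residual(p):
    return p[0] * np.exp(-p[1] * x) - y

def jacobian(p):
    e = np.exp(-p[1] * x)
    # Columns: d(residual)/da and d(residual)/db
    return np.stack([e, -p[0] * x * e], axis=1)

p_fit, final_loss = levenberg_marquardt(residual, jacobian, p0=[1.0, 1.0])
print(p_fit, final_loss)
```

Each step solves a linear system whose size equals the number of parameters, so the per-iteration cost grows quickly with the number of weights. This is one reason LM is usually applied to small and medium-sized models.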

A Deep Learning Model for Forecasting Global Monthly Mean Sea Surface Temperature Anomalies

Feb 21, 2022

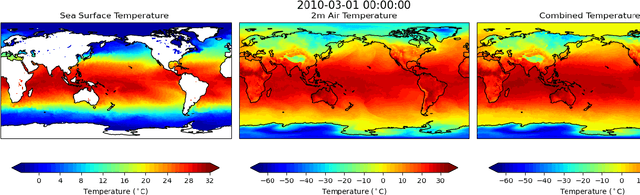

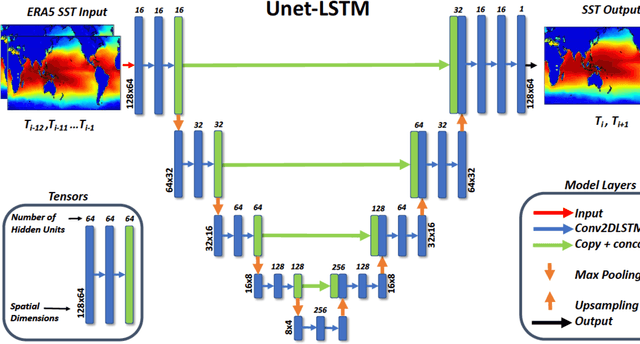

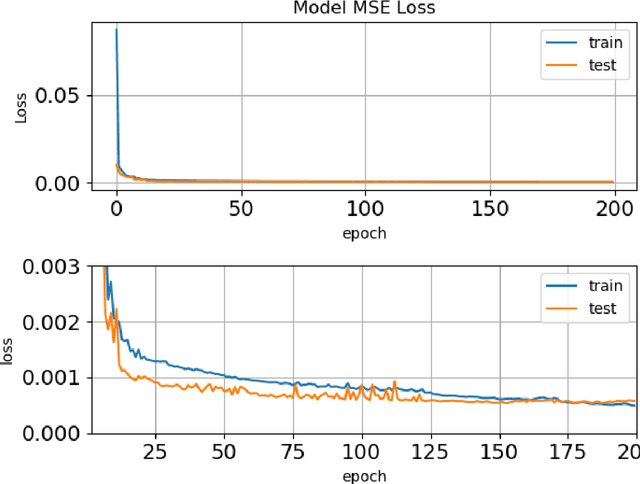

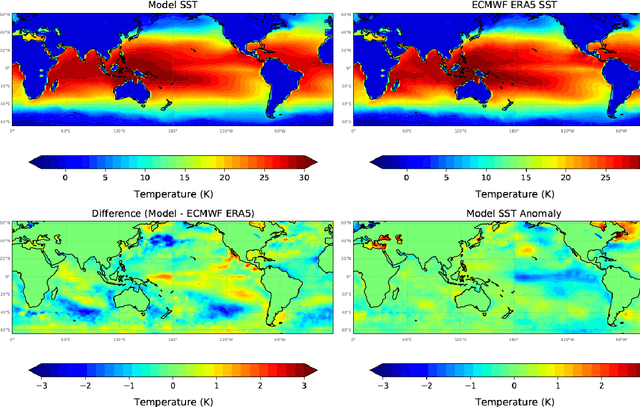

Abstract: Sea surface temperature (SST) variability plays a key role in the global weather and climate system, with phenomena such as El Niño-Southern Oscillation regarded as a major source of interannual climate variability at the global scale. The ability to make long-range forecasts of sea surface temperature anomalies, especially those associated with extreme marine heatwave events, has potentially significant economic and societal benefits. We have developed a deep learning time series prediction model (Unet-LSTM) based on more than 70 years (1950-2021) of ECMWF ERA5 monthly mean sea surface temperature and 2-metre air temperature data. The Unet-LSTM model is able to learn the underlying physics driving the temporal evolution of the 2-dimensional global sea surface temperatures. The model accurately predicts sea surface temperatures over a 24 month period with a root mean square error remaining below 0.75°C for all predicted months. We have also investigated the ability of the model to predict sea surface temperature anomalies in the Niño3.4 region, as well as a number of marine heatwave hot spots over the past decade. Model predictions of the Niño3.4 index allow us to capture the strong 2010-11 La Niña, the 2009-10 El Niño and the 2015-16 extreme El Niño up to 24 months in advance. The model also shows long-lead prediction skill for the northeast Pacific marine heatwave, the Blob. However, the prediction of the marine heatwaves in the southeast Indian Ocean, the Ningaloo Niño, shows limited skill. These results indicate the significant potential of data driven methods to yield long-range predictions of sea surface temperature anomalies.

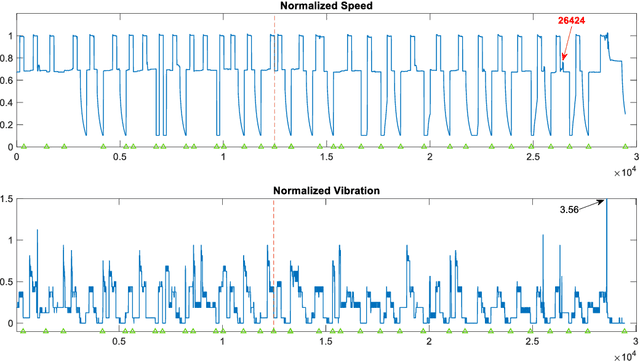

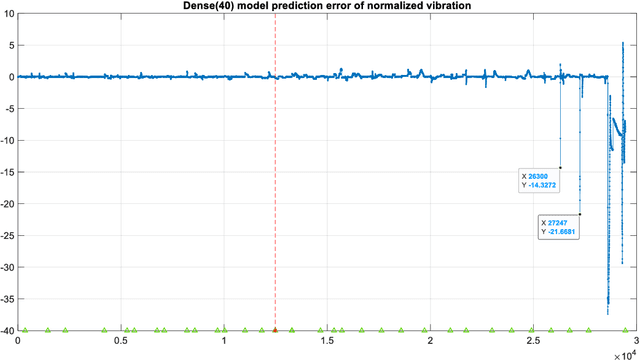

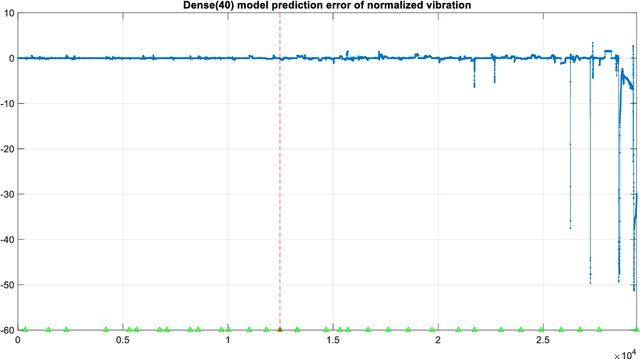

Exploiting the Power of Levenberg-Marquardt Optimizer with Anomaly Detection in Time Series

Nov 11, 2021

Abstract: The Levenberg-Marquardt (LM) optimization algorithm has been widely used for solving machine learning problems. Literature reviews have shown that LM can be very powerful and effective on moderate function approximation problems when the number of weights in the network is not more than a couple of hundred. In contrast, LM does not seem to perform as well when dealing with pattern recognition or classification problems, and is inefficient when networks become large (e.g. with more than 500 weights). In this paper, we exploit the true power of the LM algorithm using some real-world aircraft datasets. On these datasets most other commonly used optimizers are unable to detect the anomalies caused by the changing conditions of the aircraft engine. The datasets are challenging because of the abrupt changes in the time series data. We find that the LM optimizer has a much better ability to approximate abrupt changes and detect anomalies than other optimizers. We compare the performance of LM and several other optimizers in addressing this anomaly/change detection problem. We assess the relative performance based on a range of measures including network complexity (i.e. number of weights), fitting accuracy, overfitting, training time, use of GPUs, and memory requirements. We also discuss the issue of robust LM implementation in MATLAB and TensorFlow, to promote wider usage of the LM algorithm and its potential use for large-scale problems.
