Ashfaqur Rahman

Fruit Picker Activity Recognition with Wearable Sensors and Machine Learning

Apr 20, 2023

Abstract: In this paper we present a novel application of detecting fruit picker activities based on time series data generated from wearable sensors. During harvesting, fruit pickers pick fruit into wearable bags and empty these bags into harvesting bins located in the orchard. Once full, these bins are quickly transported to a cooled pack house to improve the shelf life of picked fruits. For farmers and managers, the knowledge of when a picker bag is emptied is important for managing harvesting bins more effectively to minimise the time the picked fruit is left out in the heat (resulting in reduced shelf life). We propose a means to detect these bag-emptying events using human activity recognition with wearable sensors and machine learning methods. We develop a semi-supervised approach to labelling the data. A feature-based machine learning ensemble model and a deep recurrent convolutional neural network are developed and tested on a real-world dataset. When compared, the neural network achieves 86% detection accuracy.
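As a rough illustration of what a feature-based model consumes, the sketch below slides a fixed-size window over a one-dimensional sensor signal and summarises each window with a few statistics. The function name `window_features`, the chosen statistics, the window parameters, and the sample values are all assumptions made for this example; the abstract does not state which features or window sizes the authors used.

```python
from statistics import mean, pstdev


def window_features(signal, window_size, step):
    """Summarise a 1-D signal with per-window statistics.

    Hypothetical helper: the statistics chosen here (mean, standard
    deviation, range) are illustrative, not the paper's feature set.
    """
    features = []
    # Only full windows are used; a trailing partial window is dropped.
    for start in range(0, len(signal) - window_size + 1, step):
        window = signal[start:start + window_size]
        features.append({
            "start": start,
            "mean": mean(window),
            "std": pstdev(window),
            "range": max(window) - min(window),
        })
    return features


# Made-up accelerometer magnitudes with a burst of movement in the middle.
accel = [0.0, 0.1, 0.0, 0.1, 2.0, 2.5, 2.2, 0.1, 0.0, 0.1]
feats = window_features(accel, window_size=4, step=2)
for row in feats:
    print(row)
```

Each resulting row could then be fed to a conventional classifier, with windows that overlap a bag-emptying event labelled as positive examples.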

A Semi-supervised Approach for Activity Recognition from Indoor Trajectory Data

Jan 11, 2023

Abstract: The increasingly wide usage of location-aware sensors has made it possible to collect large volumes of trajectory data in diverse application domains. Machine learning makes it possible to study the activities or behaviours of moving objects (e.g., people, vehicles, robots) using such trajectory data with rich spatiotemporal information to facilitate informed strategic and operational decision making. In this study, we consider the task of classifying the activities of moving objects from their noisy indoor trajectory data in a collaborative manufacturing environment. Activity recognition can help manufacturing companies to develop appropriate management policies, and optimise safety, productivity, and efficiency. We present a semi-supervised machine learning approach that first applies an information theoretic criterion to partition a long trajectory into a set of segments such that the object exhibits homogeneous behaviour within each segment. The segments are then labelled automatically based on a constrained hierarchical clustering method. Finally, a deep learning classification model based on convolutional neural networks is trained on trajectory segments and the generated pseudo labels. The proposed approach has been evaluated on a dataset containing indoor trajectories of multiple workers collected from a tricycle assembly workshop. The proposed approach is shown to achieve high classification accuracy (F-score varies between 0.81 and 0.95 for different trajectories) using only a small proportion of labelled trajectory segments.

An operational framework to automatically evaluate the quality of weather observations from third-party stations

Dec 05, 2022

Abstract: With an increasing number of crowdsourced private automatic weather stations (called TPAWS) established to fill gaps in the official network and obtain local weather information for various purposes, data quality is a major concern in promoting their usage. Proper quality control and assessment are necessary to reach mutual agreement on the TPAWS observations. To derive near real-time assessment for an operational system, we propose a simple, scalable and interpretable framework based on AI/Stats/ML models. The framework constructs separate models for individual data from official sources and then provides the final assessment by fusing the individual models. The performance of our proposed framework is evaluated on synthetic data and demonstrated by applying it to a real TPAWS network.

Smart Headset, Computer Vision and Machine Learning for Efficient Prawn Farm Management

Oct 14, 2022

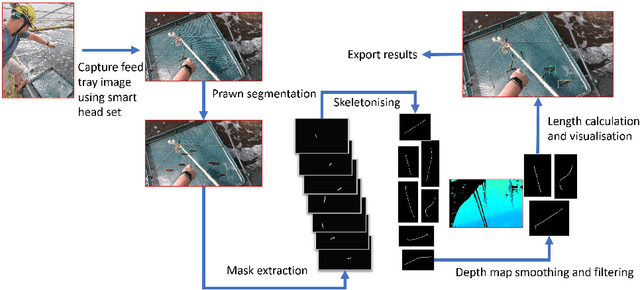

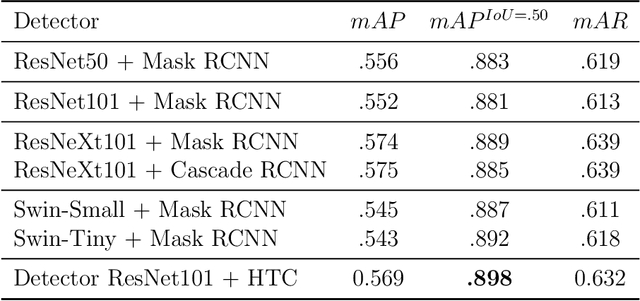

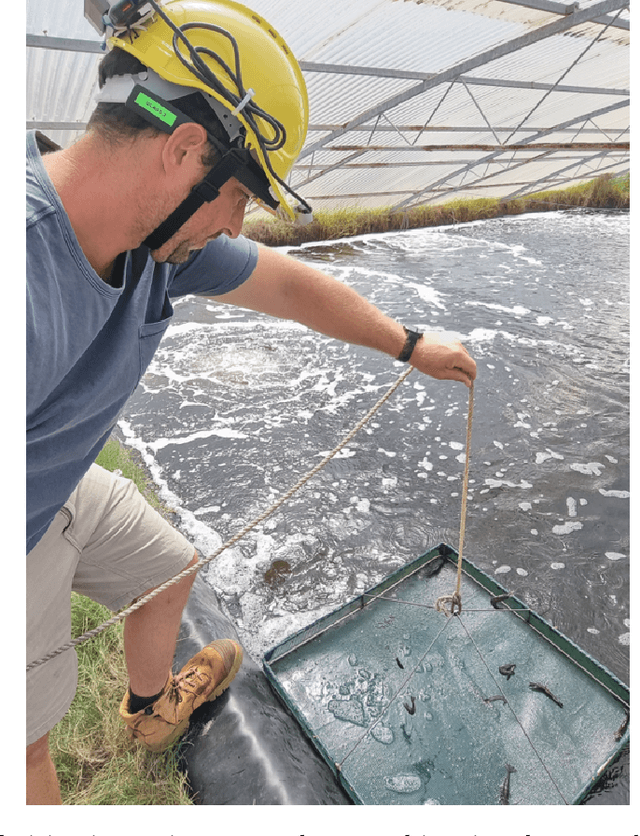

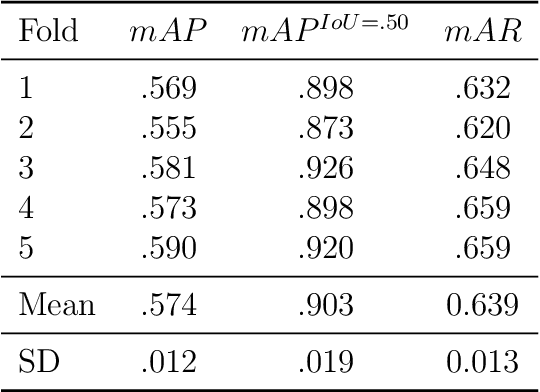

Abstract: Understanding the growth and distribution of prawns is critical for optimising feed and harvest strategies. An inadequate understanding of prawn growth can lead to reduced financial gain, for example when crops are harvested too early. The key to maintaining a good understanding of prawn growth is frequent sampling. However, the most commonly adopted sampling practice, the cast net approach, is unable to sample the prawns at a high frequency as it is expensive and laborious. An alternative approach is to sample prawns from feed trays that farm workers inspect each day. This allows growth data to be collected at a high frequency (each day), but measuring prawns manually each day is a laborious task. In this article, we propose a new approach that utilises smart glasses, a depth camera, computer vision and machine learning to detect prawn distribution and growth from feed trays. A smart headset was built to allow farmers to collect prawn data while performing daily feed tray checks. A computer vision and machine learning pipeline was developed and demonstrated to detect the growth trends of prawns in 4 prawn ponds over a growing season.

Movement Analytics: Current Status, Application to Manufacturing, and Future Prospects from an AI Perspective

Oct 04, 2022

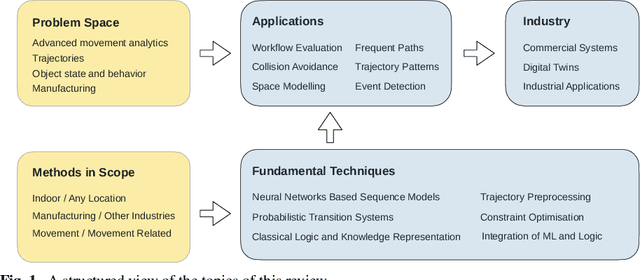

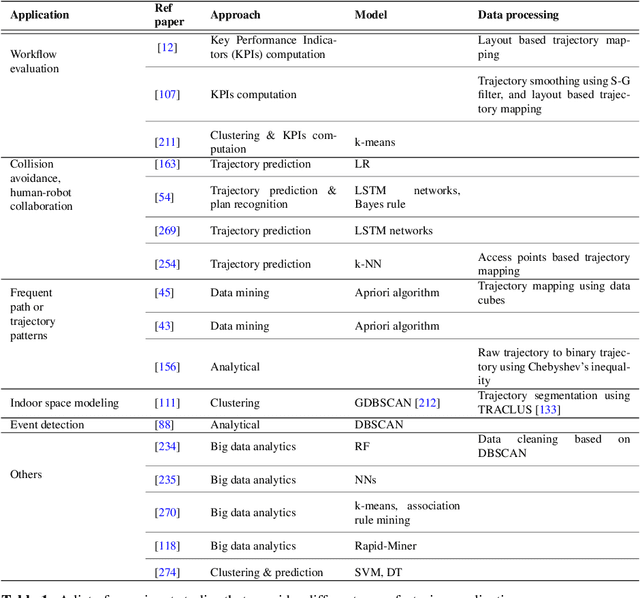

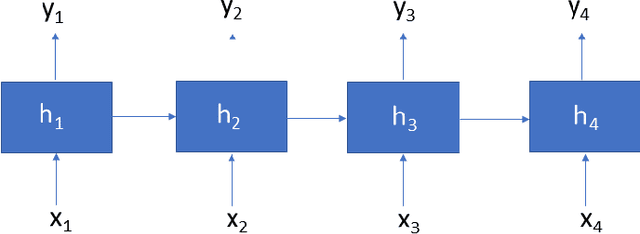

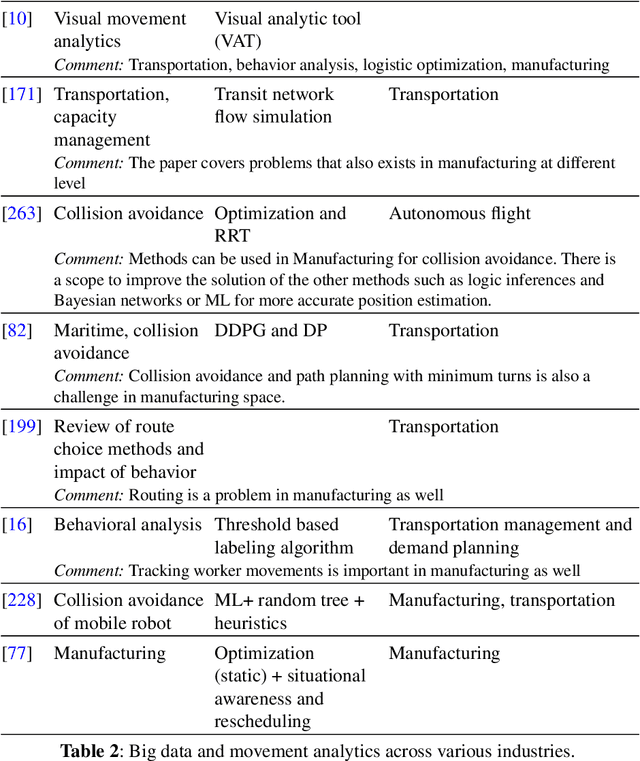

Abstract: Data-driven decision making is becoming an integral part of manufacturing companies. Data is collected and commonly used to improve efficiency and produce high quality items for the customers. IoT-based and other forms of object tracking are an emerging tool for collecting movement data of objects/entities (e.g. human workers, moving vehicles, trolleys etc.) over space and time. Movement data can provide valuable insights like process bottlenecks, resource utilization, effective working time etc. that can be used for decision making and improving efficiency. Turning movement data into valuable information for industrial management and decision making requires analysis methods. We refer to this process as movement analytics. The purpose of this document is to review the current state of work for movement analytics both in manufacturing and more broadly. We survey relevant work from both a theoretical perspective and an application perspective. From the theoretical perspective, we put an emphasis on useful methods from two research areas: machine learning, and logic-based knowledge representation. We also review their combinations in view of movement analytics, and we discuss promising areas for future development and application. Furthermore, we touch on constraint optimization. From an application perspective, we review applications of these methods to movement analytics in a general sense and across various industries. We also describe currently available commercial off-the-shelf products for tracking in manufacturing, and we overview main concepts of digital twins and their applications.

Deep Learning for Prawn Farming: Forecasting and Anomaly Detection

May 12, 2022

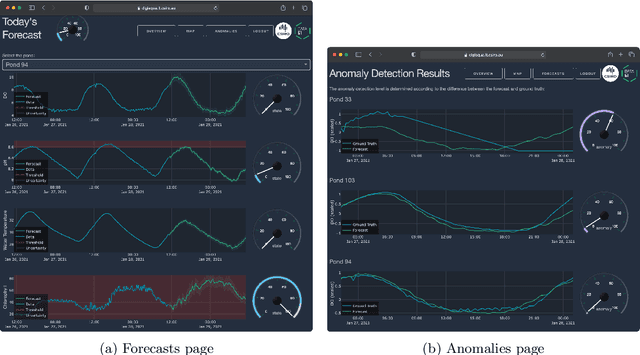

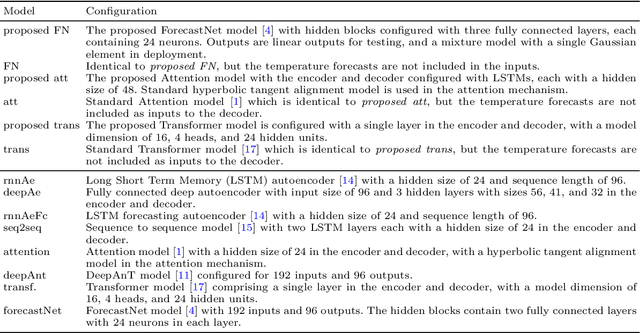

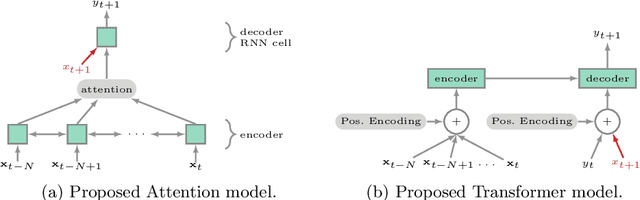

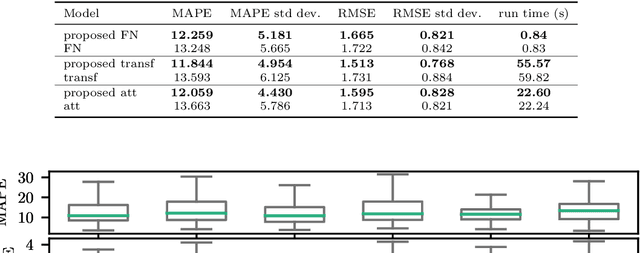

Abstract: We present a decision support system for managing water quality in prawn ponds. The system uses various sources of data and deep learning models in a novel way to provide 24-hour forecasting and anomaly detection of water quality parameters. It provides prawn farmers with tools to proactively avoid a poor growing environment, thereby optimising growth and reducing the risk of losing stock. This is a major shift for farmers who are forced to manage ponds by reactively correcting poor water quality conditions. To our knowledge, we are the first to apply Transformer as an anomaly detection model, and the first to apply anomaly detection in general to this aquaculture problem. Our technical contributions include adapting ForecastNet for multivariate data and adapting Transformer and the Attention model to incorporate weather forecast data into their decoders. We attain an average mean absolute percentage error of 12% for dissolved oxygen forecasts and we demonstrate two anomaly detection case studies. The system is successfully running in its second year of deployment on a commercial prawn farm.
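For readers unfamiliar with the reported metric, the sketch below computes mean absolute percentage error (MAPE) using its standard definition. The function name `mape` and the sample readings are hypothetical and are not taken from the paper; how the authors averaged the metric across forecast horizons or ponds is not stated in the abstract.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, in percent (standard definition)."""
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    if not actual:
        raise ValueError("at least one observation is required")
    if any(a == 0 for a in actual):
        # MAPE divides by the observed value, so zeros are undefined.
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - f) / a) for a, f in zip(actual, forecast))
    return 100.0 * total / len(actual)


# Made-up dissolved oxygen readings (mg/L) and forecasts.
observed = [4.0, 5.0, 8.0]
predicted = [5.0, 4.0, 8.0]
score = mape(observed, predicted)
print(f"MAPE: {score:.1f}%")
```

Because each error is scaled by the observed value, the metric penalises a given absolute error more heavily when the observed reading is low.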

Resource Allocation and Service Provisioning in Multi-Agent Cloud Robotics: A Comprehensive Survey

Apr 29, 2021

Abstract: Robotic applications nowadays are widely adopted to enhance operational automation and performance of real-world Cyber-Physical Systems (CPSs) including Industry 4.0, agriculture, healthcare, and disaster management. These applications are composed of latency-sensitive, data-heavy, and compute-intensive tasks. The robots, however, are constrained in the computational power and storage capacity. The concept of multi-agent cloud robotics enables robot-to-robot cooperation and creates a complementary environment for the robots in executing large-scale applications with the capability to utilize the edge and cloud resources. However, in such a collaborative environment, the optimal resource allocation for robotic tasks is challenging to achieve. Heterogeneous energy consumption rates and application of execution costs associated with the robots and computing instances make it even more complex. In addition, the data transmission delay between local robots, edge nodes, and cloud data centres adversely affects the real-time interactions and impedes service performance guarantee. Taking all these issues into account, this paper comprehensively surveys the state-of-the-art on resource allocation and service provisioning in multi-agent cloud robotics. The paper presents the application domains of multi-agent cloud robotics through explicit comparison with the contemporary computing paradigms and identifies the specific research challenges. A complete taxonomy on resource allocation is presented for the first time, together with the discussion of resource pooling, computation offloading, and task scheduling for efficient service provisioning. Furthermore, we highlight the research gaps from the learned lessons, and present future directions deemed beneficial to further advance this emerging field.

Deep Learning and Statistical Models for Time-Critical Pedestrian Behaviour Prediction

Feb 26, 2020

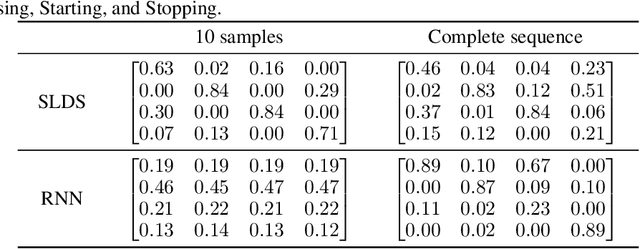

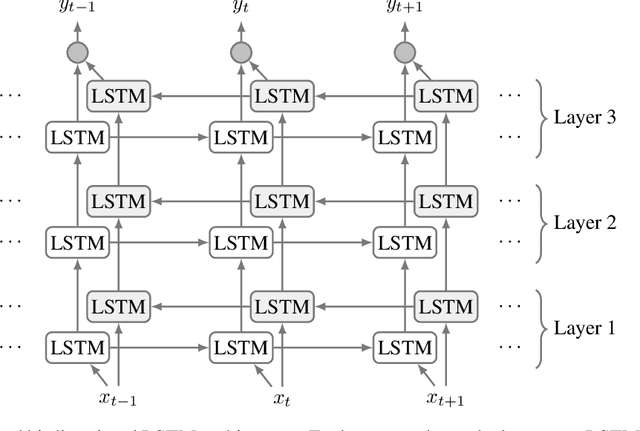

Abstract: The time it takes for a classifier to make an accurate prediction can be crucial in many behaviour recognition problems. For example, an autonomous vehicle should detect hazardous pedestrian behaviour early enough for it to take appropriate measures. In this context, we compare the switching linear dynamical system (SLDS) and a three-layered bi-directional long short-term memory (LSTM) neural network, which are applied to infer pedestrian behaviour from motion tracks. We show that, though the neural network model achieves an accuracy of 80%, it requires long sequences to achieve this (100 samples or more). The SLDS has a lower accuracy of 74%, but it achieves this result with short sequences (10 samples). To our knowledge, such a comparison on sequence length has not been considered in the literature before. The results provide a key intuition of the suitability of the models in time-critical problems.

Sequence-to-Sequence Imputation of Missing Sensor Data

Feb 25, 2020

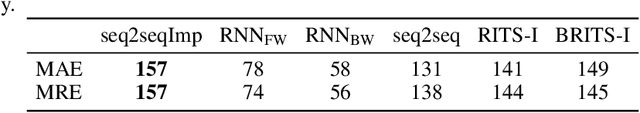

Abstract: Although the sequence-to-sequence (encoder-decoder) model is considered the state-of-the-art in deep learning sequence models, there is little research into using this model for recovering missing sensor data. The key challenge is that the missing sensor data problem typically comprises three sequences (a sequence of observed samples, followed by a sequence of missing samples, followed by another sequence of observed samples), whereas the sequence-to-sequence model only considers two sequences (an input sequence and an output sequence). We address this problem by formulating a sequence-to-sequence model in a novel way. A forward RNN encodes the data observed before the missing sequence and a backward RNN encodes the data observed after the missing sequence. A decoder decodes the two encoders in a novel way to predict the missing data. We demonstrate that this model produces the lowest errors in 12% more cases than the current state-of-the-art.
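The three-sequence structure described above can be made concrete with a small data-preparation sketch. It only splits a series around a single gap into the context a forward encoder and a backward encoder would each receive; it does not implement the encoders or the decoder. The function name `split_around_gap`, the use of `None` to mark missing samples, and the choice to reverse the trailing context for the backward encoder are assumptions made for this example, not details confirmed by the abstract.

```python
def split_around_gap(series):
    """Split a series containing one contiguous gap into three parts.

    Returns (before, gap_length, after_reversed). Missing samples are
    marked with None (an assumption of this sketch).
    """
    missing = [i for i, value in enumerate(series) if value is None]
    if not missing:
        raise ValueError("series has no missing samples")
    first, last = missing[0], missing[-1]
    if missing != list(range(first, last + 1)):
        raise ValueError("expected exactly one contiguous gap")
    before = series[:first]      # context for a forward encoder
    after = series[last + 1:]    # context for a backward encoder
    # Assumed convention: the backward encoder reads its context in
    # reverse time order, so the sample nearest the gap comes last.
    return before, len(missing), after[::-1]


readings = [1.0, 1.2, None, None, None, 2.0, 2.1]
before, gap_length, after_reversed = split_around_gap(readings)
print(before, gap_length, after_reversed)
```

A decoder would then be asked to produce `gap_length` values conditioned on both encoded contexts.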

ForecastNet: A Time-Variant Deep Feed-Forward Neural Network Architecture for Multi-Step-Ahead Time-Series Forecasting

Feb 11, 2020

Abstract: Recurrent and convolutional neural networks are the most common architectures used for time series forecasting in deep learning literature. These networks use parameter sharing by repeating a set of fixed architectures with fixed parameters over time or space. The result is that the overall architecture is time-invariant (shift-invariant in the spatial domain) or stationary. We argue that time-invariance can reduce the capacity to perform multi-step-ahead forecasting, where modelling the dynamics at a range of scales and resolutions is required. We propose ForecastNet which uses a deep feed-forward architecture to provide a time-variant model. An additional novelty of ForecastNet is interleaved outputs, which we show assist in mitigating vanishing gradients. ForecastNet is demonstrated to outperform statistical and deep learning benchmark models on several datasets.
