John Chen

Mah Parsa

Vox Deorum: A Hybrid LLM Architecture for 4X / Grand Strategy Game AI -- Lessons from Civilization V

Dec 21, 2025

Abstract:Large Language Models' capacity to reason in natural language makes them uniquely promising for 4X and grand strategy games, enabling more natural human-AI gameplay interactions such as collaboration and negotiation. However, these games present unique challenges due to their complexity and long-horizon nature, while latency and cost factors may hinder LLMs' real-world deployment. Working on a classic 4X strategy game, Sid Meier's Civilization V with the Vox Populi mod, we introduce Vox Deorum, a hybrid LLM+X architecture. Our layered technical design empowers LLMs to handle macro-strategic reasoning, delegating tactical execution to subsystems (e.g., algorithmic AI or reinforcement learning AI in the future). We validate our approach through 2,327 complete games, comparing two open-source LLMs with a simple prompt against Vox Populi's enhanced AI. Results show that LLMs achieve competitive end-to-end gameplay while exhibiting play styles that diverge substantially from algorithmic AI and from each other. Our work establishes a viable architecture for integrating LLMs in commercial 4X games, opening new opportunities for game design and agentic AI research.

A Computational Method for Measuring "Open Codes" in Qualitative Analysis

Nov 19, 2024

Abstract:Qualitative analysis is critical to understanding human datasets in many social science disciplines. Open coding is an inductive qualitative process that identifies and interprets "open codes" from datasets. Yet, meeting methodological expectations (such as "as exhaustive as possible") can be challenging. While many machine learning (ML)/generative AI (GAI) studies have attempted to support open coding, few have systematically measured or evaluated GAI outcomes, increasing potential bias risks. Building on Grounded Theory and Thematic Analysis theories, we present a computational method to measure and identify potential biases from "open codes" systematically. Instead of operationalizing human expert results as the "ground truth," our method is built upon a team-based approach between human and machine coders. We experiment with two HCI datasets to establish this method's reliability by 1) comparing it with human analysis, and 2) analyzing its output stability. We present evidence-based suggestions and example workflows for ML/GAI to support open coding.

Prompts Matter: Comparing ML/GAI Approaches for Generating Inductive Qualitative Coding Results

Nov 10, 2024

Abstract:Inductive qualitative methods have been a mainstay of education research for decades, yet they take much time and effort to conduct rigorously. Recent advances in artificial intelligence, particularly with generative AI (GAI), have led to initial success in generating inductive coding results. Like human coders, GAI tools rely on instructions to work, and how they are instructed may matter. To understand how ML/GAI approaches could contribute to qualitative coding processes, this study applied two known and two theory-informed novel approaches to an online community dataset and evaluated the resulting coding results. Our findings show significant discrepancies between ML/GAI approaches and demonstrate the advantage of our approaches, which introduce human coding processes into GAI prompts.

A Network Simulation of OTC Markets with Multiple Agents

May 03, 2024

Abstract:We present a novel agent-based approach to simulating an over-the-counter (OTC) financial market in which trades are intermediated solely by market makers and agent visibility is constrained to a network topology. Dynamics, such as changes in price, result from agent-level interactions that ubiquitously occur via market maker agents acting as liquidity providers. Two additional agents are considered: trend investors use a deep convolutional neural network paired with a deep Q-learning framework to inform trading decisions by analysing price history; and value investors use a static price target to determine their trade directions and sizes. We demonstrate that our novel inclusion of a network topology with market makers facilitates explorations into various market structures. First, we present the model and an overview of its mechanics. Second, we validate our findings via comparison to the real world: we demonstrate a fat-tailed distribution of price changes, auto-correlated volatility, a skew negatively correlated to market maker positioning, predictable price-history patterns and more. Finally, we demonstrate that our network-based model can lend insights into the effect of market structure on price action. For example, we show that markets with sparsely connected intermediaries can have a critical point of fragmentation, beyond which the market forms distinct clusters and arbitrage rapidly becomes possible between the prices of different market makers. A discussion of beneficial future work is provided.

Fast FixMatch: Faster Semi-Supervised Learning with Curriculum Batch Size

Sep 07, 2023

Abstract:Advances in Semi-Supervised Learning (SSL) have almost entirely closed the gap between SSL and Supervised Learning at a fraction of the number of labels. However, recent performance improvements have often come at the cost of significantly increased training computation. To address this, we propose Curriculum Batch Size (CBS), an unlabeled batch size curriculum which exploits the natural training dynamics of deep neural networks. A small unlabeled batch size is used at the beginning of training and is gradually increased towards the end of training. A fixed curriculum is used regardless of dataset, model or number of epochs, and reduced training computations are demonstrated in all settings. We apply CBS, strong labeled augmentation, and Curriculum Pseudo Labeling (CPL, from FlexMatch) to FixMatch and term the new SSL algorithm Fast FixMatch. We perform an ablation study to show that strong labeled augmentation and/or CPL do not significantly reduce training computations, but, in synergy with CBS, they achieve optimal performance. Fast FixMatch also achieves substantially higher data utilization compared to the previous state of the art. Fast FixMatch achieves between $2.1\times$ and $3.4\times$ reduced training computations on CIFAR-10 with all but 40, 250 and 4000 labels removed, compared to vanilla FixMatch, while attaining the same state-of-the-art error rate cited for FixMatch. Similar results are achieved for CIFAR-100, SVHN and STL-10. Finally, Fast MixMatch achieves between $2.6\times$ and $3.3\times$ reduced training computations in federated SSL tasks and online/streaming learning SSL tasks, which further demonstrates the generalizability of Fast MixMatch to different scenarios and tasks.
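
The abstract states only that the unlabeled batch size starts small and grows gradually over training; it does not give the shape of the curriculum. As a minimal sketch of the idea, assuming a simple linear ramp (the function name, the linear shape, and the `min_batch_size` parameter are all illustrative assumptions, not the paper's actual curriculum):

```python
def curriculum_batch_size(step, total_steps, max_batch_size, min_batch_size=1):
    """Return the unlabeled batch size to use at a given training step.

    Hypothetical linear curriculum: starts at min_batch_size and grows to
    max_batch_size by the final step. The real CBS curve may differ.
    """
    if total_steps <= 1:
        return max_batch_size
    # Fraction of training completed, clamped to [0, 1].
    progress = min(max(step / (total_steps - 1), 0.0), 1.0)
    size = min_batch_size + progress * (max_batch_size - min_batch_size)
    return int(round(size))


if __name__ == "__main__":
    # Print the batch size at a few points of a 100-step run.
    for step in (0, 25, 50, 75, 99):
        print(step, curriculum_batch_size(step, 100, max_batch_size=448, min_batch_size=64))
```

Because early steps process fewer unlabeled samples, the total number of forward and backward passes over unlabeled data drops, which is the source of the computational savings the abstract describes.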

ChatLogo: A Large Language Model-Driven Hybrid Natural-Programming Language Interface for Agent-based Modeling and Programming

Aug 16, 2023

Abstract:Building on Papert (1980)'s idea of children talking to computers, we propose ChatLogo, a hybrid natural-programming language interface for agent-based modeling and programming. We build upon previous efforts to scaffold ABM & P learning and recent development in leveraging large language models (LLMs) to support the learning of computational programming. ChatLogo aims to support conversations with computers in a mix of natural and programming languages, provide a more user-friendly interface for novice learners, and keep the technical system from over-reliance on any single LLM. We introduced the main elements of our design: an intelligent command center, and a conversational interface to support creative expression. We discussed the presentation format and future work. Responding to the challenges of supporting open-ended constructionist learning of ABM & P and leveraging LLMs for educational purposes, we contribute to the field by proposing the first constructionist LLM-driven interface to support computational and complex systems thinking.

REX: Revisiting Budgeted Training with an Improved Schedule

Jul 09, 2021

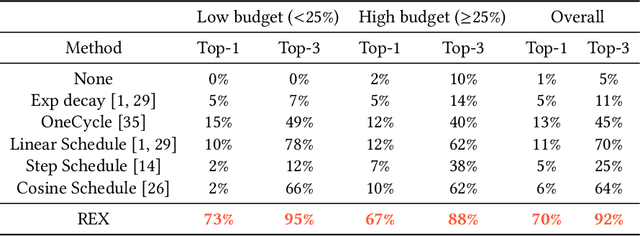

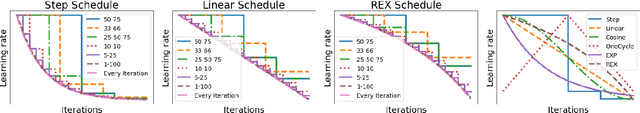

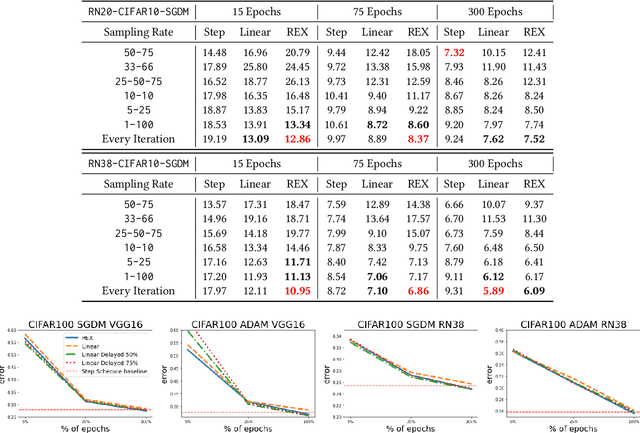

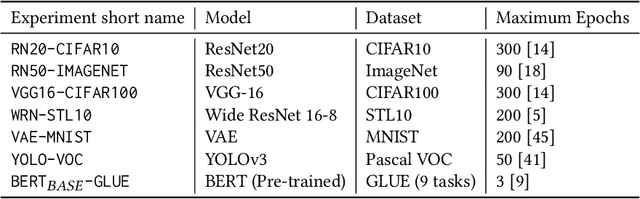

Abstract:Deep learning practitioners often operate on a computational and monetary budget. Thus, it is critical to design optimization algorithms that perform well under any budget. The linear learning rate schedule is considered the best budget-aware schedule, as it outperforms most other schedules in the low budget regime. On the other hand, learning rate schedules such as the 30-60-90 step schedule are known to achieve high performance when the model can be trained for many epochs. Yet, it is often not known a priori whether one's budget will be large or small; thus, the optimal choice of learning rate schedule is made on a case-by-case basis. In this paper, we frame the learning rate schedule selection problem as a combination of (i) selecting a profile (i.e., the continuous function that models the learning rate schedule), and (ii) choosing a sampling rate (i.e., how frequently the learning rate is updated/sampled from this profile). We propose a novel profile and sampling rate combination called the Reflected Exponential (REX) schedule, which we evaluate across seven different experimental settings with both SGD and Adam optimizers. REX outperforms the linear schedule in the low budget regime, while matching or exceeding the performance of several state-of-the-art learning rate schedules (linear, step, exponential, cosine, step decay on plateau, and OneCycle) in both high and low budget regimes. Furthermore, REX requires no added computation, storage, or hyperparameters.
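
The profile/sampling-rate framing can be sketched in a few lines. The abstract does not state the REX formula, so the `rex_profile` below is an assumption based on the commonly quoted form $(1 - z) / (\tfrac{1}{2} + \tfrac{1}{2}(1 - z))$ with $z = t/T$; the function names and the `update_every` parameter are likewise illustrative and should be checked against the paper.

```python
def linear_profile(z):
    """Linear decay from 1 to 0 as training progress z goes from 0 to 1."""
    return 1.0 - z


def rex_profile(z):
    """Assumed REX profile (not given in the abstract; verify against the paper)."""
    return (1.0 - z) / (0.5 + 0.5 * (1.0 - z))


def scheduled_lr(base_lr, step, total_steps, profile, update_every=1):
    """Sample a learning rate from a continuous profile.

    update_every is the sampling rate: the learning rate only changes once
    every `update_every` steps and is held constant in between.
    """
    sampled_step = (step // update_every) * update_every
    z = min(sampled_step / total_steps, 1.0)
    return base_lr * profile(z)


if __name__ == "__main__":
    # Compare the two profiles at a few points of a 100-step budget.
    for step in (0, 25, 50, 75, 100):
        print(step,
              scheduled_lr(0.1, step, 100, linear_profile),
              scheduled_lr(0.1, step, 100, rex_profile))
```

Under this assumed form, the REX profile stays above the linear profile for most of training and then drops to zero at the end of the budget. Neither profile adds hyperparameters beyond the base learning rate and the total step count.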

Mitigating deep double descent by concatenating inputs

Jul 02, 2021

Abstract:The double descent curve is one of the most intriguing properties of deep neural networks. It contrasts the classical bias-variance curve with the behavior of modern neural networks, occurring where the number of samples nears the number of parameters. In this work, we explore the connection between the double descent phenomenon and the number of samples in the deep neural network setting. In particular, we propose a construction which augments the existing dataset by artificially increasing the number of samples. This construction empirically mitigates the double descent curve in this setting. We reproduce existing work on deep double descent, and observe a smooth descent into the overparameterized region for our construction. This occurs both with respect to the model size, and with respect to the number of epochs.

Transformer-Based Models for Question Answering on COVID19

Jan 16, 2021

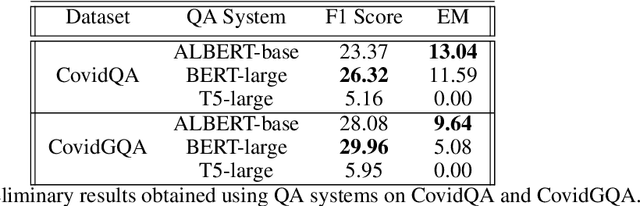

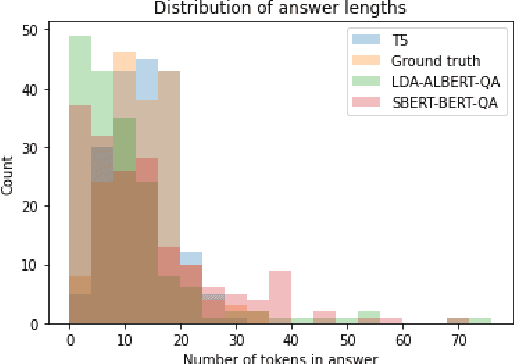

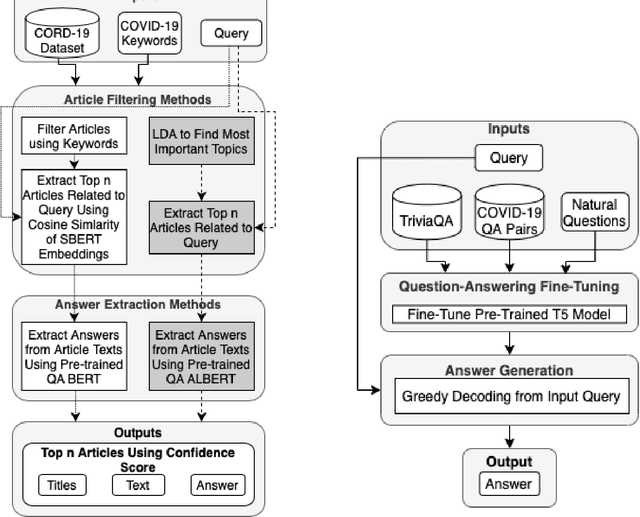

Abstract:In response to Kaggle's COVID-19 Open Research Dataset (CORD-19) challenge, we have proposed three transformer-based question-answering systems using BERT, ALBERT, and T5 models. Since the CORD-19 dataset is unlabeled, we have evaluated the question-answering models' performance on two labeled question-answer datasets: CovidQA and CovidGQA. The BERT-based QA system achieved the highest F1 score (26.32), while the ALBERT-based QA system achieved the highest Exact Match (13.04). However, numerous challenges are associated with developing high-performance question-answering systems for the ongoing COVID-19 pandemic and future pandemics. At the end of this paper, we discuss these challenges and suggest potential solutions to address them.
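
For readers unfamiliar with the two metrics, the sketch below shows how Exact Match and token-level F1 are commonly computed for extractive question answering. It follows the SQuAD-style convention (lowercasing, stripping punctuation and English articles); the abstract does not say which implementation the authors used, so this choice and all function names are assumptions.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and English articles, and split into tokens."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction, gold):
    """1.0 if the normalized answers are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction, gold):
    """Harmonic mean of token-level precision and recall."""
    pred_tokens, gold_tokens = normalize(prediction), normalize(gold)
    if not pred_tokens or not gold_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(exact_match("The incubation period", "incubation period"))
    print(f1_score("incubation period of 5 days", "5 days"))
```

Scores such as those quoted in the abstract are typically these per-question values averaged over the dataset and multiplied by 100.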

ImCLR: Implicit Contrastive Learning for Image Classification

Nov 25, 2020

Abstract:Contrastive learning is an effective method for learning visual representations. In most cases, this involves adding an explicit loss function to encourage similar images to have similar representations, and different images to have different representations. Inspired by contrastive learning, we introduce a clever input construction for Implicit Contrastive Learning (ImCLR), primarily in the supervised setting: there, the network can implicitly learn to differentiate between similar and dissimilar images. Each input is presented as a concatenation of two images, and the label is the mean of the two one-hot labels. Furthermore, this requires almost no change to existing pipelines, which allows for easy integration and for fair demonstration of effectiveness on a wide range of well-accepted benchmarks. Namely, there is no change to loss, no change to hyperparameters, and no change to general network architecture. We show that ImCLR improves the test error in the supervised setting across a variety of settings, including 3.24% on Tiny ImageNet, 1.30% on CIFAR-100, 0.14% on CIFAR-10, and 2.28% on STL-10. We show that this holds across different numbers of labeled samples, maintaining approximately a 2% gap in test accuracy down to using only 5% of the whole dataset. We further show that gains hold for robustness to common input corruptions and perturbations at varying severities, with a 0.72% improvement on CIFAR-100-C, and in the semi-supervised setting, with a 2.16% improvement with the standard benchmark $\Pi$-model. We demonstrate that ImCLR is complementary to existing data augmentation techniques, achieving over 1% improvement on CIFAR-100 and 2% improvement on Tiny ImageNet by combining ImCLR with CutMix over either baseline, and 2% by combining ImCLR with AutoAugment over either baseline.
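
The input construction described above (two images concatenated, label set to the mean of the two one-hot labels) can be illustrated with plain Python lists. This is a minimal sketch: the abstract does not say along which axis the images are joined or how pairs are chosen, so the side-by-side concatenation and the helper names here are assumptions.

```python
def one_hot(label, num_classes):
    """Return a one-hot vector for an integer class label."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def concat_pair(image_a, label_a, image_b, label_b, num_classes):
    """Build one ImCLR-style training example from two labeled images.

    Images are lists of pixel rows with equal height. They are joined
    side by side (an assumed choice of axis), and the target is the
    element-wise mean of the two one-hot labels.
    """
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same height")
    image = [row_a + row_b for row_a, row_b in zip(image_a, image_b)]
    target = [
        (a + b) / 2
        for a, b in zip(one_hot(label_a, num_classes), one_hot(label_b, num_classes))
    ]
    return image, target


if __name__ == "__main__":
    # Two tiny 2x2 "images" with class labels 0 and 2 out of 3 classes.
    image, target = concat_pair([[1, 2], [3, 4]], 0, [[5, 6], [7, 8]], 2, num_classes=3)
    print(image)
    print(target)
```

When both images share a class the target reduces to an ordinary one-hot vector, and otherwise it places 0.5 on each class, so a standard cross-entropy loss with soft targets applies without modification, consistent with the abstract's claim that the loss is unchanged.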
