Grace Wang

Prompts Matter: Comparing ML/GAI Approaches for Generating Inductive Qualitative Coding Results

Nov 10, 2024

Abstract: Inductive qualitative methods have been a mainstay of education research for decades, yet they take much time and effort to conduct rigorously. Recent advances in artificial intelligence, particularly generative AI (GAI), have led to initial success in generating inductive coding results. Like human coders, GAI tools rely on instructions to work, and how they are instructed may matter. To understand how ML/GAI approaches could contribute to qualitative coding processes, this study applied two known and two theory-informed novel approaches to an online community dataset and evaluated the resulting coding results. Our findings show significant discrepancies between ML/GAI approaches and demonstrate the advantage of our approaches, which introduce human coding processes into GAI prompts.

Quantifying the Uniqueness of Donald Trump in Presidential Discourse

Jan 02, 2024

Abstract: Does Donald Trump speak differently from other presidents? If so, in what ways? Are these differences confined to any single medium of communication? To investigate these questions, this paper introduces a novel metric of uniqueness based on large language models, develops a new lexicon for divisive speech, and presents a framework for comparing the lexical features of political opponents. Applying these tools to a variety of corpora of presidential speeches, we find considerable evidence that Trump's speech patterns diverge from those of all major party nominees for the presidency in recent history. Some notable findings include Trump's employment of particularly divisive and antagonistic language targeting his political opponents and his patterns of repetition for emphasis. Furthermore, Trump is significantly more distinctive than his fellow Republicans, whose uniqueness values are comparatively closer to those of the Democrats. These differences hold across a variety of measurement strategies, arise both on the campaign trail and in official presidential addresses, and do not appear to be an artifact of secular time trends.

Correlations Between COVID-19 and Dengue

Jul 27, 2022

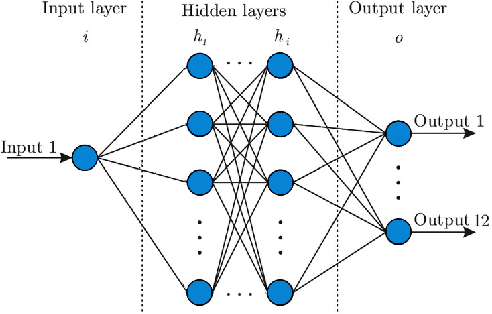

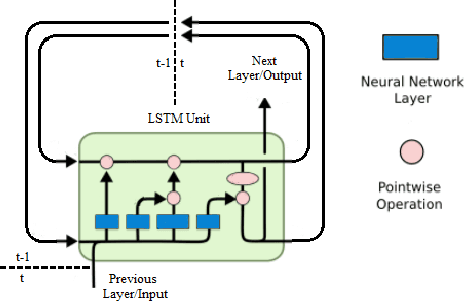

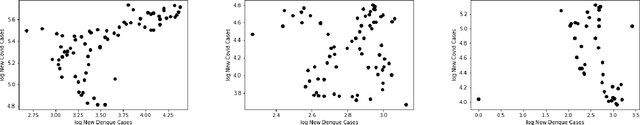

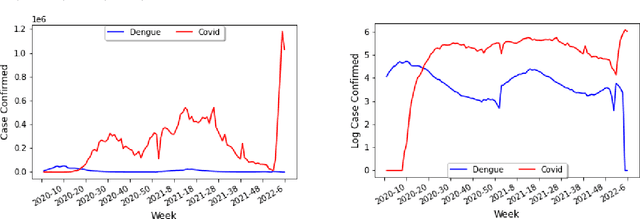

Abstract: A dramatic increase in the number of Dengue outbreaks has recently been reported, and climate change is likely to extend the geographical spread of the disease. In this context, this paper shows how a neural network approach can incorporate Dengue and COVID-19 data as well as external factors (such as social behaviour or climate variables) to develop predictive models that could improve our knowledge and provide useful tools for health policy makers. Using neural networks with different social and natural parameters, we define a Correlation Model through which we show that the numbers of COVID-19 and Dengue cases follow very similar trends. We then illustrate the relevance of our model by extending it to a long short-term memory (LSTM) model that incorporates both diseases, and using this to estimate Dengue infections via COVID-19 data in countries that lack sufficient Dengue data.

Ensemble plasticity and network adaptability in SNNs

Mar 11, 2022

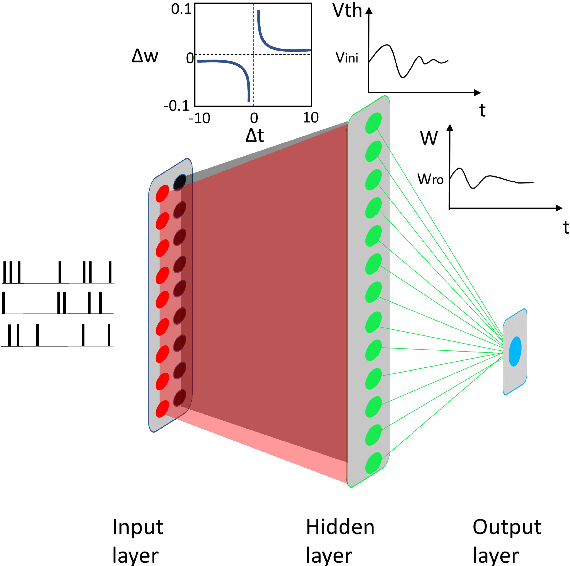

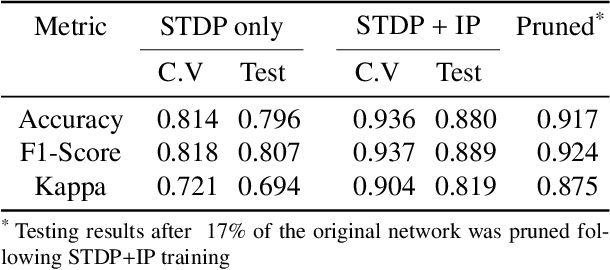

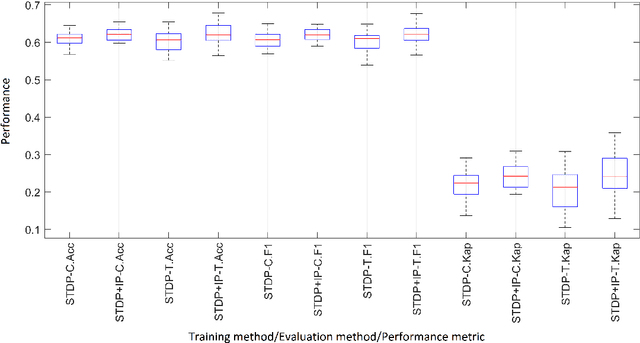

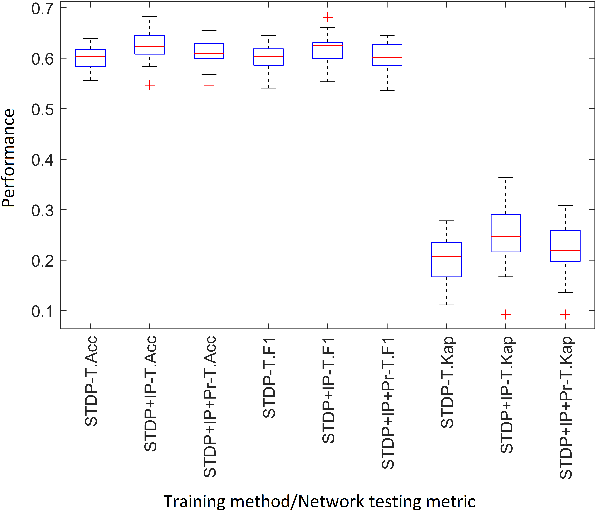

Abstract: Artificial Spiking Neural Networks (ASNNs) promise greater information processing efficiency because of discrete event-based (i.e., spike) computation. Several Machine Learning (ML) applications use biologically inspired plasticity mechanisms as unsupervised learning techniques to increase the robustness of ASNNs while preserving efficiency. Spike Time Dependent Plasticity (STDP) and Intrinsic Plasticity (IP) (i.e., dynamic spiking threshold adaptation) are two such mechanisms that have been combined to form an ensemble learning method. However, it is not clear how this ensemble learning should be regulated based on spiking activity. Moreover, previous studies have attempted threshold-based synaptic pruning following STDP to increase inference efficiency at the cost of performance in ASNNs. However, this type of structural adaptation, which employs individual weight mechanisms, does not consider spiking activity for pruning, even though spiking activity is a better representation of input stimuli. We envisaged that plasticity-based spike regulation and spike-based pruning would result in ASNNs that perform better in low-resource situations. In this paper, a novel ensemble learning method based on entropy and network activation is introduced and combined with a spike-rate neuron pruning technique that operates exclusively on spiking activity. Two electroencephalography (EEG) datasets are used as the input for classification experiments with a three-layer feed-forward ASNN trained using one-pass learning. During the learning process, we observed neurons assembling into a hierarchy of clusters based on spiking rate. Pruning lower spike-rate neuron clusters resulted in either increased generalization or a predictable decline in performance.
