Jinhu Lü

School of Automation Science and Electrical Engineering, Beihang University, Beijing, China; Zhongguancun Laboratory, Beijing, China

Meanshift Shape Formation Control Using Discrete Mass Distribution

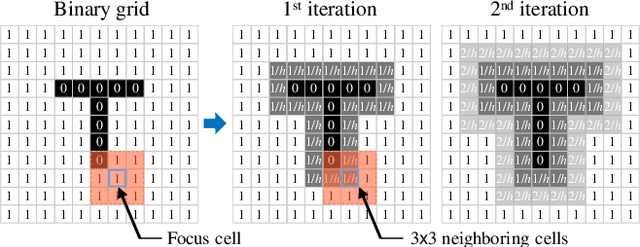

Feb 01, 2026

Abstract:The density-distribution method has recently become a promising paradigm owing to its adaptability to variations in swarm size. However, existing studies face practical challenges in achieving complex shape representation and decentralized implementation. This motivates us to develop a fully decentralized, distribution-based control strategy with the dual capability of forming complex shapes and adapting to swarm-size variations. Specifically, we first propose a discrete mass-distribution function defined over a set of sample points to model swarm formation. In contrast to the continuous density-distribution method, our model eliminates the requirement for defining continuous density functions, a task that is difficult for complex shapes. Second, we design a decentralized meanshift control law that coordinates the swarm's global distribution to fit the sample-point distribution by feeding back mass estimates. The robots obtain the mass estimates for all sample points in a decentralized manner via the designed mass estimator. It is shown that the mass estimates of the sample points asymptotically converge to the true global values. To validate the proposed strategy, we conduct comprehensive simulations and real-world experiments to evaluate the efficiency of complex shape formation and adaptability to swarm-size variations.

Bidirectional Task-Motion Planning Based on Hierarchical Reinforcement Learning for Strategic Confrontation

Apr 22, 2025

Abstract:In swarm robotics, confrontation scenarios, including strategic confrontations, require efficient decision-making that integrates discrete commands and continuous actions. Traditional task and motion planning methods separate decision-making into two layers, but their unidirectional structure fails to capture the interdependence between these layers, limiting adaptability in dynamic environments. Here, we propose a novel bidirectional approach based on hierarchical reinforcement learning, enabling dynamic interaction between the layers. This method effectively maps commands to task allocation and actions to path planning, while leveraging cross-training techniques to enhance learning across the hierarchical framework. Furthermore, we introduce a trajectory prediction model that bridges abstract task representations with actionable planning goals. In our experiments, the method achieves a confrontation win rate above 80% and a decision time under 0.01 seconds, outperforming existing approaches. Demonstrations through large-scale tests and real-world robot experiments further emphasize the generalization capabilities and practical applicability of our method.

HyperSAT: Unsupervised Hypergraph Neural Networks for Weighted MaxSAT Problems

Apr 16, 2025

Abstract:Graph neural networks (GNNs) have shown promising performance in solving both Boolean satisfiability (SAT) and Maximum Satisfiability (MaxSAT) problems due to their ability to efficiently model and capture the structural dependencies between literals and clauses. However, GNN methods for solving Weighted MaxSAT problems remain underdeveloped. The challenges arise from the non-linear dependency and sensitive objective function, which are caused by the non-uniform distribution of weights across clauses. In this paper, we present HyperSAT, a novel neural approach that employs an unsupervised hypergraph neural network model to solve Weighted MaxSAT problems. We propose a hypergraph representation for Weighted MaxSAT instances and design a cross-attention mechanism along with a shared representation constraint loss function to capture the logical interactions between positive and negative literal nodes in the hypergraph. Extensive experiments on various Weighted MaxSAT datasets demonstrate that HyperSAT achieves better performance than state-of-the-art competitors.
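For readers unfamiliar with the problem HyperSAT targets, the following is a minimal sketch of the standard Weighted MaxSAT objective: find an assignment that maximizes the total weight of satisfied clauses. It is not the HyperSAT model; the function names, the DIMACS-style signed-integer literal encoding, and the toy instance are all assumptions made for illustration, and the brute-force search is only practical for tiny instances.

```python
from itertools import product

# Assumed encoding (DIMACS-style): literal 3 means x3, literal -3 means NOT x3.
def satisfied_weight(clauses, weights, assignment):
    """Sum the weights of clauses that have at least one true literal."""
    total = 0.0
    for clause, w in zip(clauses, weights):
        if any(assignment[abs(lit)] == (lit > 0) for lit in clause):
            total += w
    return total

def brute_force_weighted_maxsat(clauses, weights, num_vars):
    """Enumerate all 2**num_vars assignments and keep the heaviest one."""
    best_assignment, best_weight = None, float("-inf")
    for bits in product([False, True], repeat=num_vars):
        assignment = {i + 1: b for i, b in enumerate(bits)}
        w = satisfied_weight(clauses, weights, assignment)
        if w > best_weight:
            best_assignment, best_weight = assignment, w
    return best_assignment, best_weight

# Hypothetical toy instance: (x1 OR x2), (NOT x1), (NOT x2), (x1 OR NOT x2)
clauses = [[1, 2], [-1], [-2], [1, -2]]
weights = [3.0, 2.0, 2.0, 1.0]
best_assignment, best_weight = brute_force_weighted_maxsat(clauses, weights, 2)
```

Because the clause weights are non-uniform, flipping a single variable can change the objective by very different amounts, which is the sensitivity the abstract refers to.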

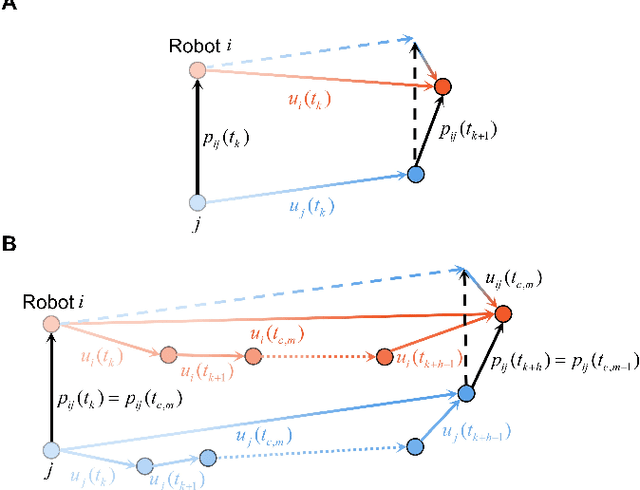

Relative Pose Estimation for Nonholonomic Robot Formation with UWB-IO Measurements

Nov 08, 2024

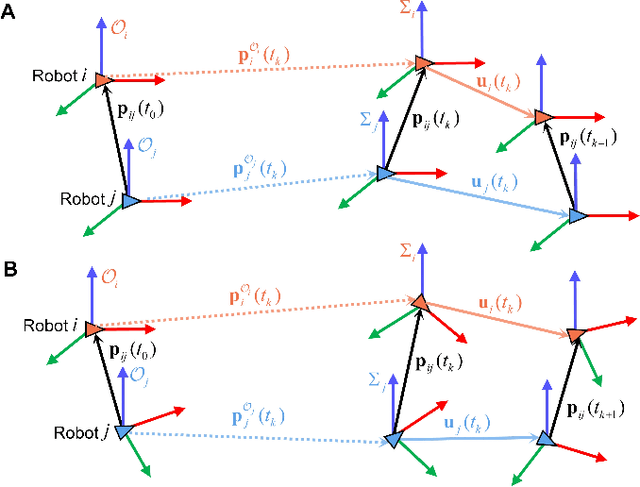

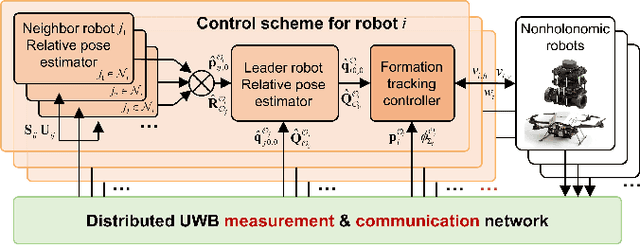

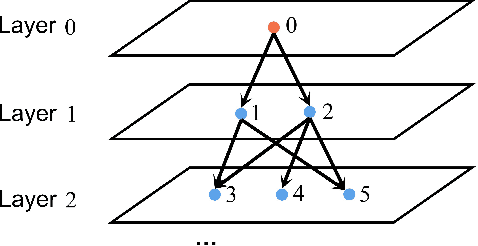

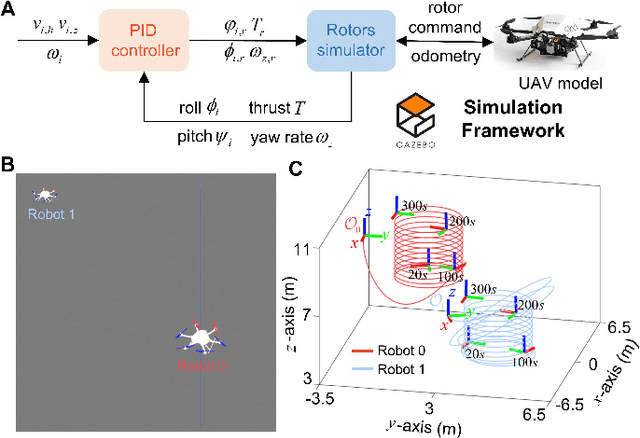

Abstract:This article studies the problem of distributed formation control for multiple robots using onboard ultra-wideband (UWB) ranging and inertial odometer (IO) measurements. Although this problem has been widely studied, a fundamental limitation of most works is that they require each robot's pose and sensor measurements to be expressed in a common reference frame. However, this requirement is impractical for nonholonomic robot formations because of the difficulty of aligning the IO measurements of individual robots in a common frame. To address this problem, firstly, a concurrent-learning based estimator is proposed to achieve relative localization between neighboring robots in a local frame. Different from most relative localization methods in a global frame, both relative position and orientation in a local frame are estimated with only UWB ranging and IO measurements. Secondly, to deal with information loss caused by directed communication topology, a cooperative localization algorithm is introduced to estimate the relative pose to the leader robot. Thirdly, based on the theoretical results on relative pose estimation, a distributed formation tracking controller is proposed for nonholonomic robots. Both Gazebo physical simulations and real-world experiments conducted on networked TurtleBot3 nonholonomic robots are provided to demonstrate the effectiveness of the proposed method.

Distributed Formation Shape Control of Identity-less Robot Swarms

Oct 31, 2024

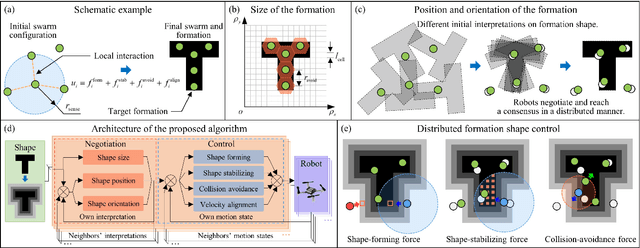

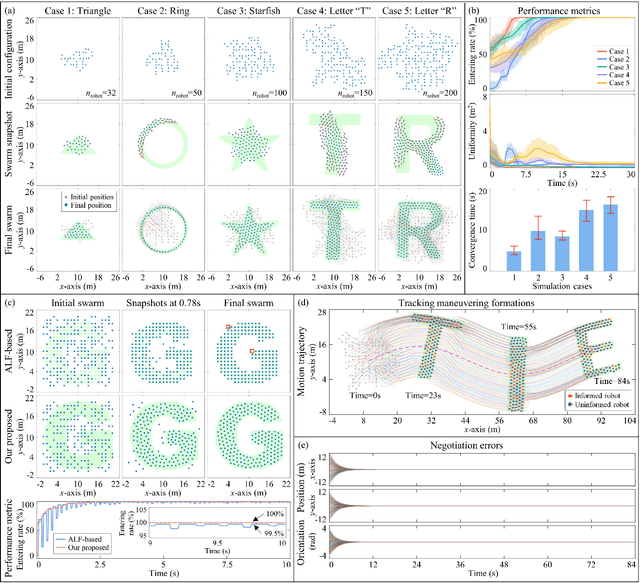

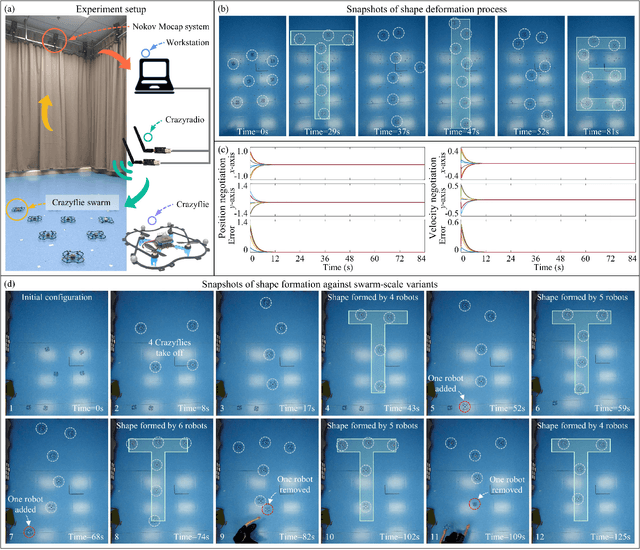

Abstract:Different from most formation strategies, where robots require unique labels to identify topological neighbors and satisfy predefined shape constraints, we study the problem of identity-less distributed shape formation in homogeneous swarms, which is rarely studied in the literature. The absence of identities creates a unique challenge: how to design appropriate target formations and local behaviors that are suitable for identity-less formation shape control. To address this challenge, we propose the following novel results. First, to avoid using unique identities, we propose a dynamic formation description method and solve the formation consensus of robots in a locally distributed manner. Second, to handle identity-less distributed formations, we propose a fully distributed control law for homogeneous swarms based on locally sensed information. While the existing methods are applicable to simple cases where the target formation is stationary, ours can tackle more general maneuvering formations such as translation, rotation, or even shape deformation. Both numerical simulations and flight experiments are presented to verify the effectiveness and robustness of our proposed formation strategy.

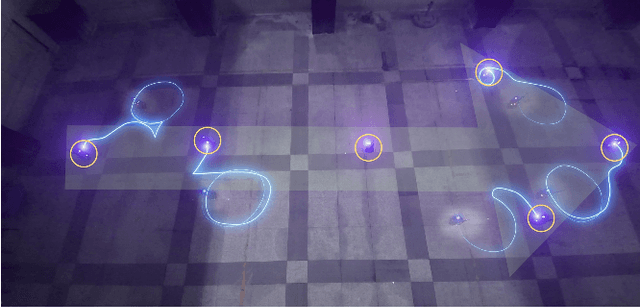

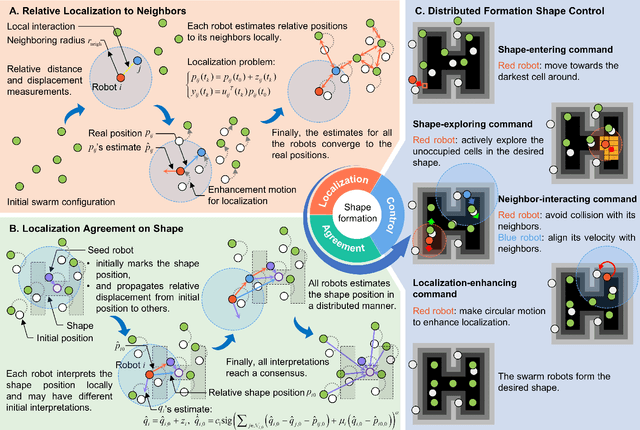

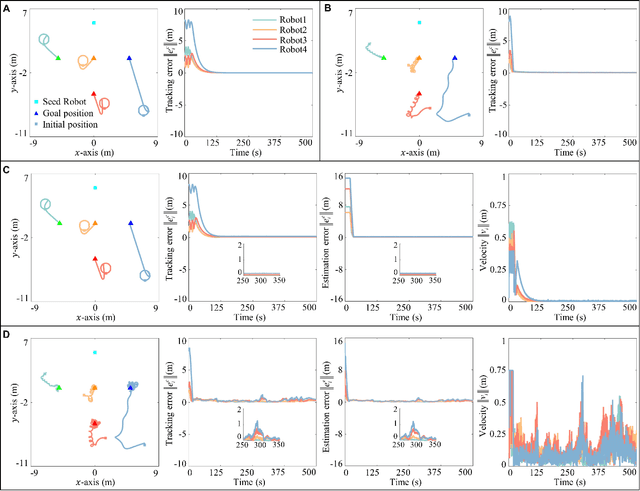

Concurrent-Learning Based Relative Localization in Shape Formation of Robot Swarms

Oct 08, 2024

Abstract:In this paper, we address the shape formation problem for massive robot swarms in environments where external localization systems are unavailable. Achieving this task effectively with solely onboard measurements is still scarcely explored and faces some practical challenges. To solve this challenging problem, we propose the following novel results. Firstly, to estimate the relative positions among neighboring robots, a concurrent-learning based estimator is proposed. It relaxes the persistent excitation condition required by classical estimators such as the least-squares estimator. Secondly, we introduce a finite-time agreement protocol to determine the shape location. This is achieved by estimating the relative position between each robot and a randomly assigned seed robot. The initial position of the seed robot marks the shape location. Thirdly, based on the theoretical results of the relative localization, a novel behavior-based control strategy is devised. This strategy not only enables adaptive shape formation of large groups of robots but also enhances the observability of inter-robot relative localization. Numerical simulation results are provided to verify the performance of our proposed strategy compared to state-of-the-art ones. Additionally, outdoor experiments on real robots further demonstrate the practical effectiveness and robustness of our methods.

Bi-level Doubly Variational Learning for Energy-based Latent Variable Models

Mar 24, 2022

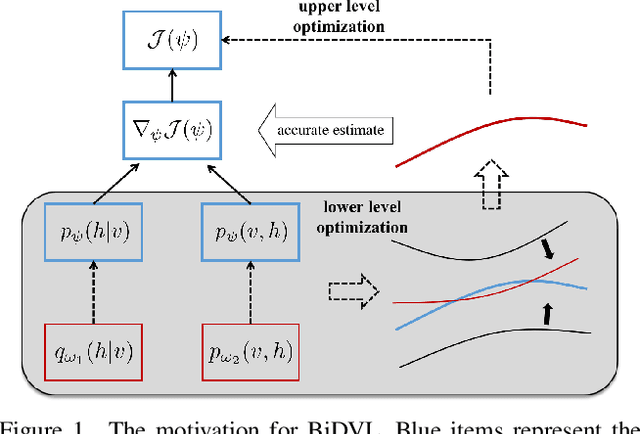

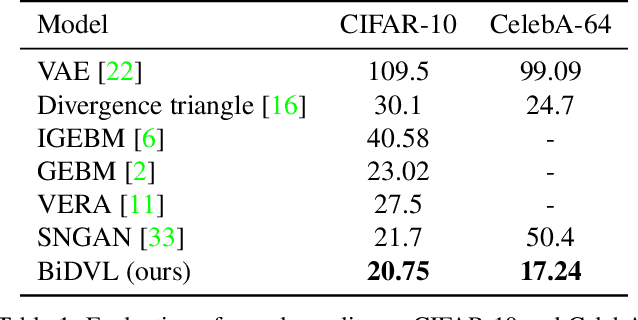

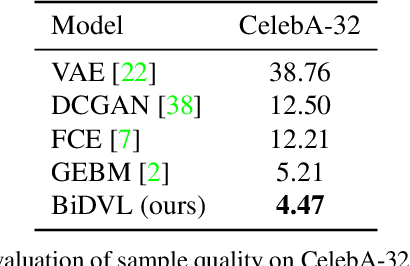

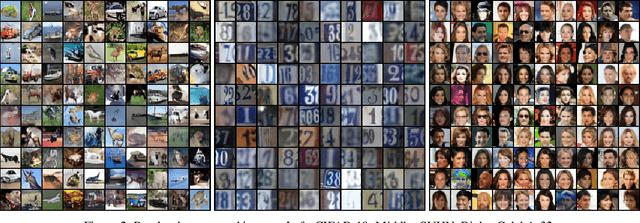

Abstract:Energy-based latent variable models (EBLVMs) are more expressive than conventional energy-based models. However, their potential on visual tasks is limited by a training process based on maximum likelihood estimation, which requires sampling from two intractable distributions. In this paper, we propose Bi-level doubly variational learning (BiDVL), which is based on a new bi-level optimization framework and two tractable variational distributions to facilitate learning EBLVMs. In particular, we introduce a decoupled EBLVM consisting of a marginal energy-based distribution and a structural posterior to handle the difficulties of learning deep EBLVMs on images. By choosing a symmetric KL divergence in the lower level of our framework, a compact BiDVL for visual tasks can be obtained. Our model achieves impressive image generation performance compared with related works. It also demonstrates significant capacity for test image reconstruction and out-of-distribution detection.
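As a point of reference for the symmetric KL divergence mentioned in the abstract, here is a minimal sketch using one common definition, KL(p||q) + KL(q||p), for discrete distributions. This is an assumption for illustration only: the abstract does not specify the exact form used in BiDVL, and the function names and example distributions are hypothetical.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions given as equal-length lists.

    Assumes q[i] > 0 wherever p[i] > 0; terms with p[i] == 0 contribute 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def symmetric_kl(p, q):
    """One common symmetric form: KL(p || q) + KL(q || p)."""
    return kl_divergence(p, q) + kl_divergence(q, p)

# Hypothetical example distributions over two outcomes.
p = [0.5, 0.5]
q = [0.9, 0.1]
forward = kl_divergence(p, q)
reverse = kl_divergence(q, p)
sym = symmetric_kl(p, q)
```

The plain KL divergence is asymmetric (here `forward` and `reverse` differ), whereas the symmetric form gives the same value regardless of argument order.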

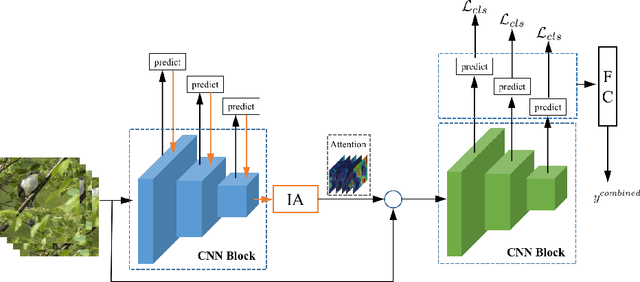

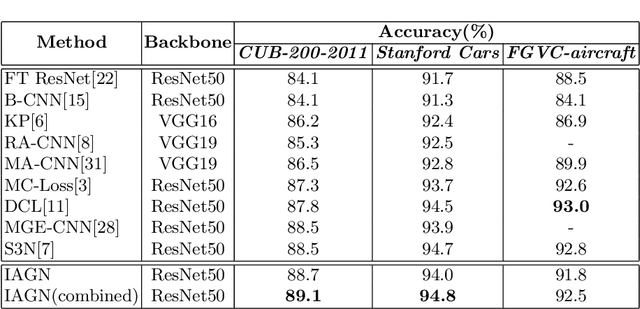

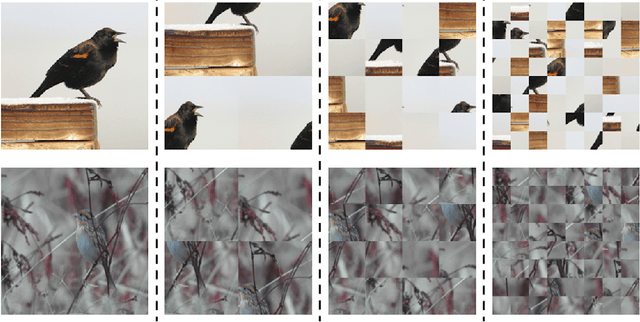

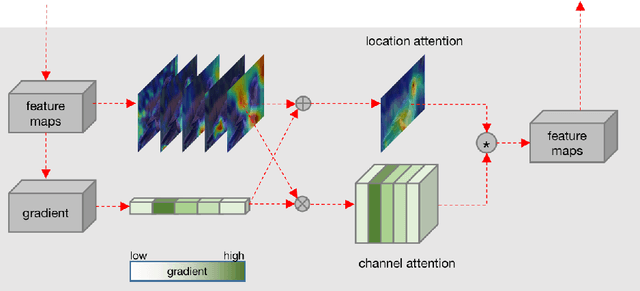

Interpretable Attention Guided Network for Fine-grained Visual Classification

Mar 09, 2021

Abstract:Fine-grained visual classification (FGVC) is challenging but more critical than traditional classification tasks. It requires distinguishing different subcategories with inherently subtle intra-class object variations. Previous works focus on enhancing the feature representation ability using multiple granularities and discriminative regions based on the attention strategy or bounding boxes. However, these methods rely heavily on deep neural networks, which lack interpretability. We propose an Interpretable Attention Guided Network (IAGN) for fine-grained visual classification. The contributions of our method include: i) an attention guided framework which can guide the network to extract discriminative regions in an interpretable way; ii) a progressive training mechanism that distills knowledge stage by stage to fuse features of various granularities; iii) the first interpretable FGVC method with competitive performance on several standard FGVC benchmark datasets.
