Jijunnan Li

Monocular Localization with Semantics Map for Autonomous Vehicles

Jun 06, 2024

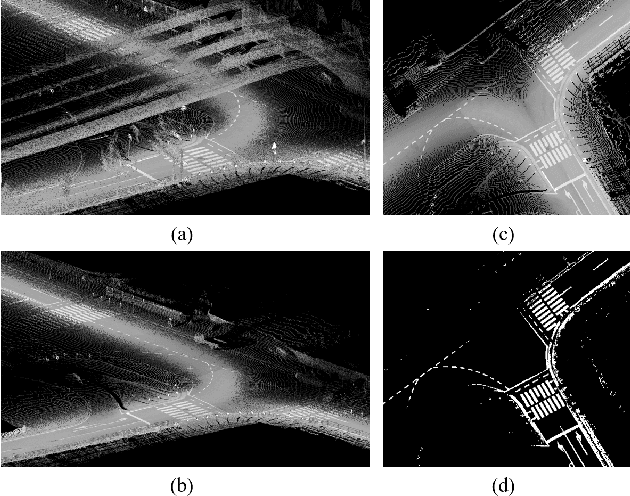

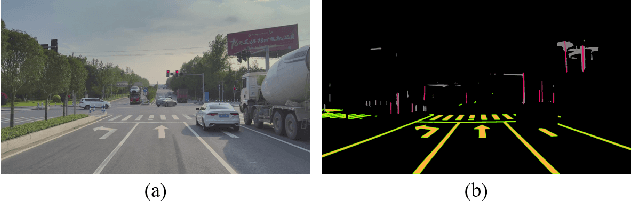

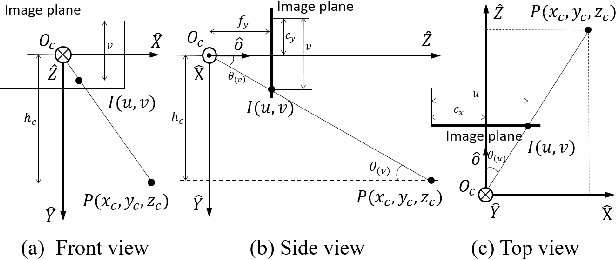

Abstract: Accurate and robust localization remains a significant challenge for autonomous vehicles. The cost of sensors and limitations in local computational efficiency make it difficult to scale to large commercial applications. Traditional vision-based approaches focus on texture features that are susceptible to changes in lighting, season, perspective, and appearance. Additionally, the large storage size of maps with descriptors and complex optimization processes hinder system performance. To balance efficiency and accuracy, we propose a novel lightweight visual semantic localization algorithm that employs stable semantic features instead of low-level texture features. First, semantic maps are constructed offline by detecting semantic objects, such as ground markers, lane lines, and poles, using cameras or LiDAR sensors. Then, online visual localization is performed through data association of semantic features and map objects. We evaluated our proposed localization framework on the publicly available KAIST Urban dataset and in scenarios we recorded ourselves. The experimental results demonstrate that our method is a reliable and practical localization solution for various autonomous driving localization tasks.

Fusing Monocular Images and Sparse IMU Signals for Real-time Human Motion Capture

Sep 01, 2023

Abstract: Either RGB images or inertial signals have been used for the task of motion capture (mocap), but combining them is a new and interesting topic. We believe that the two modalities are complementary and that combining them can solve the inherent difficulties of using a single input modality, including occlusions, extreme lighting/texture, and out-of-view body parts for visual mocap, and global drift for inertial mocap. To this end, we propose a method that fuses monocular images and sparse IMUs for real-time human motion capture. Our method contains a dual coordinate strategy to fully explore the IMU signals with different goals in motion capture. Specifically, one branch transforms the IMU signals to the camera coordinate system to combine them with the image information, while another branch learns from the IMU signals in the body root coordinate system to better estimate body poses. Furthermore, a hidden state feedback mechanism is proposed for both branches to compensate for their own drawbacks in extreme input cases. Thus our method can easily switch between the two kinds of signals or combine them in different cases to achieve robust mocap. Quantitative and qualitative results demonstrate that, through careful design of the fusion method, our technique significantly outperforms the state-of-the-art vision, IMU, and combined methods on both global orientation and local pose estimation. Our code is available for research at https://shaohua-pan.github.io/robustcap-page/.

SGL: Structure Guidance Learning for Camera Localization

Apr 12, 2023

Abstract: Camera localization is a classical computer vision task that serves various Artificial Intelligence and Robotics applications. With the rapid development of Deep Neural Networks (DNNs), end-to-end visual localization methods have flourished in recent years. In this work, we focus on scene coordinate prediction methods and propose a network architecture named Structure Guidance Learning (SGL), which uses a receptive branch and a structure branch to extract both high-level and low-level features for estimating 3D coordinates. We design a confidence strategy to refine and filter the predicted 3D observations, which enables us to estimate camera poses with Perspective-n-Point (PnP) and RANSAC. For training, we design a Bundle Adjustment trainer to help the network fit the scenes better. Comparisons with several state-of-the-art (SOTA) methods and extensive ablation experiments confirm the validity of our proposed architecture.

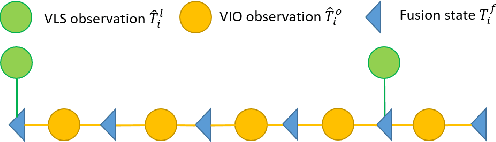

A Real-Time Fusion Framework for Long-term Visual Localization

Oct 18, 2022

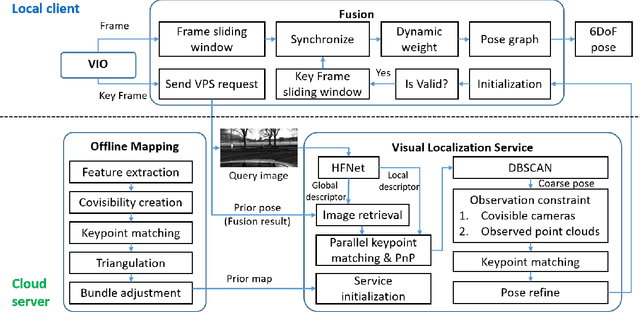

Abstract: Visual localization is a fundamental task that regresses 6 Degree of Freedom (6DoF) poses from image features in order to serve the high-precision localization requests of many robotics applications. Degenerate conditions such as motion blur, illumination changes, and environment variations pose great challenges for this task. Fusion with additional information, such as sequential information and Inertial Measurement Unit (IMU) inputs, can greatly help in such cases. In this paper, we present an efficient client-server visual localization architecture that fuses global and local pose estimations to achieve promising precision and efficiency. We include additional geometry hints in the mapping and global pose regression modules to improve the measurement quality. A loosely coupled fusion policy is adopted to balance computational complexity and accuracy. We conduct evaluations on two typical open-source benchmarks, 4Seasons and OpenLORIS. Quantitative results show that our framework performs competitively with other state-of-the-art visual localization solutions.

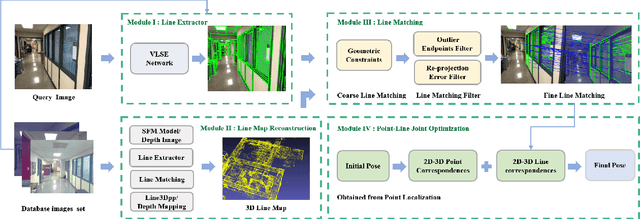

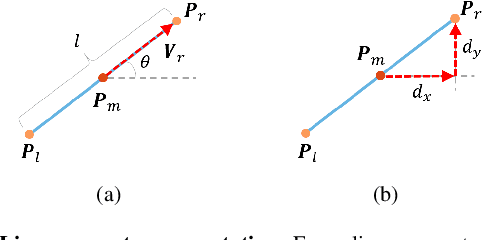

Pose Refinement with Joint Optimization of Visual Points and Lines

Oct 08, 2021

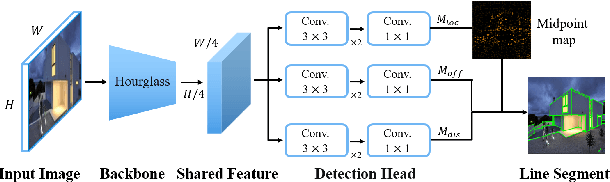

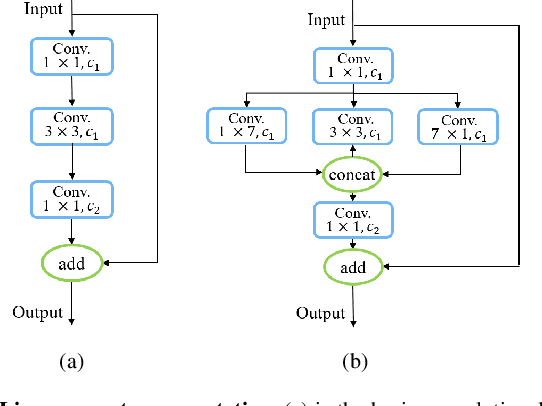

Abstract: High-precision camera re-localization in a pre-established 3D environment map is the basis for many tasks, such as Augmented Reality, Robotics, and Autonomous Driving. Point-based visual re-localization approaches have been well developed in recent decades, but are insufficient in some featureless cases. In this paper, we propose a point-line joint optimization method for pose refinement, built on a newly designed line-extraction CNN named VLSE together with a line matching and pose optimization approach. We adopt a novel line representation and customize a hybrid convolutional block based on the Stacked Hourglass network to detect accurate and stable line features in images. Then we apply a coarse-to-fine strategy to obtain precise 2D-3D line correspondences based on geometric constraints. A point-line joint cost function is then constructed to optimize the camera pose, starting from the initial coarse pose. Extensive experiments are conducted on open datasets, i.e., the line extractor on Wireframe and YorkUrban, and localization performance on Aachen Day-Night v1.1 and InLoc, to confirm the effectiveness of our point-line joint pose optimization method.

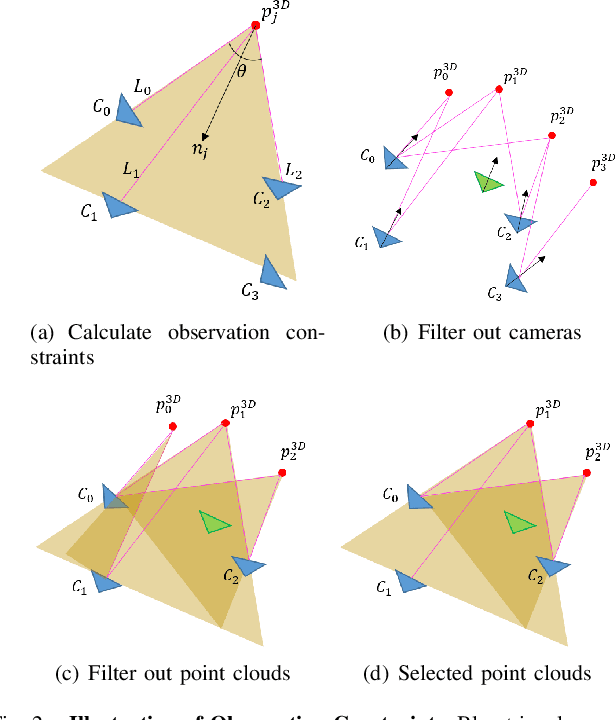

Retrieval and Localization with Observation Constraints

Aug 19, 2021

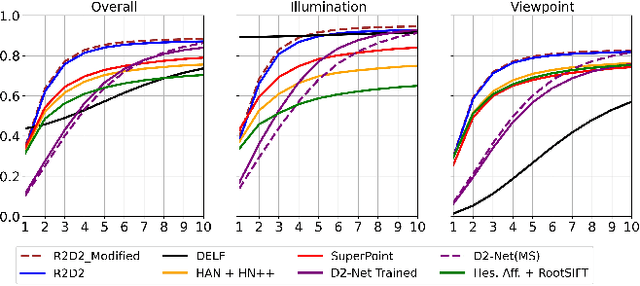

Abstract: Accurate visual re-localization is critical to many artificial intelligence applications, such as augmented reality, virtual reality, robotics, and autonomous driving. To accomplish this task, we propose an integrated visual re-localization method called RLOCS that combines image retrieval, semantic consistency, and geometry verification to achieve accurate estimations. The localization pipeline is designed as a coarse-to-fine paradigm. In the retrieval part, we cascade the architecture of ResNet101-GeM-ArcFace and employ DBSCAN followed by spatial verification to obtain a better initial coarse pose. We design a module called observation constraints, which combines geometry information and semantic consistency to filter outliers. Comprehensive experiments are conducted on open datasets, including retrieval on R-Oxford5k and R-Paris6k, semantic segmentation on Cityscapes, and localization on Aachen Day-Night and InLoc. By modifying separate modules in the overall pipeline, our method achieves performance improvements on these challenging localization benchmarks.

Visual Localization Using Semantic Segmentation and Depth Prediction

May 25, 2020

Abstract: In this paper, we propose a monocular visual localization pipeline leveraging semantic and depth cues. We apply semantic consistency evaluation to rank the image retrieval results and a practical clustering technique to reject estimation outliers. In addition, we demonstrate a substantial performance boost achieved by combining multiple feature extractors. Furthermore, by using depth prediction with a deep neural network, we show that a significant number of falsely matched keypoints are identified and eliminated. The proposed pipeline outperforms most of the existing approaches on the Long-Term Visual Localization benchmark 2020.
