Yishan Ping

SGL: Structure Guidance Learning for Camera Localization

Apr 12, 2023

Abstract: Camera localization is a classical computer vision task that serves various Artificial Intelligence and Robotics applications. With the rapid development of Deep Neural Networks (DNNs), end-to-end visual localization methods have prospered in recent years. In this work, we focus on scene coordinate prediction methods and propose a network architecture named Structure Guidance Learning (SGL), which uses a receptive branch and a structure branch to extract both high-level and low-level features for estimating 3D coordinates. We design a confidence strategy to refine and filter the predicted 3D observations, which enables us to estimate camera poses with Perspective-n-Point (PnP) and RANSAC. For training, we design a Bundle Adjustment trainer to help the network fit the scenes better. Comparisons with state-of-the-art (SOTA) methods and extensive ablation experiments confirm the validity of the proposed architecture.
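As a rough illustration of the confidence-filtering step, the sketch below keeps only the 2D-3D correspondences whose predicted confidence passes a threshold before they would be handed to a PnP + RANSAC solver (for example OpenCV's `cv2.solvePnPRansac`). The function name `filter_by_confidence`, the threshold value, and the fallback to the most confident points are assumptions made for this example; the abstract does not specify how SGL's confidence strategy is implemented.

```python
import numpy as np

def filter_by_confidence(pixels, coords3d, confidence, threshold=0.5, min_points=4):
    """Keep 2D-3D correspondences with confidence >= threshold.

    Hypothetical helper, not the SGL implementation.
    pixels:     (N, 2) image coordinates
    coords3d:   (N, 3) predicted scene coordinates
    confidence: (N,)   per-prediction confidence scores
    """
    pixels = np.asarray(pixels, dtype=float)
    coords3d = np.asarray(coords3d, dtype=float)
    confidence = np.asarray(confidence, dtype=float)

    keep = confidence >= threshold
    if keep.sum() < min_points:
        # Assumed fallback: too few survivors for a PnP solver,
        # so keep the min_points most confident predictions instead.
        keep = np.zeros(confidence.shape, dtype=bool)
        keep[np.argsort(confidence)[-min_points:]] = True
    return pixels[keep], coords3d[keep]
```

The surviving correspondences would then serve as the input to pose estimation; RANSAC still handles any outliers that pass the confidence test.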

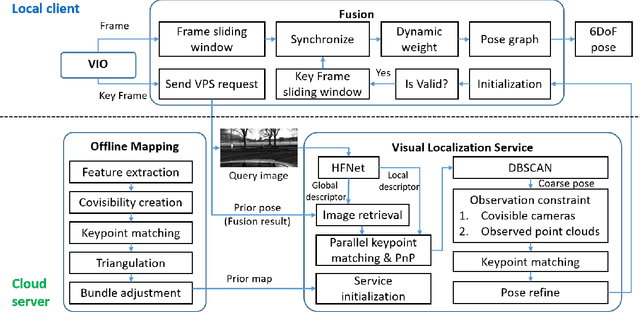

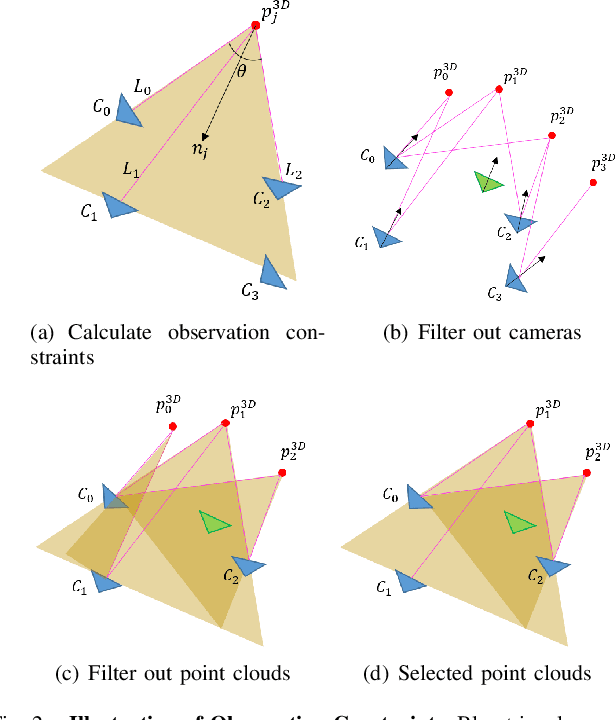

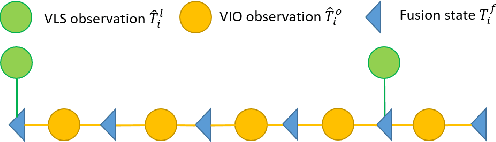

A Real-Time Fusion Framework for Long-term Visual Localization

Oct 18, 2022

Abstract: Visual localization is a fundamental task that regresses 6 Degree-of-Freedom (6DoF) poses from image features to serve high-precision localization requests in many robotics applications. Degenerate conditions such as motion blur, illumination changes, and environment variations pose great challenges to this task. Fusion with additional information, such as sequential information and Inertial Measurement Unit (IMU) inputs, would greatly help in such cases. In this paper, we present an efficient client-server visual localization architecture that fuses global and local pose estimations to achieve promising precision and efficiency. We include additional geometry hints in the mapping and global pose regression modules to improve the measurement quality. A loosely coupled fusion policy is adopted to balance computational complexity and accuracy. We conduct evaluations on two typical open-source benchmarks, 4Seasons and OpenLORIS. Quantitative results show that our framework is competitive with other state-of-the-art visual localization solutions.

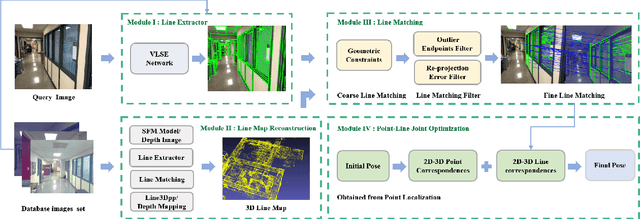

Pose Refinement with Joint Optimization of Visual Points and Lines

Oct 08, 2021

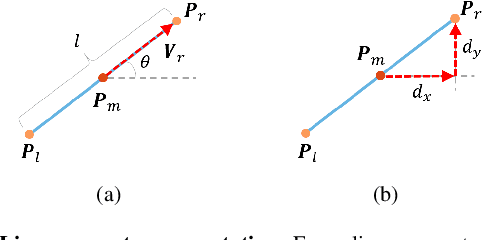

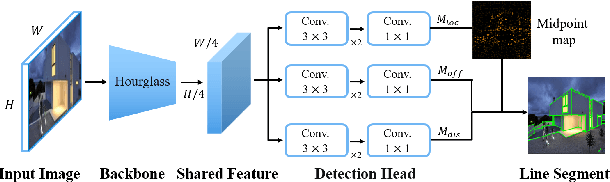

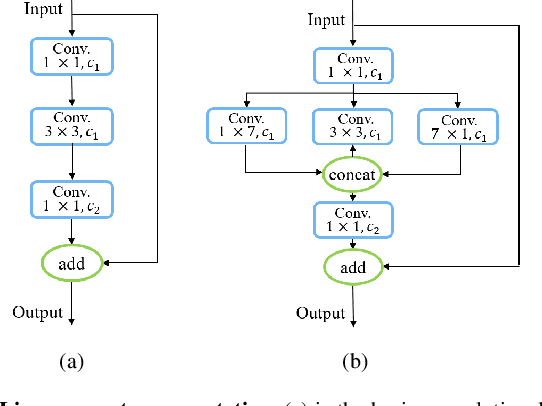

Abstract: High-precision camera re-localization in a pre-established 3D environment map is the basis for many tasks, such as Augmented Reality, Robotics, and Autonomous Driving. Point-based visual re-localization approaches have been well developed in recent decades but are insufficient in some featureless cases. In this paper, we propose a point-line joint optimization method for pose refinement, built on a newly designed line-extraction CNN named VLSE together with a line matching and pose optimization approach. We adopt a novel line representation and customize a hybrid convolutional block based on the Stacked Hourglass network to detect accurate and stable line features in images. We then apply a coarse-to-fine strategy to obtain precise 2D-3D line correspondences based on geometric constraints. A point-line joint cost function is then constructed to optimize the camera pose, starting from the initial coarse pose. Extensive experiments are conducted on open datasets, i.e., the line extractor on Wireframe and YorkUrban and localization performance on Aachen Day-Night v1.1 and InLoc, to confirm the effectiveness of our point-line joint pose optimization method.
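To make the idea of a point-line joint cost concrete, here is a minimal sketch under stated assumptions: the point term is the squared reprojection error, and the line term is the squared distance from the projected endpoints of each 3D line segment to its matched 2D line `a*u + b*v + c = 0`. The names `project` and `joint_cost`, the `line_weight` parameter, and this particular choice of line residual are illustrative assumptions; the abstract does not give the actual cost function used in the paper.

```python
import numpy as np

def project(K, R, t, X):
    """Project (N, 3) world points to (N, 2) pixels with a pinhole model."""
    Xc = X @ R.T + t          # world frame -> camera frame
    uv = Xc @ K.T             # apply intrinsics
    return uv[:, :2] / uv[:, 2:3]

def joint_cost(K, R, t, pts3d, pts2d, line_ends3d, lines2d, line_weight=1.0):
    """Sum of squared point and line residuals (hypothetical formulation).

    pts3d:       (N, 3)    3D points,  pts2d: (N, 2) matched pixels
    line_ends3d: (M, 2, 3) endpoints of 3D line segments
    lines2d:     (M, 3)    matched 2D lines as (a, b, c)
    """
    # Point term: reprojection error in pixels.
    point_res = project(K, R, t, pts3d) - pts2d

    # Line term: distance of each projected endpoint to the 2D line.
    m = line_ends3d.shape[0]
    ends2d = project(K, R, t, line_ends3d.reshape(-1, 3)).reshape(m, 2, 2)
    a, b, c = lines2d[:, 0:1], lines2d[:, 1:2], lines2d[:, 2:3]
    norm = np.sqrt(a**2 + b**2)
    line_res = (a * ends2d[..., 0] + b * ends2d[..., 1] + c) / norm

    return float(np.sum(point_res**2) + line_weight * np.sum(line_res**2))
```

In a refinement loop, a cost of this shape would be minimized over the pose `(R, t)` with a nonlinear least-squares solver, initialized at the coarse pose.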
