Jayaram K. Udupa

Exploiting DINOv3-Based Self-Supervised Features for Robust Few-Shot Medical Image Segmentation

Jan 12, 2026

Abstract:Deep learning-based automatic medical image segmentation plays a critical role in clinical diagnosis and treatment planning but remains challenging in few-shot scenarios due to the scarcity of annotated training data. Recently, self-supervised foundation models such as DINOv3, which were trained on large natural image datasets, have shown strong potential for dense feature extraction that can help with the few-shot learning challenge. Yet, their direct application to medical images is hindered by domain differences. In this work, we propose DINO-AugSeg, a novel framework that leverages DINOv3 features to address the few-shot medical image segmentation challenge. Specifically, we introduce WT-Aug, a wavelet-based feature-level augmentation module that enriches the diversity of DINOv3-extracted features by perturbing frequency components, and CG-Fuse, a contextual information-guided fusion module that exploits cross-attention to integrate semantic-rich low-resolution features with spatially detailed high-resolution features. Extensive experiments on six public benchmarks spanning five imaging modalities, including MRI, CT, ultrasound, endoscopy, and dermoscopy, demonstrate that DINO-AugSeg consistently outperforms existing methods under limited-sample conditions. The results highlight the effectiveness of incorporating wavelet-domain augmentation and contextual fusion for robust feature representation, suggesting DINO-AugSeg as a promising direction for advancing few-shot medical image segmentation. Code and data will be made available on https://github.com/apple1986/DINO-AugSeg.
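The abstract describes WT-Aug only as perturbing the frequency components of extracted features, so the following is a minimal sketch of that general idea, not the authors' module. It assumes a single-level Haar transform, a random per-channel gain applied to the three detail sub-bands, and an untouched low-frequency band; the function names, the `strength` parameter, and the `(channels, height, width)` layout are all hypothetical choices made for illustration.

```python
import numpy as np


def haar_dwt2(x):
    """Single-level 2D Haar transform over the last two axes (H and W must be even)."""
    a, b = x[..., 0::2, 0::2], x[..., 0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[..., 1::2, 0::2], x[..., 1::2, 1::2]  # bottom-left, bottom-right
    ll = (a + b + c + d) / 2.0  # low-frequency approximation
    lh = (a - b + c - d) / 2.0  # detail: difference across columns
    hl = (a + b - c - d) / 2.0  # detail: difference across rows
    hh = (a - b - c + d) / 2.0  # detail: diagonal difference
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    shape = ll.shape[:-2] + (ll.shape[-2] * 2, ll.shape[-1] * 2)
    out = np.empty(shape, dtype=ll.dtype)
    out[..., 0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    out[..., 0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    out[..., 1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    out[..., 1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return out


def wavelet_feature_augment(features, strength=0.2, rng=None):
    """Randomly rescale the detail sub-bands of a (C, H, W) feature map.

    Assumption: each channel of each detail band gets one gain drawn
    uniformly from [1 - strength, 1 + strength]; the LL band is kept as-is.
    """
    rng = np.random.default_rng() if rng is None else rng
    ll, lh, hl, hh = haar_dwt2(np.asarray(features, dtype=float))
    perturbed = []
    for band in (lh, hl, hh):
        gain_shape = band.shape[:-2] + (1, 1)
        gain = 1.0 + strength * rng.uniform(-1.0, 1.0, size=gain_shape)
        perturbed.append(band * gain)
    return haar_idwt2(ll, *perturbed)
```

With `strength=0.0` the function returns the input unchanged, which is a convenient sanity check that the transform pair is exactly invertible before any perturbation is enabled.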

Segment Anything for Video: A Comprehensive Review of Video Object Segmentation and Tracking from Past to Future

Jul 30, 2025

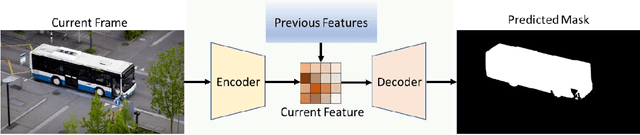

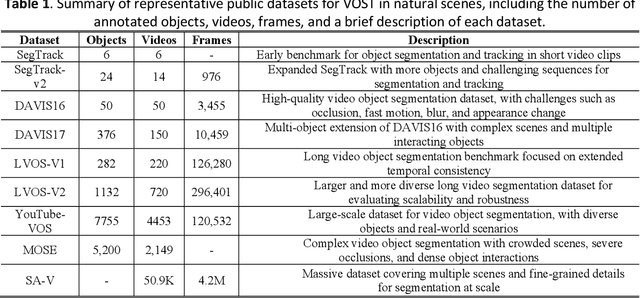

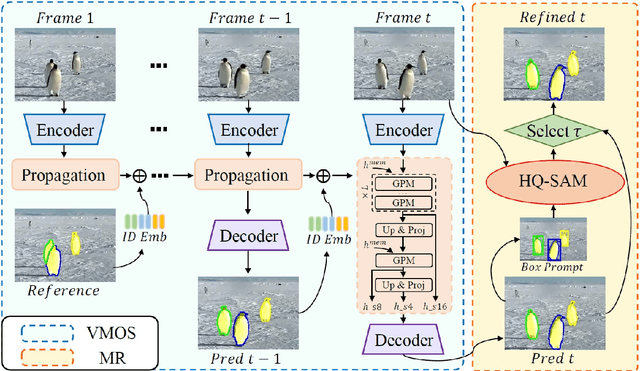

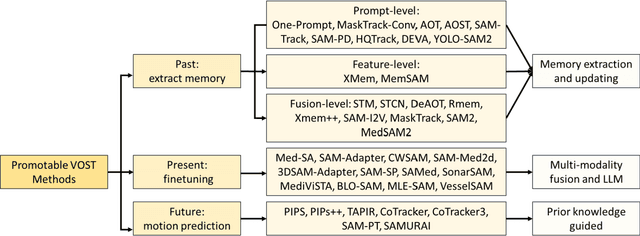

Abstract:Video Object Segmentation and Tracking (VOST) presents a complex yet critical challenge in computer vision, requiring robust integration of segmentation and tracking across temporally dynamic frames. Traditional methods have struggled with domain generalization, temporal consistency, and computational efficiency. The emergence of foundation models like the Segment Anything Model (SAM) and its successor, SAM2, has introduced a paradigm shift, enabling prompt-driven segmentation with strong generalization capabilities. Building upon these advances, this survey provides a comprehensive review of SAM/SAM2-based methods for VOST, structured along three temporal dimensions: past, present, and future. We examine strategies for retaining and updating historical information (past), approaches for extracting and optimizing discriminative features from the current frame (present), and motion prediction and trajectory estimation mechanisms for anticipating object dynamics in subsequent frames (future). In doing so, we highlight the evolution from early memory-based architectures to the streaming memory and real-time segmentation capabilities of SAM2. We also discuss recent innovations such as motion-aware memory selection and trajectory-guided prompting, which aim to enhance both accuracy and efficiency. Finally, we identify remaining challenges including memory redundancy, error accumulation, and prompt inefficiency, and suggest promising directions for future research. This survey offers a timely and structured overview of the field, aiming to guide researchers and practitioners in advancing the state of VOST through the lens of foundation models.

Predicting Risk of Pulmonary Fibrosis Formation in PASC Patients

May 15, 2025

Abstract:While the acute phase of the COVID-19 pandemic has subsided, its long-term effects persist through Post-Acute Sequelae of COVID-19 (PASC), commonly known as Long COVID. There remains substantial uncertainty regarding both its duration and optimal management strategies. PASC manifests as a diverse array of persistent or newly emerging symptoms--ranging from fatigue, dyspnea, and neurologic impairments (e.g., brain fog), to cardiovascular, pulmonary, and musculoskeletal abnormalities--that extend beyond the acute infection phase. This heterogeneous presentation poses substantial challenges for clinical assessment, diagnosis, and treatment planning. In this paper, we focus on imaging findings that may suggest fibrotic damage in the lungs, a critical manifestation characterized by scarring of lung tissue, which can potentially affect long-term respiratory function in patients with PASC. This study introduces a novel multi-center chest CT analysis framework that combines deep learning and radiomics for fibrosis prediction. Our approach leverages convolutional neural networks (CNNs) and interpretable feature extraction, achieving 82.2% accuracy and 85.5% AUC in classification tasks. We demonstrate the effectiveness of Grad-CAM visualization and radiomics-based feature analysis in providing clinically relevant insights for PASC-related lung fibrosis prediction. Our findings highlight the potential of deep learning-driven computational methods for early detection and risk assessment of PASC-related lung fibrosis--presented for the first time in the literature.

Eyes Tell the Truth: GazeVal Highlights Shortcomings of Generative AI in Medical Imaging

Mar 26, 2025

Abstract:The demand for high-quality synthetic data for model training and augmentation has never been greater in medical imaging. However, current evaluations predominantly rely on computational metrics that fail to align with human expert recognition. This leads to synthetic images that may appear realistic numerically but lack clinical authenticity, posing significant challenges in ensuring the reliability and effectiveness of AI-driven medical tools. To address this gap, we introduce GazeVal, a practical framework that synergizes expert eye-tracking data with direct radiological evaluations to assess the quality of synthetic medical images. GazeVal leverages gaze patterns of radiologists as they provide a deeper understanding of how experts perceive and interact with synthetic data in different tasks (i.e., diagnostic or Turing tests). Experiments with sixteen radiologists revealed that 96.6% of the generated images (by the most recent state-of-the-art AI algorithm) were identified as fake, demonstrating the limitations of generative AI in producing clinically accurate images.

CIDI-Lung-Seg: A Single-Click Annotation Tool for Automatic Delineation of Lungs from CT Scans

Jul 11, 2014

Abstract:Accurate and fast extraction of lung volumes from computed tomography (CT) scans remains in great demand in the clinical environment because the available methods fail to provide a generic solution due to the wide anatomical variation of lungs and the existence of pathologies. Manual annotation, the current gold standard, is time-consuming and often subject to human bias. On the other hand, current state-of-the-art fully automated lung segmentation methods fail to make their way into clinical practice due to their inability to efficiently incorporate human input for handling misclassifications and praxis. This paper presents a lung annotation tool for CT images that is interactive, efficient, and robust. The proposed annotation tool produces an "as accurate as possible" initial annotation based on fuzzy-connectedness image segmentation, followed by efficient manual fixation of the initial extraction if deemed necessary by the practitioner. To provide maximum flexibility to the users, our annotation tool is supported on three major operating systems (Windows, Linux, and Mac OS X). The quantitative results comparing our free software with commercially available lung segmentation tools show a higher degree of consistency and precision for our software, with considerable potential to enhance the performance of routine clinical tasks.
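The abstract names fuzzy-connectedness segmentation as the basis of the initial annotation but does not give its details. As a rough illustration of the general technique only, the sketch below computes a connectedness map from a single seed on a 2D image: a path's strength is its weakest link, and each pixel receives the strength of its best path to the seed. The Gaussian intensity-difference affinity, the 4-neighbourhood, the `sigma` parameter, and the function name are assumptions for this toy example, not the tool's actual design.

```python
import heapq

import numpy as np


def fuzzy_connectedness(image, seed, sigma=10.0):
    """Return a map of best-path strength from `seed` to every pixel.

    Path strength is the minimum affinity along the path; the map stores the
    maximum of that value over all paths, found with a Dijkstra-style search.
    Assumption: affinity between 4-neighbours is a Gaussian of their
    intensity difference with width `sigma`.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    conn = np.zeros(img.shape)
    conn[seed] = 1.0
    heap = [(-1.0, seed)]  # max-heap via negated strength
    while heap:
        neg_strength, (r, c) = heapq.heappop(heap)
        strength = -neg_strength
        if strength < conn[r, c]:
            continue  # stale entry: a stronger path was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                diff = img[r, c] - img[nr, nc]
                affinity = float(np.exp(-(diff ** 2) / (2.0 * sigma ** 2)))
                candidate = min(strength, affinity)  # weakest link so far
                if candidate > conn[nr, nc]:
                    conn[nr, nc] = candidate
                    heapq.heappush(heap, (-candidate, (nr, nc)))
    return conn
```

Thresholding the returned map (for example `conn > 0.5`, a hypothetical cut-off) yields a binary object mask, which matches the single-click workflow in spirit: one seed, one map, one threshold.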

Ball-Scale Based Hierarchical Multi-Object Recognition in 3D Medical Images

Feb 05, 2010

Abstract:This paper investigates, using prior shape models and the concept of ball scale (b-scale), ways of automatically recognizing objects in 3D images without performing elaborate searches or optimization. That is, the goal is to place the model in a single shot close to the right pose (position, orientation, and scale) in a given image so that the model boundaries fall in the close vicinity of object boundaries in the image. This is achieved via the following set of key ideas: (a) A semi-automatic way of constructing a multi-object shape model assembly. (b) A novel strategy of encoding, via b-scale, the pose relationship between objects in the training images and their intensity patterns captured in b-scale images. (c) A hierarchical mechanism of positioning the model, in a one-shot way, in a given image from a knowledge of the learnt pose relationship and the b-scale image of the given image to be segmented. The evaluation results on a set of 20 routine clinical abdominal female and male CT data sets indicate the following: (1) Incorporating a large number of objects improves the recognition accuracy dramatically. (2) The recognition algorithm can be thought of as a hierarchical framework such that quick replacement of the model assembly is defined as coarse recognition and delineation itself is known as finest recognition. (3) Scale yields useful information about the relationship between the model assembly and any given image such that the recognition results in a placement of the model close to the actual pose without doing any elaborate searches or optimization. (4) Effective object recognition can make delineation most accurate.

The Influence of Intensity Standardization on Medical Image Registration

Feb 05, 2010

Abstract:Acquisition-to-acquisition signal intensity variations (non-standardness) are inherent in MR images. Standardization is a post-processing method for correcting inter-subject intensity variations through transforming all images from the given image gray scale into a standard gray scale wherein similar intensities achieve similar tissue meanings. The lack of a standard image intensity scale in MRI leads to many difficulties in tissue characterizability, image display, and analysis, including image segmentation. This phenomenon has been documented well; however, effects of standardization on medical image registration have not been studied yet. In this paper, we investigate the influence of intensity standardization in registration tasks with systematic and analytic evaluations involving clinical MR images. We conducted nearly 20,000 clinical MR image registration experiments and evaluated the quality of registrations both quantitatively and qualitatively. The evaluations show that intensity variations between images degrade the accuracy of registration performance. The results imply that the accuracy of image registration depends not only on spatial and geometric similarity but also on the similarity of the intensity values for the same tissues in different images.
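The abstract defines standardization as a transform from each image's gray scale onto a standard scale but does not say which transform was used. One common way to realize such a mapping is a piecewise-linear warp between intensity landmarks, sketched below under stated assumptions: the landmarks are percentiles of the image, the standard-scale landmark values are supplied by the caller (in practice they would be learned from training images), and the function name, default percentiles, and 0 to 4095 target range in the usage note are hypothetical.

```python
import numpy as np

# Hypothetical default landmark percentiles; not taken from the paper.
DEFAULT_PERCENTILES = (1, 10, 20, 30, 40, 50, 60, 70, 80, 90, 99)


def standardize_intensities(image, standard_landmarks,
                            percentiles=DEFAULT_PERCENTILES):
    """Map image intensities onto a standard scale, piecewise linearly.

    Each percentile landmark of `image` is sent to the matching entry of
    `standard_landmarks`; intensities in between are linearly interpolated,
    and intensities beyond the outermost landmarks are clamped to the ends
    of the standard scale (the behaviour of np.interp).
    """
    img = np.asarray(image, dtype=float)
    standard = np.asarray(standard_landmarks, dtype=float)
    if standard.shape != (len(percentiles),):
        raise ValueError("need one standard landmark per percentile")
    image_landmarks = np.percentile(img, percentiles)
    return np.interp(img, image_landmarks, standard)
```

As a usage example, passing `np.linspace(0, 4095, len(DEFAULT_PERCENTILES))` as `standard_landmarks` sends two acquisitions that differ only by a gain and an offset to nearly identical standardized images, which is the property the registration experiments rely on.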
