Indrin Chetty

Iterative Prompt Refinement for Radiation Oncology Symptom Extraction Using Teacher-Student Large Language Models

Feb 06, 2024

Abstract:This study introduces a novel teacher-student architecture utilizing Large Language Models (LLMs) to improve prostate cancer radiotherapy symptom extraction from clinical notes. Mixtral, the student model, initially extracts symptoms, followed by GPT-4, the teacher model, which refines prompts based on Mixtral's performance. This iterative process involved 294 single symptom clinical notes across 12 symptoms, with up to 16 rounds of refinement per epoch. Results showed significant improvements in extracting symptoms from both single and multi-symptom notes. For 59 single symptom notes, accuracy increased from 0.51 to 0.71, precision from 0.52 to 0.82, recall from 0.52 to 0.72, and F1 score from 0.49 to 0.73. In 375 multi-symptom notes, accuracy rose from 0.24 to 0.43, precision from 0.6 to 0.76, recall from 0.24 to 0.43, and F1 score from 0.20 to 0.44. These results demonstrate the effectiveness of advanced prompt engineering in LLMs for radiation oncology use.
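The teacher-student refinement described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: `student` and `teacher` are hypothetical callables standing in for the Mixtral and GPT-4 calls, the toy versions below only mimic their roles with keyword rules, and the "keep a candidate prompt only if accuracy improves" rule is an assumption. Only the cap of 16 rounds comes from the abstract.

```python
from typing import Callable, List, Tuple

Note = Tuple[str, str]  # (note text, gold symptom label)
Error = Tuple[str, str, str]  # (note text, gold label, student prediction)


def accuracy(prompt: str, notes: List[Note], student: Callable[[str, str], str]) -> float:
    """Fraction of notes for which the student's extraction matches the gold label."""
    correct = sum(student(prompt, text) == label for text, label in notes)
    return correct / len(notes)


def refine_prompt(
    prompt: str,
    notes: List[Note],
    student: Callable[[str, str], str],
    teacher: Callable[[str, List[Error]], str],
    max_rounds: int = 16,  # the abstract mentions up to 16 rounds per epoch
) -> Tuple[str, float]:
    """Let the teacher rewrite the prompt from the student's mistakes, round by round."""
    best_prompt, best_acc = prompt, accuracy(prompt, notes, student)
    for _ in range(max_rounds):
        errors = []
        for text, label in notes:
            predicted = student(best_prompt, text)
            if predicted != label:
                errors.append((text, label, predicted))
        if not errors:
            break  # nothing left for the teacher to fix
        candidate = teacher(best_prompt, errors)
        candidate_acc = accuracy(candidate, notes, student)
        if candidate_acc > best_acc:  # assumption: accept only improvements
            best_prompt, best_acc = candidate, candidate_acc
    return best_prompt, best_acc


def toy_student(prompt: str, text: str) -> str:
    """Hypothetical stand-in for the student LLM: follows 'phrase => label' hints in the prompt."""
    for line in prompt.splitlines():
        if "=>" in line:
            phrase, label = line.split("=>")
            if phrase.strip() in text:
                return label.strip()
    return "none"


def toy_teacher(prompt: str, errors: List[Error]) -> str:
    """Hypothetical stand-in for the teacher LLM: adds a hint for the first failed note."""
    text, gold, _ = errors[0]
    return prompt + f"\n{text} => {gold}"
```

Calling `refine_prompt("Extract the symptom.", notes, toy_student, toy_teacher)` on a small list of labeled notes returns the refined prompt together with its accuracy on those notes. In a real setting the two callables would wrap model API calls, and the evaluation would use held-out notes rather than the notes used for refinement.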

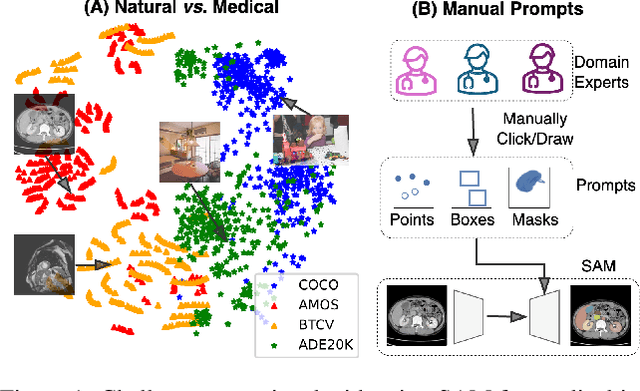

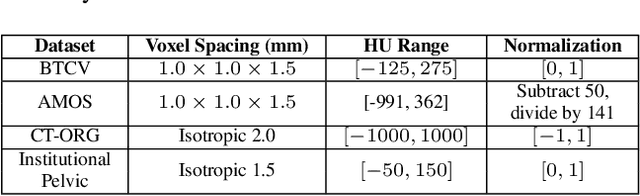

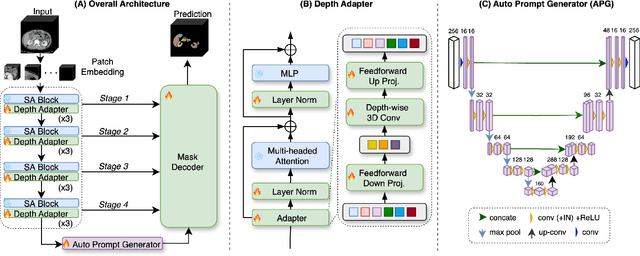

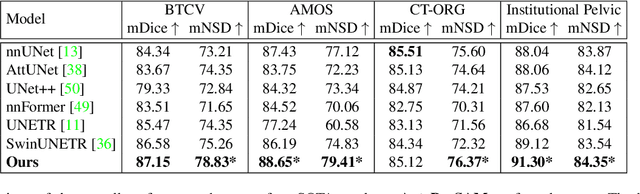

Auto-Prompting SAM for Mobile Friendly 3D Medical Image Segmentation

Aug 28, 2023

Abstract:The Segment Anything Model (SAM) has rapidly been adopted for segmenting a wide range of natural images. However, recent studies have indicated that SAM exhibits subpar performance on 3D medical image segmentation tasks. In addition to the domain gaps between natural and medical images, disparities in the spatial arrangement between 2D and 3D images, the substantial computational burden imposed by powerful GPU servers, and the time-consuming manual prompt generation impede the extension of SAM to a broader spectrum of medical image segmentation applications. To address these challenges, in this work, we introduce a novel method, AutoSAM Adapter, designed specifically for 3D multi-organ CT-based segmentation. We employ parameter-efficient adaptation techniques in developing an automatic prompt learning paradigm to facilitate the transformation of the SAM model's capabilities to 3D medical image segmentation, eliminating the need for manually generated prompts. Furthermore, we effectively transfer the acquired knowledge of the AutoSAM Adapter to other lightweight models specifically tailored for 3D medical image analysis, achieving state-of-the-art (SOTA) performance on medical image segmentation tasks. Through extensive experimental evaluation, we demonstrate the AutoSAM Adapter as a critical foundation for effectively leveraging the emerging ability of foundation models in 2D natural image segmentation for 3D medical image segmentation.

Prostate Cancer Malignancy Detection and localization from mpMRI using auto-Deep Learning: One Step Closer to Clinical Utilization

Jun 13, 2022

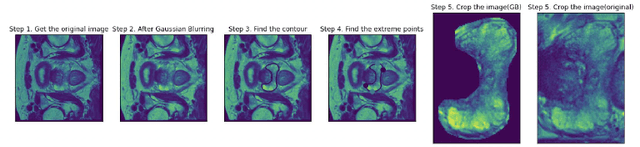

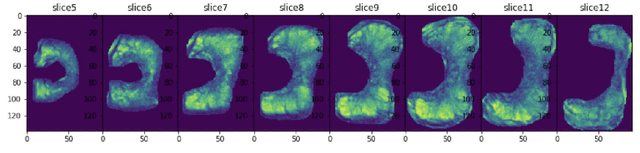

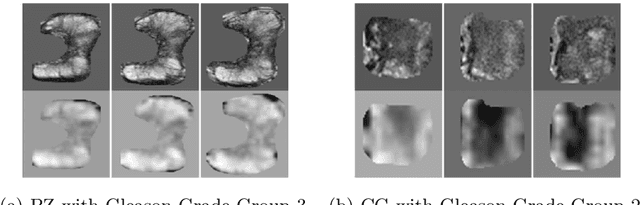

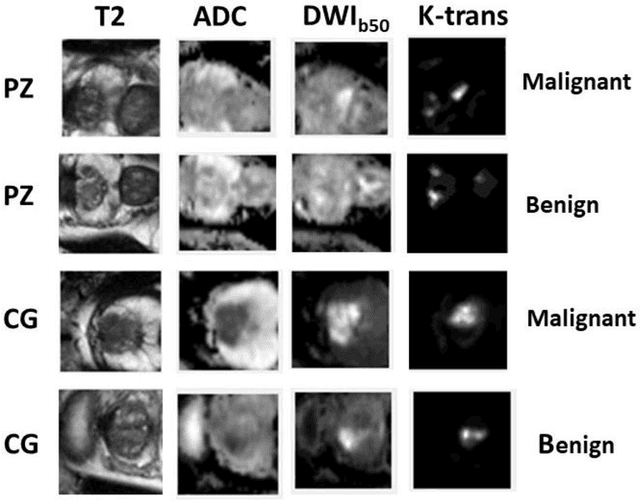

Abstract:Automatic diagnosis of malignant prostate cancer patients from mpMRI has been studied heavily in the past years. Model interpretation and domain drift have been the main roadblocks to clinical utilization. This work extends our previous study, in which a customized convolutional neural network was trained on a public cohort of 201 patients with cropped 2D patches around the region of interest as the input. Here, cropped 2.5D slices of the prostate gland were used as the input, and the optimal model was searched for in the model space using AutoKeras. A further difference is that the peripheral zone (PZ) and central gland (CG) were trained and tested separately. The PZ detector and CG detector proved effective in highlighting the most suspicious slices out of a sequence, which could greatly ease the workload of physicians.
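The abstract does not define how the 2.5D slices were built. One common reading of "2.5D" is that each slice is stacked with its neighbors along a channel axis, and the sketch below illustrates only that reading. The function name `make_25d_slices`, the `context` parameter, and the edge padding at the ends of the volume are all assumptions made for illustration.

```python
import numpy as np


def make_25d_slices(volume: np.ndarray, context: int = 1) -> np.ndarray:
    """Stack each slice with its `context` neighbors on both sides.

    volume: array of shape (depth, height, width).
    Returns an array of shape (depth, 2 * context + 1, height, width),
    where entry [i, context] is the original slice i.
    """
    # Assumption: repeat the first and last slice so border slices keep a full stack.
    padded = np.pad(volume, ((context, context), (0, 0), (0, 0)), mode="edge")
    window = 2 * context + 1
    return np.stack([padded[i:i + window] for i in range(volume.shape[0])])
```

Each stacked sample can then be fed to a 2D network as a multi-channel image, which gives the model some through-plane context without the cost of a full 3D convolution.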

A Deep Dive into Understanding Tumor Foci Classification using Multiparametric MRI Based on Convolutional Neural Network

Apr 04, 2019

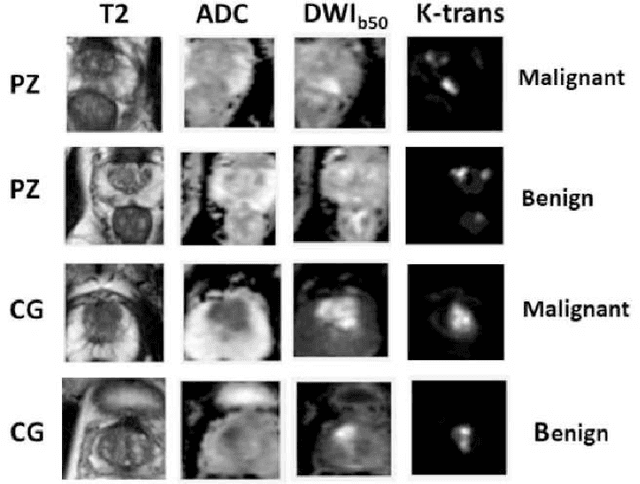

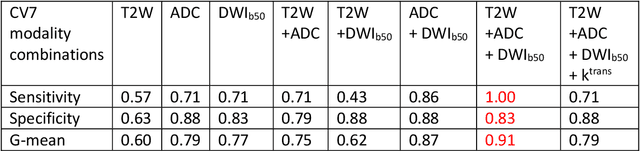

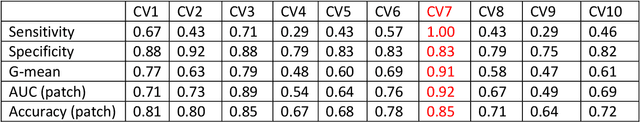

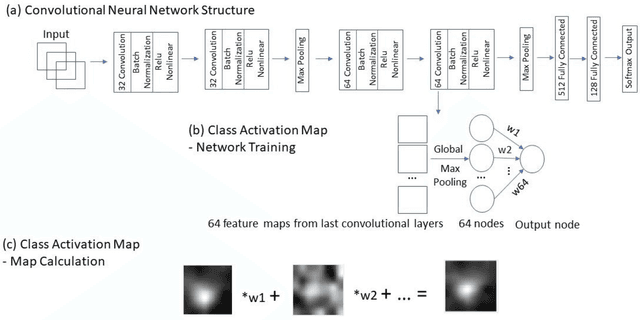

Abstract:Data scarcity has held back deep learning models from making greater progress in prostate image analysis using multiparametric MRI. In this paper, an efficient convolutional neural network (CNN) was developed to classify lesion malignancy for prostate cancer patients. Based on this network, model interpretation was systematically analyzed to bridge the gap between natural images and MR images, which is the first analysis of its kind in the literature. The problem of small sample size was addressed by feeding the intermediate features into a traditional classification algorithm known as the weighted extreme learning machine, which takes the imbalanced distribution among output categories into consideration. The model trained on a public data set was able to generalize to data from an independent institution and make accurate predictions. The generated saliency map was found to overlay well with the lesion and could benefit clinicians for diagnostic purposes.
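The weighted extreme learning machine mentioned above can be sketched in a few lines of NumPy. This is a generic illustration under stated assumptions, not the paper's code: `X` stands in for the intermediate CNN features, the per-sample weight of one over the class count is one standard weighting scheme for imbalanced data and may differ from the one the authors used, and `n_hidden`, `C`, and `seed` are illustrative parameters.

```python
import numpy as np


def _hidden(X: np.ndarray, W_in: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Random-feature hidden layer with a sigmoid activation."""
    return 1.0 / (1.0 + np.exp(-(X @ W_in + b)))


def train_weighted_elm(X, y, n_hidden=50, C=100.0, seed=0):
    """Fit output weights in closed form; hidden weights stay random and fixed."""
    rng = np.random.default_rng(seed)
    classes = np.unique(y)
    W_in = rng.normal(size=(X.shape[1], n_hidden))
    b = rng.normal(size=n_hidden)
    H = _hidden(X, W_in, b)

    # Targets are +1 for the true class and -1 elsewhere.
    T = np.where(y[:, None] == classes[None, :], 1.0, -1.0)

    # Assumed weighting: each sample counts 1 / (size of its class),
    # so minority classes are not swamped by the majority.
    counts = {c: np.sum(y == c) for c in classes}
    w = np.array([1.0 / counts[c] for c in y])

    # Weighted ridge solution: beta = (I / C + H^T W H)^{-1} H^T W T
    HtW = H.T * w  # same as H.T @ diag(w)
    beta = np.linalg.solve(np.eye(n_hidden) / C + HtW @ H, HtW @ T)
    return W_in, b, beta, classes


def predict_weighted_elm(model, X):
    """Return the class whose output score is largest."""
    W_in, b, beta, classes = model
    return classes[np.argmax(_hidden(X, W_in, b) @ beta, axis=1)]
```

Because only the output weights `beta` are learned, and in closed form, the classifier has few free parameters to fit, which is why this family of methods is attractive when labeled samples are scarce.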
