Eric Carver

Prostate Cancer Malignancy Detection and localization from mpMRI using auto-Deep Learning: One Step Closer to Clinical Utilization

Jun 13, 2022

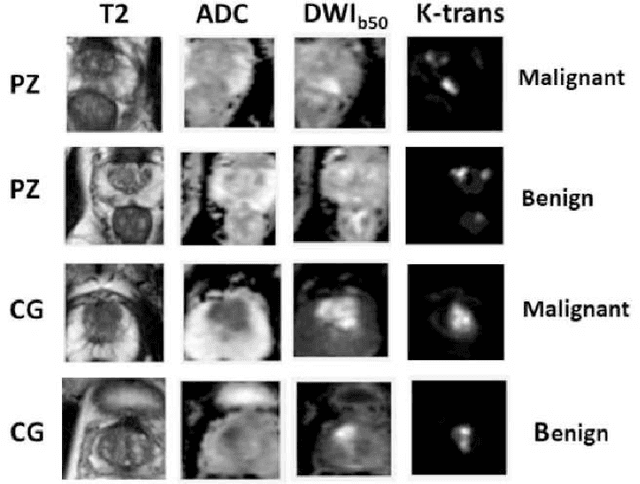

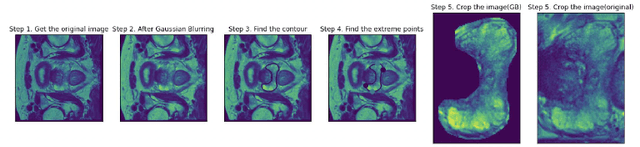

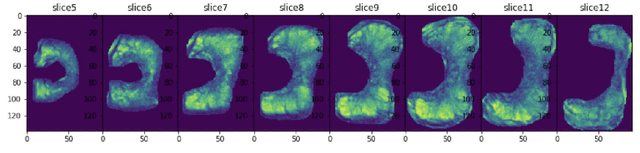

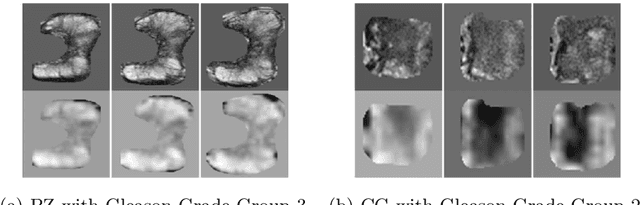

Abstract: Automatic diagnosis of malignant prostate cancer from mpMRI has been studied heavily in recent years. Model interpretation and domain drift have been the main roadblocks to clinical utilization. This work extends our previous study, in which we trained a customized convolutional neural network on a public cohort of 201 patients using cropped 2D patches around the region of interest as the input. Here, cropped 2.5D slices of the prostate gland were used as the input, and the optimal model was searched for in the model space using AutoKeras. In addition, models for the peripheral zone (PZ) and central gland (CG) were trained and tested separately. The PZ detector and CG detector were shown to be effective at highlighting the most suspicious slices in a sequence, which could greatly ease the workload for physicians.
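The abstract does not spell out how the 2.5D input is built. One common reading is that each slice is stacked with its neighbouring slices as extra channels. The sketch below follows that assumption; the function name `make_2p5d_slices`, the `context` parameter, and the edge-padding choice are all hypothetical and not taken from the paper.

```python
import numpy as np

def make_2p5d_slices(volume, context=1):
    """Turn a (depth, height, width) volume into 2.5D samples.

    Each output sample holds a slice plus `context` neighbours on each
    side as channels, giving shape (depth, height, width, 2*context + 1).
    Edge slices are padded by repeating the first/last slice (an assumption).
    """
    volume = np.asarray(volume)
    depth = volume.shape[0]
    # Repeat the boundary slices so every slice has a full neighbourhood.
    padded = np.pad(volume, ((context, context), (0, 0), (0, 0)), mode="edge")
    # Channel i holds the slice at offset (i - context) from the centre slice.
    channels = [padded[i:i + depth] for i in range(2 * context + 1)]
    return np.stack(channels, axis=-1)
```

With `context=1`, the middle channel is the slice itself and the outer channels are the slices directly below and above it, so a 2D network sees a small amount of through-plane context.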

Improvement of Multiparametric MR Image Segmentation by Augmenting the Data with Generative Adversarial Networks for Glioma Patients

Oct 01, 2019

Abstract: Every year, thousands of patients are diagnosed with a glioma, a type of malignant brain tumor. Physicians use MR images as a key tool in the diagnosis and treatment of these patients. Neural networks show great potential to aid physicians in medical image analysis. This study investigates the use of varying amounts of synthetic brain T1-weighted (T1), post-contrast T1-weighted (T1Gd), T2-weighted (T2), and T2 Fluid Attenuated Inversion Recovery (FLAIR) MR images, created by a generative adversarial network (GAN), to overcome the lack of annotated medical image data when training separate 2D U-Nets to segment the enhancing tumor, peritumoral edema, and necrosis (non-enhancing tumor core) regions of gliomas. The synthetic MR images were assessed quantitatively (SSIM=0.79) and qualitatively by a physician, who found that the synthetic images seem stronger at delineating structural boundaries but struggle more where the signal gradient is significant (e.g., the edema signal in T2 modalities). Multiple 2D U-Nets were trained with the original BraTS data plus differing subsets (a quarter, half, three-quarters, and all) of the synthetic MR images. Although there was no obvious correlation between the amount of synthetic data added and the improvement in the metric values on a separate validation dataset for each structure, there was a strong correlation between the amount of synthetic data added and the number of best overall validation metrics. In summary, this study demonstrated the ability to generate high-quality synthetic FLAIR, T2, T1, and T1Gd MR images using the GAN. Using the synthetic MR images showed encouraging results for improving U-Net segmentation performance, which has the potential to address the scarcity of readily available medical images.
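To make the SSIM figure quoted above more concrete, here is a minimal sketch of the structural similarity index computed over a whole image at once. This is a simplification: standard SSIM implementations average the same expression over local sliding windows, and the abstract does not say which variant or settings the authors used. The function name `global_ssim` is hypothetical, and the constants `k1=0.01` and `k2=0.03` are the commonly used defaults, assumed here.

```python
import numpy as np

def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two images of equal shape.

    Simplified variant: statistics are taken over the whole image rather
    than over local windows, so values will differ from windowed SSIM.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    # Small constants that stabilise the ratio when means/variances are near zero.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical images score 1, and the score drops as luminance, contrast, or structure diverge between the real and synthetic image.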

A Deep Dive into Understanding Tumor Foci Classification using Multiparametric MRI Based on Convolutional Neural Network

Apr 04, 2019

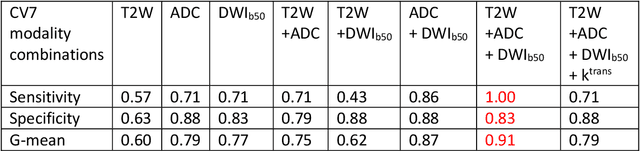

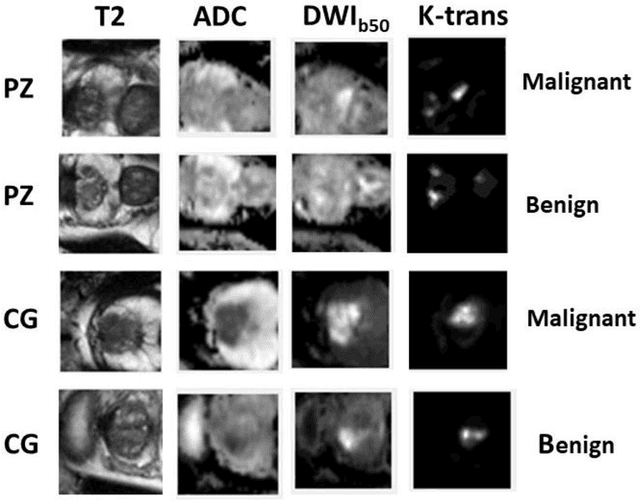

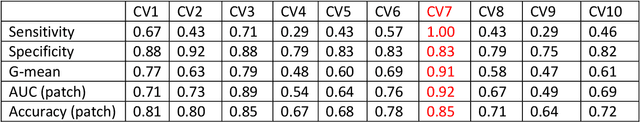

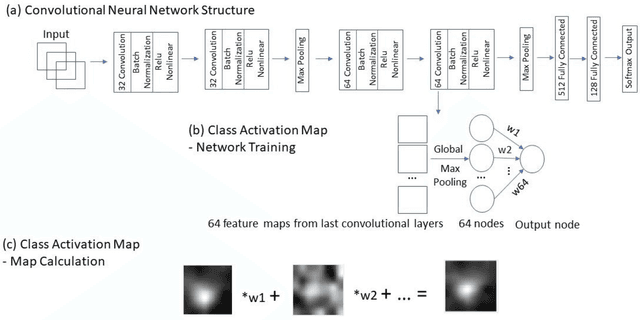

Abstract: Data scarcity has held back deep learning models from making greater progress in prostate image analysis using multiparametric MRI. In this paper, an efficient convolutional neural network (CNN) was developed to classify lesion malignancy for prostate cancer patients. Based on this network, model interpretation was systematically analyzed to bridge the gap between natural images and MR images, which is the first analysis of its kind in the literature. The problem of small sample size was addressed by feeding the intermediate features into a traditional classification algorithm known as the weighted extreme learning machine, which takes the imbalanced distribution among output categories into consideration. The model trained on a public data set was able to generalize to data from an independent institution and make accurate predictions. The generated saliency map was found to overlay well with the lesion and could benefit clinicians for diagnostic purposes.
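As a rough illustration of the weighted extreme learning machine idea mentioned above, the sketch below fits a random, untrained hidden layer and solves for the output weights in closed form, weighting each sample by the inverse of its class count so that minority classes are not swamped. This is a generic textbook-style formulation, not the authors' implementation: the function names, the sigmoid activation, the hidden size, and the regularisation value `reg_c` are all assumptions, and in the paper the inputs would be intermediate CNN features rather than raw vectors.

```python
import numpy as np

def _hidden(features, w_in, bias):
    # Sigmoid activation of a fixed random projection.
    return 1.0 / (1.0 + np.exp(-(features @ w_in + bias)))

def train_weighted_elm(features, labels, n_hidden=64, reg_c=1.0, seed=0):
    """Fit a weighted ELM; returns a dict holding the model parameters."""
    rng = np.random.default_rng(seed)
    features = np.asarray(features, dtype=np.float64)
    classes, inverse, counts = np.unique(
        labels, return_inverse=True, return_counts=True)
    # Hidden-layer weights are drawn once and never trained.
    w_in = rng.normal(size=(features.shape[1], n_hidden))
    bias = rng.normal(size=n_hidden)
    hidden = _hidden(features, w_in, bias)
    # One-vs-all targets coded as +1 / -1.
    targets = np.where(inverse[:, None] == np.arange(len(classes)), 1.0, -1.0)
    # Per-sample weight = 1 / (size of the sample's class).
    sample_w = 1.0 / counts[inverse]
    weighted_hidden = hidden * sample_w[:, None]
    # Closed form: beta = (I / C + H^T W H)^{-1} H^T W T
    lhs = np.eye(n_hidden) / reg_c + hidden.T @ weighted_hidden
    beta = np.linalg.solve(lhs, weighted_hidden.T @ targets)
    return {"w_in": w_in, "bias": bias, "beta": beta, "classes": classes}

def predict_weighted_elm(model, features):
    """Predict the class with the largest output score."""
    features = np.asarray(features, dtype=np.float64)
    scores = _hidden(features, model["w_in"], model["bias"]) @ model["beta"]
    return model["classes"][np.argmax(scores, axis=1)]
```

Because only the output weights are solved for, training needs no gradient descent, which is one reason this kind of classifier is attractive when few labelled samples are available.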

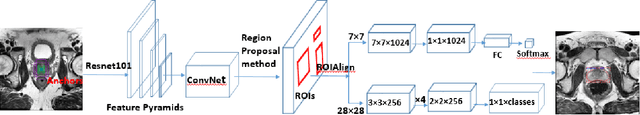

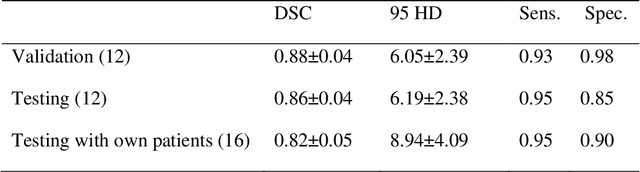

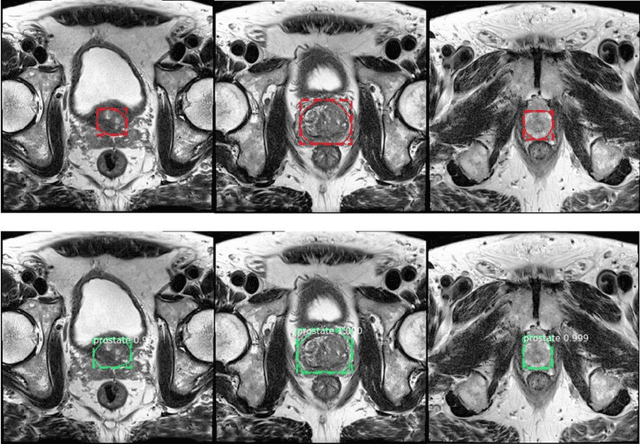

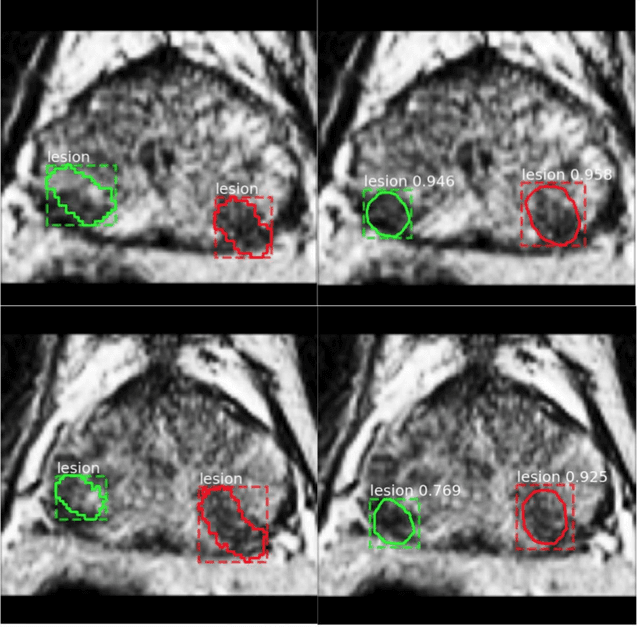

Segmentation of the Prostatic Gland and the Intraprostatic Lesions on Multiparametic MRI Using Mask-RCNN

Apr 04, 2019

Abstract: Prostate cancer (PCa) is the most common cancer in men in the United States. Multiparametric magnetic resonance imaging (mp-MRI) has been explored by many researchers to guide targeted prostate biopsies and radiation therapy. However, assessment of mp-MRI can be subjective, so the development of computer-aided diagnosis systems that automatically delineate the prostate gland and the intraprostatic lesions (ILs) is important for assisting radiologists in clinical practice. In this paper, we first study the implementation of the Mask-RCNN model to segment the prostate and ILs. We trained and evaluated models on 120 patients from two different cohorts. We also used 2D U-Net and 3D U-Net as benchmarks for prostate segmentation and compared the models' performance. The contour variability of ILs produced by the algorithm was also benchmarked against the interobserver variability between two different radiation oncologists on 19 patients. Our results indicate that the Mask-RCNN model is able to reach state-of-the-art performance in prostate segmentation and outperforms several competitive baselines in IL segmentation.
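The abstract does not name the metric used to compare contours. A widely used choice for comparing a model's segmentation with an observer's, or two observers with each other, is the Dice similarity coefficient, so the sketch below assumes that metric purely for illustration. The function name `dice_coefficient` and the convention of returning 1.0 for two empty masks are assumptions.

```python
import numpy as np

def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two binary masks of the same shape.

    Returns a value in [0, 1]; 1 means identical masks.
    Two empty masks are treated as perfect agreement (an assumption).
    """
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / total
```

Computing the same score for algorithm-versus-observer and observer-versus-observer pairs puts the model's contour variability on the same scale as interobserver variability.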
