Ian Langmore

Moment Expansions of the Energy Distance

May 27, 2025

Abstract: The energy distance is used to test distributional equality, and as a loss function in machine learning. While $D^2(X, Y)=0$ only when $X\sim Y$, the sensitivity to different moments is of practical importance. This work considers $D^2(X, Y)$ in the case where the distributions are close. In this regime, $D^2(X, Y)$ is more sensitive to differences in the means $\bar{X}-\bar{Y}$, than differences in the covariances $\Delta$. This is due to the structure of the energy distance and is independent of dimension. The sensitivity to on versus off diagonal components of $\Delta$ is examined when $X$ and $Y$ are close to isotropic. Here a dimension dependent averaging occurs and, in many cases, off diagonal correlations contribute significantly less. Numerical results verify these relationships hold even when distributional assumptions are not strictly met.
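The abstract above does not spell out the definition of $D^2(X, Y)$. As a rough illustration only, the sketch below assumes the standard form $D^2(X, Y) = 2\,E\|X-Y\| - E\|X-X'\| - E\|Y-Y'\|$ and estimates it from samples with a plain V-statistic. The helper names (`mean_pairwise_distance`, `energy_distance_sq`, `gaussian_sample`) and the sample sizes, shift, and scale are hypothetical choices for this example and are not taken from the paper.

```python
import math
import random


def mean_pairwise_distance(a, b):
    """Average Euclidean distance over all pairs (x in a, y in b)."""
    total = sum(math.dist(x, y) for x in a for y in b)
    return total / (len(a) * len(b))


def energy_distance_sq(xs, ys):
    """V-statistic estimate of 2 E|X-Y| - E|X-X'| - E|Y-Y'| (assumed definition)."""
    return (2.0 * mean_pairwise_distance(xs, ys)
            - mean_pairwise_distance(xs, xs)
            - mean_pairwise_distance(ys, ys))


def gaussian_sample(n, dim, mean, scale, rng):
    """Draw n points with i.i.d. N(mean, scale^2) coordinates (illustrative data)."""
    return [[rng.gauss(mean, scale) for _ in range(dim)] for _ in range(n)]


rng = random.Random(0)
base = gaussian_sample(200, 3, 0.0, 1.0, rng)
shifted = gaussian_sample(200, 3, 0.5, 1.0, rng)   # differs in the mean
rescaled = gaussian_sample(200, 3, 0.0, 1.5, rng)  # differs in the covariance

d_same = energy_distance_sq(base, base)      # a sample against itself
d_shift = energy_distance_sq(base, shifted)
d_scale = energy_distance_sq(base, rescaled)
print(d_same, d_shift, d_scale)
```

Comparing `d_shift` and `d_scale` for small perturbations is one informal way to explore the mean-versus-covariance sensitivity the abstract describes. The V-statistic used here is biased upward for finite samples, so the printed values are indicative only.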

Neural general circulation models optimized to predict satellite-based precipitation observations

Dec 16, 2024

Abstract: Climate models struggle to accurately simulate precipitation, particularly extremes and the diurnal cycle. Here, we present a hybrid model that is trained directly on satellite-based precipitation observations. Our model runs at 2.8$^\circ$ resolution and is built on the differentiable NeuralGCM framework. The model demonstrates significant improvements over existing general circulation models, the ERA5 reanalysis, and a global cloud-resolving model in simulating precipitation. Our approach yields reduced biases, a more realistic precipitation distribution, improved representation of extremes, and a more accurate diurnal cycle. Furthermore, it outperforms the mid-range precipitation forecast of the ECMWF ensemble. This advance paves the way for more reliable simulations of current climate and demonstrates how training on observations can be used to directly improve GCMs.

Neural General Circulation Models

Nov 28, 2023

Abstract: General circulation models (GCMs) are the foundation of weather and climate prediction. GCMs are physics-based simulators which combine a numerical solver for large-scale dynamics with tuned representations for small-scale processes such as cloud formation. Recently, machine learning (ML) models trained on reanalysis data achieved comparable or better skill than GCMs for deterministic weather forecasting. However, these models have not demonstrated improved ensemble forecasts, or shown sufficient stability for long-term weather and climate simulations. Here we present the first GCM that combines a differentiable solver for atmospheric dynamics with ML components, and show that it can generate forecasts of deterministic weather, ensemble weather and climate on par with the best ML and physics-based methods. NeuralGCM is competitive with ML models for 1-10 day forecasts, and with the European Centre for Medium-Range Weather Forecasts ensemble prediction for 1-15 day forecasts. With prescribed sea surface temperature, NeuralGCM can accurately track climate metrics such as global mean temperature for multiple decades, and climate forecasts with 140 km resolution exhibit emergent phenomena such as realistic frequency and trajectories of tropical cyclones. For both weather and climate, our approach offers orders of magnitude computational savings over conventional GCMs. Our results show that end-to-end deep learning is compatible with tasks performed by conventional GCMs, and can enhance the large-scale physical simulations that are essential for understanding and predicting the Earth system.

WeatherBench 2: A benchmark for the next generation of data-driven global weather models

Aug 29, 2023

Abstract: WeatherBench 2 is an update to the global, medium-range (1-14 day) weather forecasting benchmark proposed by Rasp et al. (2020), designed with the aim to accelerate progress in data-driven weather modeling. WeatherBench 2 consists of an open-source evaluation framework, publicly available training, ground truth and baseline data as well as a continuously updated website with the latest metrics and state-of-the-art models: https://sites.research.google/weatherbench. This paper describes the design principles of the evaluation framework and presents results for current state-of-the-art physical and data-driven weather models. The metrics are based on established practices for evaluating weather forecasts at leading operational weather centers. We define a set of headline scores to provide an overview of model performance. In addition, we also discuss caveats in the current evaluation setup and challenges for the future of data-driven weather forecasting.

tfp.mcmc: Modern Markov Chain Monte Carlo Tools Built for Modern Hardware

Feb 04, 2020

Abstract: Markov chain Monte Carlo (MCMC) is widely regarded as one of the most important algorithms of the 20th century. Its guarantees of asymptotic convergence, stability, and estimator-variance bounds using only unnormalized probability functions make it indispensable to probabilistic programming. In this paper, we introduce the TensorFlow Probability MCMC toolkit, and discuss some of the considerations that motivated its design.

Constructing High Precision Knowledge Bases with Subjective and Factual Attributes

Jun 13, 2019

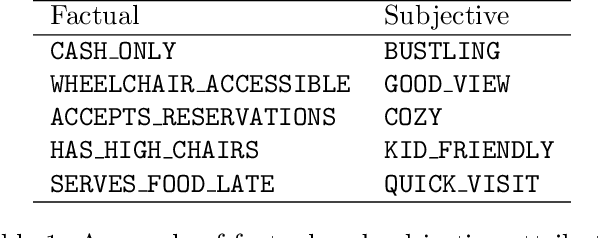

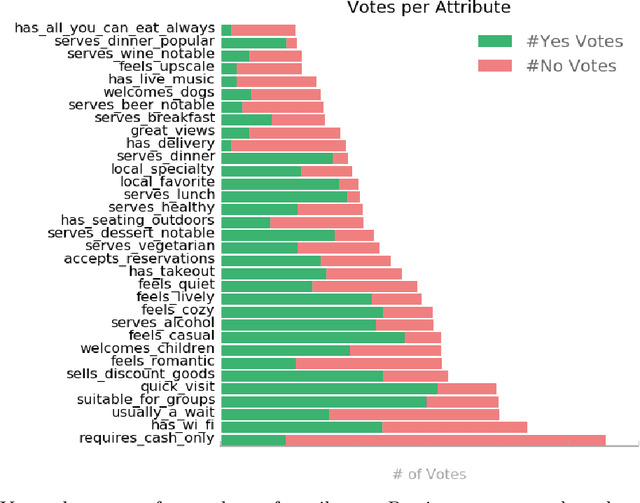

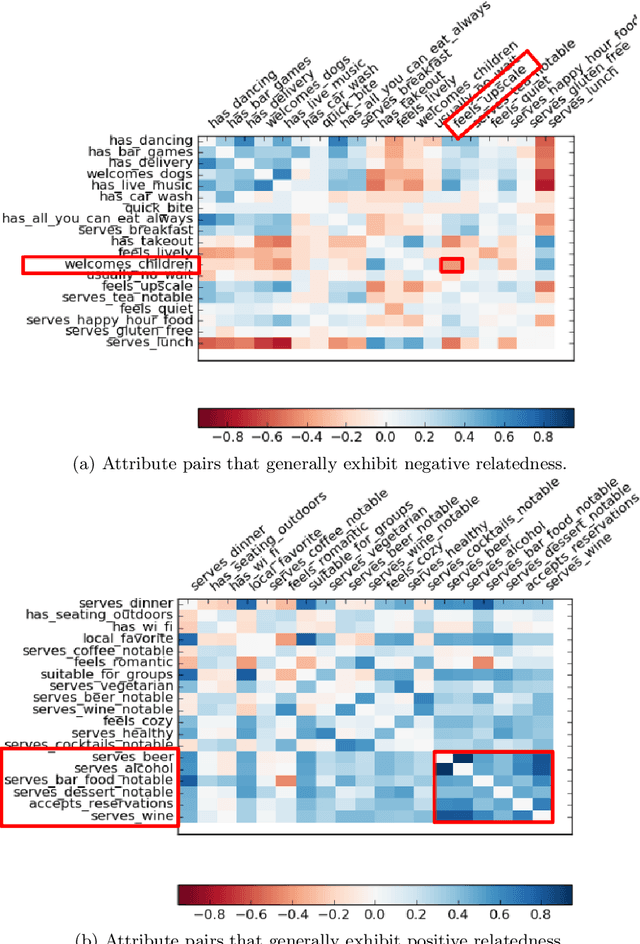

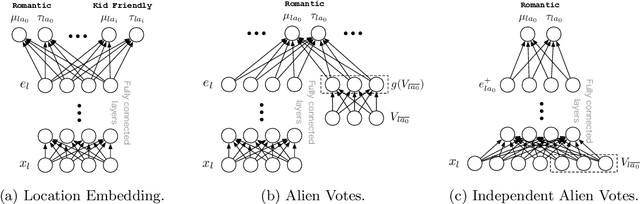

Abstract: Knowledge bases (KBs) are the backbone of many ubiquitous applications and are thus required to exhibit high precision. However, for KBs that store subjective attributes of entities, e.g., whether a movie is "kid friendly", simply estimating precision is complicated by the inherent ambiguity in measuring subjective phenomena. In this work, we develop a method for constructing KBs with tunable precision--i.e., KBs that can be made to operate at a specific false positive rate, despite storing both difficult-to-evaluate subjective attributes and more traditional factual attributes. The key to our approach is probabilistically modeling user consensus with respect to each entity-attribute pair, rather than modeling each pair as either True or False. Uncertainty in the model is explicitly represented and used to control the KB's precision. We propose three neural networks for fitting the consensus model and evaluate each one on data from Google Maps--a large KB of locations and their subjective and factual attributes. The results demonstrate that our learned models are well-calibrated and thus can successfully be used to control the KB's precision. Moreover, when constrained to maintain 95% precision, the best consensus model matches the F-score of a baseline that models each entity-attribute pair as a binary variable and does not support tunable precision. When unconstrained, our model dominates the same baseline by 12% F-score. Finally, we perform an empirical analysis of attribute-attribute correlations and show that leveraging them effectively contributes to reduced uncertainty and better performance in attribute prediction.

NeuTra-lizing Bad Geometry in Hamiltonian Monte Carlo Using Neural Transport

Mar 09, 2019

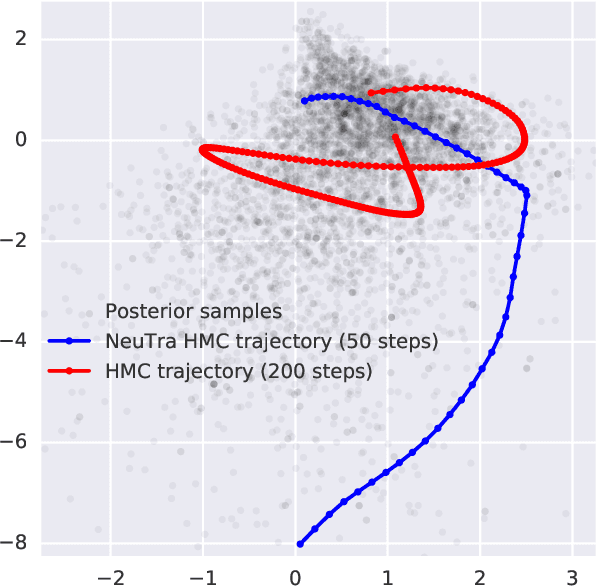

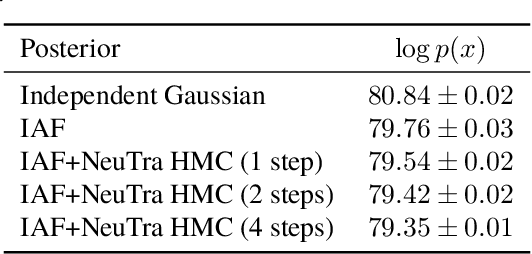

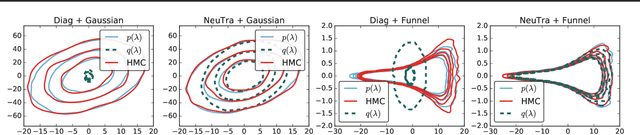

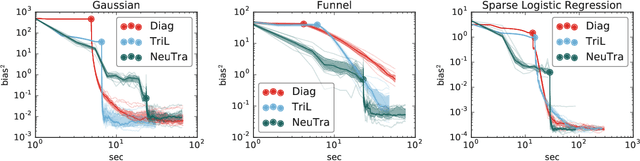

Abstract: Hamiltonian Monte Carlo is a powerful algorithm for sampling from difficult-to-normalize posterior distributions. However, when the geometry of the posterior is unfavorable, it may take many expensive evaluations of the target distribution and its gradient to converge and mix. We propose neural transport (NeuTra) HMC, a technique for learning to correct this sort of unfavorable geometry using inverse autoregressive flows (IAF), a powerful neural variational inference technique. The IAF is trained to minimize the KL divergence from an isotropic Gaussian to the warped posterior, and then HMC sampling is performed in the warped space. We evaluate NeuTra HMC on a variety of synthetic and real problems, and find that it significantly outperforms vanilla HMC both in time to reach the stationary distribution and asymptotic effective-sample-size rates.
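For readers unfamiliar with the baseline this abstract refers to, here is a minimal sketch of plain ("vanilla") HMC on a one-dimensional standard normal target. It is not NeuTra and contains no flow or warping step; it only shows where the target density and its gradient are evaluated. The function names, step size, and number of leapfrog steps are illustrative assumptions, not values from the paper.

```python
import math
import random


def log_density(q):
    """Unnormalized log density of the (assumed) standard normal target."""
    return -0.5 * q * q


def grad_log_density(q):
    """Gradient of log_density with respect to q."""
    return -q


def hmc_step(q, step_size, num_leapfrog, rng):
    """One HMC transition: resample momentum, leapfrog, Metropolis accept/reject."""
    p = rng.gauss(0.0, 1.0)
    q_new, p_new = q, p
    # Leapfrog integration: half momentum step, alternating full steps, half step.
    p_new += 0.5 * step_size * grad_log_density(q_new)
    for i in range(num_leapfrog):
        q_new += step_size * p_new
        if i < num_leapfrog - 1:
            p_new += step_size * grad_log_density(q_new)
    p_new += 0.5 * step_size * grad_log_density(q_new)
    # Hamiltonian = potential energy (-log density) + kinetic energy.
    h_old = -log_density(q) + 0.5 * p * p
    h_new = -log_density(q_new) + 0.5 * p_new * p_new
    accept_prob = math.exp(min(0.0, h_old - h_new))
    if rng.random() < accept_prob:
        return q_new, True
    return q, False


rng = random.Random(0)
q, samples, accepted = 3.0, [], 0
for step in range(2200):
    q, ok = hmc_step(q, step_size=0.2, num_leapfrog=8, rng=rng)
    if step >= 200:  # discard a short burn-in
        samples.append(q)
        accepted += ok

sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
accept_rate = accepted / len(samples)
print(sample_mean, sample_var, accept_rate)
```

Each transition costs `num_leapfrog` gradient evaluations, which is why poorly conditioned targets that force a small `step_size` become expensive. That cost is the motivation the abstract gives for sampling in a warped space instead.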

TensorFlow Distributions

Nov 28, 2017

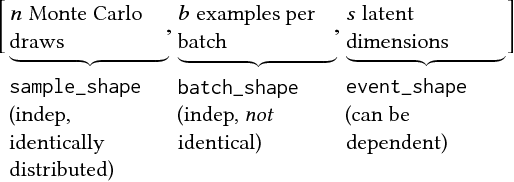

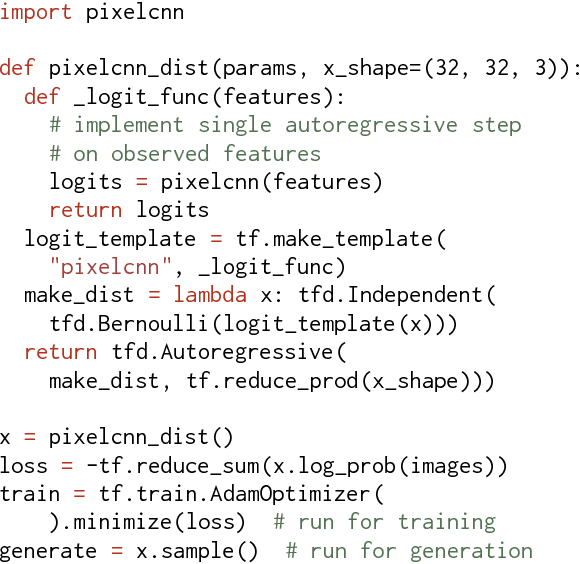

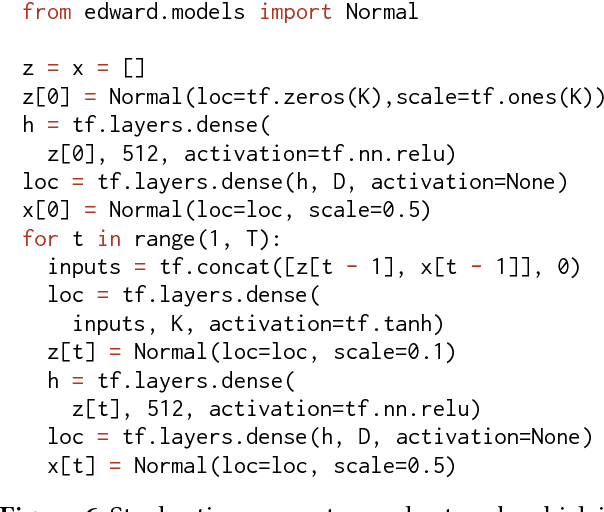

Abstract: The TensorFlow Distributions library implements a vision of probability theory adapted to the modern deep-learning paradigm of end-to-end differentiable computation. Building on two basic abstractions, it offers flexible building blocks for probabilistic computation. Distributions provide fast, numerically stable methods for generating samples and computing statistics, e.g., log density. Bijectors provide composable volume-tracking transformations with automatic caching. Together these enable modular construction of high dimensional distributions and transformations not possible with previous libraries (e.g., pixelCNNs, autoregressive flows, and reversible residual networks). They are the workhorse behind deep probabilistic programming systems like Edward and empower fast black-box inference in probabilistic models built on deep-network components. TensorFlow Distributions has proven an important part of the TensorFlow toolkit within Google and in the broader deep learning community.