Stephan Rasp

Neural General Circulation Models

Nov 28, 2023

Abstract:General circulation models (GCMs) are the foundation of weather and climate prediction. GCMs are physics-based simulators which combine a numerical solver for large-scale dynamics with tuned representations for small-scale processes such as cloud formation. Recently, machine learning (ML) models trained on reanalysis data achieved comparable or better skill than GCMs for deterministic weather forecasting. However, these models have not demonstrated improved ensemble forecasts, or shown sufficient stability for long-term weather and climate simulations. Here we present the first GCM that combines a differentiable solver for atmospheric dynamics with ML components, and show that it can generate forecasts of deterministic weather, ensemble weather and climate on par with the best ML and physics-based methods. NeuralGCM is competitive with ML models for 1-10 day forecasts, and with the European Centre for Medium-Range Weather Forecasts ensemble prediction for 1-15 day forecasts. With prescribed sea surface temperature, NeuralGCM can accurately track climate metrics such as global mean temperature for multiple decades, and climate forecasts with 140 km resolution exhibit emergent phenomena such as realistic frequency and trajectories of tropical cyclones. For both weather and climate, our approach offers orders of magnitude computational savings over conventional GCMs. Our results show that end-to-end deep learning is compatible with tasks performed by conventional GCMs, and can enhance the large-scale physical simulations that are essential for understanding and predicting the Earth system.

WeatherBench 2: A benchmark for the next generation of data-driven global weather models

Aug 29, 2023

Abstract:WeatherBench 2 is an update to the global, medium-range (1-14 day) weather forecasting benchmark proposed by Rasp et al. (2020), designed with the aim to accelerate progress in data-driven weather modeling. WeatherBench 2 consists of an open-source evaluation framework, publicly available training, ground truth and baseline data as well as a continuously updated website with the latest metrics and state-of-the-art models: https://sites.research.google/weatherbench. This paper describes the design principles of the evaluation framework and presents results for current state-of-the-art physical and data-driven weather models. The metrics are based on established practices for evaluating weather forecasts at leading operational weather centers. We define a set of headline scores to provide an overview of model performance. In addition, we also discuss caveats in the current evaluation setup and challenges for the future of data-driven weather forecasting.

WeatherBench Probability: A benchmark dataset for probabilistic medium-range weather forecasting along with deep learning baseline models

May 02, 2022

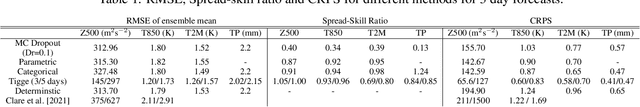

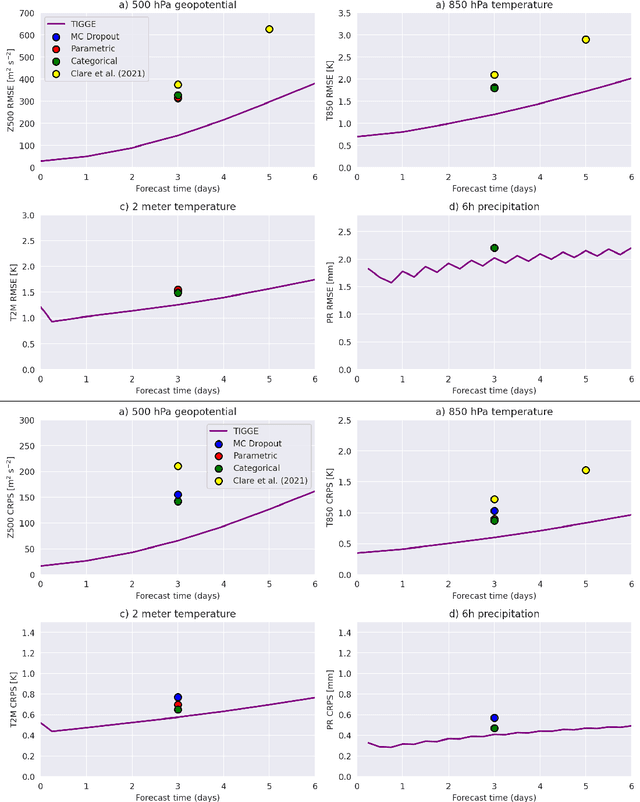

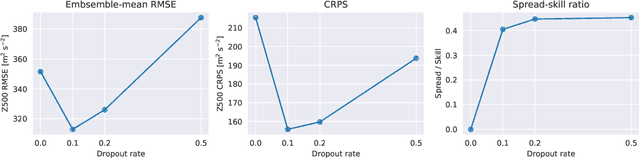

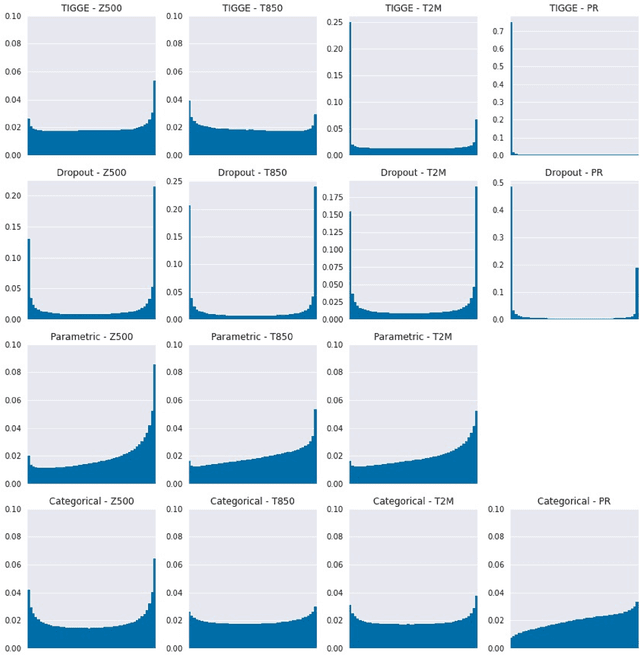

Abstract:WeatherBench is a benchmark dataset for medium-range weather forecasting of geopotential, temperature and precipitation, consisting of preprocessed data, predefined evaluation metrics and a number of baseline models. WeatherBench Probability extends this to probabilistic forecasting by adding a set of established probabilistic verification metrics (continuous ranked probability score, spread-skill ratio and rank histograms) and a state-of-the-art operational baseline using the ECMWF IFS ensemble forecast. In addition, we test three different probabilistic machine learning methods -- Monte Carlo dropout, parametric prediction and categorical prediction, in which the probability distribution is discretized. We find that plain Monte Carlo dropout severely underestimates uncertainty. The parametric and categorical models both produce fairly reliable forecasts of similar quality. The parametric models have fewer degrees of freedom while the categorical model is more flexible when it comes to predicting non-Gaussian distributions. None of the models are able to match the skill of the operational IFS model. We hope that this benchmark will enable other researchers to evaluate their probabilistic approaches.
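
As a rough illustration of two of the verification metrics named in this abstract, the sketch below computes the empirical continuous ranked probability score of an ensemble and the rank of the observation used to build a rank histogram. The function names are hypothetical and the estimator is the standard textbook form for a single scalar forecast; it is not taken from the benchmark's own code.

```python
from itertools import product


def ensemble_crps(members, obs):
    """Empirical CRPS of one ensemble forecast against one scalar observation.

    Uses the common estimator E|X - y| - 0.5 * E|X - X'| (an assumption here,
    not necessarily the exact variant used in the paper).
    """
    m = len(members)
    # Mean absolute error of the members against the observation.
    skill = sum(abs(x - obs) for x in members) / m
    # Mean absolute difference over all member pairs (ensemble spread term).
    spread = sum(abs(a - b) for a, b in product(members, repeat=2)) / (m * m)
    return skill - 0.5 * spread


def rank_of_observation(members, obs):
    """Number of members below the observation (0..len(members)).

    Counting these ranks over many forecasts gives a rank histogram.
    """
    return sum(1 for x in members if x < obs)
```

With a single member the score reduces to the absolute error, which is one way to sanity-check the estimator. A flat rank histogram would indicate a reliable ensemble, while a U shape would point to the kind of underestimated uncertainty the abstract reports for Monte Carlo dropout.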

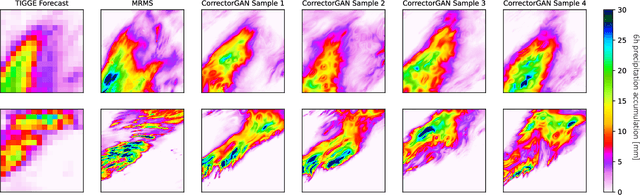

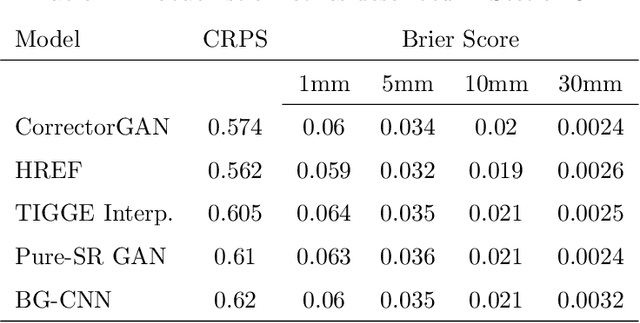

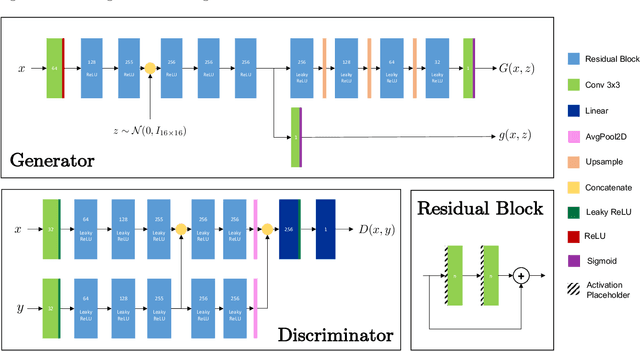

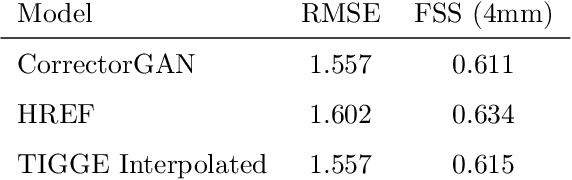

Increasing the accuracy and resolution of precipitation forecasts using deep generative models

Mar 23, 2022

Abstract:Accurately forecasting extreme rainfall is notoriously difficult, but is also ever more crucial for society as climate change increases the frequency of such extremes. Global numerical weather prediction models often fail to capture extremes, and are produced at too low a resolution to be actionable, while regional, high-resolution models are hugely expensive both in computation and labour. In this paper we explore the use of deep generative models to simultaneously correct and downscale (super-resolve) global ensemble forecasts over the Continental US. Specifically, using fine-grained radar observations as our ground truth, we train a conditional Generative Adversarial Network -- coined CorrectorGAN -- via a custom training procedure and augmented loss function, to produce ensembles of high-resolution, bias-corrected forecasts based on coarse, global precipitation forecasts in addition to other relevant meteorological fields. Our model outperforms an interpolation baseline, as well as super-resolution-only and CNN-based univariate methods, and approaches the performance of an operational regional high-resolution model across an array of established probabilistic metrics. Crucially, CorrectorGAN, once trained, produces predictions in seconds on a single machine. These results raise exciting questions about the necessity of regional models, and whether data-driven downscaling and correction methods can be transferred to data-poor regions that so far have had no access to high-resolution forecasts.

Climate-Invariant Machine Learning

Dec 14, 2021

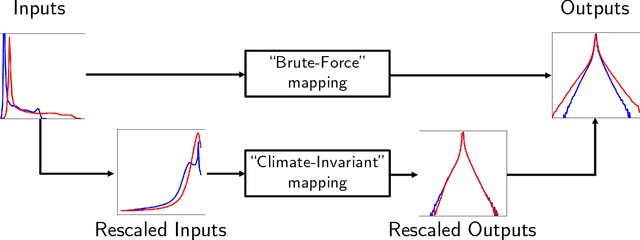

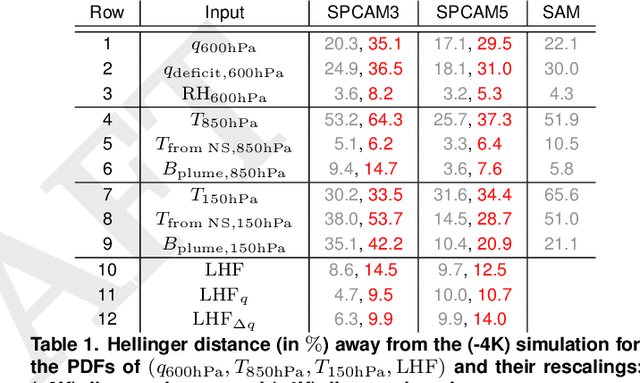

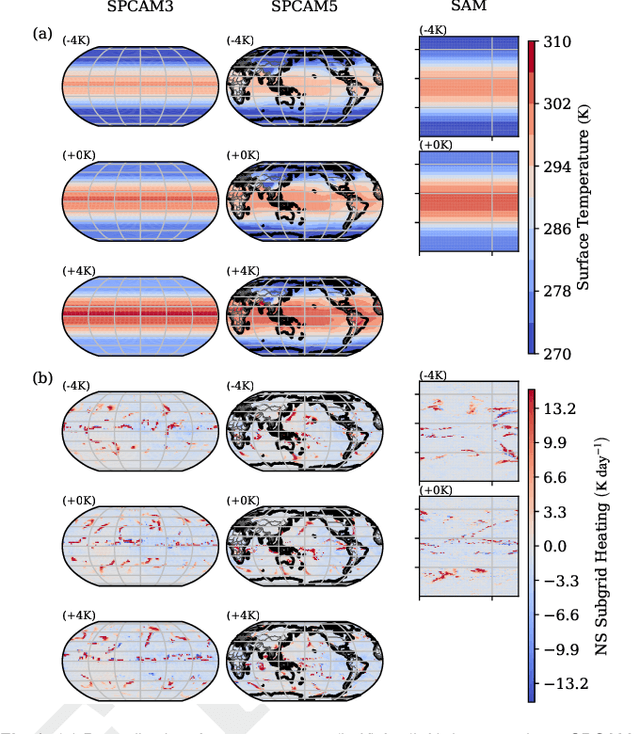

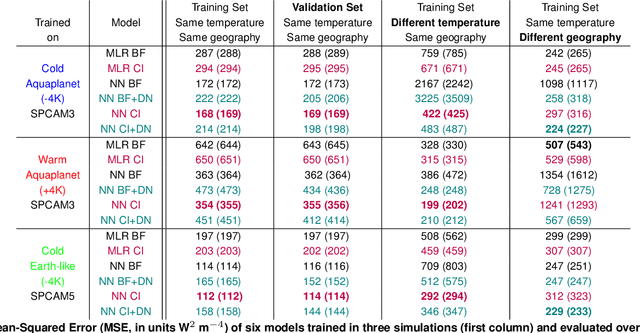

Abstract:Data-driven algorithms, in particular neural networks, can emulate the effects of unresolved processes in coarse-resolution climate models when trained on high-resolution simulation data; however, they often make large generalization errors when evaluated in conditions they were not trained on. Here, we propose to physically rescale the inputs and outputs of machine learning algorithms to help them generalize to unseen climates. Applied to offline parameterizations of subgrid-scale thermodynamics in three distinct climate models, we show that rescaled or "climate-invariant" neural networks make accurate predictions in test climates that are 4K and 8K warmer than their training climates. Additionally, "climate-invariant" neural nets facilitate generalization between Aquaplanet and Earth-like simulations. Through visualization and attribution methods, we show that compared to standard machine learning models, "climate-invariant" algorithms learn more local and robust relations between storm-scale convection, radiation, and their synoptic thermodynamic environment. Overall, these results suggest that explicitly incorporating physical knowledge into data-driven models of Earth system processes can improve their consistency and ability to generalize across climate regimes.

Towards Physically-consistent, Data-driven Models of Convection

Feb 20, 2020

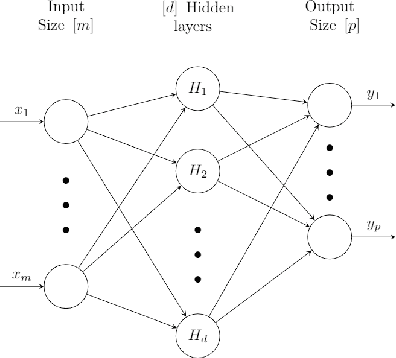

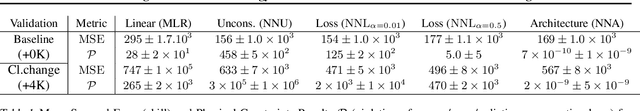

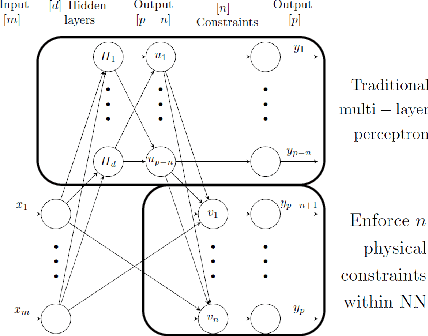

Abstract:Data-driven algorithms, in particular neural networks, can emulate the effect of sub-grid scale processes in coarse-resolution climate models if trained on high-resolution climate simulations. However, they may violate key physical constraints and lack the ability to generalize outside of their training set. Here, we show that physical constraints can be enforced in neural networks, either approximately by adapting the loss function or to machine precision by adapting the architecture. As these physical constraints are insufficient to guarantee generalizability, we additionally propose a framework to find physical normalizations that can be applied to the training and validation data to improve the ability of neural networks to generalize to unseen climates.
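
The "approximate" route described in this abstract, adapting the loss function, can be sketched as a penalty term added to the usual regression loss. The example below is a minimal illustration under assumptions that are not in the abstract: a single linear constraint of the form sum(w_i * y_i) = 0, a hypothetical mixing parameter `alpha`, and plain Python lists in place of tensors.

```python
def penalized_loss(pred, target, weights, alpha):
    """Mean squared error blended with a soft physical-constraint penalty.

    The constraint is assumed to be linear: sum(w_i * pred_i) should be 0.
    alpha in [0, 1] trades data fit against constraint violation.
    """
    # Standard data-fit term.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    # Residual of the assumed linear constraint for this prediction.
    residual = sum(w * p for w, p in zip(weights, pred))
    return (1.0 - alpha) * mse + alpha * residual ** 2
```

A penalty of this kind only discourages violations during training, so the constraint is met approximately rather than exactly.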

WeatherBench: A benchmark dataset for data-driven weather forecasting

Feb 12, 2020

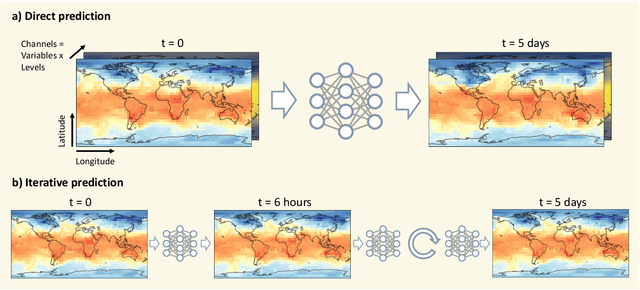

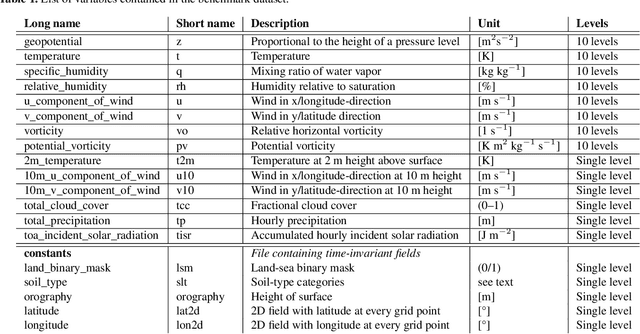

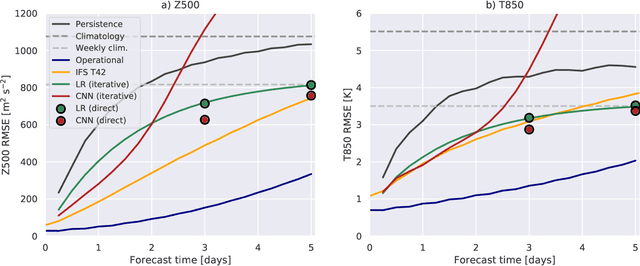

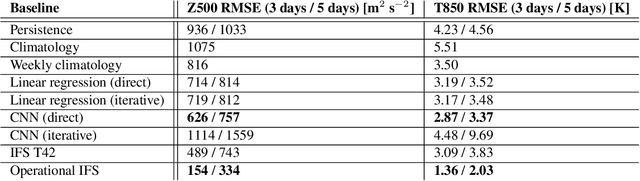

Abstract:Data-driven approaches, most prominently deep learning, have become powerful tools for prediction in many domains. A natural question to ask is whether data-driven methods could also be used for numerical weather prediction. First studies show promise but the lack of a common dataset and evaluation metrics make inter-comparison between studies difficult. Here we present a benchmark dataset for data-driven medium-range weather forecasting, a topic of high scientific interest for atmospheric and computer scientists alike. We provide data derived from the ERA5 archive that has been processed to facilitate the use in machine learning models. We propose a simple and clear evaluation metric which will enable a direct comparison between different methods. Further, we provide baseline scores from simple linear regression techniques, deep learning models as well as purely physical forecasting models. All data is publicly available at https://mediatum.ub.tum.de/1524895 and the companion code is reproducible with tutorials for getting started. We hope that this dataset will accelerate research in data-driven weather forecasting.
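
The abstract does not spell out the evaluation metric. Assuming it is a latitude-weighted root mean squared error, a common choice on regular latitude-longitude grids because grid cells shrink toward the poles, a minimal sketch could look like the following. The function name and the nested-list grid layout are illustrative only.

```python
import math


def lat_weighted_rmse(forecast, truth, lats_deg):
    """Latitude-weighted RMSE on a regular lat-lon grid.

    forecast, truth: nested lists indexed as [lat][lon].
    lats_deg: latitude in degrees for each row.
    Weights are cos(latitude), normalized so that they average to 1.
    """
    cos_lat = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_cos = sum(cos_lat) / len(cos_lat)
    weights = [c / mean_cos for c in cos_lat]

    total, count = 0.0, 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += w * (f - t) ** 2
            count += 1
    return math.sqrt(total / count)
```

Because the weights average to one, a spatially uniform error of size e yields a score of exactly e, regardless of the grid.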

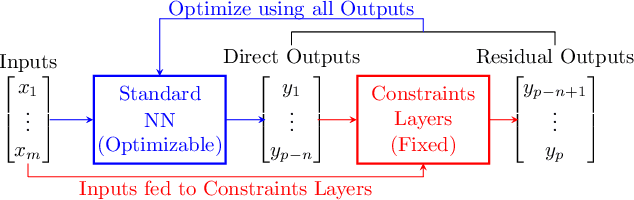

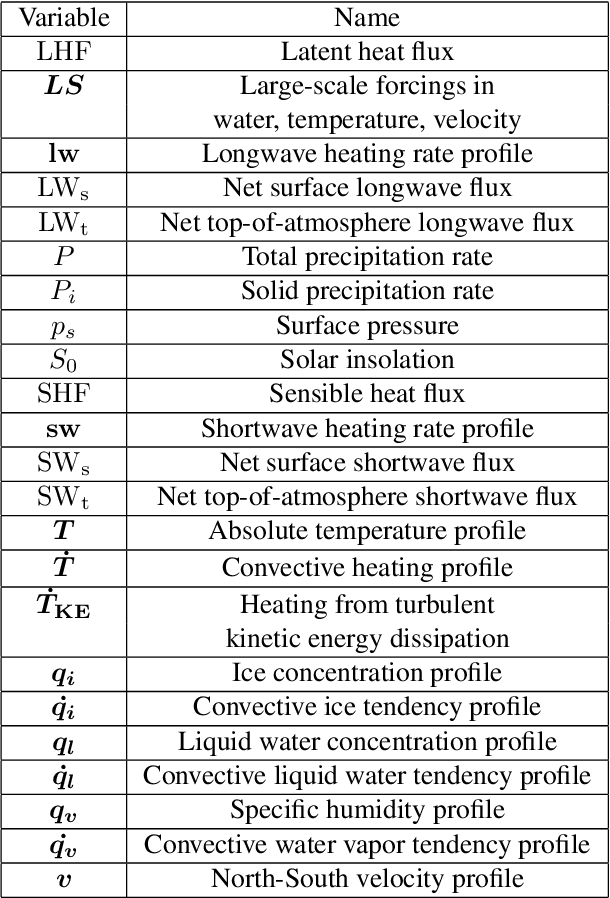

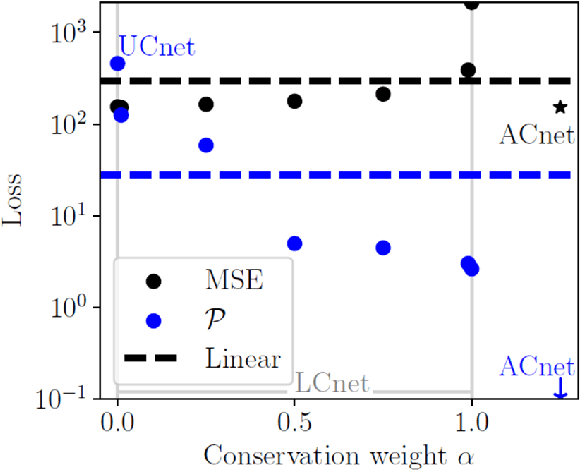

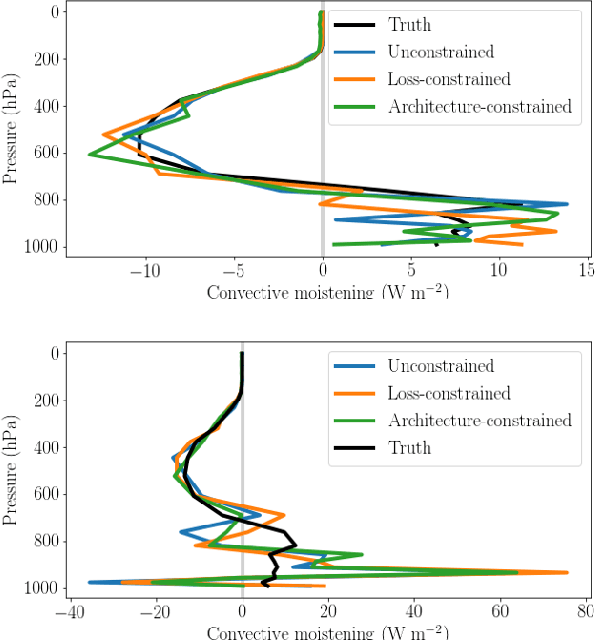

Achieving Conservation of Energy in Neural Network Emulators for Climate Modeling

Jun 15, 2019

Abstract:Artificial neural-networks have the potential to emulate cloud processes with higher accuracy than the semi-empirical emulators currently used in climate models. However, neural-network models do not intrinsically conserve energy and mass, which is an obstacle to using them for long-term climate predictions. Here, we propose two methods to enforce linear conservation laws in neural-network emulators of physical models: Constraining (1) the loss function or (2) the architecture of the network itself. Applied to the emulation of explicitly-resolved cloud processes in a prototype multi-scale climate model, we show that architecture constraints can enforce conservation laws to satisfactory numerical precision, while all constraints help the neural-network better generalize to conditions outside of its training set, such as global warming.
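
One way to picture the architecture-constrained option is to let the network predict all but one output freely and compute the last one as the residual of the conservation law, so the linear budget closes by construction. The sketch below assumes a single constraint of the form sum(w_i * y_i) = required_total; the function and argument names are hypothetical and this is not the paper's implementation.

```python
def close_budget(free_outputs, free_weights, residual_weight, required_total):
    """Append one residual output so the weighted sum matches required_total.

    free_outputs: values predicted without constraint.
    free_weights: budget coefficient of each free output.
    residual_weight: nonzero budget coefficient of the computed output.
    """
    # Contribution of the unconstrained outputs to the budget.
    partial = sum(w * y for w, y in zip(free_weights, free_outputs))
    # Solve the linear constraint for the remaining output.
    residual = (required_total - partial) / residual_weight
    return list(free_outputs) + [residual]
```

Unlike a loss penalty, this construction satisfies the constraint up to floating-point rounding for any input, including inputs outside the training set.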

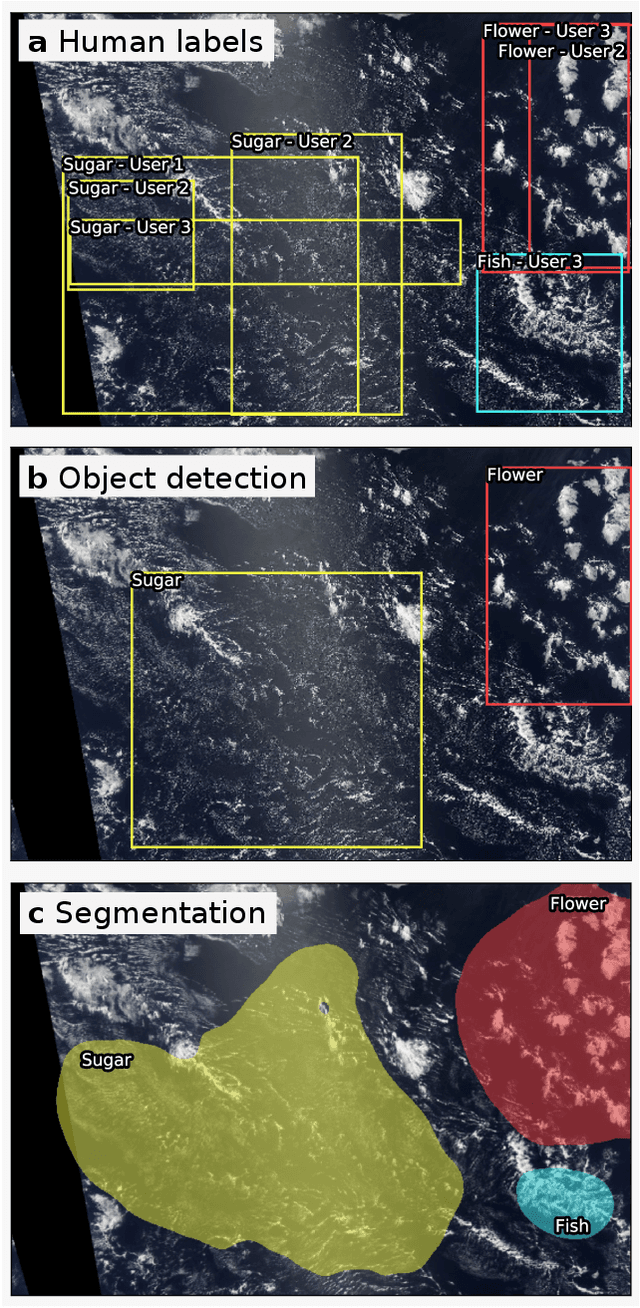

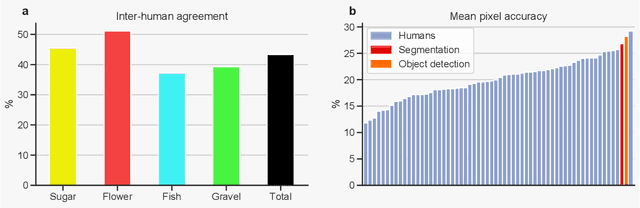

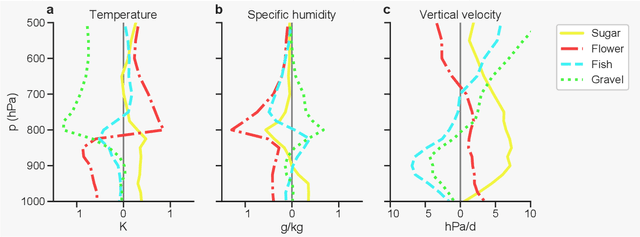

Combining crowd-sourcing and deep learning to understand meso-scale organization of shallow convection

Jun 05, 2019

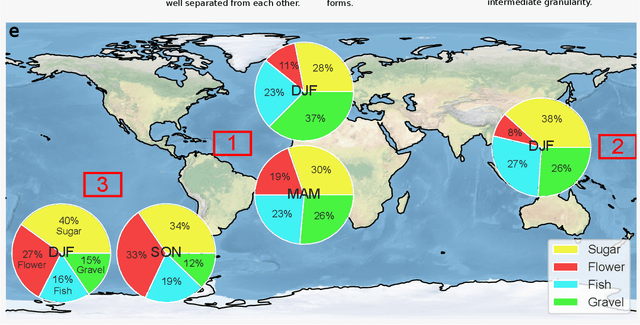

Abstract:The discovery of new phenomena and mechanisms often begins with a scientist's intuitive ability to recognize patterns, for example in satellite imagery or model output. Typically, however, such intuitive evidence turns out to be difficult to encode and reproduce. Here, we show how crowd-sourcing and deep learning can be combined to scale up the intuitive discovery of atmospheric phenomena. Specifically, we focus on the organization of shallow clouds in the trades, which play a disproportionately large role in the Earth's energy balance. Based on visual inspection, four subjective patterns of organization were defined: Sugar, Flower, Fish and Gravel. On cloud labeling days at two institutes, 67 participants classified more than 30,000 satellite images on a crowd-sourcing platform. Physical analysis reveals that the four patterns are associated with distinct large-scale environmental conditions. We then used the classifications as a training set for deep learning algorithms, which learned to detect the cloud patterns with human accuracy. This enables analysis much beyond the human classifications. As an example, we created global climatologies of the four patterns. These reveal geographical hotspots that provide insight into the interaction of mesoscale cloud organization with the large-scale circulation. Our project shows that combining crowd-sourcing and deep learning opens new data-driven ways to explore cloud-circulation interactions and serves as a template for a wide range of possible studies in the geosciences.

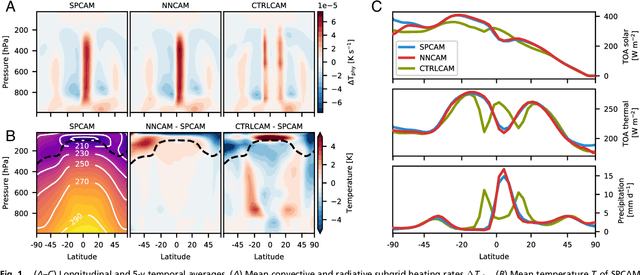

Deep learning to represent sub-grid processes in climate models

Sep 07, 2018

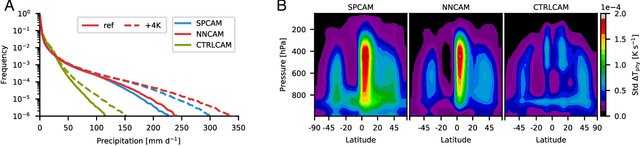

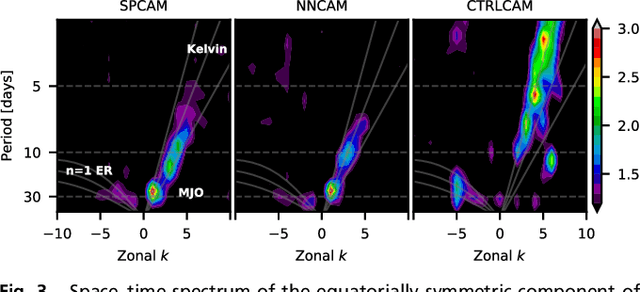

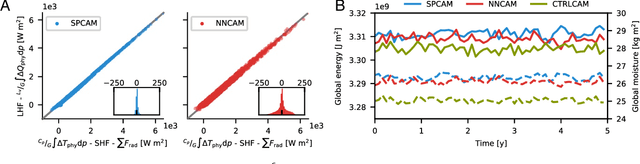

Abstract:The representation of nonlinear sub-grid processes, especially clouds, has been a major source of uncertainty in climate models for decades. Cloud-resolving models better represent many of these processes and can now be run globally but only for short-term simulations of at most a few years because of computational limitations. Here we demonstrate that deep learning can be used to capture many advantages of cloud-resolving modeling at a fraction of the computational cost. We train a deep neural network to represent all atmospheric sub-grid processes in a climate model by learning from a multi-scale model in which convection is treated explicitly. The trained neural network then replaces the traditional sub-grid parameterizations in a global general circulation model in which it freely interacts with the resolved dynamics and the surface-flux scheme. The prognostic multi-year simulations are stable and closely reproduce not only the mean climate of the cloud-resolving simulation but also key aspects of variability, including precipitation extremes and the equatorial wave spectrum. Furthermore, the neural network approximately conserves energy despite not being explicitly instructed to. Finally, we show that the neural network parameterization generalizes to new surface forcing patterns but struggles to cope with temperatures far outside its training manifold. Our results show the feasibility of using deep learning for climate model parameterization. In a broader context, we anticipate that data-driven Earth System Model development could play a key role in reducing climate prediction uncertainty in the coming decade.

* View official PNAS version at https://doi.org/10.1073/pnas.1810286115
