Climate-Invariant Machine Learning

Paper and Code

Dec 14, 2021

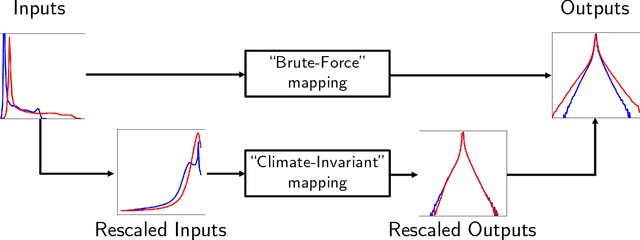

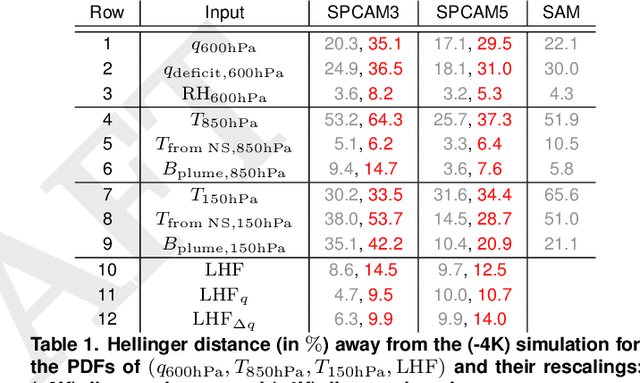

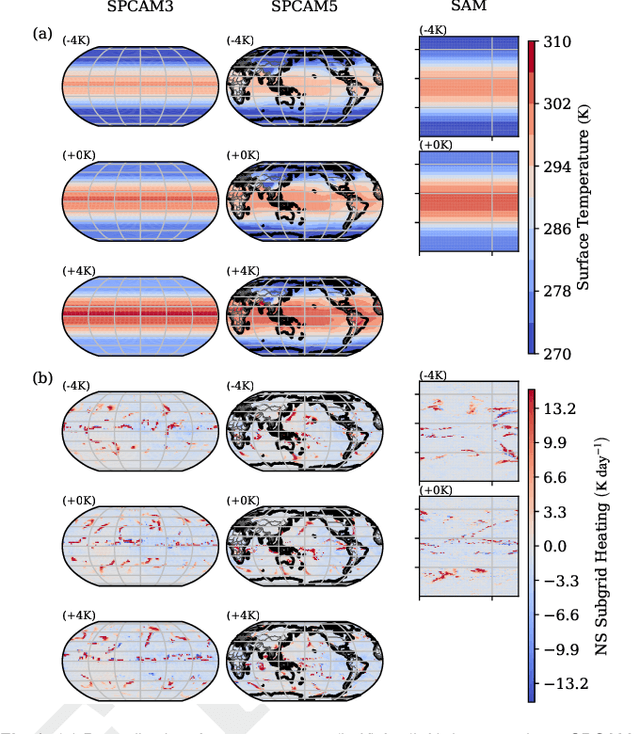

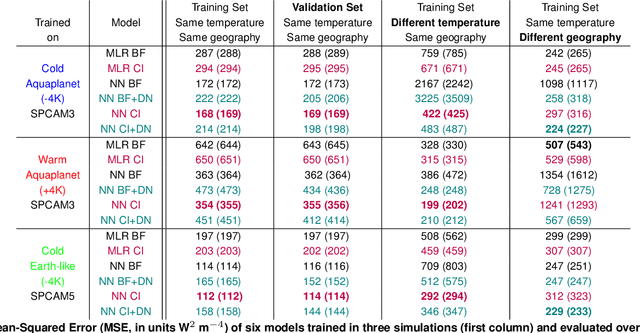

Data-driven algorithms, in particular neural networks, can emulate the effects of unresolved processes in coarse-resolution climate models when trained on high-resolution simulation data; however, they often make large generalization errors when evaluated in conditions they were not trained on. Here, we propose to physically rescale the inputs and outputs of machine learning algorithms to help them generalize to unseen climates. Applied to offline parameterizations of subgrid-scale thermodynamics in three distinct climate models, we show that rescaled or "climate-invariant" neural networks make accurate predictions in test climates that are 4K and 8K warmer than their training climates. Additionally, "climate-invariant" neural nets facilitate generalization between Aquaplanet and Earth-like simulations. Through visualization and attribution methods, we show that compared to standard machine learning models, "climate-invariant" algorithms learn more local and robust relations between storm-scale convection, radiation, and their synoptic thermodynamic environment. Overall, these results suggest that explicitly incorporating physical knowledge into data-driven models of Earth system processes can improve their consistency and ability to generalize across climate regimes.
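The abstract does not say which physical rescalings are applied, so the following is only an illustrative sketch under an assumption: that one such rescaling replaces specific humidity with relative humidity as a network input. Specific humidity rises strongly with warming and can leave the range seen in training, whereas relative humidity stays between 0 and 1. The function names, the Bolton-type saturation formula, and the toy values below are choices made for this example and are not taken from the paper's code.

```python
import numpy as np

EPS = 0.622  # ratio of molar masses, water vapor to dry air


def saturation_vapor_pressure(temp_k):
    """Approximate saturation vapor pressure over liquid water, in Pa.

    Uses a Bolton-type empirical fit; assumed here for illustration only.
    """
    temp_c = np.asarray(temp_k, dtype=float) - 273.15
    return 611.2 * np.exp(17.67 * temp_c / (temp_c + 243.5))


def specific_to_relative_humidity(q, temp_k, pressure_pa):
    """Rescale specific humidity (kg/kg) to relative humidity (0 to 1)."""
    q = np.asarray(q, dtype=float)
    vapor_pressure = q * pressure_pa / (EPS + (1.0 - EPS) * q)
    return vapor_pressure / saturation_vapor_pressure(temp_k)


def relative_to_specific_humidity(rh, temp_k, pressure_pa):
    """Inverse of the rescaling, used here only to build toy data."""
    vapor_pressure = rh * saturation_vapor_pressure(temp_k)
    return EPS * vapor_pressure / (pressure_pa - (1.0 - EPS) * vapor_pressure)


# Toy comparison: a reference climate and one that is uniformly 4 K warmer,
# both at 80% relative humidity (hypothetical values).
pressure = 90000.0  # Pa
temp_ref = np.array([280.0, 290.0, 300.0])  # K
temp_warm = temp_ref + 4.0

q_ref = relative_to_specific_humidity(0.8, temp_ref, pressure)
q_warm = relative_to_specific_humidity(0.8, temp_warm, pressure)

# The raw input shifts substantially between the two climates ...
q_ratio = q_warm / q_ref

# ... while the rescaled input is unchanged.
rh_ref = specific_to_relative_humidity(q_ref, temp_ref, pressure)
rh_warm = specific_to_relative_humidity(q_warm, temp_warm, pressure)
```

In this toy setup a network fed `rh_ref` during training would see inputs with the same distribution in the warmer climate, whereas one fed `q_ref` would have to extrapolate. Whether a given rescaling helps in practice depends on the model and the process being parameterized.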
