Towards Physically-consistent, Data-driven Models of Convection


Feb 20, 2020

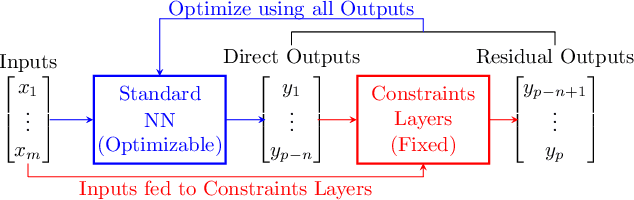

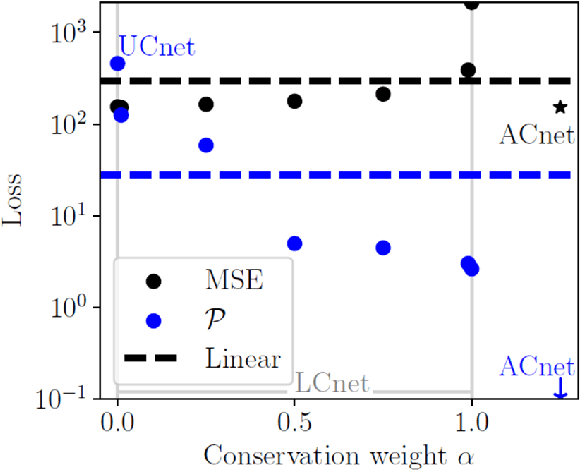

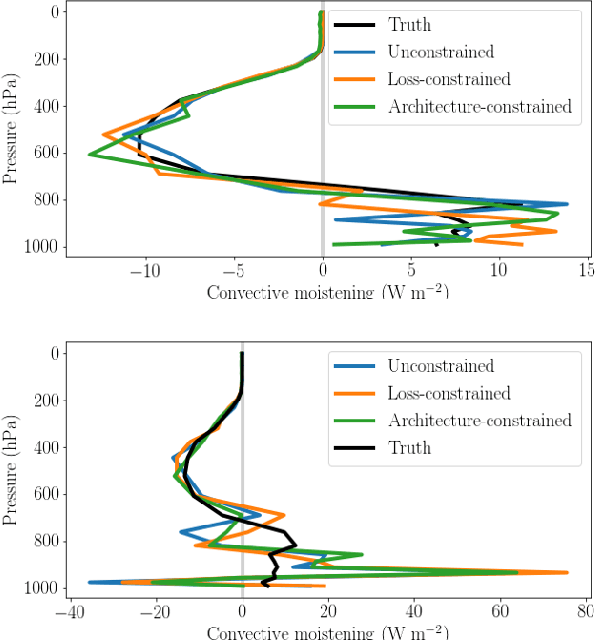

Data-driven algorithms, in particular neural networks, can emulate the effect of subgrid-scale processes in coarse-resolution climate models if trained on high-resolution climate simulations. However, they may violate key physical constraints and may fail to generalize beyond their training set. Here, we show that physical constraints can be enforced in neural networks, either approximately by adapting the loss function or to machine precision by adapting the architecture. Because these physical constraints are insufficient to guarantee generalizability, we additionally propose a framework for finding physical normalizations that can be applied to the training and validation data to improve the ability of neural networks to generalize to unseen climates.
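
The two enforcement strategies named in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a single linear constraint of the form `y @ c = 0` (a stand-in for a conservation law), and the names `soft_constraint_loss`, `hard_constraint_layer`, `c`, and `alpha` are hypothetical. The soft version adds a penalty on the constraint residual to the loss; the hard version lets the network predict all outputs but one and computes the last one as a residual, so the constraint holds up to floating-point rounding.

```python
import numpy as np


def soft_constraint_loss(y_pred, y_true, c, alpha=0.5):
    """Approximate enforcement: weighted sum of MSE and a constraint penalty.

    Assumption: the constraint is linear, y @ c = 0, and alpha in [0, 1]
    trades prediction error against constraint violation.
    """
    mse = np.mean((y_pred - y_true) ** 2)
    residual = y_pred @ c                 # constraint violation per sample
    penalty = np.mean(residual ** 2)
    return alpha * mse + (1.0 - alpha) * penalty


def hard_constraint_layer(y_free, c):
    """Enforcement by architecture: compute the last output as a residual.

    y_free holds the n-1 outputs predicted directly by the network; the
    n-th output is chosen so that y @ c = 0 holds. Requires c[-1] != 0.
    """
    y_last = -(y_free @ c[:-1]) / c[-1]
    return np.concatenate([y_free, y_last[:, None]], axis=1)


# Usage: four samples, three outputs, one hypothetical constraint vector.
rng = np.random.default_rng(0)
c = np.array([1.0, 2.0, -0.5])
y_free = rng.normal(size=(4, 2))          # stand-in for raw network outputs
y = hard_constraint_layer(y_free, c)
max_violation = np.max(np.abs(y @ c))     # expected to be at rounding level
```

In this sketch the soft penalty only discourages violations, so a trained network may still break the constraint slightly, whereas the residual layer satisfies it by construction for any input.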
