Dave Moore

Embedded-model flows: Combining the inductive biases of model-free deep learning and explicit probabilistic modeling

Oct 17, 2021

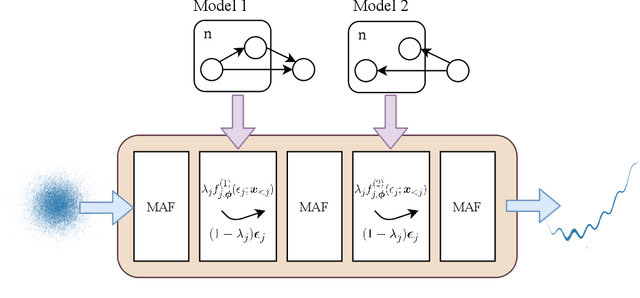

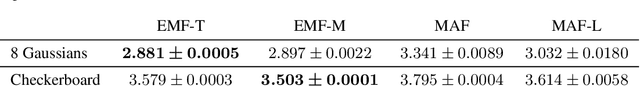

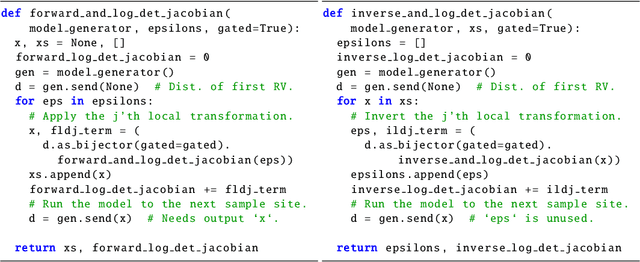

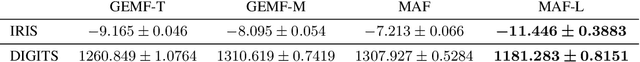

Abstract: Normalizing flows have shown great success as general-purpose density estimators. However, many real-world applications require domain-specific knowledge, which normalizing flows cannot readily incorporate. We propose embedded-model flows (EMF), which alternate general-purpose transformations with structured layers that embed domain-specific inductive biases. These layers are automatically constructed by converting user-specified differentiable probabilistic models into equivalent bijective transformations. We also introduce gated structured layers, which allow bypassing the parts of the models that fail to capture the statistics of the data. We demonstrate that EMFs can be used to induce desirable properties such as multimodality, hierarchical coupling and continuity. Furthermore, we show that EMFs enable a high-performance form of variational inference where the structure of the prior model is embedded in the variational architecture. In our experiments, we show that this approach outperforms state-of-the-art methods in common structured inference problems.
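
The sketch below shows what converting a probabilistic model into a bijection can look like for one simple model. It assumes a Gaussian random walk with z[0] ~ N(mu0, sigma) and z[t] ~ N(z[t-1], sigma). Writing each step in terms of standard-normal noise gives an invertible, lower-triangular map from noise to latents, and its log-determinant is T log sigma. The function names are hypothetical. This is not the paper's implementation, and it omits gating and the general-purpose flow layers that an EMF interleaves with such a layer.

```python
import math
import random

def random_walk_forward(eps, mu0, sigma):
    """Map standard-normal noise eps to a Gaussian random walk z (hypothetical helper).

    Assumed model: z[0] ~ N(mu0, sigma), z[t] ~ N(z[t-1], sigma).
    The Jacobian dz/deps is lower-triangular with sigma on its diagonal,
    so log|det J| = len(eps) * log(sigma).
    """
    z = []
    prev = mu0
    for e in eps:
        prev = prev + sigma * e  # z[t] = z[t-1] + sigma * eps[t]
        z.append(prev)
    return z, len(eps) * math.log(sigma)

def random_walk_inverse(z, mu0, sigma):
    """Recover the noise: eps[t] = (z[t] - z[t-1]) / sigma."""
    eps = []
    prev = mu0
    for zt in z:
        eps.append((zt - prev) / sigma)
        prev = zt
    return eps

rng = random.Random(0)
eps = [rng.gauss(0.0, 1.0) for _ in range(5)]
z, log_det = random_walk_forward(eps, mu0=1.0, sigma=0.5)
eps_back = random_walk_inverse(z, mu0=1.0, sigma=0.5)
print(all(abs(a - b) < 1e-12 for a, b in zip(eps, eps_back)))  # True
```

Because the map is triangular, both directions and the log-determinant cost O(T). That cost profile is what lets a layer of this kind sit inside a normalizing flow.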

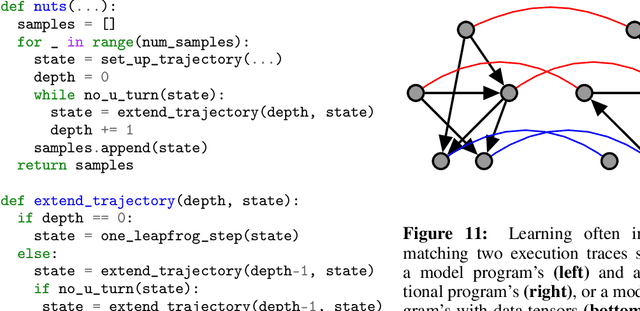

tfp.mcmc: Modern Markov Chain Monte Carlo Tools Built for Modern Hardware

Feb 04, 2020

Abstract: Markov chain Monte Carlo (MCMC) is widely regarded as one of the most important algorithms of the 20th century. Its guarantees of asymptotic convergence, stability, and estimator-variance bounds using only unnormalized probability functions make it indispensable to probabilistic programming. In this paper, we introduce the TensorFlow Probability MCMC toolkit, and discuss some of the considerations that motivated its design.
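
The abstract stresses that MCMC needs only an unnormalized density, and even the simplest sampler shows this. Below is a pure-Python random-walk Metropolis sketch. It is not the tfp.mcmc API; the toolkit itself provides kernels such as `tfp.mcmc.RandomWalkMetropolis` and `tfp.mcmc.HamiltonianMonteCarlo`, run with `tfp.mcmc.sample_chain`. The target here is a standard normal written with an arbitrary additive constant, and that constant cancels in the acceptance ratio.

```python
import math
import random
import statistics

def random_walk_metropolis(unnormalized_log_prob, init, num_steps, step_size, seed=0):
    """Random-walk Metropolis; log p(x) is only needed up to an additive constant."""
    rng = random.Random(seed)
    x = init
    log_p = unnormalized_log_prob(x)
    samples = []
    for _ in range(num_steps):
        # Symmetric Gaussian proposal, so no Hastings correction is needed.
        proposal = x + step_size * rng.gauss(0.0, 1.0)
        log_p_prop = unnormalized_log_prob(proposal)
        # Accept with probability min(1, p(proposal) / p(x)); the normalizer cancels.
        if rng.random() < math.exp(min(0.0, log_p_prop - log_p)):
            x, log_p = proposal, log_p_prop
        samples.append(x)
    return samples

def target(x):
    # Standard normal log-density plus an arbitrary constant (deliberately unnormalized).
    return -0.5 * x * x + 123.0

samples = random_walk_metropolis(target, init=3.0, num_steps=20000, step_size=2.4)
kept = samples[1000:]  # discard burn-in
print(round(statistics.fmean(kept), 1), round(statistics.pvariance(kept), 1))
```

The sample mean and variance should land near 0 and 1. Changing the constant 123.0 leaves the chain unchanged.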

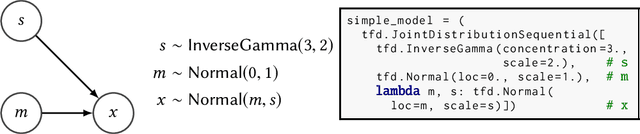

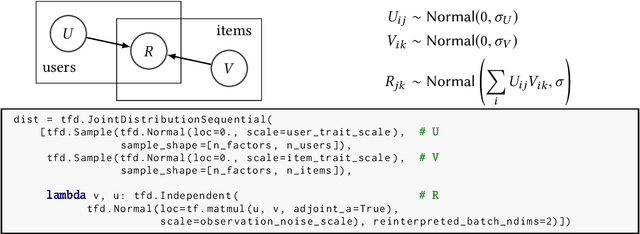

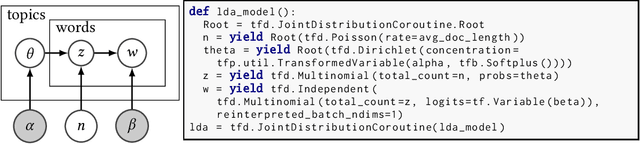

Joint Distributions for TensorFlow Probability

Jan 22, 2020

Abstract: A central tenet of probabilistic programming is that a model is specified exactly once in a canonical representation which is usable by inference algorithms. We describe JointDistributions, a family of declarative representations of directed graphical models in TensorFlow Probability.
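
A toy sequential joint distribution illustrates specifying a model once and reusing it for both sampling and density evaluation. The model is an ordered list of conditionals, and each entry receives the values drawn so far. `ToyJointSequential` and the minimal `Normal` class are hypothetical stand-ins. They are not TensorFlow Probability's `JointDistributionSequential`, whose calling convention differs in detail.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Normal:
    """Minimal univariate normal (hypothetical stand-in for a library distribution)."""
    loc: float
    scale: float

    def sample(self, rng):
        return rng.gauss(self.loc, self.scale)

    def log_prob(self, x):
        z = (x - self.loc) / self.scale
        return -0.5 * z * z - math.log(self.scale) - 0.5 * math.log(2 * math.pi)

class ToyJointSequential:
    """Directed model as an ordered list of conditionals.

    Each entry is a callable that takes the list of earlier values and returns a distribution.
    """

    def __init__(self, conditionals):
        self.conditionals = conditionals

    def sample(self, seed=0):
        rng = random.Random(seed)
        values = []
        for make_dist in self.conditionals:
            values.append(make_dist(values).sample(rng))
        return values

    def log_prob(self, values):
        # Chain rule: log p(x_1, ..., x_n) = sum_i log p(x_i | x_<i)
        return sum(make_dist(values[:i]).log_prob(values[i])
                   for i, make_dist in enumerate(self.conditionals))

model = ToyJointSequential([
    lambda prev: Normal(0.0, 1.0),      # mu ~ N(0, 1)
    lambda prev: Normal(prev[0], 0.5),  # x | mu ~ N(mu, 0.5)
])
draw = model.sample(seed=1)
print(draw, model.log_prob(draw))
```

The same `conditionals` list drives both `sample` and `log_prob`, so an inference routine needs only the model object.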

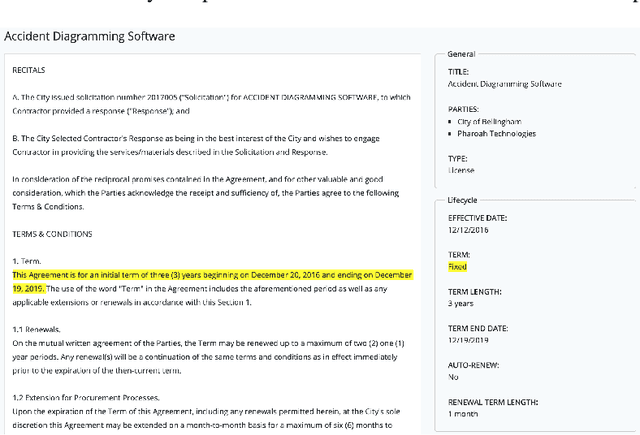

BERT Goes to Law School: Quantifying the Competitive Advantage of Access to Large Legal Corpora in Contract Understanding

Nov 01, 2019

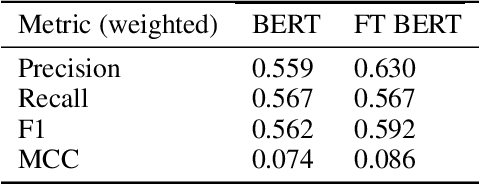

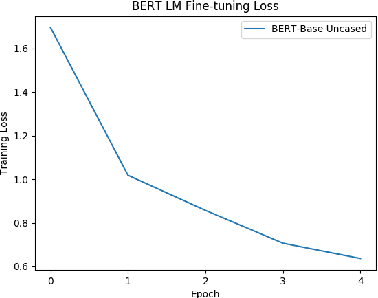

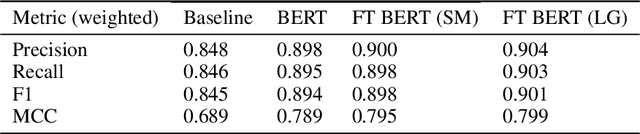

Abstract: Fine-tuning language models such as BERT on domain-specific corpora has proven valuable in domains like scientific papers and biomedical text. In this paper, we show that fine-tuning BERT on legal documents similarly provides valuable improvements on NLP tasks in the legal domain. Demonstrating this outcome is significant for analyzing commercial agreements, because obtaining large legal corpora is challenging due to their confidential nature. As such, we show that access to large legal corpora is a competitive advantage for commercial applications and for academic research on analyzing contracts.

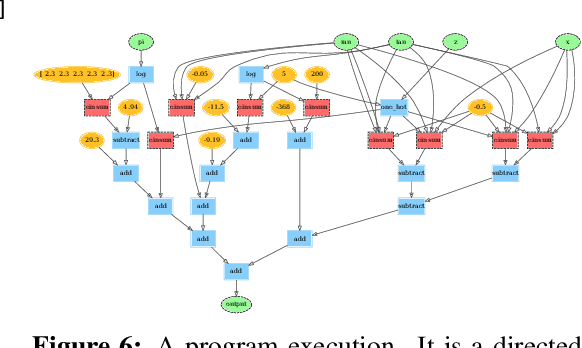

Automatic Reparameterisation of Probabilistic Programs

Jun 07, 2019

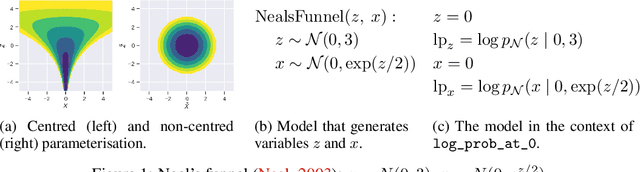

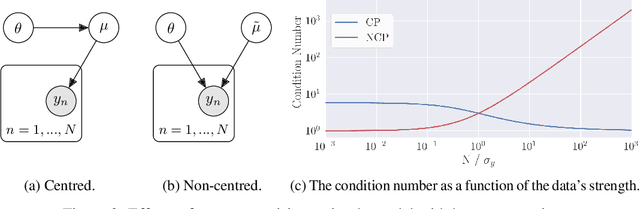

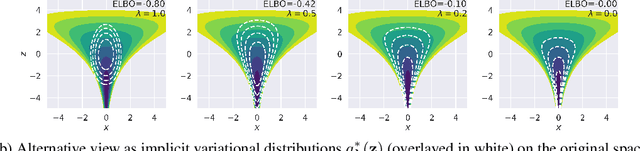

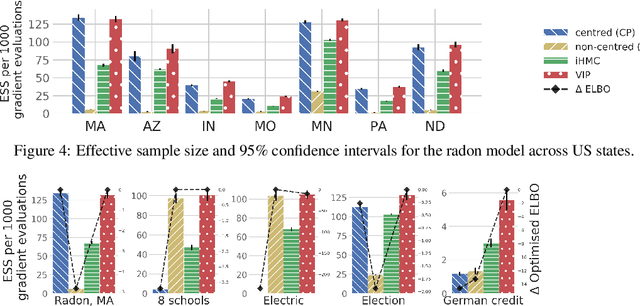

Abstract: Probabilistic programming has emerged as a powerful paradigm in statistics, applied science, and machine learning: by decoupling modelling from inference, it promises to allow modellers to directly reason about the processes generating data. However, the performance of inference algorithms can be dramatically affected by the parameterisation used to express a model, requiring users to transform their programs in non-intuitive ways. We argue for automating these transformations, and demonstrate that mechanisms available in recent modeling frameworks can implement non-centring and related reparameterisations. This enables new inference algorithms, and we propose two: a simple approach using interleaved sampling and a novel variational formulation that searches over a continuous space of parameterisations. We show that these approaches enable robust inference across a range of models, and can yield more efficient samplers than the best fixed parameterisation.
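
Non-centring rewrites a latent z ~ N(mu, sigma) as z = mu + sigma * z_tilde, with z_tilde ~ N(0, 1). The sketch assumes one continuous family that interpolates between the two forms: z_tilde ~ N(lam * mu, sigma ** lam) and z = mu + sigma ** (1 - lam) * (z_tilde - lam * mu). Setting lam = 1 recovers the centred form and lam = 0 the fully non-centred one, while z keeps the same N(mu, sigma) marginal for every lam. The function name is hypothetical. The sketch only checks that marginal by simulation and does not perform any search over lam.

```python
import random
import statistics

def partially_noncentred_sample(mu, sigma, lam, rng):
    """Draw z ~ N(mu, sigma) through an auxiliary variable z_tilde (hypothetical helper).

    lam = 1.0: centred (z equals z_tilde up to rounding).
    lam = 0.0: fully non-centred (z_tilde ~ N(0, 1), z = mu + sigma * z_tilde).
    """
    z_tilde = rng.gauss(lam * mu, sigma ** lam)
    z = mu + sigma ** (1 - lam) * (z_tilde - lam * mu)
    return z_tilde, z

rng = random.Random(0)
mu, sigma = 3.0, 2.0
moments = {}
for lam in (0.0, 0.5, 1.0):
    zs = [partially_noncentred_sample(mu, sigma, lam, rng)[1] for _ in range(20000)]
    moments[lam] = (statistics.fmean(zs), statistics.pstdev(zs))
    print(lam, [round(m, 2) for m in moments[lam]])
```

Only the geometry of the space the sampler explores (z_tilde) changes with lam; the model's meaning does not. Intermediate values of lam can therefore be better conditioned than either extreme.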

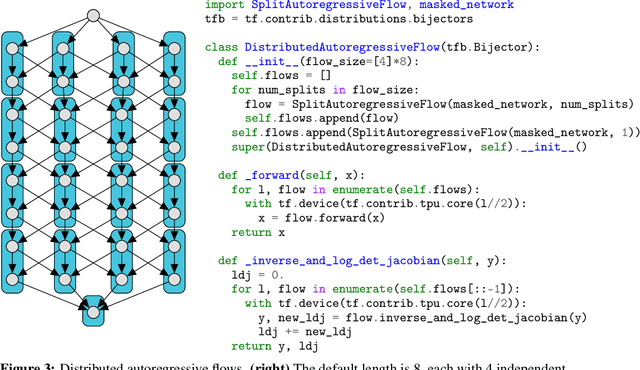

Simple, Distributed, and Accelerated Probabilistic Programming

Nov 29, 2018

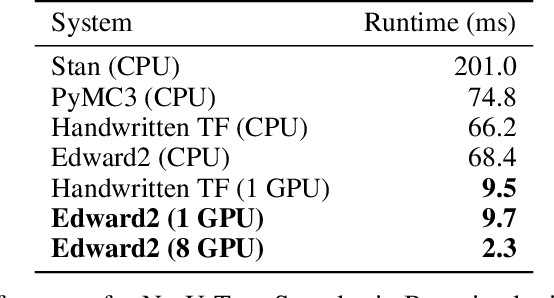

Abstract: We describe a simple, low-level approach for embedding probabilistic programming in a deep learning ecosystem. In particular, we distill probabilistic programming down to a single abstraction: the random variable. Our lightweight implementation in TensorFlow enables numerous applications: a model-parallel variational auto-encoder (VAE) with 2nd-generation tensor processing units (TPUv2s); a data-parallel autoregressive model (Image Transformer) with TPUv2s; and multi-GPU No-U-Turn Sampler (NUTS). For both a state-of-the-art VAE on 64x64 ImageNet and Image Transformer on 256x256 CelebA-HQ, our approach achieves an optimal linear speedup from 1 to 256 TPUv2 chips. With NUTS, we see a 100x speedup on GPUs over Stan and 37x over PyMC3.

Effect Handling for Composable Program Transformations in Edward2

Nov 15, 2018

Abstract: Algebraic effects and handlers have emerged in the programming languages community as a convenient, modular abstraction for controlling computational effects. They have found several applications including concurrent programming, metaprogramming, and more recently, probabilistic programming, as part of Pyro's Poutines library. We investigate the use of effect handlers as a lightweight abstraction for implementing probabilistic programming languages (PPLs). We interpret the existing design of Edward2 as an accidental implementation of an effect-handling mechanism, and extend that design to support nested, composable transformations. We demonstrate that this enables straightforward implementation of sophisticated model transformations and inference algorithms.

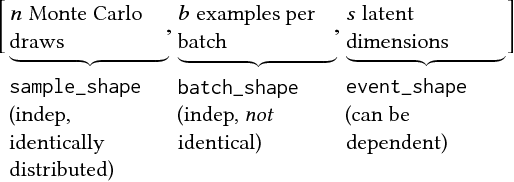

TensorFlow Distributions

Nov 28, 2017

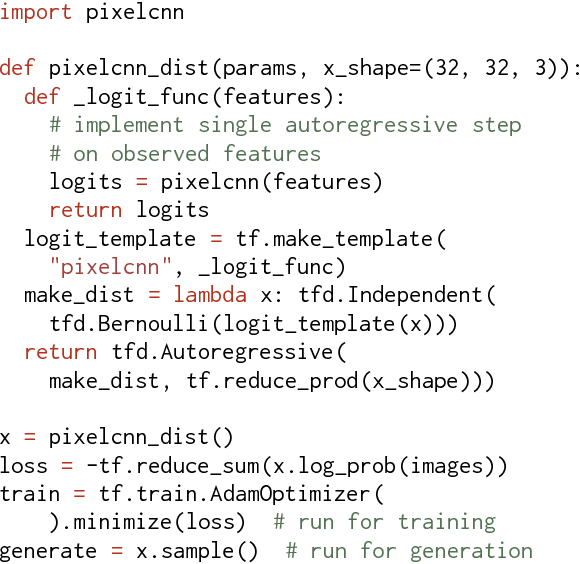

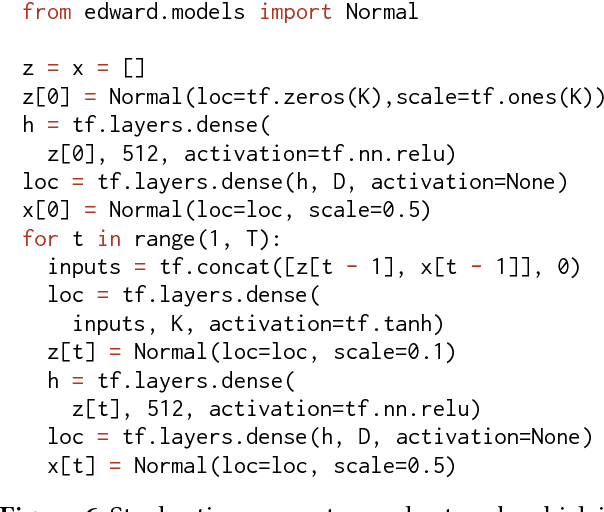

Abstract: The TensorFlow Distributions library implements a vision of probability theory adapted to the modern deep-learning paradigm of end-to-end differentiable computation. Building on two basic abstractions, it offers flexible building blocks for probabilistic computation. Distributions provide fast, numerically stable methods for generating samples and computing statistics, e.g., log density. Bijectors provide composable volume-tracking transformations with automatic caching. Together these enable modular construction of high-dimensional distributions and transformations not possible with previous libraries (e.g., pixelCNNs, autoregressive flows, and reversible residual networks). They are the workhorse behind deep probabilistic programming systems like Edward and empower fast black-box inference in probabilistic models built on deep-network components. TensorFlow Distributions has proven an important part of the TensorFlow toolkit within Google and in the broader deep learning community.
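
The two abstractions can be sketched in plain Python. A bijector exposes forward, inverse, and log-det-Jacobian methods, shown here with a tiny cache, and a transformed density follows from the change-of-variables formula. This is a toy under stated assumptions, not the library's API; in TensorFlow Probability the corresponding pieces are `tfp.bijectors.Exp` and `tfp.distributions.TransformedDistribution`. Pushing a standard normal through exp should reproduce the log-normal density, and the last line checks this.

```python
import math

class ExpBijector:
    """y = exp(x), with a toy cache so inverse(forward(x)) returns x exactly."""

    def __init__(self):
        self._cache = {}  # y -> x for values produced by forward (unbounded; toy only)

    def forward(self, x):
        y = math.exp(x)
        self._cache[y] = x
        return y

    def inverse(self, y):
        if y in self._cache:
            return self._cache[y]  # cache hit: skip recomputing log(y)
        return math.log(y)

    def forward_log_det_jacobian(self, x):
        return x  # d exp(x)/dx = exp(x), so log|J| = x

    def inverse_log_det_jacobian(self, y):
        return -self.forward_log_det_jacobian(self.inverse(y))

def normal_log_prob(x, loc=0.0, scale=1.0):
    z = (x - loc) / scale
    return -0.5 * z * z - math.log(scale) - 0.5 * math.log(2 * math.pi)

def transformed_log_prob(y, bijector, base_log_prob):
    # Change of variables: log p_Y(y) = log p_X(f^{-1}(y)) + log|d f^{-1}(y) / dy|
    return base_log_prob(bijector.inverse(y)) + bijector.inverse_log_det_jacobian(y)

def lognormal_log_prob(y):
    # Closed-form LogNormal(0, 1) log-density, for comparison.
    return -math.log(y) - 0.5 * math.log(y) ** 2 - 0.5 * math.log(2 * math.pi)

bij = ExpBijector()
y = bij.forward(0.9)
print(bij.inverse(y) == 0.9)  # True (served from the cache)
print(abs(transformed_log_prob(2.5, bij, normal_log_prob) - lognormal_log_prob(2.5)) < 1e-12)  # True
```

Caching matters most when the inverse is expensive or numerically lossy. For example, when a value was just produced by `forward`, looking it up avoids computing an inverse at all.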
