Hung-Jui Huang

GelBelt: A Vision-based Tactile Sensor for Continuous Sensing of Large Surfaces

Jan 09, 2025

Abstract: Scanning large-scale surfaces is in wide demand for surface reconstruction and for defect detection in industrial quality control and maintenance. Traditional vision-based tactile sensors have shown promising performance in high-resolution shape reconstruction but suffer from limitations such as small sensing areas or susceptibility to damage when slid across surfaces, making them unsuitable for continuous sensing on large surfaces. To address these shortcomings, we introduce a novel vision-based tactile sensor designed for continuous surface sensing applications. Our design uses an elastomeric belt and two wheels to continuously scan the target surface. The proposed sensor showed promising results in both shape reconstruction and surface fusion, indicating its applicability. The dot product of the estimated and reference surface normal maps is reported over the sensing area and for different scanning speeds. Results indicate that the proposed sensor can rapidly scan large-scale surfaces with high accuracy at speeds up to 45 mm/s.
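The normal-map metric mentioned in this abstract can be illustrated with a minimal sketch. It assumes both maps are given as equally sized lists of 3D vectors and averages the per-pixel dot product after normalizing each vector. The function names and the data layout are illustrative assumptions, not taken from the paper.

```python
import math


def normalize(vector):
    """Scale a 3D vector to unit length."""
    norm = math.sqrt(sum(c * c for c in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / norm for c in vector)


def mean_normal_dot(estimated, reference):
    """Average dot product between two normal maps (hypothetical helper).

    Both inputs are flat lists of (x, y, z) normals, one per pixel.
    A value of 1.0 means the two maps agree everywhere.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("normal maps must be non-empty and the same size")
    total = 0.0
    for est, ref in zip(estimated, reference):
        est_unit = normalize(est)
        ref_unit = normalize(ref)
        total += sum(a * b for a, b in zip(est_unit, ref_unit))
    return total / len(estimated)
```

A score close to 1 indicates that the estimated normals point in nearly the same direction as the reference normals; perpendicular normals contribute 0.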

NormalFlow: Fast, Robust, and Accurate Contact-based Object 6DoF Pose Tracking with Vision-based Tactile Sensors

Dec 12, 2024

Abstract: Tactile sensing is crucial for robots aiming to achieve human-level dexterity. Among tactile-dependent skills, tactile-based object tracking serves as the cornerstone for many tasks, including manipulation, in-hand manipulation, and 3D reconstruction. In this work, we introduce NormalFlow, a fast, robust, and real-time tactile-based 6DoF tracking algorithm. Leveraging the precise surface normal estimation of vision-based tactile sensors, NormalFlow determines object movements by minimizing discrepancies between the tactile-derived surface normals. Our results show that NormalFlow consistently outperforms competitive baselines and can track low-texture objects like table surfaces. For long-horizon tracking, we demonstrate that when rolling the sensor around a bead for 360 degrees, NormalFlow maintains a rotational tracking error of 2.5 degrees. Additionally, we present state-of-the-art tactile-based 3D reconstruction results, showcasing the high accuracy of NormalFlow. We believe NormalFlow unlocks new possibilities for high-precision perception and manipulation tasks that involve interacting with objects using hands. The video demo, code, and dataset are available on our website: https://joehjhuang.github.io/normalflow.

* 8 pages, published in 2024 RA-L, website link: https://joehjhuang.github.io/normalflow

FusionSense: Bridging Common Sense, Vision, and Touch for Robust Sparse-View Reconstruction

Oct 10, 2024

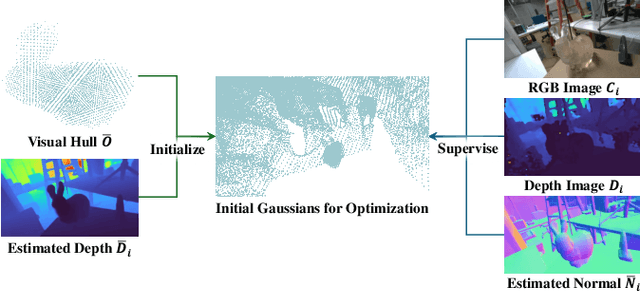

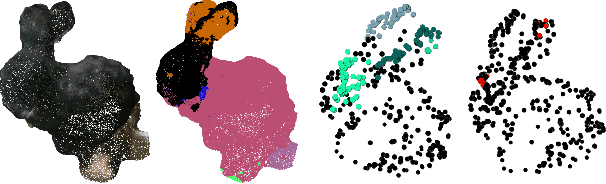

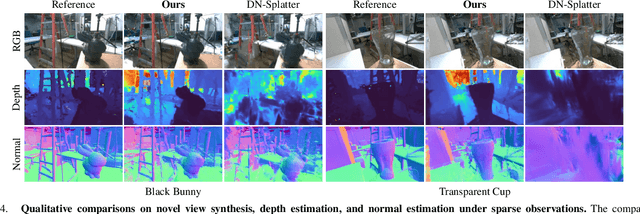

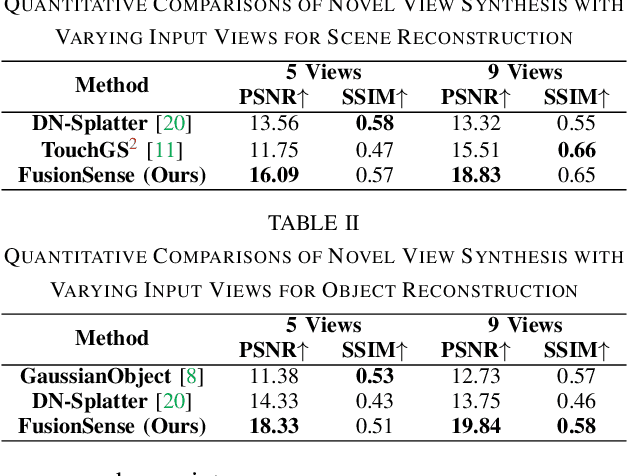

Abstract: Humans effortlessly integrate common-sense knowledge with sensory input from vision and touch to understand their surroundings. Emulating this capability, we introduce FusionSense, a novel 3D reconstruction framework that enables robots to fuse priors from foundation models with highly sparse observations from vision and tactile sensors. FusionSense addresses three key challenges: (i) How can robots efficiently acquire robust global shape information about the surrounding scene and objects? (ii) How can robots strategically select touch points on the object using geometric and common-sense priors? (iii) How can partial observations such as tactile signals improve the overall representation of the object? Our framework employs 3D Gaussian Splatting as a core representation and incorporates a hierarchical optimization strategy involving global structure construction, object visual hull pruning, and local geometric constraints. This advancement results in fast and robust perception in environments with traditionally challenging objects that are transparent, reflective, or dark, enabling more downstream manipulation or navigation tasks. Experiments on real-world data suggest that our framework outperforms previous state-of-the-art sparse-view methods. All code and data are open-sourced on the project website.

An Intelligent Robotic System for Perceptive Pancake Batter Stirring and Precise Pouring

Jul 01, 2024

Abstract: Cooking robots have long been desired by the commercial market, but the technical challenges remain significant. A major difficulty comes from the need to perceive and handle liquids with different properties. This paper presents a robot system that mixes batter and makes pancakes out of it, where understanding and handling the viscous liquid is an essential component. The system integrates haptic sensing and control algorithms to autonomously stir flour and water to achieve the desired batter uniformity, estimate the batter's properties such as the water-flour ratio and liquid level, and perform precise manipulations to pour the batter into any specified shape. Experimental results show the system's capability to always produce batter of desired uniformity, estimate water-flour ratio and liquid level precisely, and accurately pour it into complex shapes. This research showcases the potential for robots to assist in kitchens and is a step towards commercial culinary automation.

Kitchen Artist: Precise Control of Liquid Dispensing for Gourmet Plating

Nov 20, 2023

Abstract: Manipulating liquid is widely required for many tasks, especially in cooking. A common way to address this is extruding viscous liquid from a squeeze bottle. In this work, our goal is to create a sauce plating robot, which requires precise control of the thickness of squeezed liquids on a surface. Different liquids demand different manipulation policies. We command the robot to tilt the container and monitor the liquid response using a force sensor to identify liquid properties. Based on the liquid properties, we predict the liquid behavior with fixed squeezing motions in a data-driven way and calculate the required drawing speed for the desired stroke size. This open-loop system works effectively even without sensor feedback. Our experiments demonstrate accurate stroke size control across different liquids and fill levels. We show that understanding liquid properties can facilitate effective liquid manipulation. More importantly, our dish garnishing robot has a wide range of applications and holds significant commercialization potential.

Estimating Properties of Solid Particles Inside Container Using Touch Sensing

Jul 28, 2023

Abstract: Solid particles, such as rice and coffee beans, are commonly stored in containers and are ubiquitous in our daily lives. Understanding those particles' properties could help us make later decisions or perform later manipulation tasks such as pouring. Humans typically interact with the containers to get an understanding of the particles inside them, but it is still a challenge for robots to achieve that. This work utilizes tactile sensing to estimate multiple properties of solid particles enclosed in the container, specifically, content mass, content volume, particle size, and particle shape. We design a sequence of robot actions to interact with the container. Based on physical understanding, we extract static force/torque value from the F/T sensor, vibration-related features and topple-related features from the newly designed high-speed GelSight tactile sensor to estimate those four particle properties. We test our method on 37 very different daily particles, including powder, rice, beans, tablets, etc. Experiments show that our approach is able to estimate content mass with an error of 1.8 g, content volume with an error of 6.1 ml, particle size with an error of 1.1 mm, and achieves an accuracy of 75.6% for particle shape estimation. In addition, our method can generalize to unseen particles with unknown volumes. By estimating these particle properties, our method can help robots to better perceive the granular media and help with different manipulation tasks in daily life and industry.

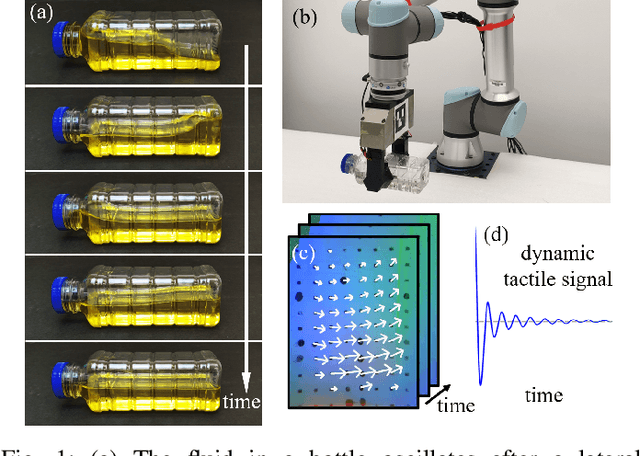

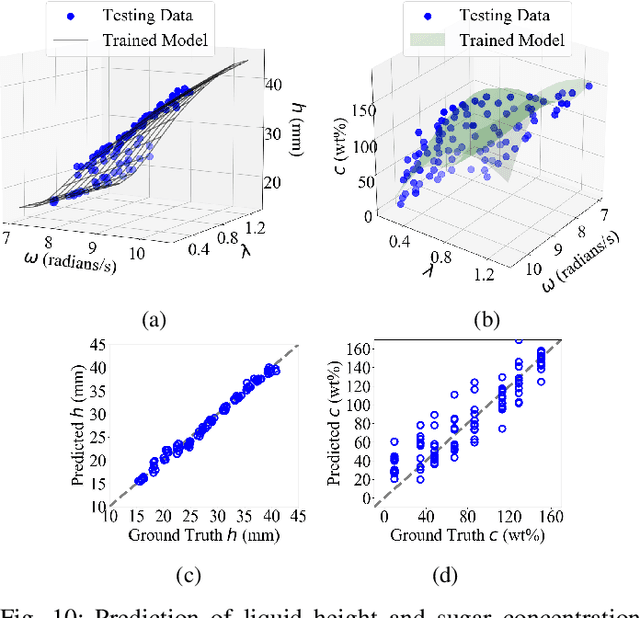

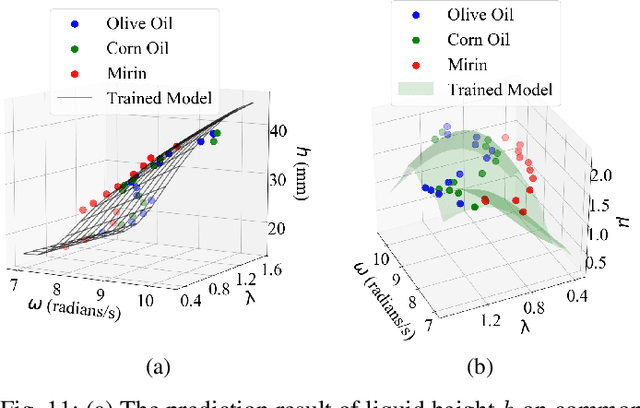

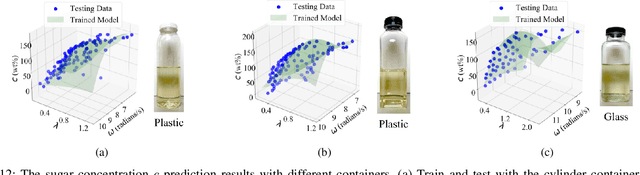

Understanding Dynamic Tactile Sensing for Liquid Property Estimation

May 18, 2022

Abstract: Humans perceive the world by interacting with objects, which often happens in a dynamic way. For example, a human would shake a bottle to guess its content. However, it remains a challenge for robots to understand many dynamic signals during contact well. This paper investigates dynamic tactile sensing by tackling the task of estimating liquid properties. We propose a new way of thinking about dynamic tactile sensing: building a lightweight data-driven model based on a simplified physical principle. The liquid in a bottle will oscillate after a perturbation. We propose a simple physics-inspired model to explain this oscillation and use a high-resolution tactile sensor, GelSight, to sense it. Specifically, the viscosity and the height of the liquid determine the decay rate and frequency of the oscillation. We then train a Gaussian Process Regression model on a small amount of real data to estimate the liquid properties. Experiments show that our model can classify three different liquids with 100% accuracy. The model can estimate volume with high precision and even estimate the concentration of a sugar-water solution. It is data-efficient and can easily generalize to other liquids and bottles. Our work poses a physically-inspired understanding of the correlation between dynamic tactile signals and the dynamic performance of the liquid. Our approach creates a good balance between simplicity, accuracy, and generality. It will help robots to better perceive liquids in different environments such as kitchens, food factories, and pharmaceutical factories.
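The decay rate and frequency named in this abstract can be made concrete with a small sketch. It assumes the oscillation follows an idealized damped cosine, x(t) = exp(-decay_rate * t) * cos(2 * pi * frequency * t), and recovers both parameters from the crests of a synthetic, noise-free signal. The signal model, function names, and peak-based estimator are assumptions for illustration; the paper itself feeds such features into a Gaussian Process Regression model, which is not reproduced here.

```python
import math


def simulate_damped_oscillation(decay_rate, frequency_hz, duration_s, dt):
    """Sample an idealized damped cosine (assumed model, noise-free)."""
    n_samples = int(round(duration_s / dt))
    return [
        math.exp(-decay_rate * i * dt) * math.cos(2 * math.pi * frequency_hz * i * dt)
        for i in range(n_samples)
    ]


def find_peaks(signal):
    """Indices of interior local maxima (the crests of the oscillation)."""
    return [
        i
        for i in range(1, len(signal) - 1)
        if signal[i - 1] < signal[i] >= signal[i + 1]
    ]


def estimate_decay_and_frequency(signal, dt):
    """Estimate (decay_rate, frequency_hz) from the first and last crest."""
    peaks = find_peaks(signal)
    if len(peaks) < 2:
        raise ValueError("need at least two crests to estimate parameters")
    first, last = peaks[0], peaks[-1]
    elapsed = (last - first) * dt
    # Crest spacing gives the period; crest amplitude ratio gives the decay.
    period = elapsed / (len(peaks) - 1)
    decay_rate = math.log(signal[first] / signal[last]) / elapsed
    return decay_rate, 1.0 / period
```

On real tactile signals the crests would be noisy, so a fit over the whole waveform would likely be more robust than this two-crest estimate.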

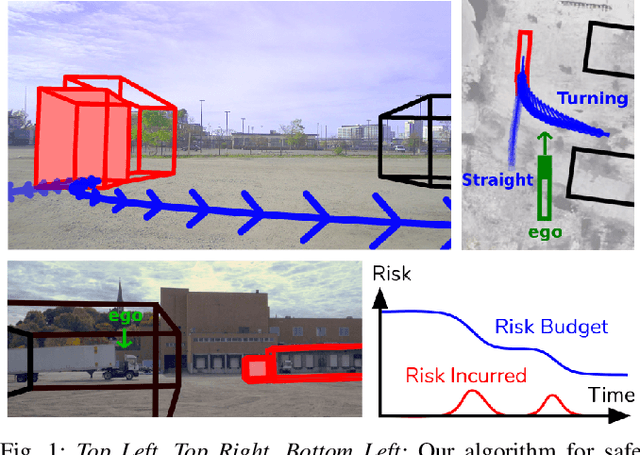

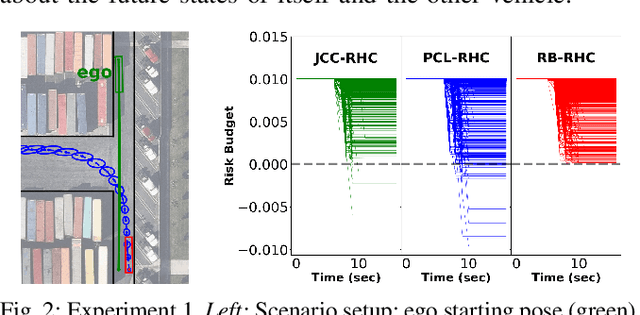

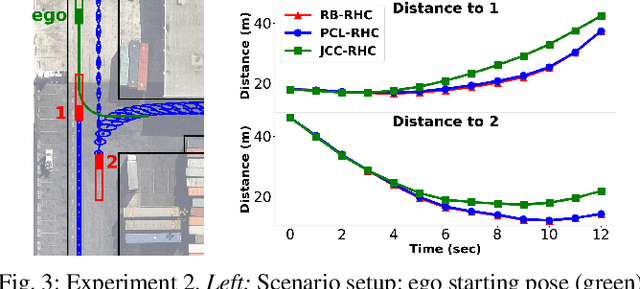

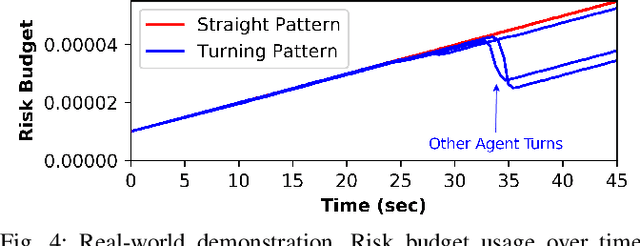

Planning on a Budget: Safe Non-Conservative Planning in Probabilistic Dynamic Environments

Jun 16, 2021

Abstract: Planning in environments with other agents whose future actions are uncertain often requires a compromise between safety and performance. Here our goal is to design efficient planning algorithms with guaranteed bounds on the probability of safety violation, which nonetheless achieve non-conservative performance. To quantify a system's risk, we define a natural criterion called interval risk bounds (IRBs), which provide a parametric upper bound on the probability of safety violation over a given time interval or task. We present a novel receding horizon algorithm, and prove that it can satisfy a desired IRB. Our algorithm maintains a dynamic risk budget which constrains the allowable risk at each iteration, and guarantees recursive feasibility by requiring a safe set to be reachable by a contingency plan within the budget. We empirically demonstrate that our algorithm is both safer and less conservative than strong baselines in two simulated autonomous driving experiments in scenarios involving collision avoidance with other vehicles, and additionally demonstrate our algorithm running on an autonomous class 8 truck.
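The idea of a risk budget that is spent across planning iterations can be sketched with a toy example. This is not the paper's algorithm: it only shows budget bookkeeping under a union bound, assuming each iteration offers candidate plans with known violation probabilities, that a riskier candidate is less conservative, and that a zero-risk contingency plan is always available. All names are hypothetical.

```python
def allocate_risk(step_options, total_budget):
    """Greedy toy allocation of a total risk budget across iterations.

    step_options: one list per iteration, holding the violation
        probability of each candidate plan (assumed known).
    total_budget: upper bound on the summed risk over all iterations.

    Returns the risk spent at each iteration and the unspent budget.
    By the union bound, the overall violation probability is at most
    the sum of the per-iteration risks, which never exceeds the budget.
    """
    remaining = total_budget
    spent = []
    for options in step_options:
        affordable = [risk for risk in options if risk <= remaining]
        # Fall back to the assumed zero-risk contingency plan when no
        # candidate fits in the remaining budget.
        risk = max(affordable) if affordable else 0.0
        spent.append(risk)
        remaining -= risk
    return spent, remaining
```

For example, `allocate_risk([[0.01, 0.03], [0.01, 0.05], [0.04]], 0.05)` spends 0.03, then 0.01, and then falls back to the contingency because 0.04 exceeds what is left.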

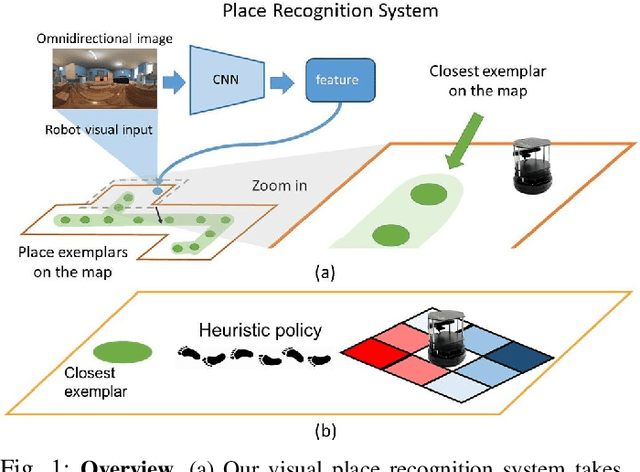

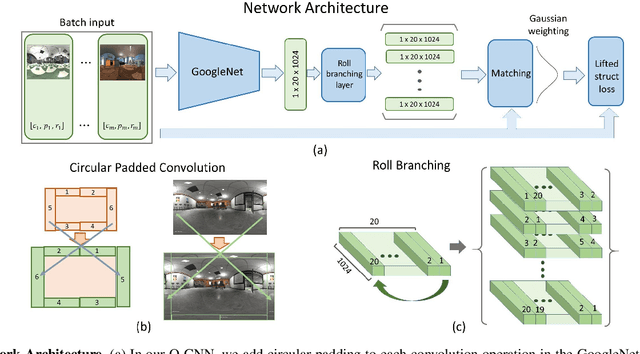

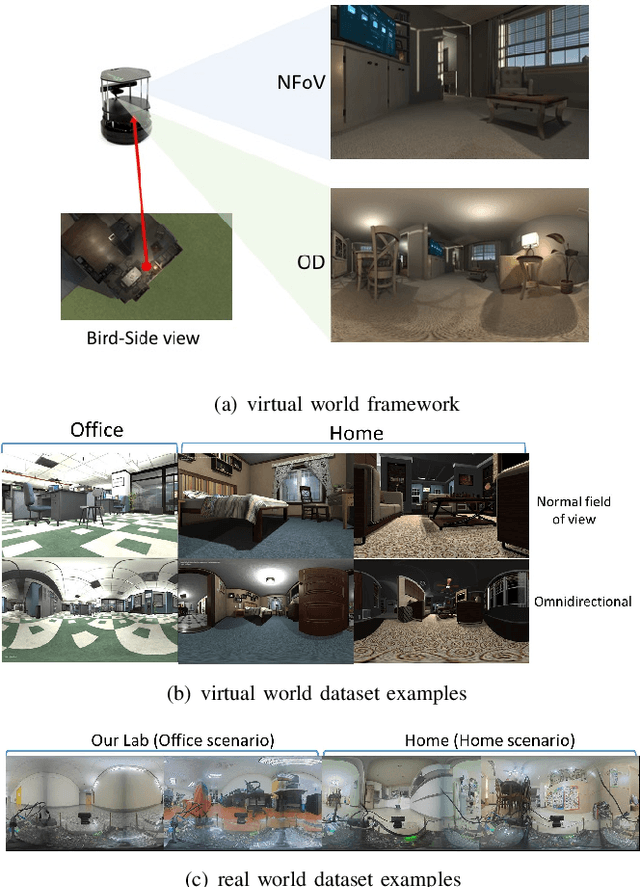

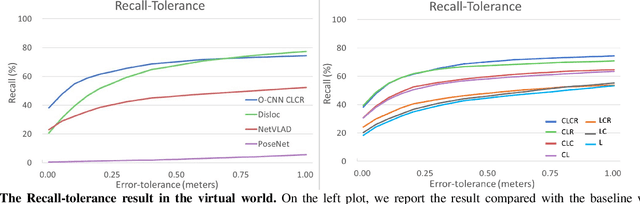

Omnidirectional CNN for Visual Place Recognition and Navigation

Mar 12, 2018

Abstract: Visual place recognition is challenging, especially when only a few place exemplars are given. To mitigate the challenge, we consider a place recognition method using omnidirectional cameras and propose a novel Omnidirectional Convolutional Neural Network (O-CNN) to handle severe camera pose variation. Given a visual input, the task of the O-CNN is not to retrieve the matched place exemplar, but to retrieve the closest place exemplar and estimate the relative distance between the input and the closest place. With the ability to estimate relative distance, a heuristic policy is proposed to navigate a robot to the retrieved closest place. Note that the network is designed to take advantage of the omnidirectional view by incorporating circular padding and rotation invariance. To train a powerful O-CNN, we build a virtual world for training on a large scale. We also propose a continuous lifted structured feature embedding loss to learn the concept of distance efficiently. Finally, our experimental results confirm that our method achieves state-of-the-art accuracy and speed with both the virtual world and real-world datasets.
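Circular padding, mentioned in this abstract, can be illustrated on a single image row. The sketch below assumes a 1D row sampled around a full 360-degree panorama and an odd-length kernel; it wraps the row ends around before filtering, so rotating the camera (a cyclic shift of the row) only shifts the output. The function names are illustrative and this is not the O-CNN architecture itself.

```python
def circular_pad_row(row, pad):
    """Pad a row by wrapping values around from the opposite end."""
    if pad < 0 or pad > len(row):
        raise ValueError("pad must be between 0 and len(row)")
    if pad == 0:
        return list(row)
    return list(row[-pad:]) + list(row) + list(row[:pad])


def circular_conv1d(row, kernel):
    """Cross-correlate a row with an odd-length kernel using circular padding.

    The output has the same length as the input, and the left and right
    borders see each other, as they do in a panoramic image.
    """
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")
    pad = len(kernel) // 2
    padded = circular_pad_row(row, pad)
    return [
        sum(weight * padded[i + j] for j, weight in enumerate(kernel))
        for i in range(len(row))
    ]
```

With `row = [1, 2, 3, 4, 5]` and `kernel = [1, 0, -1]`, the result is `[3, -2, -2, -2, 3]`, and filtering the cyclically shifted row `[3, 4, 5, 1, 2]` gives the same values shifted by two positions.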
