Habib Hajimolahoseini

Accelerating the Low-Rank Decomposed Models

Jul 24, 2024

Abstract:Tensor decomposition is a mathematically supported technique for data compression. It applies a low rank decomposition to tensors or matrices in order to reduce the redundancy of the data. However, it is not a popular technique for compressing AI models due to the high number of new layers added to the architecture after decomposition. Although the number of parameters can shrink significantly, the model can become more than twice as deep, which can add latency to training or inference. In this paper, we present a comprehensive study of how to modify the low rank decomposition technique in AI models so that we benefit from high accuracy and low memory consumption while also speeding up training and inference.
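The trade-off described in this abstract can be illustrated with simple arithmetic. The sketch below is not taken from the paper: it assumes a single dense layer with an m x n weight matrix replaced by two stacked layers of rank r, and all function names are hypothetical.

```python
# Illustrative sketch only: parameter counts for a dense layer before and
# after a rank-r factorization W (m x n) ~= U (m x r) @ V (r x n).
# Function names are hypothetical and not from the paper.

def dense_params(m: int, n: int) -> int:
    """Weights in a single m x n linear layer (bias ignored)."""
    return m * n


def low_rank_params(m: int, n: int, r: int) -> int:
    """Weights in the two stacked layers that replace the dense layer."""
    return r * (m + n)


def break_even_rank(m: int, n: int) -> int:
    """Largest rank for which the factorized form has strictly fewer weights."""
    # r * (m + n) < m * n  is equivalent to  r <= (m * n - 1) // (m + n)
    return (m * n - 1) // (m + n)


m, n, r = 1024, 1024, 128
original = dense_params(m, n)          # one layer
compressed = low_rank_params(m, n, r)  # two layers, so the depth doubles
ratio = original / compressed
print(original, compressed, ratio, break_even_rank(m, n))
```

With these assumed sizes the weight count drops by a factor of four, yet every decomposed layer becomes two layers, which is the source of the extra depth and latency the abstract refers to.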

Is 3D Convolution with 5D Tensors Really Necessary for Video Analysis?

Jul 23, 2024

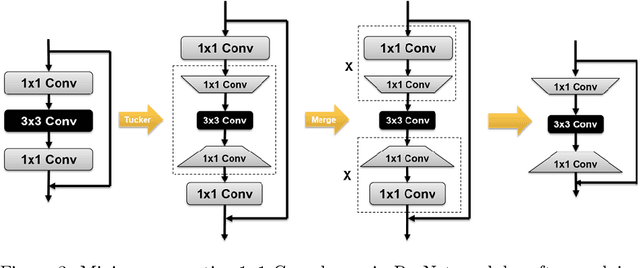

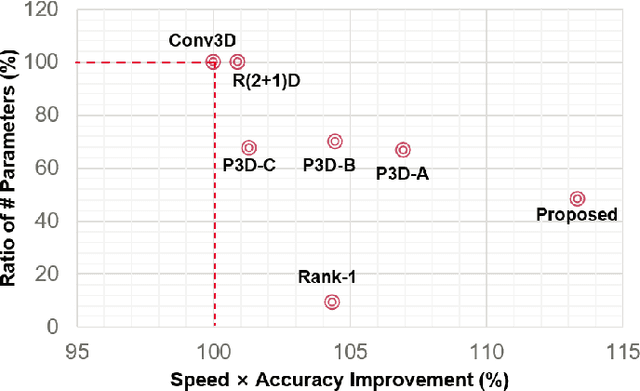

Abstract:In this paper, we present a comprehensive study and propose several novel techniques for implementing 3D convolutional blocks using 2D and/or 1D convolutions with only 4D and/or 3D tensors. Our motivation is that 3D convolutions with 5D tensors are computationally very expensive and they may not be supported by some of the edge devices used in real-time applications such as robots. The existing approaches mitigate this by splitting the 3D kernels into spatial and temporal domains, but they still use 3D convolutions with 5D tensors in their implementations. We resolve this issue by introducing some appropriate 4D/3D tensor reshaping as well as new combination techniques for spatial and temporal splits. The proposed implementation methods show significant improvement both in terms of efficiency and accuracy. The experimental results confirm that the proposed spatio-temporal processing structure outperforms the original model in terms of speed and accuracy using only 4D tensors with fewer parameters.

Single Parent Family: A Spectrum of Family Members from a Single Pre-Trained Foundation Model

Jun 28, 2024

Abstract:This paper introduces a novel method of Progressive Low Rank Decomposition (PLRD) tailored for the compression of large language models. Our approach leverages a pre-trained model, which is then incrementally decomposed to smaller sizes using progressively lower ranks. This method allows for significant reductions in computational overhead and energy consumption, as subsequent models are derived from the original without the need for retraining from scratch. We detail the implementation of PLRD, which strategically decreases the tensor ranks, thus optimizing the trade-off between model performance and resource usage. The efficacy of PLRD is demonstrated through extensive experiments showing that models trained with the PLRD method on only 1B tokens maintain comparable performance with traditionally trained models while using 0.1% of the tokens. The versatility of PLRD is highlighted by its ability to generate multiple model sizes from a single foundational model, adapting fluidly to varying computational and memory budgets. Our findings suggest that PLRD could set a new standard for the efficient scaling of LLMs, making advanced AI more feasible on diverse platforms.

SkipViT: Speeding Up Vision Transformers with a Token-Level Skip Connection

Jan 27, 2024

Abstract:Vision transformers are known to be more computationally and data-intensive than CNN models. Transformer models such as ViT require all the input image tokens to learn the relationship among them. However, many of these tokens are not informative and may contain irrelevant information such as unrelated background or unimportant scenery. These tokens are overlooked by the multi-head self-attention (MHSA), resulting in many redundant and unnecessary computations in MHSA and the feed-forward network (FFN). In this work, we propose a method to reduce the unnecessary interactions between unimportant tokens by separating them and sending them through a different low-cost computational path. Our method does not add any parameters to the ViT model and aims to find the best trade-off between training throughput and achieving a 0% loss in the Top-1 accuracy of the final model. Our experimental results on training ViT-small from scratch show that SkipViT is capable of effectively dropping 55% of the tokens while gaining more than 13% training throughput and maintaining classification accuracy at the level of the baseline model on Huawei Ascend910A.

SwiftLearn: A Data-Efficient Training Method of Deep Learning Models using Importance Sampling

Nov 25, 2023

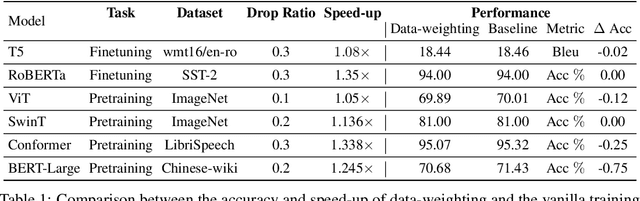

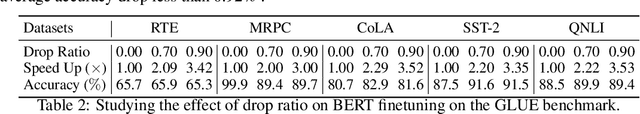

Abstract:In this paper, we present SwiftLearn, a data-efficient approach to accelerate training of deep learning models using a subset of data samples selected during the warm-up stages of training. This subset is selected based on an importance criterion measured over the entire dataset during the warm-up stages, aiming to preserve the model performance with fewer examples during the rest of training. The importance measure we propose can be updated periodically during training, so that all of the data samples have a chance to return to the training loop if they show a higher importance. The model architecture is unchanged, but since the number of data samples controls the number of forward and backward passes during training, we can reduce the training time by reducing the number of training samples used in each epoch. Experimental results on a variety of CV and NLP models during both pretraining and finetuning show that the model performance can be preserved while achieving a significant speed-up during training. More specifically, BERT finetuning on the GLUE benchmark shows that almost 90% of the data can be dropped, achieving an end-to-end average speedup of 3.36x while keeping the average accuracy drop below 0.92%.
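The subset selection described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the importance scores are random stand-ins (the abstract does not define the measure), and `select_important_subset` and `keep_fraction` are hypothetical names.

```python
import random


def select_important_subset(scores, keep_fraction):
    """Return the indices of the highest-scoring samples, in ascending order."""
    k = max(1, int(len(scores) * keep_fraction))
    # Rank sample indices from most to least important.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


random.seed(0)
num_samples = 1000
# Stand-in scores (assumption): in practice these would be measured over
# the whole dataset during the warm-up epochs.
scores = [random.random() for _ in range(num_samples)]

# Keep 10% of the data, mirroring the "almost 90% dropped" setting.
subset = select_important_subset(scores, keep_fraction=0.1)
print(len(subset), "of", num_samples, "samples kept per epoch")
```

Re-running the selection with refreshed scores every few epochs would give dropped samples a chance to re-enter training, which matches the periodic update the abstract mentions.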

GQKVA: Efficient Pre-training of Transformers by Grouping Queries, Keys, and Values

Nov 06, 2023

Abstract:Massive transformer-based models face several challenges, including slow and computationally intensive pre-training and over-parametrization. This paper addresses these challenges by proposing a versatile method called GQKVA, which generalizes query, key, and value grouping techniques. GQKVA is designed to speed up transformer pre-training while reducing the model size. Our experiments with various GQKVA variants highlight a clear trade-off between performance and model size, allowing for customized choices based on resource and time limitations. Our findings also indicate that the conventional multi-head attention approach is not always the best choice, as there are lighter and faster alternatives available. We tested our method on ViT, which achieved an approximate 0.3% increase in accuracy while reducing the model size by about 4% in the task of image classification. Additionally, our most aggressive model reduction experiment resulted in a reduction of approximately 15% in model size, with only around a 1% drop in accuracy.
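To see why grouping queries, keys, and values shrinks a model, a rough parameter count helps. The sketch below is an assumption-laden illustration rather than the paper's formulation: it supposes that each group owns one projection matrix of shape d_model x d_head, and the function name and group counts are hypothetical. The dimensions are those commonly used for ViT-small (width 384, 6 heads of size 64).

```python
# Illustrative sketch only: count Q/K/V projection weights when heads share
# projections within groups. The grouping scheme is an assumption.

def qkv_projection_params(d_model, d_head, q_groups, k_groups, v_groups):
    """Weights in the Q, K, and V projections (biases ignored)."""
    per_projection = d_model * d_head
    return per_projection * (q_groups + k_groups + v_groups)


d_model, n_heads, d_head = 384, 6, 64

# Conventional multi-head attention: every head has its own Q, K, and V.
mha = qkv_projection_params(d_model, d_head, n_heads, n_heads, n_heads)

# Grouped keys and values: 6 query projections, 2 shared K and 2 shared V.
grouped_kv = qkv_projection_params(d_model, d_head, n_heads, 2, 2)

# Most aggressive case: a single shared projection for each of Q, K, and V.
single_group = qkv_projection_params(d_model, d_head, 1, 1, 1)

print(mha, grouped_kv, single_group)
```

Under these assumptions, fewer groups mean fewer projection weights per attention layer, which is the size and accuracy trade-off the abstract describes; the actual savings reported in the paper depend on the whole model, not only on these projections.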

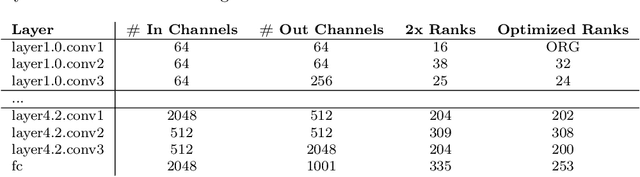

Speeding up Resnet Architecture with Layers Targeted Low Rank Decomposition

Sep 21, 2023

Abstract:Compression of a neural network can help in speeding up both the training and the inference of the network. In this research, we study applying compression using low rank decomposition on network layers. Our research demonstrates that to obtain a speedup, the compression methodology should be aware of the underlying hardware, as analysis is needed to choose which layers to compress. The advantage of our approach is demonstrated via a case study of compressing ResNet50 and training on full ImageNet-ILSVRC2012. We tested on two different hardware systems: Nvidia V100 and Huawei Ascend910. With hardware targeted compression, results showed a 5.36% training speedup on Ascend910 and a 15.79% inference speedup on Ascend310, with only a 1% drop in accuracy compared to the original uncompressed model.

Training Acceleration of Low-Rank Decomposed Networks using Sequential Freezing and Rank Quantization

Sep 07, 2023

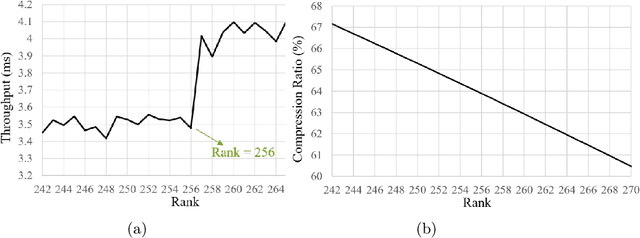

Abstract:Low Rank Decomposition (LRD) is a model compression technique applied to the weight tensors of deep learning models in order to reduce the number of trainable parameters and computational complexity. However, due to the high number of new layers added to the architecture after applying LRD, it may not lead to a high training/inference acceleration if the decomposition ranks are not small enough. The issue is that using small ranks increases the risk of a significant accuracy drop after decomposition. In this paper, we propose two techniques for accelerating low rank decomposed models without requiring small decomposition ranks. These methods include rank optimization and sequential freezing of decomposed layers. We perform experiments on both convolutional and transformer-based models. Experiments show that, when combined, these techniques can improve the model throughput by up to 60% during training and 37% during inference while preserving accuracy close to that of the original models.

Improving Resnet-9 Generalization Trained on Small Datasets

Sep 07, 2023

Abstract:This paper presents our proposed approach that won the first prize at the ICLR competition on Hardware Aware Efficient Training. The challenge is to achieve the highest possible accuracy in an image classification task in less than 10 minutes. The training is done on a small dataset of 5000 images picked randomly from the CIFAR-10 dataset. The evaluation is performed by the competition organizers on a secret dataset with 1000 images of the same size. Our approach applies a series of techniques for improving the generalization of ResNet-9, including sharpness aware optimization, label smoothing, gradient centralization, input patch whitening, and meta-learning based training. Our experiments show that ResNet-9 can achieve an accuracy of 88% while trained on only a 10% subset of the CIFAR-10 dataset in less than 10 minutes.

A Short Study on Compressing Decoder-Based Language Models

Oct 16, 2021

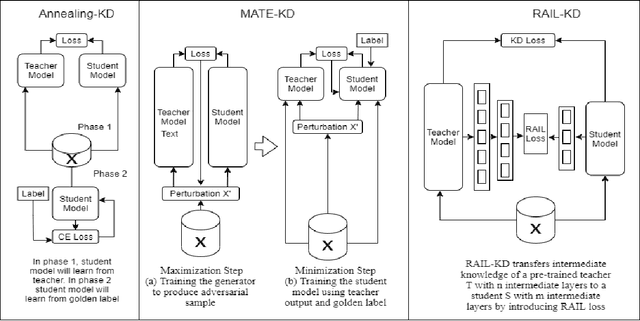

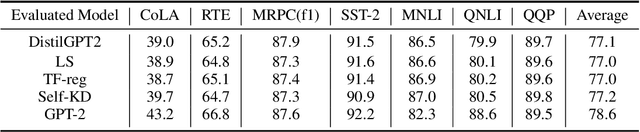

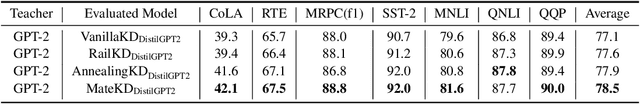

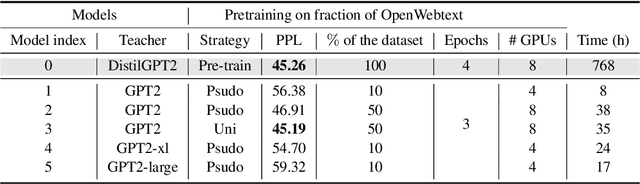

Abstract:Pre-trained Language Models (PLMs) have been successful for a wide range of natural language processing (NLP) tasks. State-of-the-art PLMs, however, are too large to be used on edge devices. As a result, the topic of model compression has attracted increasing attention in the NLP community. Most of the existing works focus on compressing encoder-based models (tiny-BERT, distilBERT, distilRoBERTa, etc.). However, to the best of our knowledge, the compression of decoder-based models (such as GPT-2) has not been investigated much. Our paper aims to fill this gap. Specifically, we explore two directions: 1) we employ current state-of-the-art knowledge distillation techniques to improve fine-tuning of DistilGPT-2; 2) we pre-train a compressed GPT-2 model using layer truncation and compare it against the distillation-based method (DistilGPT-2). The training time of our compressed model is significantly less than that of DistilGPT-2, but it can achieve better performance when fine-tuned on downstream tasks. We also demonstrate the impact of data cleaning on model performance.
