Guillermo Cámbara

VCNAC: A Variable-Channel Neural Audio Codec for Mono, Stereo, and Surround Sound

Jan 21, 2026

Abstract:We present VCNAC, a variable channel neural audio codec. Our approach features a single encoder and decoder parametrization that enables native inference for different channel setups, from mono speech to cinematic 5.1 channel surround audio. Channel compatibility objectives ensure that multi-channel content maintains perceptual quality when decoded to fewer channels. The shared representation enables training of generative language models on a single set of codebooks while supporting inference-time scalability across modalities and channel configurations. Evaluation using objective spatial audio metrics and subjective listening tests demonstrates that our unified approach maintains high reconstruction quality across mono, stereo, and surround audio configurations.

BASE TTS: Lessons from building a billion-parameter Text-to-Speech model on 100K hours of data

Feb 15, 2024

Abstract:We introduce a text-to-speech (TTS) model called BASE TTS, which stands for **B**ig **A**daptive **S**treamable TTS with **E**mergent abilities. BASE TTS is the largest TTS model to-date, trained on 100K hours of public domain speech data, achieving a new state-of-the-art in speech naturalness. It deploys a 1-billion-parameter autoregressive Transformer that converts raw texts into discrete codes ("speechcodes") followed by a convolution-based decoder which converts these speechcodes into waveforms in an incremental, streamable manner. Further, our speechcodes are built using a novel speech tokenization technique that features speaker ID disentanglement and compression with byte-pair encoding. Echoing the widely-reported "emergent abilities" of large language models when trained on increasing volume of data, we show that BASE TTS variants built with 10K+ hours and 500M+ parameters begin to demonstrate natural prosody on textually complex sentences. We design and share a specialized dataset to measure these emergent abilities for text-to-speech. We showcase state-of-the-art naturalness of BASE TTS by evaluating against baselines that include publicly available large-scale text-to-speech systems: YourTTS, Bark and TortoiseTTS. Audio samples generated by the model can be heard at https://amazon-ltts-paper.com/.

Data Augmentation for Low-Resource Quechua ASR Improvement

Jul 14, 2022

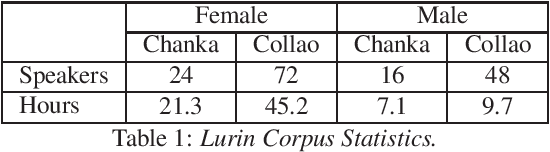

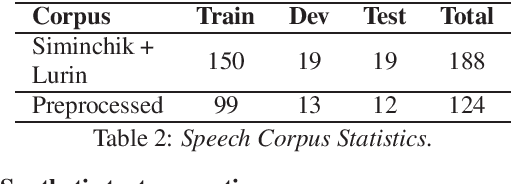

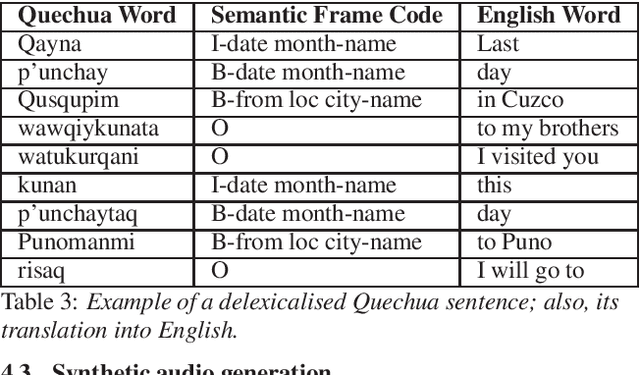

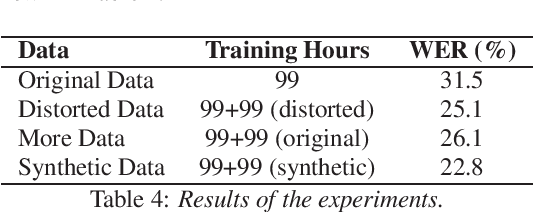

Abstract:Automatic Speech Recognition (ASR) is a key element in new services that helps users to interact with an automated system. Deep learning methods have made it possible to deploy systems with word error rates below 5% for ASR of English. However, the use of these methods is only available for languages with hundreds or thousands of hours of audio and their corresponding transcriptions. For the so-called low-resource languages to speed up the availability of resources that can improve the performance of their ASR systems, methods of creating new resources on the basis of existing ones are being investigated. In this paper we describe our data augmentation approach to improve the results of ASR models for low-resource and agglutinative languages. We carry out experiments developing an ASR for Quechua using the wav2letter++ model. We reduced WER by 8.73% through our approach to the base model. The resulting ASR model obtained 22.75% WER and was trained with 99 hours of original resources and 99 hours of synthetic data obtained with a combination of text augmentation and synthetic speech generation.

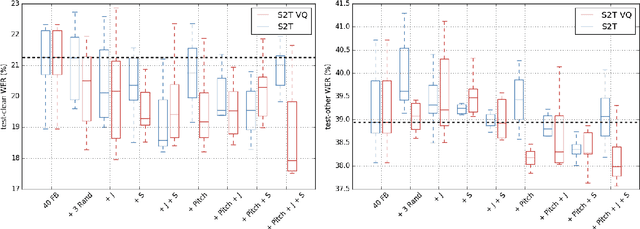

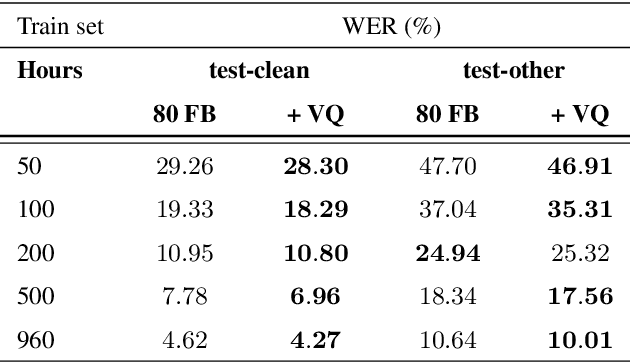

Voice Quality and Pitch Features in Transformer-Based Speech Recognition

Dec 21, 2021

Abstract:Jitter and shimmer measurements have shown to be carriers of voice quality and prosodic information which enhance the performance of tasks like speaker recognition, diarization or automatic speech recognition (ASR). However, such features have been seldom used in the context of neural-based ASR, where spectral features often prevail. In this work, we study the effects of incorporating voice quality and pitch features altogether and separately to a Transformer-based ASR model, with the intuition that the attention mechanisms might exploit latent prosodic traits. For doing so, we propose separated convolutional front-ends for prosodic and spectral features, showing that this architectural choice yields better results than simple concatenation of such pitch and voice quality features to mel-spectrogram filterbanks. Furthermore, we find mean Word Error Rate relative reductions of up to 5.6% with the LibriSpeech benchmark. Such findings motivate further research on the application of prosody knowledge for increasing the robustness of Transformer-based ASR.

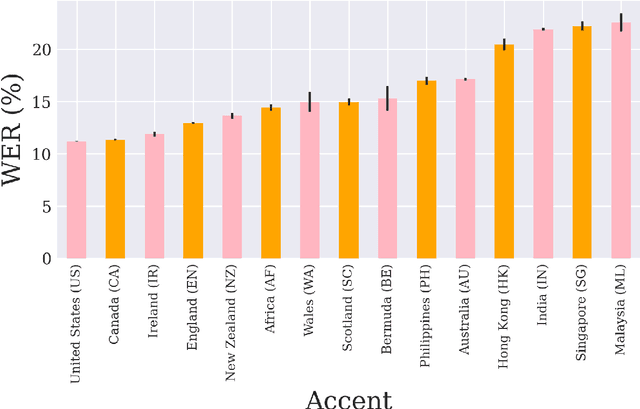

English Accent Accuracy Analysis in a State-of-the-Art Automatic Speech Recognition System

May 09, 2021

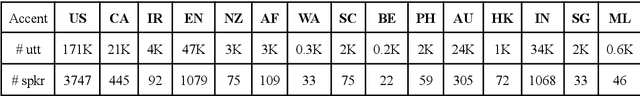

Abstract:Nowadays, research in speech technologies has gotten a lot out thanks to recently created public domain corpora that contain thousands of recording hours. These large amounts of data are very helpful for training the new complex models based on deep learning technologies. However, the lack of dialectal diversity in a corpus is known to cause performance biases in speech systems, mainly for underrepresented dialects. In this work, we propose to evaluate a state-of-the-art automatic speech recognition (ASR) deep learning-based model, using unseen data from a corpus with a wide variety of labeled English accents from different countries around the world. The model has been trained with 44.5K hours of English speech from an open access corpus called Multilingual LibriSpeech, showing remarkable results in popular benchmarks. We test the accuracy of such ASR against samples extracted from another public corpus that is continuously growing, the Common Voice dataset. Then, we present graphically the accuracy in terms of Word Error Rate of each of the different English included accents, showing that there is indeed an accuracy bias in terms of accentual variety, favoring the accents most prevalent in the training corpus.

Speech Enhancement for Wake-Up-Word detection in Voice Assistants

Jan 29, 2021

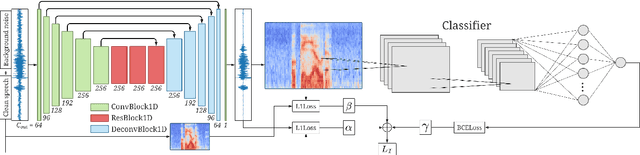

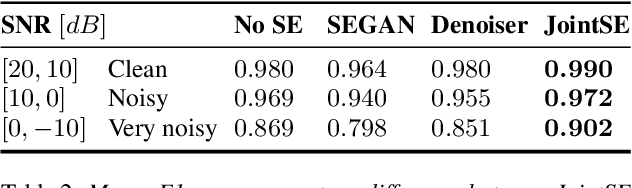

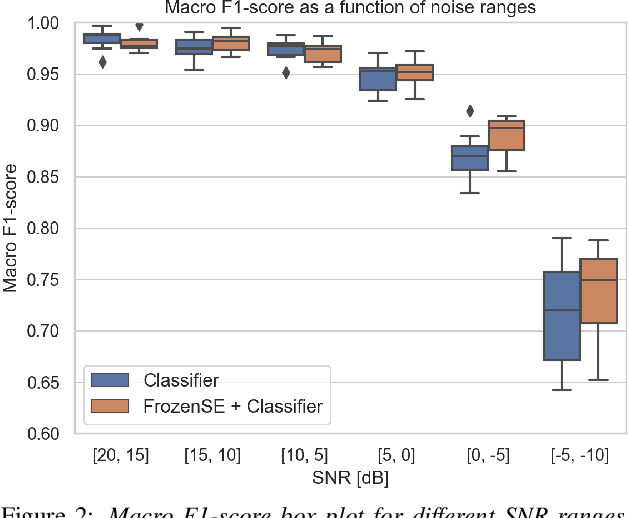

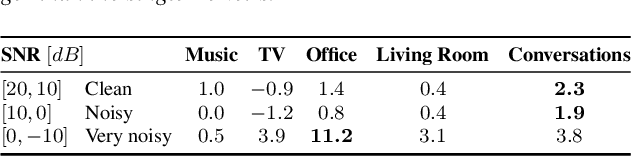

Abstract:Keyword spotting and in particular Wake-Up-Word (WUW) detection is a very important task for voice assistants. A very common issue of voice assistants is that they get easily activated by background noise like music, TV or background speech that accidentally triggers the device. In this paper, we propose a Speech Enhancement (SE) model adapted to the task of WUW detection that aims at increasing the recognition rate and reducing the false alarms in the presence of these types of noises. The SE model is a fully-convolutional denoising auto-encoder at waveform level and is trained using a log-Mel Spectrogram and waveform reconstruction losses together with the BCE loss of a simple WUW classification network. A new database has been purposely prepared for the task of recognizing the WUW in challenging conditions containing negative samples that are very phonetically similar to the keyword. The database is extended with public databases and an exhaustive data augmentation to simulate different noises and environments. The results obtained by concatenating the SE with a simple and state-of-the-art WUW detectors show that the SE does not have a negative impact on the recognition rate in quiet environments while increasing the performance in the presence of noise, especially when the SE and WUW detector are trained jointly end-to-end.

BCN2BRNO: ASR System Fusion for Albayzin 2020 Speech to Text Challenge

Jan 29, 2021

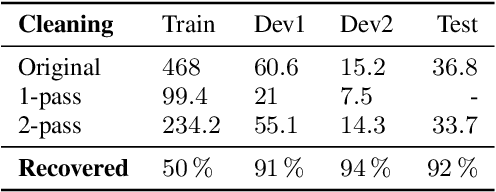

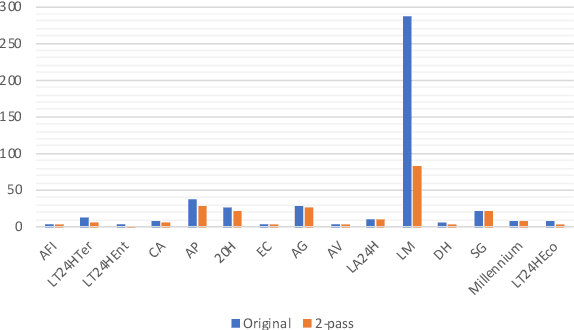

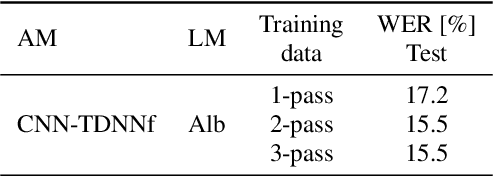

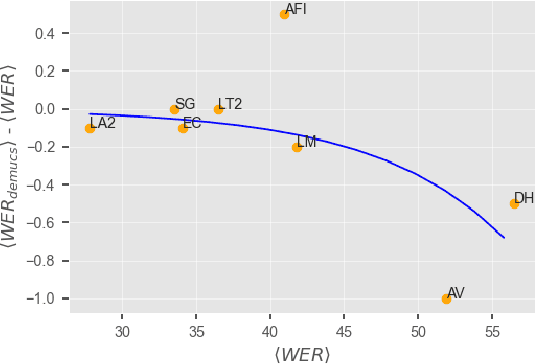

Abstract:This paper describes joint effort of BUT and Telefónica Research on development of Automatic Speech Recognition systems for Albayzin 2020 Challenge. We compare approaches based on either hybrid or end-to-end models. In hybrid modelling, we explore the impact of SpecAugment layer on performance. For end-to-end modelling, we used a convolutional neural network with gated linear units (GLUs). The performance of such model is also evaluated with an additional n-gram language model to improve word error rates. We further inspect source separation methods to extract speech from noisy environment (i.e. TV shows). More precisely, we assess the effect of using a neural-based music separator named Demucs. A fusion of our best systems achieved 23.33% WER in official Albayzin 2020 evaluations. Aside from techniques used in our final submitted systems, we also describe our efforts in retrieving high quality transcripts for training.

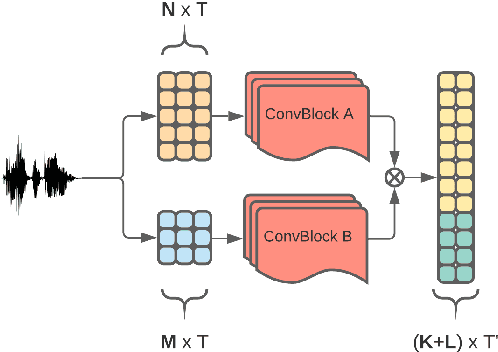

Convolutional Speech Recognition with Pitch and Voice Quality Features

Sep 02, 2020

Abstract:The effects of adding pitch and voice quality features such as jitter and shimmer to a state-of-the-art CNN model for Automatic Speech Recognition are studied in this work. Pitch features have been previously used for improving classical HMM and DNN baselines, while jitter and shimmer parameters have proven to be useful for tasks like speaker or emotion recognition. Up to our knowledge, this is the first work combining such pitch and voice quality features with modern convolutional architectures, showing improvements up to 2% absolute WER points, for the publicly available Spanish Common Voice dataset. Particularly, our work combines these features with mel-frequency spectral coefficients (MFSCs) to train a convolutional architecture with Gated Linear Units (Conv GLUs). Such models have shown to yield small word error rates, while being very suitable for parallel processing for online streaming recognition use cases. We have added pitch and voice quality functionality to Facebook's wav2letter speech recognition framework, and we provide with such code and recipes to the community, to carry on with further experiments. Besides, to the best of our knowledge, our Spanish Common Voice recipe is the first public Spanish recipe for wav2letter.

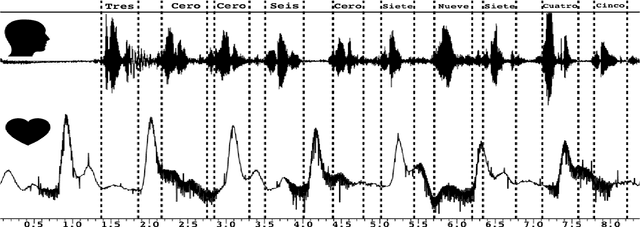

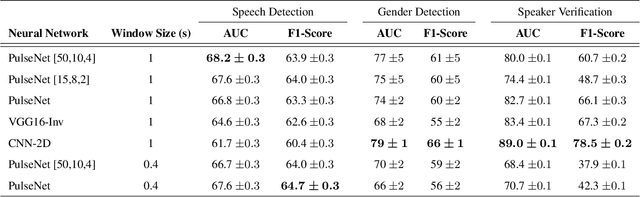

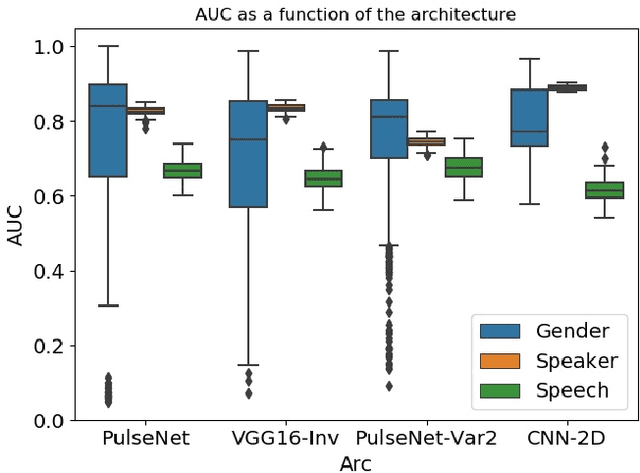

Detection of speech events and speaker characteristics through photo-plethysmographic signal neural processing

Nov 12, 2019

Abstract:The use of photoplethysmogram signal (PPG) for heart and sleep monitoring is commonly found nowadays in smartphones and wrist wearables. Besides common usages, it has been proposed and reported that person information can be extracted from PPG for other uses, like biometry tasks. In this work, we explore several end-to-end convolutional neural network architectures for detection of human's characteristics such as gender or person identity. In addition, we evaluate whether speech/non-speech events may be inferred from PPG signal, where speech might translate in fluctuations into the pulse signal. The obtained results are promising and clearly show the potential of fully end-to-end topologies for automatic extraction of meaningful biomarkers, even from a noisy signal sampled by a low-cost PPG sensor. The AUCs for best architectures put forward PPG wave as biological discriminant, reaching 79% and 89.0%, respectively for gender and person verification tasks. Furthermore, speech detection experiments reporting AUCs around 69% encourage us for further exploration about the feasibility of PPG for speech processing tasks.
