Guangshe Zhao

Inherent Redundancy in Spiking Neural Networks

Aug 16, 2023

Abstract: Spiking Neural Networks (SNNs) are well known as a promising energy-efficient alternative to conventional artificial neural networks. Because SNNs are commonly assumed to fire sparsely, the analysis and optimization of their inherent redundancy have been largely overlooked, which undermines the potential advantages of spike-based neuromorphic computing in accuracy and energy efficiency. In this work, we pose and focus on three key questions regarding the inherent redundancy in SNNs. We argue that the redundancy is induced by the spatio-temporal invariance of SNNs, which enhances the efficiency of parameter utilization but also introduces many noise spikes. Further, we analyze the effect of spatio-temporal invariance on the spatio-temporal dynamics and spike firing of SNNs. Then, motivated by these analyses, we propose an Advance Spatial Attention (ASA) module to harness SNNs' redundancy, which can adaptively optimize their membrane potential distribution by a pair of individual spatial attention sub-modules. In this way, noise spike features are accurately regulated. Experimental results demonstrate that the proposed method can significantly reduce spike firing while outperforming state-of-the-art SNN baselines. Our code is available at https://github.com/BICLab/ASA-SNN.

Probabilistic Modeling: Proving the Lottery Ticket Hypothesis in Spiking Neural Network

May 20, 2023

Abstract: The Lottery Ticket Hypothesis (LTH) states that a randomly initialized large neural network contains a small sub-network (i.e., a winning ticket) which, when trained in isolation, can achieve performance comparable to that of the large network. LTH opens up a new path for network pruning. Existing proofs of LTH in Artificial Neural Networks (ANNs) are based on continuous activation functions, such as ReLU, which satisfy the Lipschitz condition. However, these theoretical methods are not applicable to Spiking Neural Networks (SNNs) due to the discontinuity of the spiking function. We argue that it is possible to extend the scope of LTH by eliminating the Lipschitz condition. Specifically, we propose a novel probabilistic modeling approach for spiking neurons with complicated spatio-temporal dynamics. Then we theoretically and experimentally prove that LTH holds in SNNs. According to our theorem, we conclude that pruning directly according to weight magnitude in existing SNNs is clearly not optimal. We further design a new pruning criterion based on our theory, which achieves better pruning results than the baseline.
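For context, the weight-magnitude pruning that the abstract describes as not optimal can be sketched as follows. This is a minimal illustration of the generic baseline only, not the paper's probabilistic criterion; the function name `magnitude_prune`, the flat weight list, and the sparsity value are assumptions made for the example.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Generic magnitude-pruning baseline (hypothetical helper for illustration);
    it is not the pruning criterion proposed in the paper.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by ascending absolute value; the first n_prune are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


# Illustrative weights (made up for the example), pruned to 50% sparsity.
weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.4]
pruned_weights = magnitude_prune(weights, 0.5)
```

The baseline ranks weights by absolute value alone, so it ignores how a weight affects the firing behavior of the neuron it feeds, which is the aspect the abstract's theory-based criterion is meant to capture.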

Attention Spiking Neural Networks

Sep 28, 2022

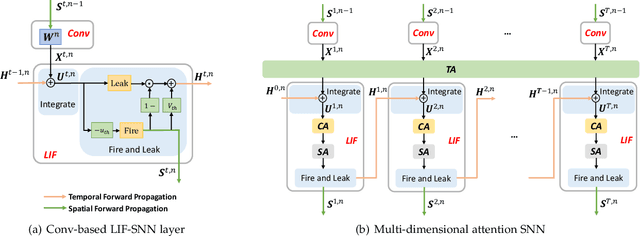

Abstract: Benefiting from the event-driven and sparse spiking characteristics of the brain, spiking neural networks (SNNs) are becoming an energy-efficient alternative to artificial neural networks (ANNs). However, the performance gap between SNNs and ANNs has long been a great hindrance to deploying SNNs ubiquitously. To leverage the full potential of SNNs, we study the effect of attention mechanisms in SNNs. We first present our idea of attention with a plug-and-play kit, termed the Multi-dimensional Attention (MA). Then, a new attention SNN architecture with end-to-end training called "MA-SNN" is proposed, which infers attention weights along the temporal, channel, and spatial dimensions separately or simultaneously. Based on existing neuroscience theories, we exploit the attention weights to optimize membrane potentials, which in turn regulate the spiking response in a data-dependent way. At the cost of negligible additional parameters, MA enables vanilla SNNs to achieve sparser spiking activity, better performance, and better energy efficiency concurrently. Experiments are conducted on event-based DVS128 Gesture/Gait action recognition and ImageNet-1K image classification. On Gesture/Gait, the spike counts are reduced by 84.9%/81.6%, and the task accuracy and energy efficiency are improved by 5.9%/4.7% and 3.4$\times$/3.2$\times$. On ImageNet-1K, we achieve top-1 accuracy of 75.92% and 77.08% on single/4-step Res-SNN-104, which are state-of-the-art results in SNNs. To the best of our knowledge, this is the first time that the SNN community has achieved performance comparable to or even better than its ANN counterpart on a large-scale dataset. Our work highlights the potential of SNNs as a general backbone to support various applications, with a good balance between effectiveness and efficiency.

Temporal-wise Attention Spiking Neural Networks for Event Streams Classification

Jul 25, 2021

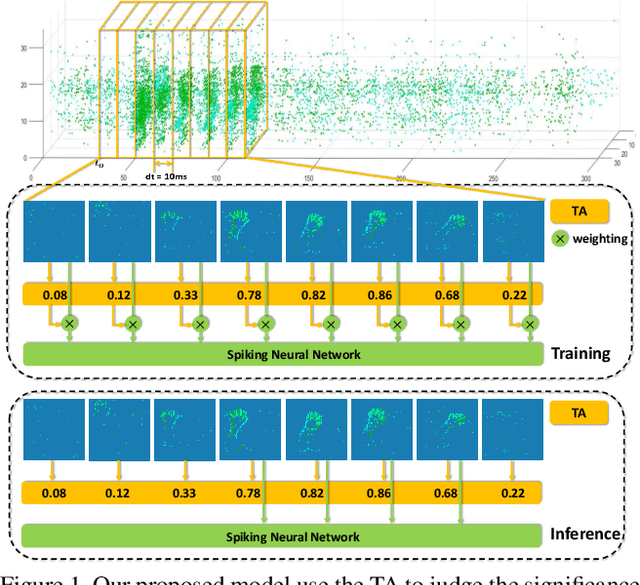

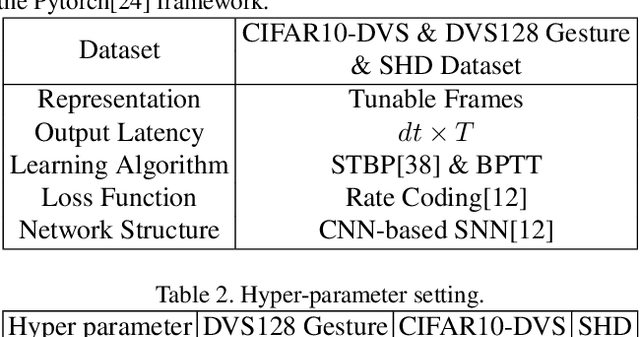

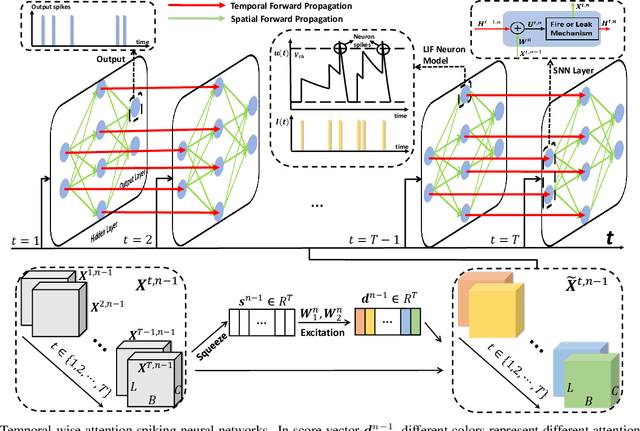

Abstract: How to effectively and efficiently deal with spatio-temporal event streams, where the events are generally sparse and non-uniform and have microsecond temporal resolution, is of great value and has various real-life applications. The spiking neural network (SNN), as one of the brain-inspired event-triggered computing models, has the potential to extract effective spatio-temporal features from event streams. However, when aggregating individual events into frames with a new higher temporal resolution, existing SNN models overlook the fact that successive frames have different signal-to-noise ratios, since event streams are sparse and non-uniform. This limits the performance of existing SNNs. In this work, we propose a temporal-wise attention SNN (TA-SNN) model to learn frame-based representations for processing event streams. Concretely, we extend the attention concept to temporal-wise input to judge the significance of frames for the final decision at the training stage, and discard the irrelevant frames at the inference stage. We demonstrate that TA-SNN models improve the accuracy of event stream classification tasks. We also study the impact of multiple-scale temporal resolutions on frame-based representation. Our approach is tested on three different classification tasks: gesture recognition, image classification, and spoken digit recognition. We report state-of-the-art results on these tasks, and obtain a substantial accuracy improvement (almost 19%) for gesture recognition with only 60 ms.
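The idea of scoring frames and discarding irrelevant ones at inference can be illustrated with a small sketch. Everything here is an assumption made for the example rather than the TA-SNN design: each frame is summarized by its mean event count, the score is a sigmoid of a scalar affine map with hypothetical parameters `w` and `b` (standing in for learned attention weights), and frames scoring below a hypothetical `threshold` are dropped.

```python
import math


def frame_scores(frames, w, b):
    """Return one attention score in (0, 1) per frame.

    Each frame is summarized by its mean event count, then passed through
    sigmoid(w * mean + b). The scalars w and b are hypothetical stand-ins
    for learned parameters.
    """
    scores = []
    for frame in frames:
        mean_count = sum(frame) / len(frame)
        scores.append(1.0 / (1.0 + math.exp(-(w * mean_count + b))))
    return scores


def keep_indices(scores, threshold=0.5):
    """Indices of frames whose score reaches the threshold (inference-time selection)."""
    return [t for t, s in enumerate(scores) if s >= threshold]


# Four flattened frames of event counts (made-up data): frames 0 and 2 are nearly empty.
frames = [[0, 0, 1, 0], [3, 4, 5, 4], [0, 1, 0, 0], [6, 5, 7, 6]]
scores = frame_scores(frames, w=1.0, b=-2.0)
kept = keep_indices(scores)
```

In this toy setting the nearly empty frames receive low scores and are skipped, which mirrors the abstract's point that frames differ in signal-to-noise ratio.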

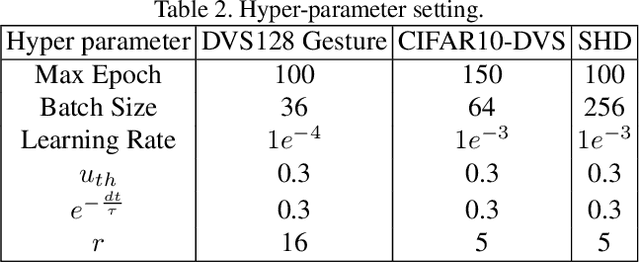

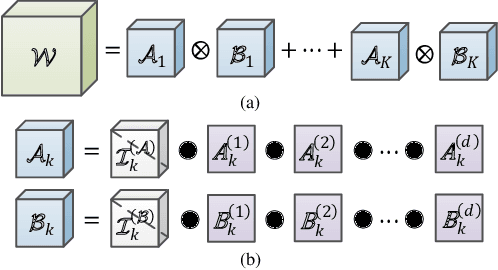

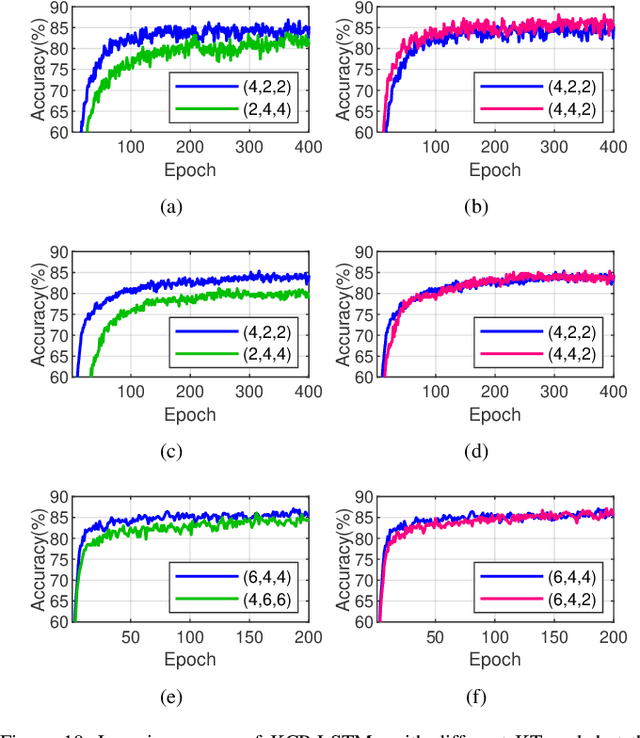

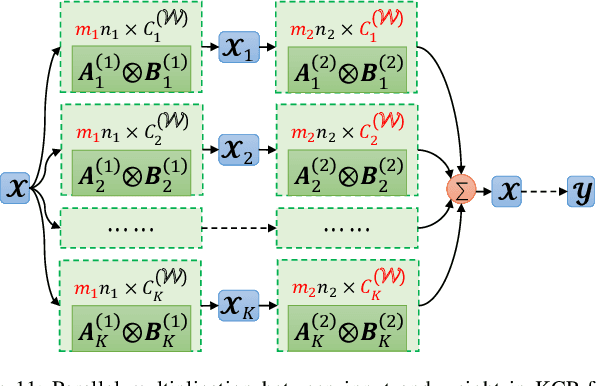

Kronecker CP Decomposition with Fast Multiplication for Compressing RNNs

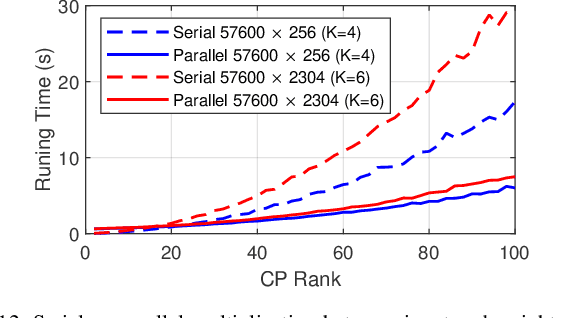

Aug 21, 2020

Abstract: Recurrent neural networks (RNNs) are powerful in tasks oriented to sequential data, such as natural language processing and video recognition. However, since modern RNNs, including long short-term memory (LSTM) and gated recurrent unit (GRU) networks, have complex topologies and high space and computation complexity, compressing them has become a hot and promising topic in recent years. Among the many compression methods, tensor decomposition, e.g., tensor train (TT), block term (BT), tensor ring (TR) and hierarchical Tucker (HT), appears to be the most impressive approach, since a very high compression ratio might be obtained. Nevertheless, none of these tensor decomposition formats provides both space and computation efficiency. In this paper, we compress RNNs based on a novel Kronecker CANDECOMP/PARAFAC (KCP) decomposition, which is derived from Kronecker tensor (KT) decomposition, and propose two fast algorithms for multiplication between the input and the tensor-decomposed weight. Our experiments on the UCF11, Youtube Celebrities Face and UCF50 datasets verify that the proposed KCP-RNNs achieve accuracy comparable to that of RNNs in other tensor-decomposed formats, and that a compression ratio of up to 278,219x can be obtained with low-rank KCP. More importantly, KCP-RNNs are efficient in both space and computation complexity compared with other tensor-decomposed ones under similar ranks. Besides, we find that KCP has the best potential for parallel computing to accelerate the calculations in neural networks.
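Why a Kronecker-structured weight admits fast multiplication can be seen from the standard identity $(A \otimes B)\,\mathrm{vec}(X) = \mathrm{vec}(B X A^{\top})$, where $\mathrm{vec}$ stacks columns. The sketch below checks this identity on small integer matrices in plain Python; it illustrates the general principle only and is not either of the two KCP algorithms from the paper. All helper names and the example matrices are assumptions.

```python
def matmul(a, b):
    """Dense matrix product of two list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def kron(a, b):
    """Kronecker product: block (i, j) of the result is a[i][j] * b."""
    p, q = len(b), len(b[0])
    return [[a[i // p][j // q] * b[i % p][j % q]
             for j in range(len(a[0]) * q)] for i in range(len(a) * p)]


def vec(x):
    """Column-major vectorization (stack the columns of x)."""
    return [x[i][j] for j in range(len(x[0])) for i in range(len(x))]


A = [[1, 2], [3, 4]]          # 2 x 2
B = [[0, 1, 2], [1, 0, 1]]    # 2 x 3
X = [[1, 2], [3, 4], [5, 6]]  # 3 x 2, so vec(X) has 6 entries

# Slow path: materialize the 4 x 6 Kronecker product, then multiply.
K = kron(A, B)
x = vec(X)
slow = [sum(K[i][j] * x[j] for j in range(len(x))) for i in range(len(K))]

# Fast path: two small matrix products, never forming the Kronecker product.
fast = vec(matmul(matmul(B, X), transpose(A)))
```

The fast path stores and multiplies only the small factors, which is the kind of saving in both space and computation that the abstract attributes to Kronecker-based formats.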

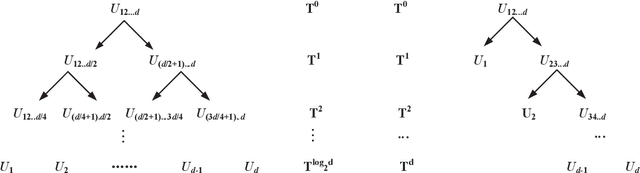

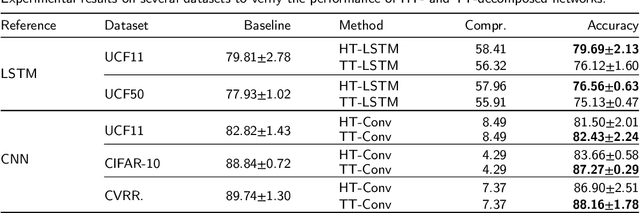

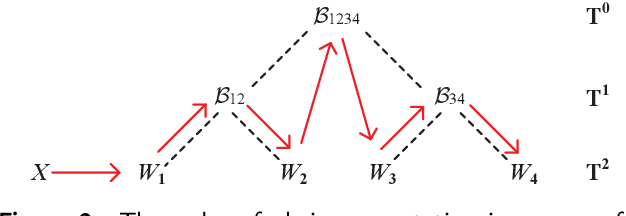

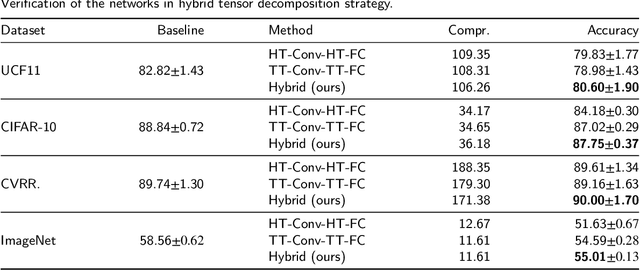

Hybrid Tensor Decomposition in Neural Network Compression

Jun 29, 2020

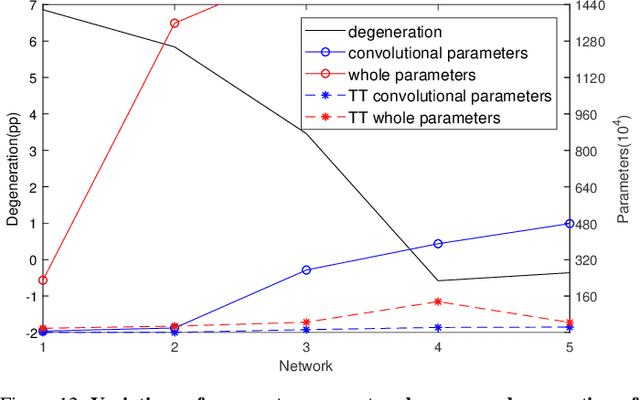

Abstract: Deep neural networks (DNNs) have recently enabled impressive breakthroughs in various artificial intelligence (AI) applications due to their capability of learning high-level features from big data. However, the demand of DNNs for computational resources, especially storage, is growing because ever larger models are required for increasingly complicated applications. To address this problem, several tensor decomposition methods, including tensor-train (TT) and tensor-ring (TR), have been applied to compress DNNs and have shown considerable compression effectiveness. In this work, we introduce the hierarchical Tucker (HT), a classical but rarely used tensor decomposition method, to investigate its capability in neural network compression. We convert the weight matrices and convolutional kernels to both HT and TT formats for comparative study, since the latter is the most widely used decomposition method and a variant of HT. We further discover, theoretically and experimentally, that the HT format performs better at compressing weight matrices, while the TT format is better suited to compressing convolutional kernels. Based on this phenomenon, we propose a hybrid tensor decomposition strategy that combines TT and HT to compress the convolutional and fully connected parts separately, and attains better accuracy than using only the TT or HT format on convolutional neural networks (CNNs). Our work illuminates the prospects of hybrid tensor decomposition for neural network compression.

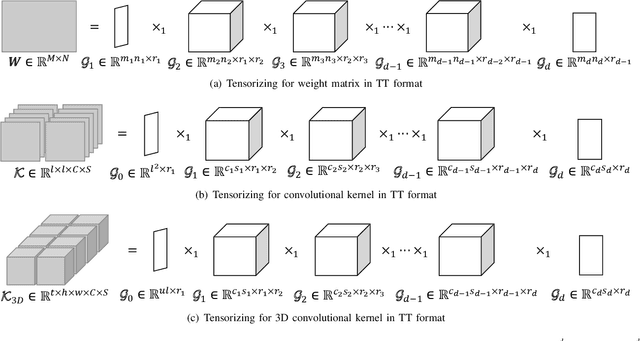

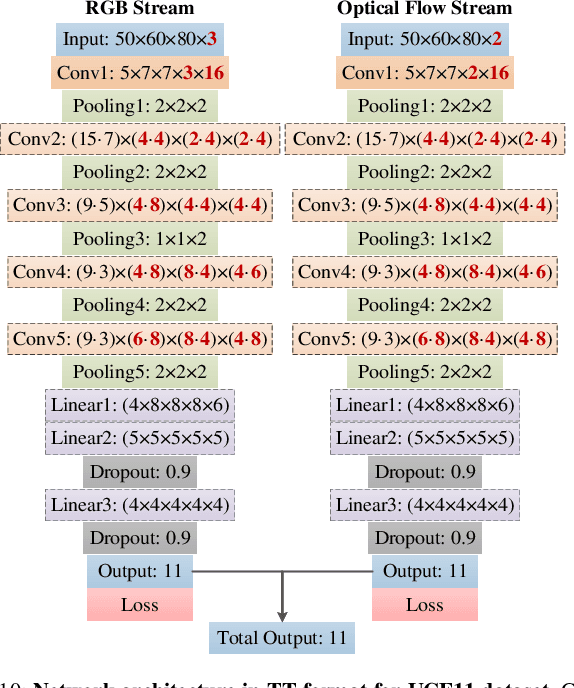

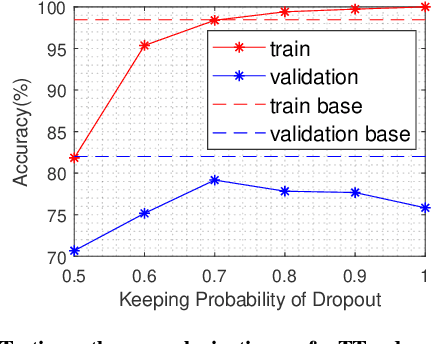

Lossless Compression for 3DCNNs Based on Tensor Train Decomposition

Dec 08, 2019

Abstract: Three-dimensional convolutional neural networks (3DCNNs) have been applied in many video and 3D point cloud recognition tasks. However, due to the higher dimension of their convolutional kernels, the space complexity of 3DCNNs is generally larger than that of traditional two-dimensional convolutional neural networks (2DCNNs). To miniaturize 3DCNNs for deployment in constrained environments such as embedded devices, neural network compression is a promising approach. In this work, we adopt the tensor train (TT) decomposition, the most compact and simplest in situ training compression method, to shrink 3DCNN models. We give the tensorization of 3D convolutional kernels in TT format and investigate how to select appropriate ranks for the tensor in TT format. In light of multiple contrast experiments on the VIVA challenge and UCF11 datasets, we conclude that the TT decomposition can compress redundant 3DCNNs by a ratio of up to 121$\times$ with a slight accuracy improvement. Besides, we achieve a state-of-the-art result for TT-3DCNN on the VIVA challenge dataset (81.83%).
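How TT ranks drive the compression ratio can be worked out with simple arithmetic. For a tensor with mode sizes $n_1, \dots, n_d$ and TT ranks $r_0 = 1, r_1, \dots, r_d = 1$, the TT cores hold $\sum_k r_{k-1} n_k r_k$ parameters, against $\prod_k n_k$ for the dense tensor. The sketch below computes both; the mode sizes and ranks are made-up values for illustration, not the configurations used in the paper.

```python
from math import prod


def tt_param_count(modes, ranks):
    """Number of parameters in TT cores of shape (r_{k-1}, n_k, r_k).

    `ranks` must have len(modes) + 1 entries with boundary ranks equal to 1.
    """
    if len(ranks) != len(modes) + 1 or ranks[0] != 1 or ranks[-1] != 1:
        raise ValueError("ranks must be [1, r_1, ..., r_{d-1}, 1]")
    return sum(ranks[k] * n * ranks[k + 1] for k, n in enumerate(modes))


def compression_ratio(modes, ranks):
    """Dense parameter count divided by TT parameter count."""
    return prod(modes) / tt_param_count(modes, ranks)


# Hypothetical 4-mode tensor with all internal TT ranks set to 2.
modes = [4, 4, 4, 4]
ranks = [1, 2, 2, 2, 1]
tt_params = tt_param_count(modes, ranks)  # 8 + 16 + 16 + 8
ratio = compression_ratio(modes, ranks)   # 256 dense parameters / TT parameters
```

Since each core's size grows with the product of its two neighboring ranks, lowering the ranks raises the ratio quickly, which is why rank selection matters for the accuracy and compression trade-off.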
