Guanbin Xing

Vision meets mmWave Radar: 3D Object Perception Benchmark for Autonomous Driving

Nov 17, 2023

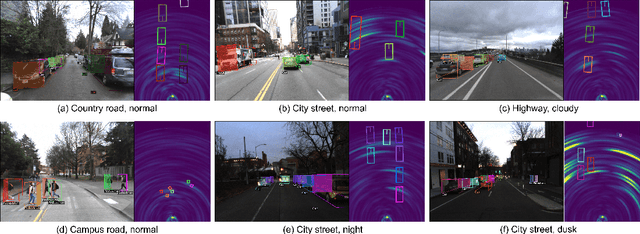

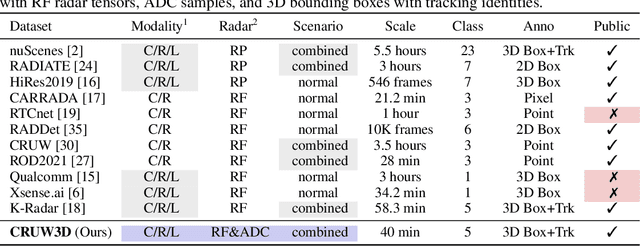

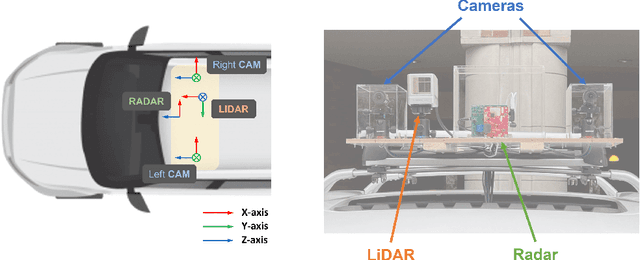

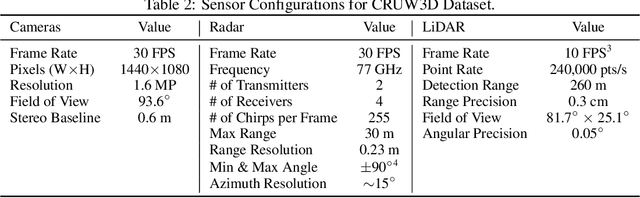

Abstract: Sensor fusion is crucial for an accurate and robust perception system on autonomous vehicles. Most existing datasets and perception solutions focus on fusing cameras and LiDAR. However, the collaboration between camera and radar is significantly under-exploited. Combining rich semantic information from the camera with reliable 3D information from the radar can potentially achieve an efficient, cheap, and portable solution for 3D object perception tasks. It can also be robust to different lighting and all-weather driving scenarios due to the capability of mmWave radars. In this paper, we introduce the CRUW3D dataset, including 66K synchronized and well-calibrated camera, radar, and LiDAR frames in various driving scenarios. Unlike other large-scale autonomous driving datasets, our radar data is in the format of radio frequency (RF) tensors that contain not only 3D location information but also spatio-temporal semantic information. This kind of radar format can enable machine learning models to generate more reliable object perception results after interacting and fusing the information or features between the camera and radar.

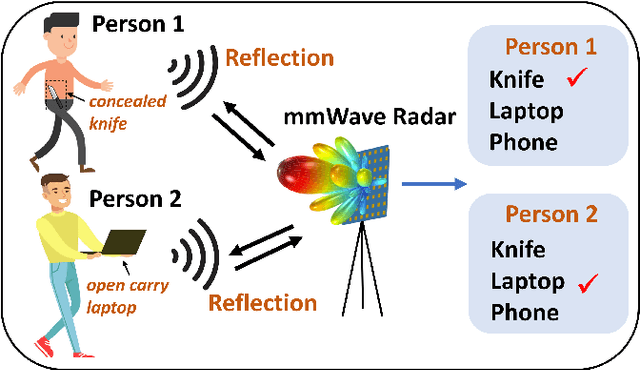

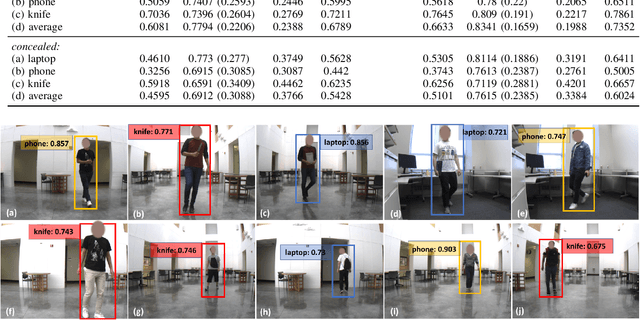

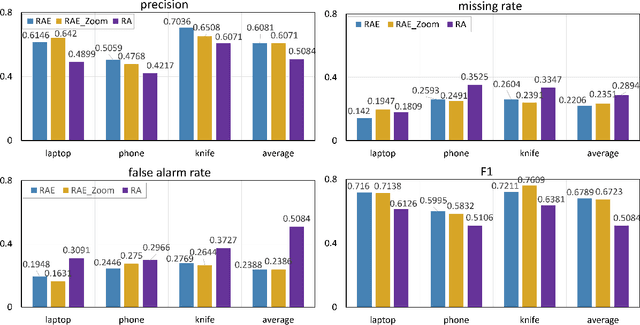

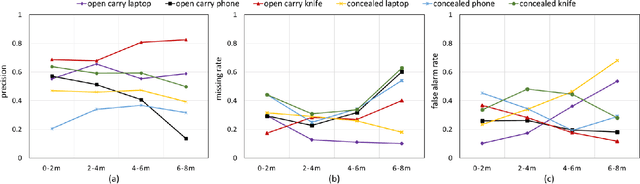

Learning to Detect Open Carry and Concealed Object with 77GHz Radar

Oct 31, 2021

Abstract: Detecting harmful carried objects plays a key role in intelligent surveillance systems and has widespread applications, for example, in airport security. In this paper, we focus on the relatively unexplored area of using low-cost 77GHz mmWave radar for carried-object detection. The proposed system is capable of detecting three classes of objects (laptop, phone, and knife) in real time, under both open carry and concealed cases where objects are hidden by clothes or bags. This capability is achieved by initial signal processing for localization and for generating range-azimuth-elevation image cubes, followed by a deep learning-based prediction network and a multi-shot post-processing module for detecting objects. Extensive experiments validating the system performance on detecting open carry and concealed objects are presented, using a self-built radar-camera testbed and dataset. Additionally, the influence of different inputs, factors, and parameters on system performance is analyzed, providing an intuitive understanding of the system. This system would be the very first baseline for future work aiming to detect carried objects using 77GHz radar.

Perception Through 2D-MIMO FMCW Automotive Radar Under Adverse Weather

Apr 23, 2021

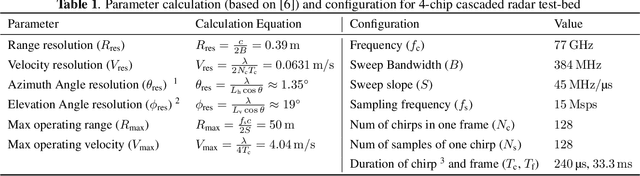

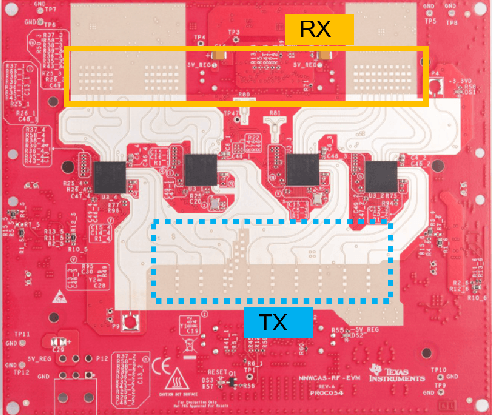

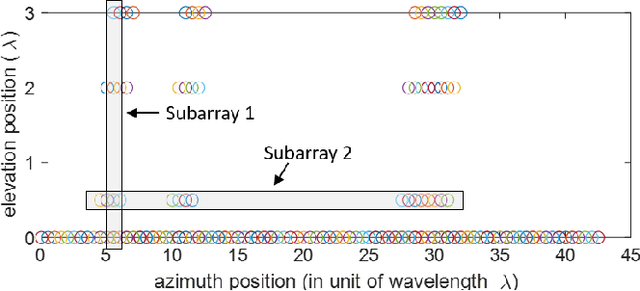

Abstract: Millimeter-wave (mmWave) radars are being increasingly integrated in commercial vehicles to support new Advanced Driver Assistance Systems (ADAS) features that require accurate location and Doppler velocity estimates of objects, independent of environmental conditions. To explore radar-based ADAS applications, we have updated our test-bed with Texas Instruments' 4-chip cascaded FMCW radar (TIDEP-01012) that forms a non-uniform 2D MIMO virtual array. In this paper, we develop the necessary received signal models for applying different direction of arrival (DoA) estimation algorithms and experimentally validate their performance on the formed virtual array under controlled scenarios. To test the robustness of mmWave radars under adverse weather conditions, we collected a raw radar dataset (I-Q samples after demodulation) for various objects using a vehicle-mounted platform, specifically for snowy and foggy situations where cameras are largely ineffective. Initial results from applying radar imaging algorithms to this dataset are presented.
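As a rough illustration of how a MIMO virtual array is formed, the sketch below sums every transmit/receive antenna position pair. The antenna coordinates are hypothetical values in half-wavelength units, chosen only for the example; they are not the TIDEP-01012 layout, which the abstract does not describe. The function name `virtual_array` is likewise an assumption.

```python
def virtual_array(tx_positions, rx_positions):
    """Return the sorted, de-duplicated virtual element positions.

    Each virtual element sits at the vector sum of one TX and one RX
    position. Positions are (azimuth, elevation) tuples in half-wavelength
    units (an assumption made for this sketch).
    """
    elements = {
        (tx[0] + rx[0], tx[1] + rx[1])
        for tx in tx_positions
        for rx in rx_positions
    }
    return sorted(elements)


# Hypothetical layout: 2 TX spaced 4 units apart, 4 RX spaced 1 unit apart.
tx = [(0, 0), (4, 0)]
rx = [(0, 0), (1, 0), (2, 0), (3, 0)]
azimuth_array = virtual_array(tx, rx)  # 8 elements in a uniform line

# Adding a TX offset in elevation gives a 2D (and here non-uniform) array.
tx_2d = tx + [(1, 2)]
planar_array = virtual_array(tx_2d, rx)
```

With overlapping TX/RX sums the set removes duplicates, so the virtual element count can be smaller than the number of TX times the number of RX.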

RODNet: A Real-Time Radar Object Detection Network Cross-Supervised by Camera-Radar Fused Object 3D Localization

Feb 09, 2021

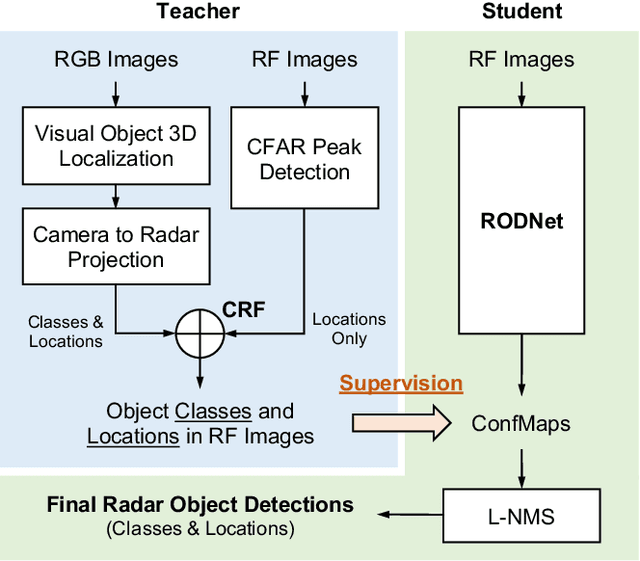

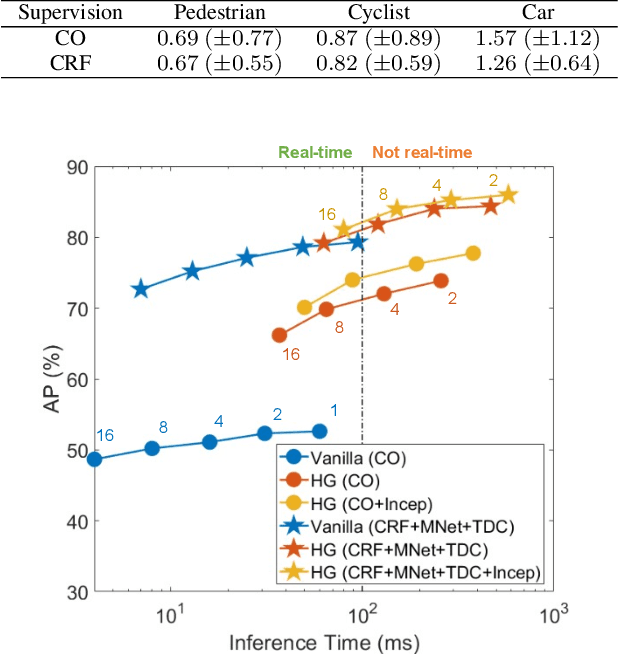

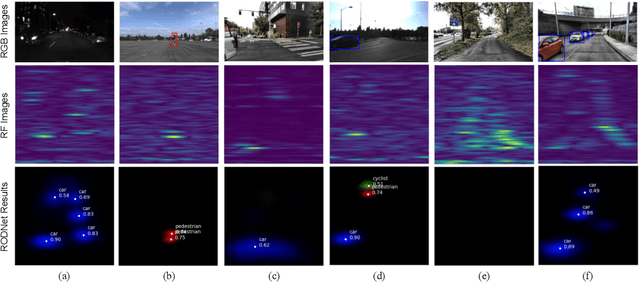

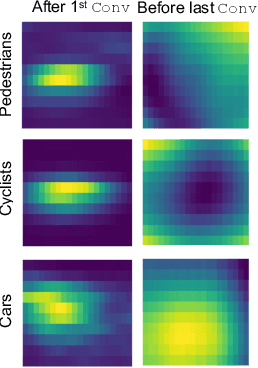

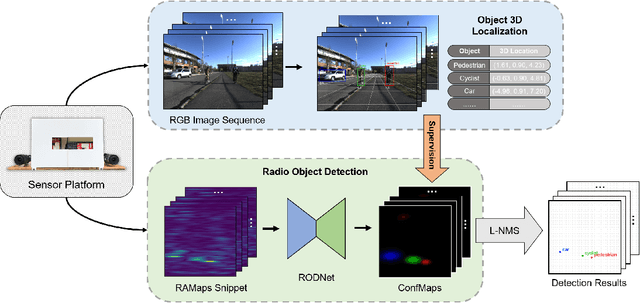

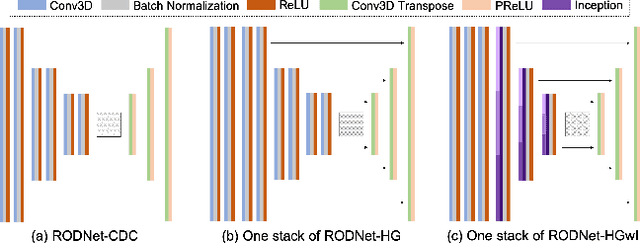

Abstract: Various autonomous or assisted driving strategies have been facilitated through the accurate and reliable perception of the environment around a vehicle. Among the commonly used sensors, radar has usually been considered a robust and cost-effective solution even in adverse driving scenarios, e.g., weak/strong lighting or bad weather. Instead of fusing the unreliable information from all available sensors, perception from pure radar data becomes a valuable alternative that is worth exploring. In this paper, we propose a deep radar object detection network, named RODNet, which is cross-supervised by a camera-radar fused algorithm without laborious annotation efforts, to effectively detect objects from the radio frequency (RF) images in real-time. First, the raw signals captured by millimeter-wave radars are transformed to RF images in range-azimuth coordinates. Second, our proposed RODNet takes a sequence of RF images as the input to predict the likelihood of objects in the radar field of view (FoV). Two customized modules are also added to handle multi-chirp information and object relative motion. Instead of using human-labeled ground truth for training, the proposed RODNet is cross-supervised by a novel 3D localization of detected objects using a camera-radar fusion (CRF) strategy in the training stage. Finally, we propose a method to evaluate the object detection performance of the RODNet. Because no public dataset exists for our task, we create a new dataset, named CRUW, which contains synchronized RGB and RF image sequences in various driving scenarios. With intensive experiments, our proposed cross-supervised RODNet achieves 86% average precision and 88% average recall of object detection performance, which shows its robustness to noisy scenarios in various driving conditions.
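The first step in the abstract above, turning raw radar samples into an image in range-azimuth coordinates, can be sketched with two FFTs. This is a minimal sketch and not the RODNet preprocessing itself: it assumes a single chirp, a uniform linear receive array with half-wavelength spacing, and a Hann window on the range axis. The function name, array shapes, and bin counts are illustrative assumptions.

```python
import numpy as np


def range_azimuth_map(adc, n_angle_bins=64):
    """Magnitude range-azimuth map from one chirp of complex ADC samples.

    adc: complex array of shape (n_samples, n_rx), one column per receive
    antenna (assumed uniform linear array, half-wavelength spacing).
    Returns an array of shape (n_samples, n_angle_bins).
    """
    window = np.hanning(adc.shape[0])[:, None]
    # FFT over fast-time samples: beat frequency maps to range.
    range_fft = np.fft.fft(adc * window, axis=0)
    # Zero-padded FFT over antennas: phase slope maps to azimuth.
    angle_fft = np.fft.fft(range_fft, n=n_angle_bins, axis=1)
    # Shift so that boresight (0 degrees) lands in the middle bin.
    return np.abs(np.fft.fftshift(angle_fft, axes=1))


# Synthetic single target: beat frequency in range bin 20, at boresight.
n_samples, n_rx = 128, 8
n = np.arange(n_samples)[:, None]
adc = np.exp(2j * np.pi * 20 * n / n_samples) * np.ones((1, n_rx))
ra_map = range_azimuth_map(adc)
peak = np.unravel_index(np.argmax(ra_map), ra_map.shape)
```

For the synthetic target, the strongest cell falls at range bin 20 and the central azimuth bin. A real pipeline would also handle multiple chirps and calibration, which this sketch leaves out.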

MIMO-SAR: A Hierarchical High-resolution Imaging Algorithm for FMCW Automotive Radar

Jan 22, 2021

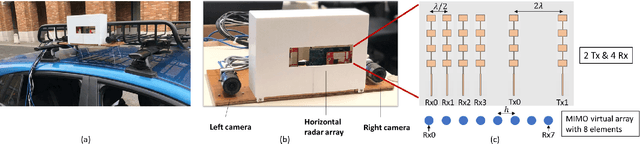

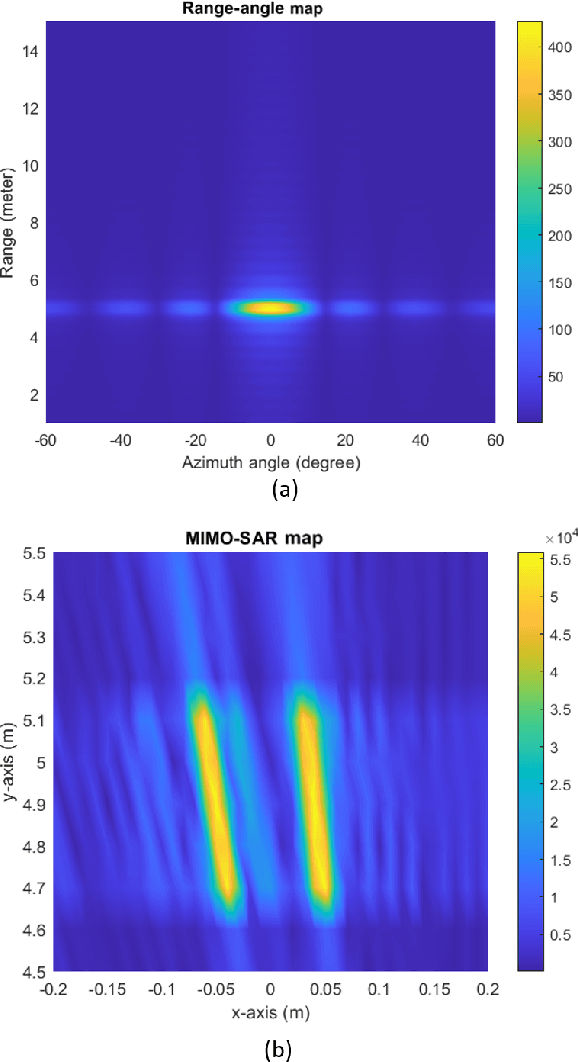

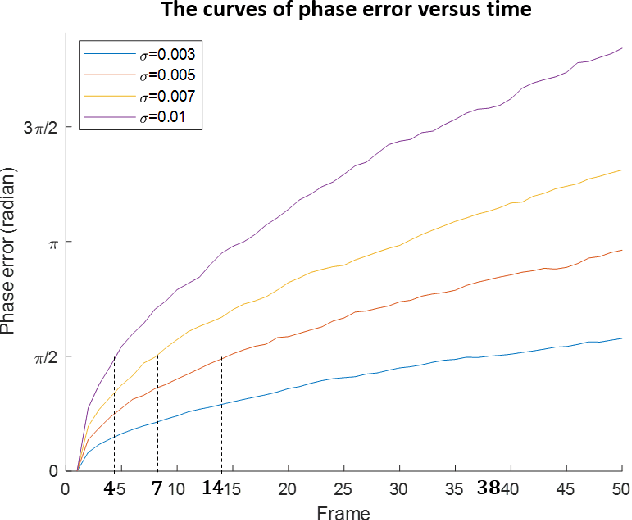

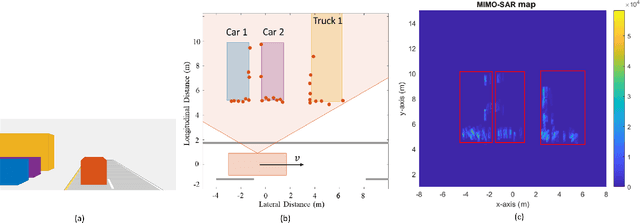

Abstract: Millimeter-wave radars are being increasingly integrated into commercial vehicles to support advanced driver-assistance system features. A key shortcoming for present-day vehicular radar imaging is poor azimuth resolution (for side-looking operation) due to the form factor limits on antenna size and placement. In this paper, we propose a solution via a new multiple-input and multiple-output synthetic aperture radar (MIMO-SAR) imaging technique that applies coherent SAR principles to vehicular MIMO radar to improve the side-view (angular) resolution. The proposed 2-stage hierarchical MIMO-SAR processing workflow drastically reduces the computation load while preserving image resolution. To enable coherent processing over the synthetic aperture, we integrate a radar odometry algorithm that estimates the trajectory of the ego-radar. The MIMO-SAR algorithm is validated by both simulations and real experimental data collected by a vehicle-mounted radar platform (see Fig. 1).

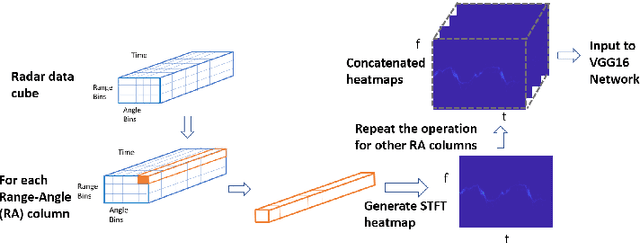

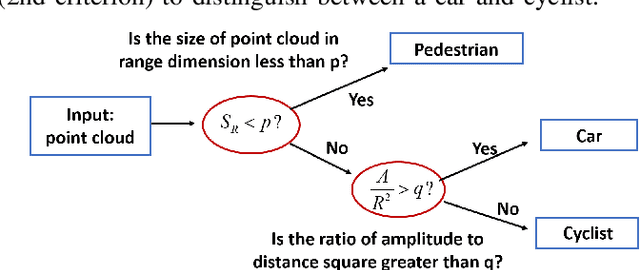

RAMP-CNN: A Novel Neural Network for Enhanced Automotive Radar Object Recognition

Nov 13, 2020

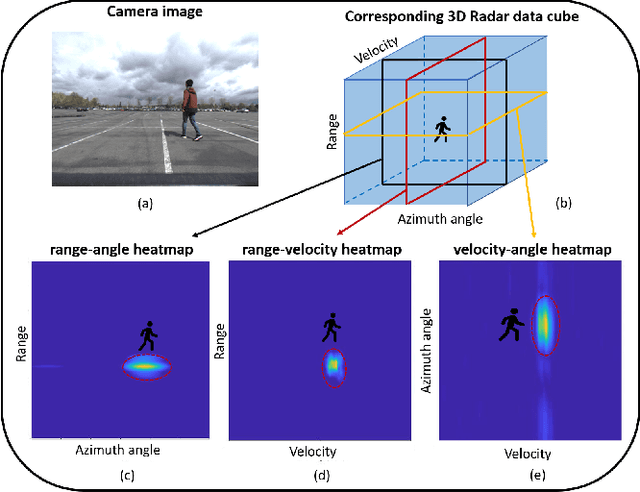

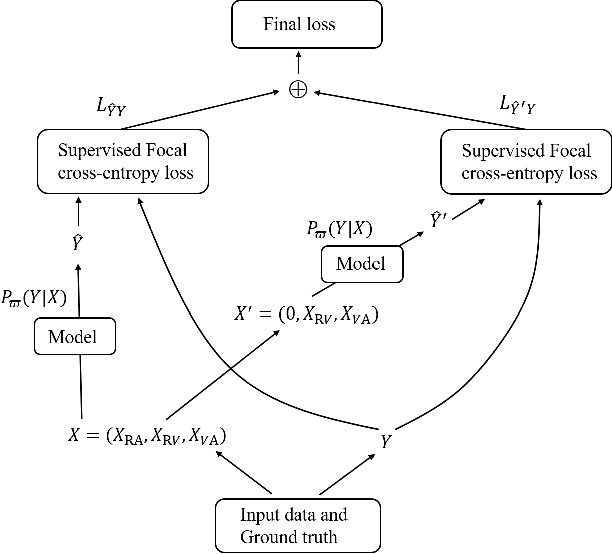

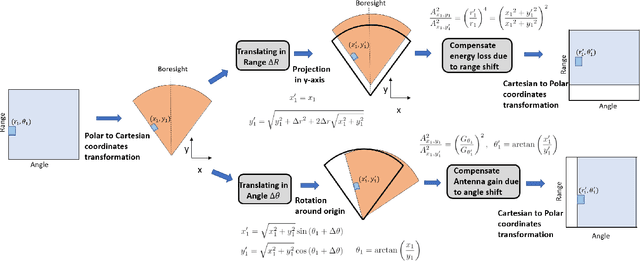

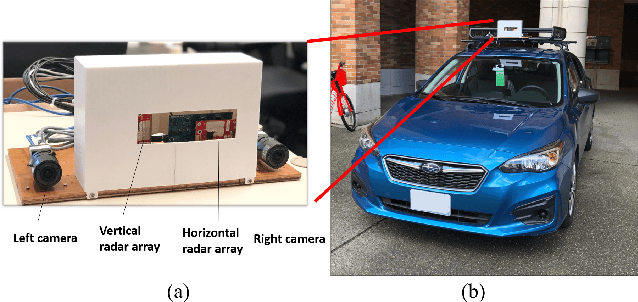

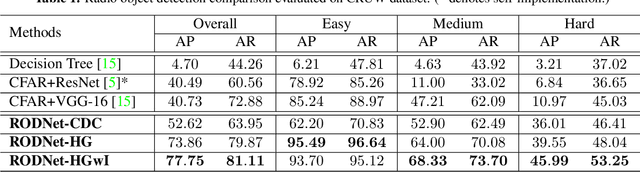

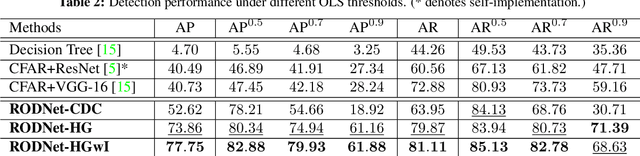

Abstract: Millimeter-wave (mmW) radars are being increasingly integrated into commercial vehicles to support new advanced driver-assistance systems (ADAS) by enabling robust and high-performance object detection, localization, and recognition, a key component of new environmental perception. In this paper, we propose a novel radar multiple-perspectives convolutional neural network (RAMP-CNN) that extracts the location and class of objects based on further processing of the range-velocity-angle (RVA) heatmap sequences. To bypass the complexity of 4D convolutional neural networks (NN), we propose to combine several lower-dimension NN models within our RAMP-CNN model that nonetheless approaches the performance upper-bound with lower complexity. Extensive experiments show that the proposed RAMP-CNN model achieves better average recall (AR) and average precision (AP) than prior works in all testing scenarios (see Table III). In addition, the RAMP-CNN model is validated to work robustly at night, which makes low-cost radars a potential substitute for pure optical sensing under severe conditions.

* 15 pages

RODNet: Object Detection under Severe Conditions Using Vision-Radio Cross-Modal Supervision

Mar 03, 2020

Abstract: Radar is usually more robust than the camera in severe autonomous driving scenarios, e.g., weak/strong lighting and bad weather. However, the semantic information from the radio signals is difficult to extract. In this paper, we propose a radio object detection network (RODNet) to detect objects purely from the processed radar data in the format of range-azimuth frequency heatmaps (RAMaps). To train the RODNet, we introduce a cross-modal supervision framework, which utilizes the rich information extracted by a vision-based object 3D localization technique to teach object detection for the radar. In order to train and evaluate our method, we build a new dataset, CRUW, containing synchronized video sequences and RAMaps in various scenarios. After intensive experiments, our RODNet shows favorable object detection performance without the presence of the camera. To the best of our knowledge, this is the first work that can achieve accurate multi-class object detection purely using radar data as the input.

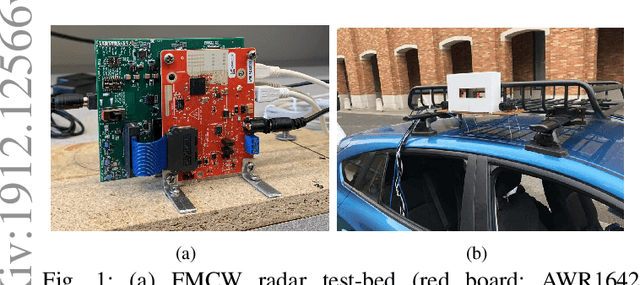

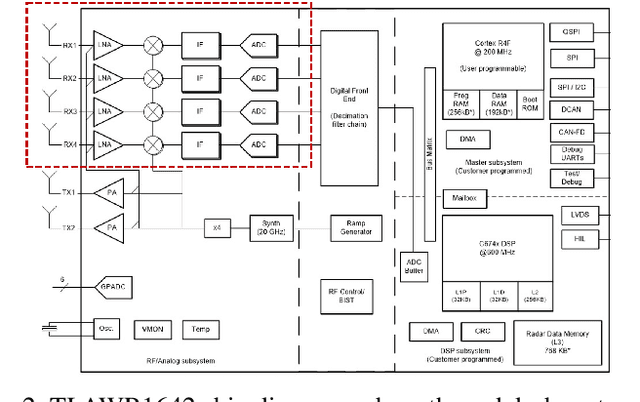

Experiments with mmWave Automotive Radar Test-bed

Dec 29, 2019

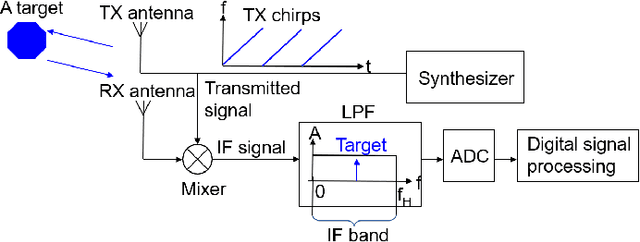

Abstract: Millimeter-wave (mmW) radars are being increasingly integrated in commercial vehicles to support new Advanced Driver Assistance Systems (ADAS) for their ability to provide high-accuracy location, velocity, and angle estimates of objects, largely independent of environmental conditions. Such radar sensors not only perform basic functions such as detection and ranging/angular localization, but also provide critical inputs for environmental perception via object recognition and classification. To explore radar-based ADAS applications, we have assembled a lab-scale frequency modulated continuous wave (FMCW) radar test-bed (https://depts.washington.edu/funlab/research) based on Texas Instruments' (TI) automotive chipset family. In this work, we describe the test-bed components and provide a summary of FMCW radar operational principles. To date, we have created a large raw radar dataset for various objects under controlled scenarios. We then apply several radar imaging algorithms to the collected dataset and present preliminary results that validate its capabilities in terms of object recognition.
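The FMCW operating principle mentioned above can be illustrated with the standard textbook relations between chirp parameters and range. This is a small sketch under stated assumptions: an ideal linear chirp and a single stationary target. The function names and the example chirp values (4 GHz bandwidth, 30 MHz/us slope) are chosen for illustration and are not taken from the test-bed configuration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def beat_frequency_to_range(f_beat_hz, slope_hz_per_s):
    """Target range from the beat frequency of one linear FMCW chirp.

    The round-trip delay 2R/c shifts the received chirp by
    f_beat = slope * 2R / c, hence R = c * f_beat / (2 * slope).
    """
    return SPEED_OF_LIGHT * f_beat_hz / (2.0 * slope_hz_per_s)


def range_resolution(bandwidth_hz):
    """Smallest separable range difference for a swept bandwidth B: c / (2B)."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


# Illustrative values only: 30 MHz/us chirp slope, 1 MHz measured beat tone.
example_range_m = beat_frequency_to_range(1e6, 30e12)
# Illustrative 4 GHz sweep, as available in the 77-81 GHz automotive band.
example_resolution_m = range_resolution(4e9)
```

Under these assumed values the beat tone corresponds to a target about 5 m away, and the sweep resolves targets separated by a few centimeters in range.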
