RODNet: Object Detection under Severe Conditions Using Vision-Radio Cross-Modal Supervision


Mar 03, 2020

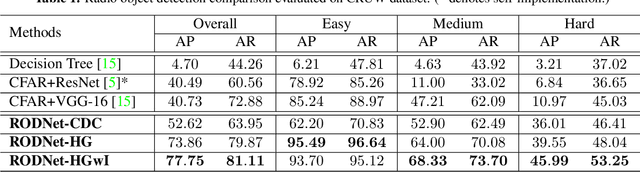

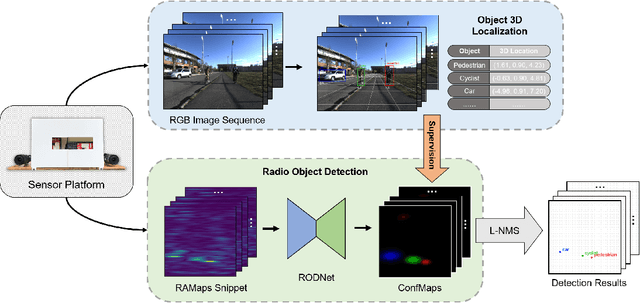

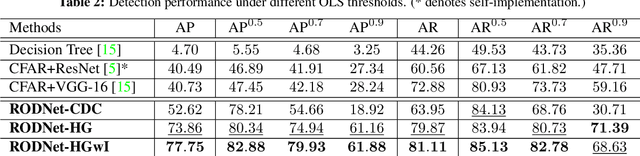

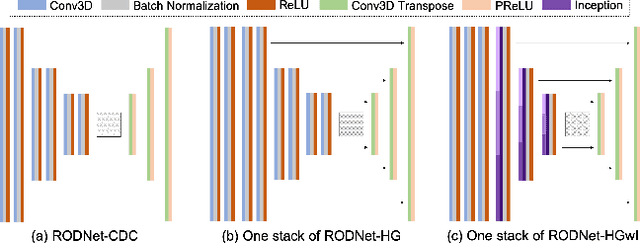

Radar is usually more robust than a camera in severe autonomous driving scenarios, e.g., weak or strong lighting and bad weather. However, semantic information is difficult to extract from radio signals. In this paper, we propose a radio object detection network (RODNet) that detects objects purely from processed radar data in the form of range-azimuth frequency heatmaps (RAMaps). To train the RODNet, we introduce a cross-modal supervision framework, which uses the rich information extracted by a vision-based 3D object localization technique to teach object detection to the radar. To train and evaluate our method, we build a new dataset, CRUW, containing synchronized video sequences and RAMaps in various scenarios. In extensive experiments, our RODNet shows favorable object detection performance without the presence of a camera. To the best of our knowledge, this is the first work that achieves accurate multi-class object detection using only radar data as the input.
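To make the idea of cross-modal supervision more concrete, the sketch below shows one way a camera-derived object location could be turned into a training target on a range-azimuth grid. It is an illustration only and not the authors' implementation: the function names, the grid size, the maximum range, the field of view, and the Gaussian target shape are all assumptions introduced here.

```python
import math


def cartesian_to_range_azimuth(x, z):
    """Convert a ground-plane position (x lateral, z forward, in metres)
    into (range in metres, azimuth in radians). Hypothetical helper."""
    rng = math.hypot(x, z)
    azimuth = math.atan2(x, z)  # 0 rad is straight ahead, positive to the right
    return rng, azimuth


def gaussian_target(rng, azimuth, n_range=9, n_azimuth=9,
                    max_range=20.0, fov=math.radians(120), sigma=1.0):
    """Render one object as a Gaussian blob on a range-azimuth grid.

    All grid parameters are assumed values for illustration, not taken
    from the paper.
    """
    # Map physical coordinates to fractional grid indices.
    r_idx = rng / max_range * (n_range - 1)
    a_idx = (azimuth + fov / 2) / fov * (n_azimuth - 1)
    grid = []
    for i in range(n_range):
        row = []
        for j in range(n_azimuth):
            d2 = (i - r_idx) ** 2 + (j - a_idx) ** 2
            row.append(math.exp(-d2 / (2 * sigma ** 2)))
        grid.append(row)
    return grid


# An object localized by the camera 10 m straight ahead of the sensor.
rng, az = cartesian_to_range_azimuth(0.0, 10.0)
target = gaussian_target(rng, az)
```

A radar network could then be trained to regress such a target from the corresponding RAMap, so that at test time no camera is needed. How the actual RODNet targets are constructed is not specified in the abstract.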
