Grzegorz J. Nalepa

Jagiellonian University

Financial Management System for SMEs: Real-World Deployment of Accounts Receivable and Cash Flow Prediction

Nov 05, 2025

Abstract: Small and Medium Enterprises (SMEs), particularly freelancers and early-stage businesses, face unique financial management challenges due to limited resources, small customer bases, and constrained data availability. This paper presents the development and deployment of an integrated financial prediction system that combines accounts receivable prediction and cash flow forecasting, designed specifically for SME operational constraints. Our system addresses the gap between enterprise-focused financial tools and the practical needs of freelancers and small businesses. The solution integrates two key components: a binary classification model for predicting invoice payment delays, and a multi-module cash flow forecasting model that handles incomplete and limited historical data. A prototype system has been implemented and deployed as a web application integrated into the platform of Cluee, a startup providing financial management tools for freelancers, demonstrating practical feasibility for real-world SME financial management.

Position Paper: Metadata Enrichment Model: Integrating Neural Networks and Semantic Knowledge Graphs for Cultural Heritage Applications

May 29, 2025

Abstract: The digitization of cultural heritage collections has opened new directions for research, yet the lack of enriched metadata poses a substantial challenge to accessibility, interoperability, and cross-institutional collaboration. In recent years, neural network models such as YOLOv11 and Detectron2 have revolutionized visual data analysis, but their application to domain-specific cultural artifacts (such as manuscripts and incunabula) remains limited by the absence of methodologies that address structural feature extraction and semantic interoperability. In this position paper, we argue that the integration of neural networks with semantic technologies represents a paradigm shift in cultural heritage digitization processes. We present the Metadata Enrichment Model (MEM), a conceptual framework designed to enrich metadata for digitized collections by combining fine-tuned computer vision models, large language models (LLMs), and structured knowledge graphs. The key innovation of MEM is the Multilayer Vision Mechanism (MVM), an iterative process that improves visual analysis by dynamically detecting nested features, such as text within seals or images within stamps. To demonstrate MEM's potential, we apply it to a dataset of digitized incunabula from the Jagiellonian Digital Library and release a manually annotated dataset of 105 manuscript pages. We examine the practical challenges of using MEM in real-world GLAM institutions, including the need for domain-specific fine-tuning, the alignment of enriched metadata with Linked Data standards, and computational costs. We present MEM as a flexible and extensible methodology. This paper contributes to the discussion on how artificial intelligence and semantic web technologies can advance cultural heritage research and be used in practice.

User-centric evaluation of explainability of AI with and for humans: a comprehensive empirical study

Oct 21, 2024

Abstract: This study is situated in the field of Human-Centered Artificial Intelligence (HCAI) and focuses on the results of a user-centered assessment of commonly used eXplainable Artificial Intelligence (XAI) algorithms, specifically investigating how humans understand and interact with the explanations provided by these algorithms. To achieve this, we employed a multi-disciplinary approach that included state-of-the-art research methods from the social sciences to measure the comprehensibility of explanations generated by a state-of-the-art machine learning model, specifically the Gradient Boosting Classifier (XGBClassifier). We conducted an extensive empirical user study involving interviews with 39 participants from three different groups, each with varying expertise in data science, data visualization, and domain-specific knowledge related to the dataset used for training the machine learning model. Participants were asked a series of questions to assess their understanding of the model's explanations. To ensure replicability, we built the model using a publicly available dataset from the UC Irvine Machine Learning Repository, focusing on edible and non-edible mushrooms. Our findings reveal limitations in existing XAI methods and confirm the need for new design principles and evaluation techniques that address the specific information needs and user perspectives of different classes of AI stakeholders. We believe that the results of our research and the cross-disciplinary methodology we developed can be successfully adapted to various data types and user profiles, thus promoting dialogue and addressing opportunities in HCAI research. To support this, we are making the data resulting from our study publicly available.

Advancing Manuscript Metadata: Work in Progress at the Jagiellonian University

Jul 09, 2024

Abstract: As part of ongoing research projects, three Jagiellonian University units (the Jagiellonian University Museum, the Jagiellonian University Archives, and the Jagiellonian Library) are collaborating to digitize cultural heritage documents, describe them in detail, and then integrate these descriptions into a linked data cloud. Achieving this goal requires, as a first step, the development of a metadata model that complies with existing standards, allows interoperability with other systems, and captures all the elements of description established by the curators of the collections. In this paper, we report on the current status of the work: we outline the most important requirements for the data model under development and then make a detailed comparison with the two standards most relevant to the collections, the Europeana Data Model used in Europeana and Encoded Archival Description used in Kalliope.

Microsoft Cloud-based Digitization Workflow with Rich Metadata Acquisition for Cultural Heritage Objects

Jul 09, 2024

Abstract: In response to several cultural heritage initiatives at the Jagiellonian University, we have developed a new digitization workflow in collaboration with the Jagiellonian Library (JL). The solution is based on easy-to-access technologies (Microsoft 365 cloud with MS Excel files as metadata acquisition interfaces, Office Scripts for validation, and MS SharePoint for storage) and allows metadata acquisition by domain experts (philologists, historians, philosophers, librarians, archivists, curators, etc.) regardless of their experience with information systems. The ultimate goal is to create a knowledge graph that describes the analyzed holdings and is linked to general knowledge bases as well as to other cultural heritage collections, so careful attention is paid to the high accuracy of metadata and proper links to external sources. The workflow has already been evaluated in two pilots in the DiHeLib project, focused on digitizing the so-called "Berlin Collection", and in two workshops with international guests, which allowed for its refinement and confirmed its correctness and usability for JL. The proposed workflow does not interfere with existing systems or domain guidelines regarding digitization and basic metadata collection in a given institution (e.g., file type, image quality, use of Dublin Core/MARC-21), but extends them to enable rich metadata collection that was not previously possible. We therefore believe that it could be of interest to all GLAMs (galleries, libraries, archives, and museums).

Evaluation and Comparison of Emotionally Evocative Image Augmentation Methods

Jun 23, 2024

Abstract: Experiments in affective computing are based on stimulus datasets that, in the process of standardization, receive metadata describing which emotions each stimulus evokes. In this paper, we explore an approach to creating stimulus datasets for affective computing using generative adversarial networks (GANs). Traditional dataset preparation methods are costly and time-consuming, prompting our investigation of alternatives. We conducted experiments with various GAN architectures, including Deep Convolutional GAN, Conditional GAN, Auxiliary Classifier GAN, Progressive Augmentation GAN, and Wasserstein GAN, alongside data augmentation and transfer learning techniques. Our findings highlight promising advances in the generation of emotionally evocative synthetic images, suggesting significant potential for future research and improvements in this domain.

Local Universal Rule-based Explanations

Oct 23, 2023

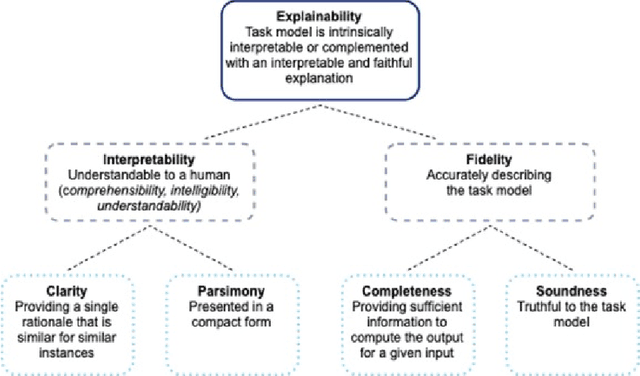

Abstract: Explainable artificial intelligence (XAI) is one of the most intensively developed areas of AI in recent years. It is also one of the most fragmented, with multiple methods that focus on different aspects of explanations. This makes it difficult to obtain the full spectrum of explanations at once in a compact and consistent way. To address this issue, we present Local Universal Explainer (LUX), a rule-based explainer that can generate factual, counterfactual, and visual explanations. It is based on a modified version of decision tree algorithms that allows for oblique splits and integration with feature importance XAI methods such as SHAP or LIME. In contrast to other algorithms, it does not use data generation, but focuses on selecting local concepts in the form of high-density clusters of real data that have the highest impact on forming the decision boundary of the explained model. We tested our method on real and synthetic datasets and compared it with state-of-the-art rule-based explainers such as LORE, EXPLAN and Anchor. Our method outperforms currently existing approaches in terms of simplicity, global fidelity and representativeness.
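To make the term "oblique split" concrete, the following minimal sketch contrasts it with the axis-parallel test used by classic decision trees. It is only an illustration of the general concept, not LUX's implementation; the function names, weights, and thresholds are hypothetical.

```python
from typing import Sequence


def axis_parallel_split(x: Sequence[float], feature: int, threshold: float) -> bool:
    """Classic decision-tree test: compare a single feature with a threshold."""
    return x[feature] <= threshold


def oblique_split(x: Sequence[float], weights: Sequence[float], threshold: float) -> bool:
    """Oblique test: compare a weighted sum of several features with a threshold."""
    return sum(w * v for w, v in zip(weights, x)) <= threshold


# Hypothetical two-feature point, chosen only for illustration.
point = (2.0, 1.0)
print(axis_parallel_split(point, feature=0, threshold=1.5))      # False: 2.0 > 1.5
print(oblique_split(point, weights=(1.0, -1.0), threshold=1.5))  # True: 2.0 - 1.0 = 1.0 <= 1.5
```

An oblique test yields rules of the form "x0 - x1 <= 1.5", which can follow a slanted decision boundary with a single condition where axis-parallel tests would need several.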

Explainable Predictive Maintenance

Jun 08, 2023

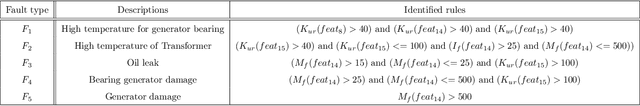

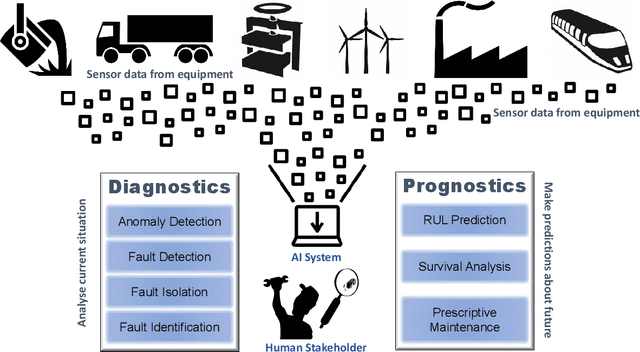

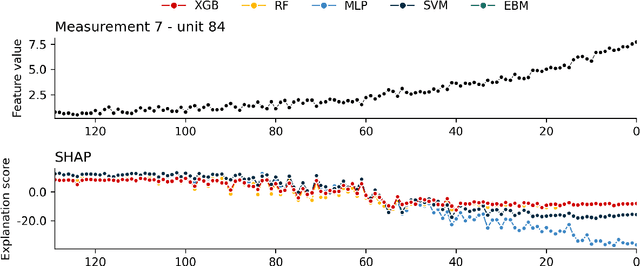

Abstract: Explainable Artificial Intelligence (XAI) fills the role of a critical interface fostering interactions between sophisticated intelligent systems and diverse individuals, including data scientists, domain experts, end-users, and more. It aids in deciphering the intricate internal mechanisms of "black box" Machine Learning (ML) models, rendering the reasons behind their decisions more understandable. However, current research in XAI primarily focuses on two aspects: facilitating user trust, and debugging and refining the ML model. The majority of it falls short of recognising the diverse types of explanations needed in broader contexts, as different users and varied application areas necessitate solutions tailored to their specific needs. One such domain is Predictive Maintenance (PdM), a rapidly growing area of research under the Industry 4.0 & 5.0 umbrella. This position paper highlights the gap between existing XAI methodologies and the specific requirements for explanations within industrial applications, particularly the Predictive Maintenance field. Despite explainability's crucial role, this subject remains a relatively under-explored area, making this paper a pioneering attempt to bring relevant challenges to the research community's attention. We provide an overview of predictive maintenance tasks and accentuate the need and varying purposes for corresponding explanations. We then list and describe XAI techniques commonly employed in the literature, discussing their suitability for PdM tasks. Finally, to make the ideas and claims more concrete, we demonstrate XAI applied in four specific industrial use cases: commercial vehicles, metro trains, steel plants, and wind farms, spotlighting areas requiring further research.

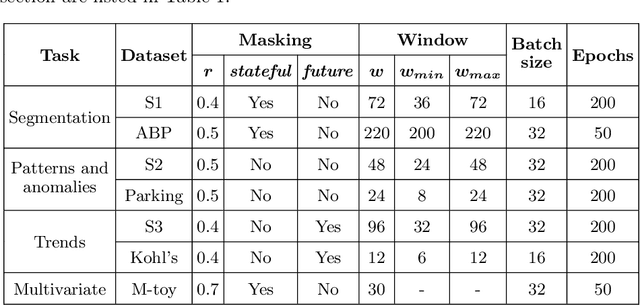

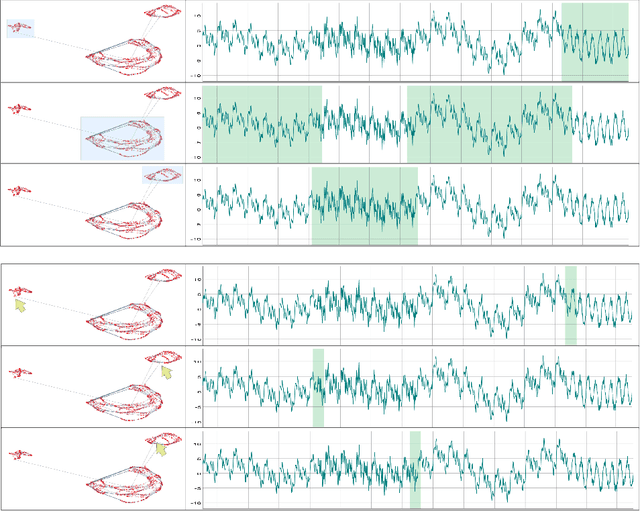

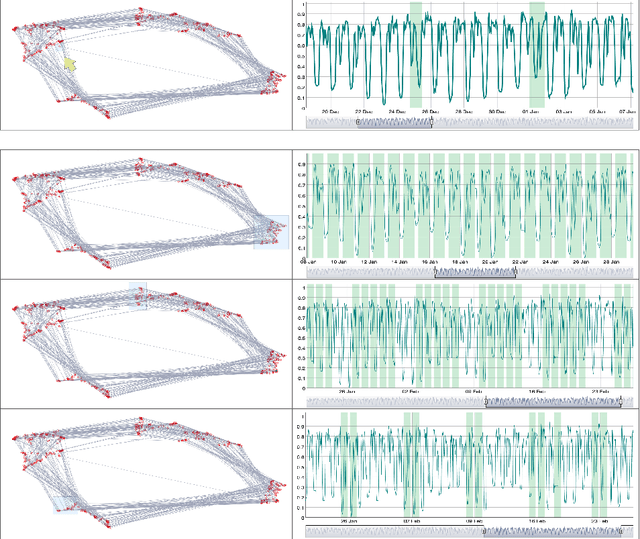

DeepVATS: Deep Visual Analytics for Time Series

Feb 08, 2023

Abstract: The field of Deep Visual Analytics (DVA) has recently arisen from the idea of developing Visual Interactive Systems supported by deep learning techniques, in order to provide them with large-scale data processing capabilities and to unify their implementation across different data modalities and domains of application. In this paper we present DeepVATS, an open-source tool that brings the field of DVA to time series data. DeepVATS trains, in a self-supervised way, a masked time series autoencoder that reconstructs patches of a time series, and projects the knowledge contained in the embeddings of that model onto an interactive plot, from which time series patterns and anomalies emerge and can be easily spotted. The tool has been tested on both synthetic and real datasets, and its code is publicly available at https://github.com/vrodriguezf/deepvats
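The self-supervised objective mentioned above (hide patches of a series, then score how well they are reconstructed) can be sketched in a few lines of plain Python. This is an illustration of the general masked-reconstruction idea only, not DeepVATS code: the function names, the zero mask value, the patch length, and the masking ratio are all assumptions, and the deep autoencoder itself is omitted.

```python
import random
from typing import List, Sequence, Tuple


def mask_patches(series: Sequence[float], patch_len: int, mask_ratio: float,
                 seed: int = 0, mask_value: float = 0.0) -> Tuple[List[float], List[int]]:
    """Split the series into non-overlapping patches and blank out a random subset."""
    n_patches = len(series) // patch_len
    if n_patches == 0:
        raise ValueError("series is shorter than one patch")
    rng = random.Random(seed)
    n_masked = max(1, round(n_patches * mask_ratio))
    masked_ids = sorted(rng.sample(range(n_patches), n_masked))
    masked = list(series)
    for p in masked_ids:
        start = p * patch_len
        masked[start:start + patch_len] = [mask_value] * patch_len
    return masked, masked_ids


def masked_mse(original: Sequence[float], reconstruction: Sequence[float],
               masked_ids: Sequence[int], patch_len: int) -> float:
    """Mean squared error computed only over the masked positions."""
    idx = [p * patch_len + k for p in masked_ids for k in range(patch_len)]
    return sum((original[i] - reconstruction[i]) ** 2 for i in idx) / len(idx)


# Toy series: a model would receive `masked` and be trained to minimise masked_mse.
series = [float(i) for i in range(1, 13)]
masked, ids = mask_patches(series, patch_len=3, mask_ratio=0.5)
print(ids, masked_mse(series, masked, ids, patch_len=3))
```

Restricting the loss to masked positions forces a model to infer the hidden patches from their context, which is what makes the learned embeddings informative.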

KnAC: an approach for enhancing cluster analysis with background knowledge and explanations

Dec 16, 2021

Abstract: Pattern discovery in multidimensional data sets has been a subject of research for decades. There exists a wide spectrum of clustering algorithms that can be used for that purpose. However, their practical applications have in common the post-clustering phase, which concerns expert-based interpretation and analysis of the obtained results. We argue that this can be a bottleneck of the process, especially in cases where domain knowledge exists prior to clustering. Such a situation requires not only a proper analysis of automatically discovered clusters, but also conformance checking against existing knowledge. In this work, we present Knowledge Augmented Clustering (KnAC), whose main goal is to confront expert-based labelling with automated clustering for the sake of updating and refining the former. Our solution does not depend on any ready-made clustering algorithm, nor does it introduce one. Instead, KnAC can serve as an augmentation of an arbitrary clustering algorithm, making the approach robust and model-agnostic. We demonstrate the feasibility of our method on artificial, reproducible examples and on a real-life use case scenario.
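One simple way to picture "confronting expert-based labelling with automated clustering" is a contingency table between the two assignments. The sketch below is a hypothetical illustration of that idea and not KnAC's actual procedure: the function names, the `min_share` threshold, and the rule for flagging a label are assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def contingency(expert_labels: Sequence[Hashable],
                cluster_ids: Sequence[Hashable]) -> Dict[Hashable, Counter]:
    """Count, for every expert label, how its instances are spread over clusters."""
    table: Dict[Hashable, Counter] = defaultdict(Counter)
    for label, cluster in zip(expert_labels, cluster_ids):
        table[label][cluster] += 1
    return table


def split_candidates(table: Dict[Hashable, Counter], min_share: float = 0.8) -> List[Hashable]:
    """Flag expert labels whose largest cluster holds less than `min_share` of their instances."""
    candidates = []
    for label, counts in table.items():
        total = sum(counts.values())
        if max(counts.values()) / total < min_share:
            candidates.append(label)
    return sorted(candidates)


# Toy data: label "A" matches one cluster, label "B" is divided between two.
expert = ["A", "A", "A", "A", "B", "B", "B", "B"]
clusters = [0, 0, 0, 0, 1, 1, 2, 2]
print(split_candidates(contingency(expert, clusters)))  # ['B']
```

A flagged label is only a prompt for the expert to review whether the class should be refined; the clustering algorithm that produced `clusters` can be arbitrary.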
