Francesco Piccialli

Towards One-shot Federated Learning: Advances, Challenges, and Future Directions

May 05, 2025

Abstract:One-shot Federated Learning (FL) enables collaborative training in a single round, eliminating the need for iterative communication and making it particularly suitable for resource-constrained and privacy-sensitive applications. This survey offers a thorough examination of One-shot FL, highlighting its distinct operational framework compared to traditional federated approaches. One-shot FL supports resource-limited devices by enabling single-round model aggregation while maintaining data locality. The survey systematically categorizes existing methodologies, emphasizing advancements in client model initialization, aggregation techniques, and strategies for managing heterogeneous data distributions. Furthermore, we analyze the limitations of current approaches, particularly in terms of scalability and generalization in non-IID settings. By analyzing cutting-edge techniques and outlining open challenges, this survey aspires to provide a comprehensive reference for researchers and practitioners aiming to design and implement One-shot FL systems, advancing the development and adoption of One-shot FL solutions in real-world, resource-constrained scenarios.
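As a rough illustration of single-round aggregation, the sketch below averages client parameters once, weighted by local sample counts. This is only one simple aggregation rule chosen for illustration, not a method taken from the survey; the function name `one_shot_aggregate`, the parameter layout, and the toy client values are all assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical parameter layout: parameter name -> flat list of floats.
Params = Dict[str, List[float]]


def one_shot_aggregate(client_updates: List[Tuple[Params, int]]) -> Params:
    """Combine client models in a single round.

    Each entry is (locally trained parameters, number of local samples).
    Raw data never leaves the client; only parameters are sent, once.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    first_params, _ = client_updates[0]
    aggregated = {name: [0.0] * len(vals) for name, vals in first_params.items()}

    for params, n in client_updates:
        weight = n / total  # clients with more data contribute more
        for name, vals in params.items():
            for i, v in enumerate(vals):
                aggregated[name][i] += weight * v
    return aggregated


# Toy example with two clients (values are made up).
clients = [
    ({"w": [1.0, 2.0], "b": [0.0]}, 10),
    ({"w": [3.0, 4.0], "b": [1.0]}, 30),
]
global_model = one_shot_aggregate(clients)
```

Plain weighted averaging tends to degrade under the non-IID conditions the abstract mentions, which is part of why the survey discusses alternative aggregation strategies.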

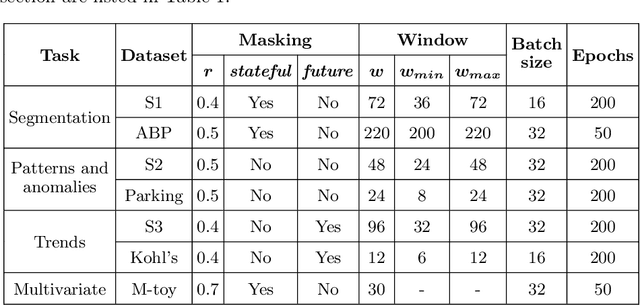

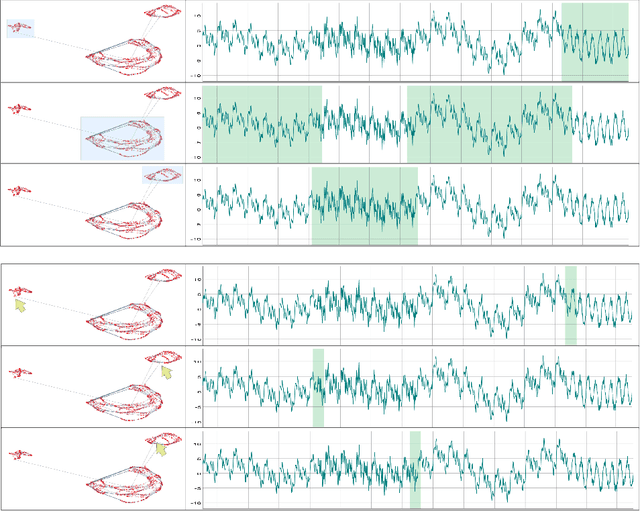

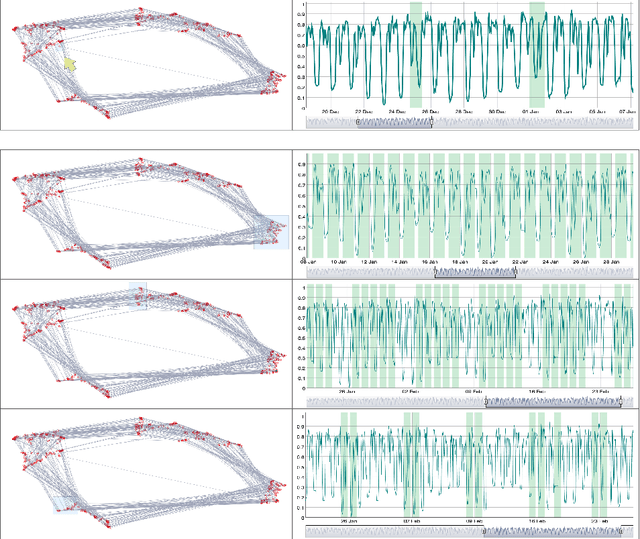

DeepVATS: Deep Visual Analytics for Time Series

Feb 08, 2023

Abstract:The field of Deep Visual Analytics (DVA) has recently arisen from the idea of developing Visual Interactive Systems supported by deep learning techniques, in order to provide them with large-scale data processing capabilities and to unify their implementation across different data modalities and domains of application. In this paper we present DeepVATS, an open-source tool that brings the field of DVA into time series data. DeepVATS trains, in a self-supervised way, a masked time series autoencoder that reconstructs patches of a time series, and projects the knowledge contained in the embeddings of that model onto an interactive plot, from which time series patterns and anomalies emerge and can be easily spotted. The tool has been tested on both synthetic and real datasets, and its code is publicly available at https://github.com/vrodriguezf/deepvats
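To make the idea of reconstructing masked patches concrete, here is a minimal sketch of how a time series might be split into fixed-length patches, some of which are hidden from the model. The abstract does not describe DeepVATS's actual masking scheme, so the function `mask_patches`, its parameters, and the use of a constant mask value are all assumptions.

```python
import random
from typing import List, Tuple


def mask_patches(
    series: List[float],
    patch_len: int,
    mask_ratio: float,
    seed: int = 0,
    mask_value: float = 0.0,
) -> Tuple[List[float], List[bool]]:
    """Hide a fraction of non-overlapping patches of a univariate series.

    Returns the masked copy and a boolean flag per time step (True = masked).
    Trailing samples that do not fill a whole patch are left untouched.
    """
    if patch_len <= 0:
        raise ValueError("patch_len must be positive")
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be in [0, 1]")

    n_patches = len(series) // patch_len
    n_masked = round(n_patches * mask_ratio)
    rng = random.Random(seed)  # seeded for reproducibility
    chosen = rng.sample(range(n_patches), n_masked)

    masked = list(series)
    is_masked = [False] * len(series)
    for p in chosen:
        for i in range(p * patch_len, (p + 1) * patch_len):
            masked[i] = mask_value
            is_masked[i] = True
    return masked, is_masked


# Toy series of 12 steps, 4 patches of length 3, half of them masked.
series = [float(i) for i in range(1, 13)]
masked_series, flags = mask_patches(series, patch_len=3, mask_ratio=0.5)
```

In a self-supervised setup, an autoencoder would receive `masked_series` and be trained to reproduce the original values at the positions where `flags` is true.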

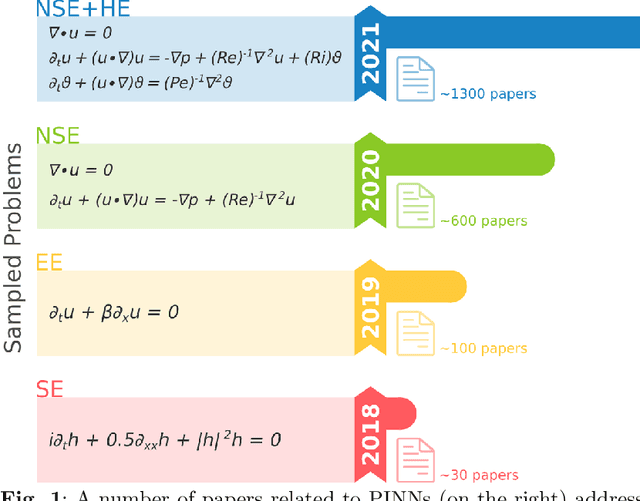

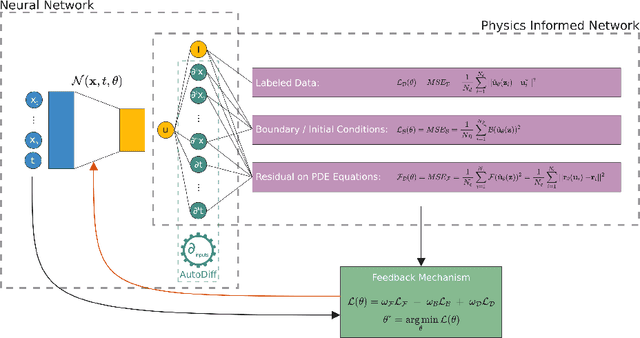

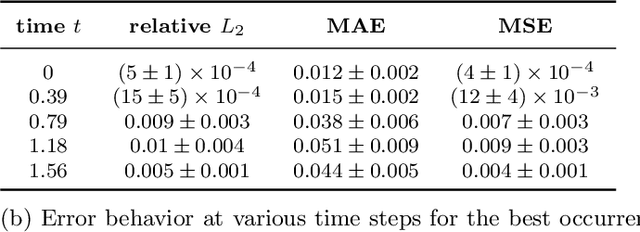

Scientific Machine Learning through Physics-Informed Neural Networks: Where we are and What's next

Jan 21, 2022

Abstract:Physics-Informed Neural Networks (PINNs) are neural networks (NNs) that encode model equations, like Partial Differential Equations (PDEs), as a component of the neural network itself. PINNs are nowadays used to solve PDEs, fractional equations, and integro-differential equations. This novel methodology has arisen as a multi-task learning framework in which a NN must fit observed data while reducing a PDE residual. This article provides a comprehensive review of the literature on PINNs: while the primary goal of the study was to characterize these networks and their related advantages and disadvantages, the review also attempts to incorporate publications on a larger variety of issues, including physics-constrained neural networks (PCNNs), where the initial or boundary conditions are directly embedded in the NN structure rather than in the loss functions. The study indicates that most research has focused on customizing the PINN through different activation functions, gradient optimization techniques, neural network structures, and loss function structures. Although PINNs have been applied to a wide range of problems and have proven more feasible in some contexts than classical numerical techniques like the Finite Element Method (FEM), advancements are still possible, most notably on theoretical issues that remain unresolved.
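The multi-task view in this abstract (fit observed data while reducing a PDE residual) is commonly written as a composite loss. The form below is a generic sketch rather than the notation of the review itself: $u_\theta$ is the network, $\mathcal{N}$ a differential operator with source term $f$, $(x_i, u_i)$ are $N_d$ observed data points, $x_j$ are $N_r$ collocation points, and $\lambda_d, \lambda_r$ are weighting factors, all of which are assumed symbols.

```latex
\mathcal{L}(\theta)
  = \lambda_d \, \frac{1}{N_d} \sum_{i=1}^{N_d} \bigl| u_\theta(x_i) - u_i \bigr|^2
  + \lambda_r \, \frac{1}{N_r} \sum_{j=1}^{N_r} \bigl| \mathcal{N}[u_\theta](x_j) - f(x_j) \bigr|^2
```

In a physics-constrained variant, as the abstract notes, initial or boundary conditions are built into the network structure, so they would not appear as additional penalty terms in this loss.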

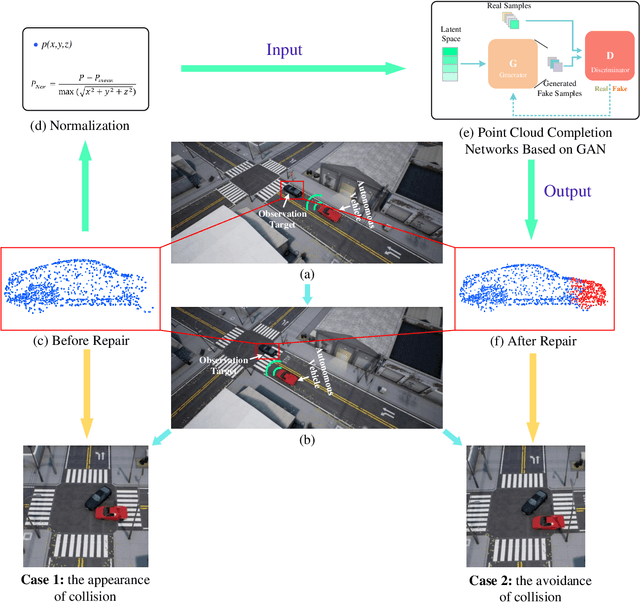

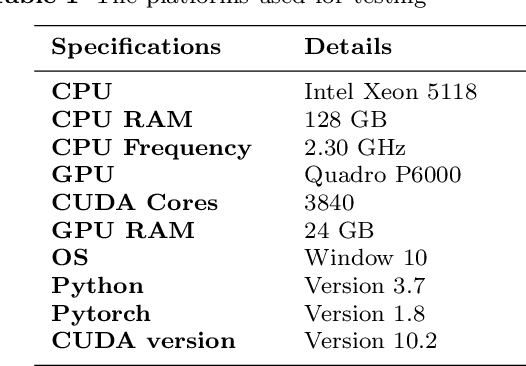

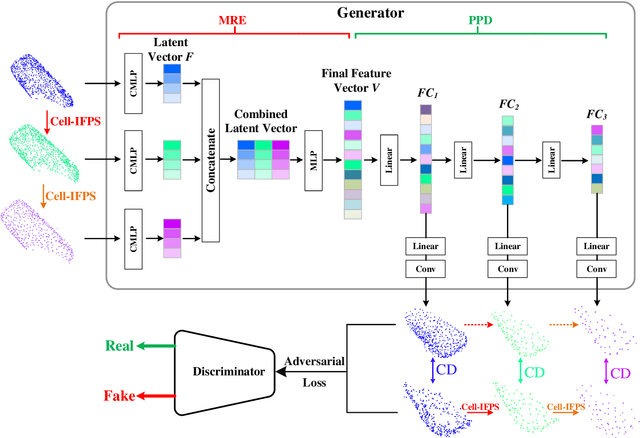

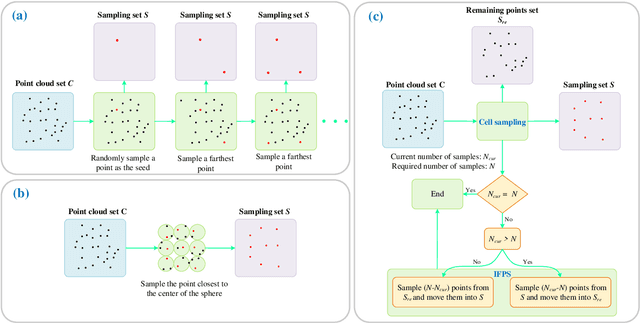

An Efficient Deep Learning Approach Using Improved Generative Adversarial Networks for Incomplete Information Completion of Self-driving

Sep 01, 2021

Abstract:Autonomous driving is the key technology of intelligent logistics in the Industrial Internet of Things (IIoT). In autonomous driving, the appearance of incomplete point clouds that lose geometric and semantic information is inevitable owing to limitations of occlusion, sensor resolution, and viewing angle when Light Detection And Ranging (LiDAR) is applied. The emergence of incomplete point clouds, especially incomplete vehicle point clouds, reduces the accuracy of autonomous driving vehicles in object detection, traffic alert, and collision avoidance. Existing point cloud completion networks, such as Point Fractal Network (PF-Net), focus on the accuracy of point cloud completion without considering the efficiency of the inference process, which makes it difficult for them to be deployed for vehicle point cloud repair in autonomous driving. To address the above problem, in this paper, we propose an efficient deep learning approach to repair incomplete vehicle point clouds accurately and efficiently in autonomous driving. In the proposed method, an efficient downsampling algorithm combining incremental sampling and one-time sampling is presented to improve the inference speed of the PF-Net based on Generative Adversarial Network (GAN). To evaluate the performance of the proposed method, a real dataset is used, and an autonomous driving scene is created, where three incomplete vehicle point clouds with 5 different sizes are set for three autonomous driving situations. The improved PF-Net can achieve speedups of over 19x with almost the same accuracy when compared to the original PF-Net. Experimental results demonstrate that the improved PF-Net can be applied to efficiently complete vehicle point clouds in autonomous driving.

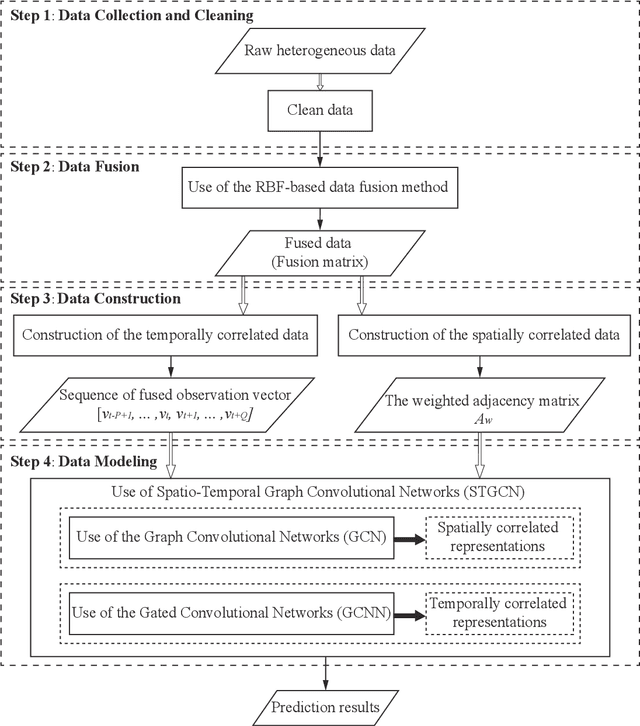

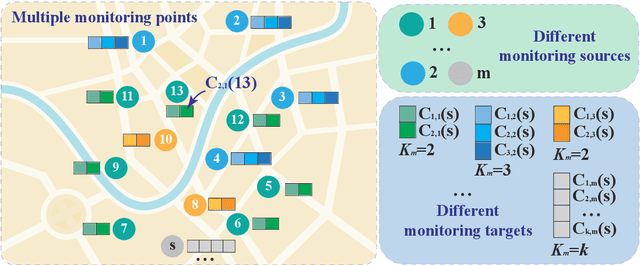

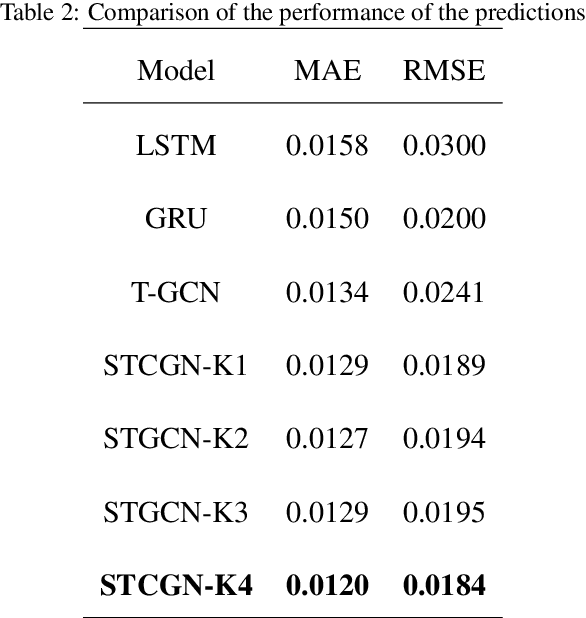

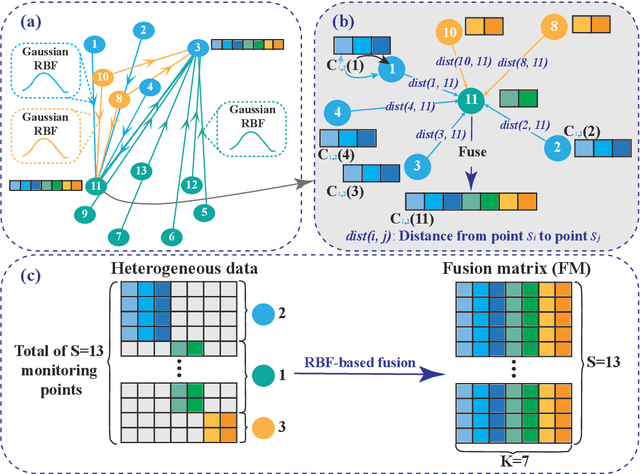

Heterogeneous Data Fusion Considering Spatial Correlations using Graph Convolutional Networks and its Application in Air Quality Prediction

May 24, 2021

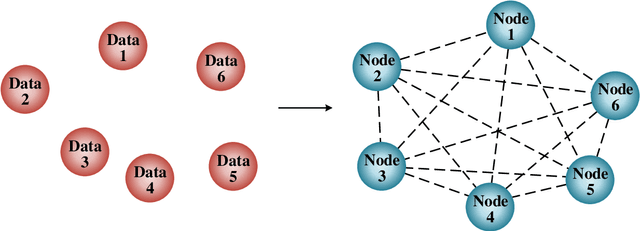

Abstract:Heterogeneous data are commonly adopted as the inputs for some models that predict the future trends of some observations. Existing predictive models typically ignore the inconsistencies and imperfections in heterogeneous data while also failing to consider the (1) spatial correlations among monitoring points or (2) predictions for the entire study area. To address the above problems, this paper proposes a deep learning method for fusing heterogeneous data collected from multiple monitoring points using graph convolutional networks (GCNs) to predict the future trends of some observations and evaluates its effectiveness by applying it in an air quality prediction scenario. The essential idea behind the proposed method is to (1) fuse the collected heterogeneous data based on the locations of the monitoring points with regard to their spatial correlations and (2) perform prediction based on global information rather than local information. In the proposed method, first, we assemble a fusion matrix using the proposed RBF-based fusion approach; second, based on the fused data, we construct spatially and temporally correlated data as inputs for the predictive model; finally, we employ the spatiotemporal graph convolutional network (STGCN) to predict the future trends of some observations. In the application scenario of air quality prediction, it is observed that (1) the fused data derived from the RBF-based fusion approach achieve satisfactory consistency; (2) the performances of the prediction models based on fused data are better than those based on raw data; and (3) the STGCN model achieves the best performance when compared to those of all baseline models. The proposed method is applicable to similar scenarios where continuous heterogeneous data are collected from multiple monitoring points scattered across a study area.

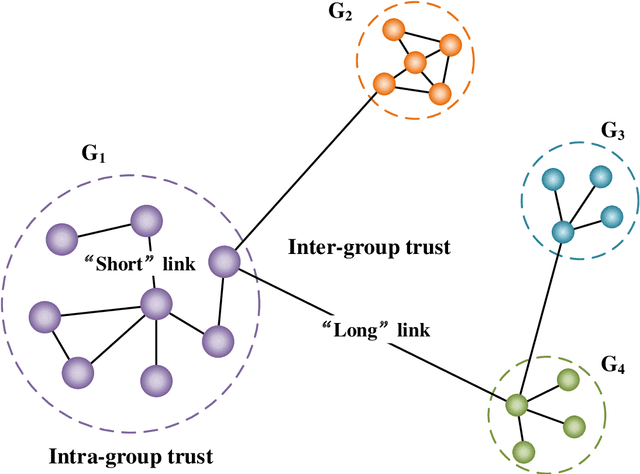

KCoreMotif: An Efficient Graph Clustering Algorithm for Large Networks by Exploiting k-core Decomposition and Motifs

Aug 21, 2020

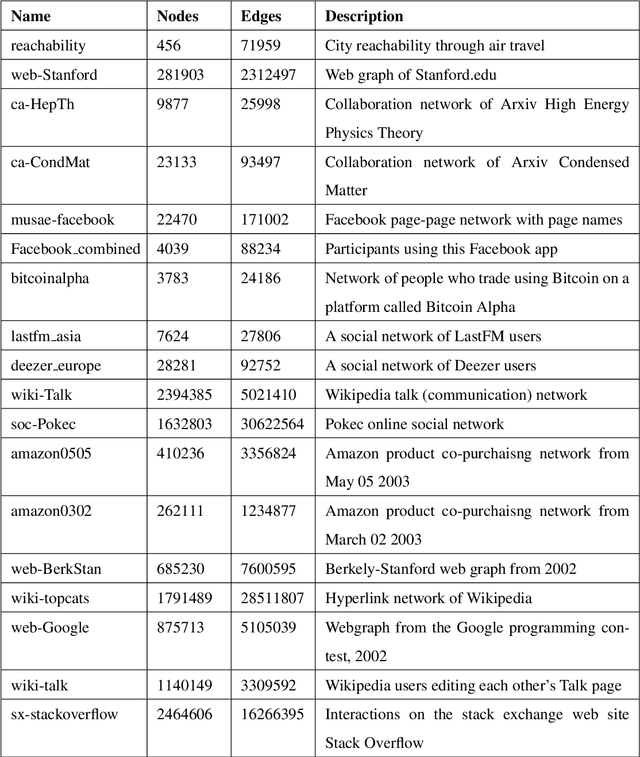

Abstract:Clustering analysis has been widely used in trust evaluation on various complex networks such as wireless sensor networks and online social networks. Spectral clustering is one of the most commonly used algorithms for graph-structured data (networks). However, conventional spectral clustering is inherently difficult to apply to large-scale networks because it requires computationally expensive matrix manipulations. To deal with large networks, in this paper, we propose an efficient graph clustering algorithm, KCoreMotif, specifically for large networks by exploiting k-core decomposition and motifs. The essential idea behind the proposed clustering algorithm is to perform the efficient motif-based spectral clustering algorithm on k-core subgraphs, rather than on the entire graph. More specifically, (1) we first conduct the k-core decomposition of the large input network; (2) we then perform the motif-based spectral clustering for the top k-core subgraphs; (3) we group the remaining vertices in the remaining (k-1)-core subgraphs into previously found clusters; and finally obtain the desired clusters of the large input network. To evaluate the performance of the proposed graph clustering algorithm KCoreMotif, we use both the conventional and the motif-based spectral clustering algorithms as the baselines and compare our algorithm with them on 18 groups of real-world datasets. Comparative results demonstrate that the proposed graph clustering algorithm is accurate yet efficient for large networks, which also means that it can be further used to evaluate the intra-cluster and inter-cluster trusts on large networks.
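Step (1) of the procedure above, the k-core decomposition, can be sketched with a simple peeling loop. This is a plain, unoptimized illustration and not the authors' implementation; the function names `core_numbers` and `top_core`, the adjacency-dict input format, and the toy graph are assumptions, and the motif-based spectral clustering of steps (2) and (3) is not shown.

```python
from typing import Dict, Hashable, Set

Graph = Dict[Hashable, Set[Hashable]]  # undirected adjacency sets


def core_numbers(adj: Graph) -> Dict[Hashable, int]:
    """Compute each vertex's core number by peeling minimum-degree vertices."""
    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    remaining = set(adj)
    core: Dict[Hashable, int] = {}
    k = 0
    while remaining:
        # Remove the vertex with the smallest remaining degree.
        v = min(remaining, key=degree.__getitem__)
        k = max(k, degree[v])  # core numbers never decrease while peeling
        core[v] = k
        remaining.remove(v)
        for u in adj[v]:
            if u in remaining:
                degree[u] -= 1
    return core


def top_core(adj: Graph) -> Set[Hashable]:
    """Vertices of the innermost (highest-k) core, where clustering would start."""
    core = core_numbers(adj)
    if not core:
        return set()
    k_max = max(core.values())
    return {v for v, c in core.items() if c == k_max}


# Toy graph: a triangle a-b-c with a pendant vertex d attached to a.
graph: Graph = {
    "a": {"b", "c", "d"},
    "b": {"a", "c"},
    "c": {"a", "b"},
    "d": {"a"},
}
dense_part = top_core(graph)
```

Restricting the expensive spectral step to `dense_part` and assigning the peeled-off vertices afterwards is the source of the efficiency gain the abstract describes. The linear-time bucket-based peeling algorithm would replace the `min` scan on large inputs.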

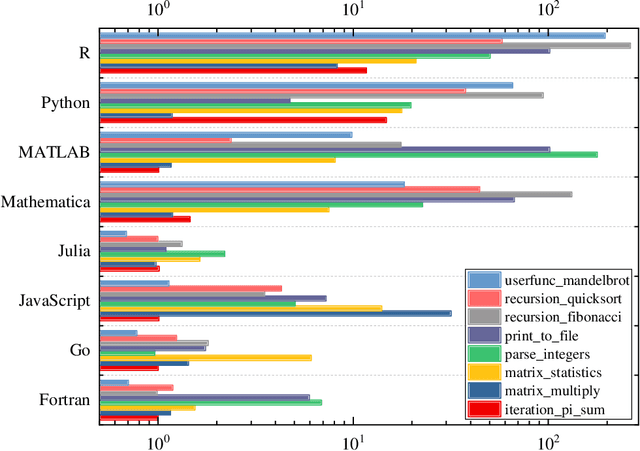

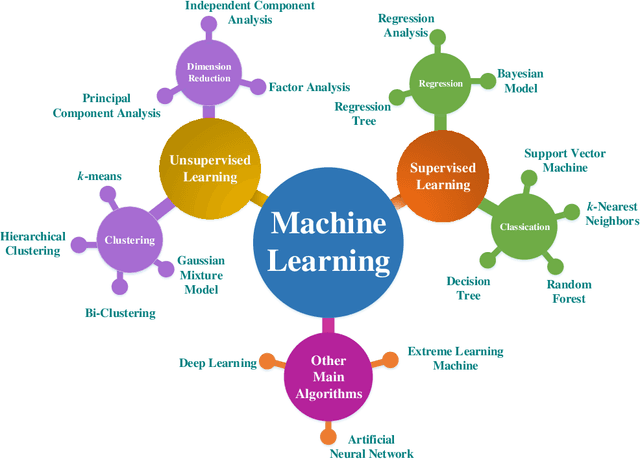

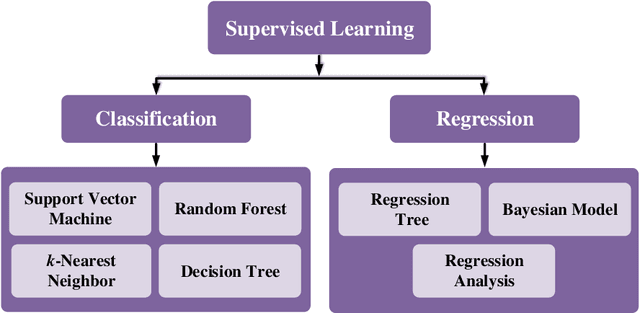

Julia Language in Machine Learning: Algorithms, Applications, and Open Issues

Mar 23, 2020

Abstract:Machine learning is driving development across many fields in science and engineering. A simple and efficient programming language could accelerate applications of machine learning in various fields. Currently, the programming languages most commonly used to develop machine learning algorithms include Python, MATLAB, and C/C++. However, none of these languages balances efficiency and simplicity well. The Julia language is a fast, easy-to-use, and open-source programming language that was originally designed for high-performance computing and that balances efficiency and simplicity well. This paper summarizes the related research work and developments in the application of the Julia language in machine learning. It first surveys the popular machine learning algorithms that are developed in the Julia language. Then, it investigates applications of the machine learning algorithms implemented with the Julia language. Finally, it discusses the open issues and the potential future directions that arise in the use of the Julia language in machine learning.
