Gonzalo Mateo-Garcia

AI for operational methane emitter monitoring from space

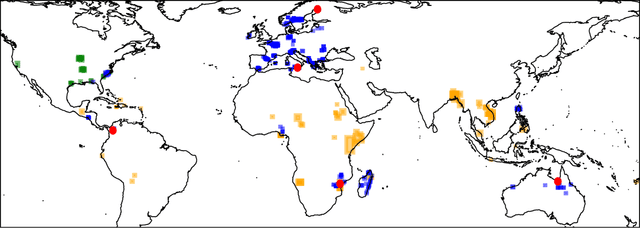

Aug 08, 2024

Abstract: Mitigating methane emissions is the fastest way to stop global warming in the short term and buy humanity time to decarbonise. Despite the demonstrated ability of remote sensing instruments to detect methane plumes, no system has been available to routinely monitor and act on these events. We present MARS-S2L, an automated AI-driven methane emitter monitoring system for Sentinel-2 and Landsat satellite imagery, deployed operationally at the United Nations Environment Programme's International Methane Emissions Observatory. We compile a global dataset of thousands of super-emission events for training and evaluation, demonstrating that MARS-S2L can skillfully monitor emissions in a diverse range of regions globally, providing a 216% improvement in mean average precision over a current state-of-the-art detection method. Running this system operationally for six months has yielded 457 near-real-time detections in 22 different countries, of which 62 have already been used to provide formal notifications to governments and stakeholders.
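For readers unfamiliar with the metric, the following is a minimal sketch of how average precision and a relative improvement figure can be computed. The abstract does not say which variant of mean average precision the authors use, so the ranking-based definition below is an assumption, and the function names and toy inputs are hypothetical.

```python
def average_precision(scores, labels):
    """Average precision for one class: the mean of the precision values
    measured at the rank of each true positive (assumed definition)."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precisions = []
    for rank, (_, is_positive) in enumerate(ranked, start=1):
        if is_positive:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def relative_improvement_percent(new, baseline):
    """Relative improvement of `new` over `baseline`, in percent."""
    return 100.0 * (new - baseline) / baseline


# Toy ranking (invented values): positives at ranks 1 and 3.
toy_ap = average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])

# A score 3.16 times the baseline corresponds to a 216% improvement.
improvement = relative_improvement_percent(3.16, 1.0)
```

Under this reading, a 216% improvement means the reported score is a little over three times that of the baseline method.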

Multi-Spectral Multi-Image Super-Resolution of Sentinel-2 with Radiometric Consistency Losses and Its Effect on Building Delineation

Nov 05, 2021

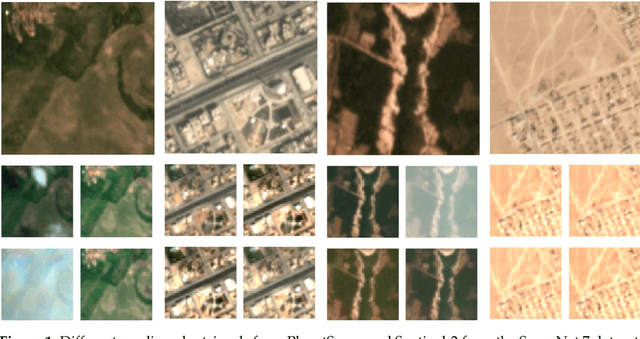

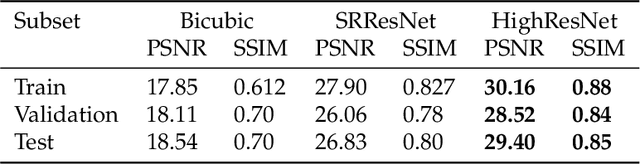

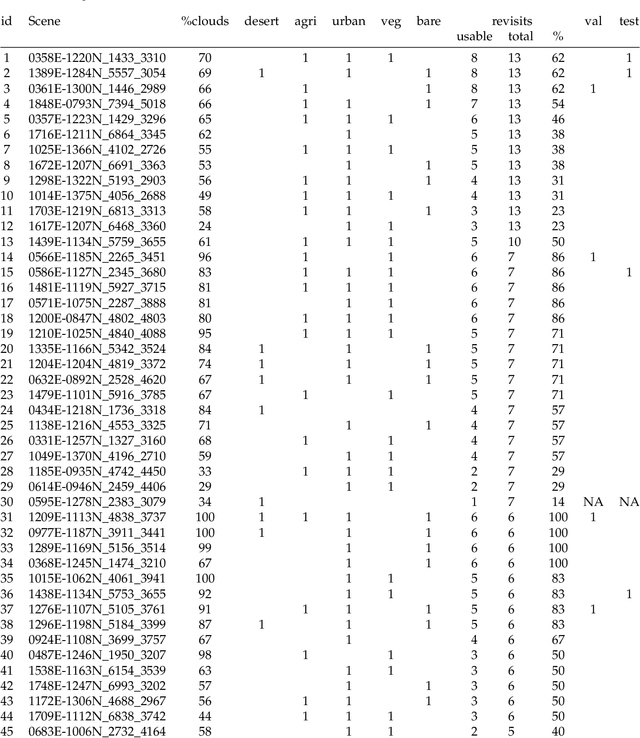

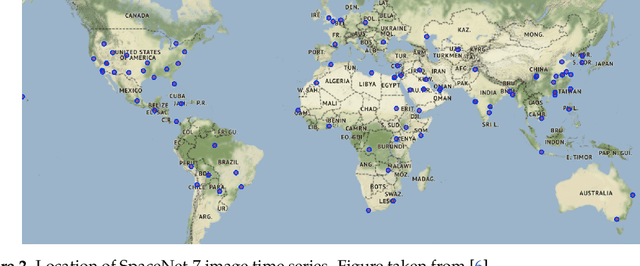

Abstract: High-resolution remote sensing imagery is used in a broad range of tasks, including detection and classification of objects. High-resolution imagery is, however, expensive, while lower-resolution imagery is often freely available and can be used by the public for a range of social good applications. To that end, we curate a multi-spectral multi-image super-resolution dataset, using PlanetScope imagery from the SpaceNet 7 challenge as the high-resolution reference and multiple Sentinel-2 revisits of the same imagery as the low-resolution imagery. We present the first results of applying multi-image super-resolution (MISR) to multi-spectral remote sensing imagery. We additionally introduce a radiometric consistency module into the MISR model to preserve the high radiometric resolution of the Sentinel-2 sensor. We show that MISR is superior to single-image super-resolution and other baselines on a range of image fidelity metrics. Furthermore, we conduct the first assessment of the utility of multi-image super-resolution on building delineation, showing that utilising multiple images results in better performance in these downstream tasks.

Unsupervised Change Detection of Extreme Events Using ML On-Board

Nov 04, 2021

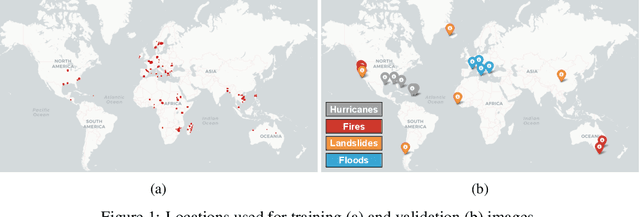

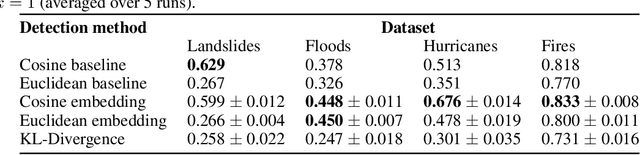

Abstract: In this paper, we introduce RaVAEn, a lightweight, unsupervised approach for change detection in satellite data based on Variational Auto-Encoders (VAEs), designed specifically for on-board deployment. Applications such as disaster management benefit enormously from the rapid availability of satellite observations. Traditionally, data analysis is performed on the ground after all data is transferred (downlinked) to a ground station. Constraints on downlink capabilities therefore affect any downstream application. In contrast, RaVAEn pre-processes the sampled data directly on the satellite and flags changed areas to prioritise for downlink, shortening the response time. We verified the efficacy of our system on a dataset composed of time series of catastrophic events, which we plan to release alongside this publication, demonstrating that RaVAEn outperforms pixel-wise baselines. Finally, we tested our approach on resource-limited hardware to assess computational and memory limitations.
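The idea of flagging changed areas for priority downlink can be sketched as follows. This is not the authors' implementation: the abstract does not state how change is scored, so comparing per-tile embeddings with cosine distance is an assumption, and the encoder here is a hypothetical stand-in (a fixed random linear projection) for a trained VAE encoder.

```python
import math
import random


def make_toy_encoder(input_dim, latent_dim, seed=0):
    """Hypothetical stand-in for a trained VAE encoder: a fixed random
    linear projection from a flattened tile to a latent vector."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(input_dim)]
               for _ in range(latent_dim)]

    def encode(tile):
        return [sum(w * x for w, x in zip(row, tile)) for row in weights]

    return encode


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means the embeddings point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm > 0 else 0.0


def change_scores(tiles_before, tiles_after, encode):
    """Score each tile by the distance between its two embeddings."""
    return [cosine_distance(encode(before), encode(after))
            for before, after in zip(tiles_before, tiles_after)]


def tiles_to_downlink(scores, budget):
    """Indices of the `budget` highest-scoring tiles, most changed first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:budget]


# Toy example (invented values): tile 0 is unchanged, tile 1 changes.
encode = make_toy_encoder(input_dim=4, latent_dim=8)
before = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.5, 0.5, 0.5]]
after = [[0.1, 0.2, 0.3, 0.4], [0.9, 0.1, 0.8, 0.0]]
scores = change_scores(before, after, encode)
priority = tiles_to_downlink(scores, budget=1)
```

Ranking tiles against a fixed budget, rather than thresholding, reflects the downlink constraint described in the abstract: only the most changed tiles are sent first.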

Retrieval of Case 2 Water Quality Parameters with Machine Learning

Dec 08, 2020

Abstract: Water quality parameters are derived by applying several machine learning regression methods to the Case2eXtreme dataset (C2X). The data used are based on Hydrolight in-water radiative transfer simulations at Sentinel-3 OLCI wavebands, and the application is done exclusively for absorbing waters with high concentrations of coloured dissolved organic matter (CDOM). The regression approaches are: regularized linear, random forest, kernel ridge, Gaussian process and support vector regressors. The validation is made with an independent simulation dataset. A comparison with the OLCI Neural Network Swarm (ONSS) is made as well. The best approach is applied to a sample scene and compared with the standard OLCI product delivered by EUMETSAT/ESA.

Pix2Streams: Dynamic Hydrology Maps from Satellite-LiDAR Fusion

Nov 15, 2020

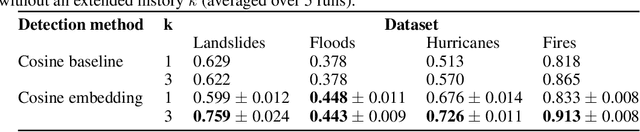

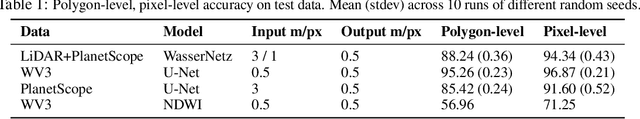

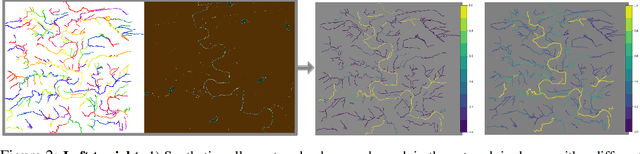

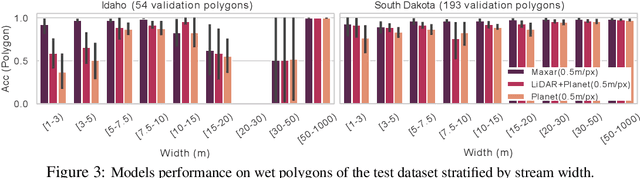

Abstract: Where are the Earth's streams flowing right now? Inland surface waters expand with floods and contract with droughts, so there is no single map of our streams. Current satellite approaches are limited to monthly observations that map only the widest streams. These are fed by smaller tributaries that make up much of the dendritic surface network but whose flow is unobserved. A complete map of our daily waters can give us an early warning for where droughts are born: the receding tips of the flowing network. Mapping them over years can give us a map of impermanence of our waters, showing where to expect water, and where not to. To that end, we feed the latest high-res sensor data to multiple deep learning models in order to map these flowing networks every day, stacking the time-series maps over many years. Specifically, i) we enhance water segmentation to 50 cm/pixel resolution, a 60$\times$ improvement over previous state-of-the-art results. Our U-Net trained on 30-40 cm WorldView3 images can detect streams as narrow as 1-3 m (30-60$\times$ over SOTA). Our multi-sensor, multi-res variant, WasserNetz, fuses a multi-day window of 3 m PlanetScope imagery with 1 m LiDAR data to detect streams 5-7 m wide. Both U-Nets produce a water probability map at the pixel level. ii) We integrate this water map over a DEM-derived synthetic valley network map to produce a snapshot of flow at the stream level. iii) We apply this pipeline, which we call Pix2Streams, to a 2-year daily PlanetScope time series of three watersheds in the US to produce the first high-fidelity dynamic map of stream flow frequency. The end result is a new map that, if applied at the national scale, could fundamentally improve how we manage our water resources around the world.
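Steps ii) and iii) can be illustrated with a minimal sketch. The abstract does not specify how pixel probabilities are aggregated per stream, so the rule used here (mean probability along a segment, compared against a 0.5 threshold) is an assumption, and the segment names, pixel coordinates and probability values are invented for illustration.

```python
def segment_is_flowing(prob_map, segment_pixels, threshold=0.5):
    """Assumed rule: a segment flows on a given day if the mean water
    probability over its pixels reaches the threshold."""
    total = sum(prob_map[row][col] for row, col in segment_pixels)
    return total / len(segment_pixels) >= threshold


def flow_frequency(daily_prob_maps, segments, threshold=0.5):
    """Fraction of days on which each stream segment is flagged as flowing."""
    days = len(daily_prob_maps)
    return {
        name: sum(segment_is_flowing(prob_map, pixels, threshold)
                  for prob_map in daily_prob_maps) / days
        for name, pixels in segments.items()
    }


# Toy valley network: each segment is a list of (row, col) pixels that
# would, in practice, come from a DEM-derived network map.
segments = {
    "main_stem": [(0, 0), (0, 1), (0, 2)],
    "tributary": [(1, 0), (1, 1)],
}

# Two days of pixel-level water probabilities (hypothetical values).
day_1 = [[0.9, 0.8, 0.9],
         [0.7, 0.6, 0.0]]
day_2 = [[0.9, 0.9, 0.8],
         [0.1, 0.2, 0.0]]

frequency = flow_frequency([day_1, day_2], segments)
```

In this toy case the main stem flows on both days while the tributary flows on only one, which is the kind of per-stream frequency a multi-year stack of daily maps would summarise.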

Flood Detection On Low Cost Orbital Hardware

Oct 14, 2019

Abstract: Satellite imaging is a critical technology for monitoring and responding to natural disasters such as flooding. Despite the capabilities of modern satellites, there is still much to be desired from the perspective of first response organisations like UNICEF. Two main challenges are rapid access to data, and the ability to automatically identify flooded regions in images. We describe a prototypical flood segmentation system, identifying cloud, water and land, that could be deployed on a constellation of small satellites, performing processing on board to reduce downlink bandwidth by 2 orders of magnitude. We target PhiSat-1, part of the FSSCAT mission, which is planned to be launched by the European Space Agency (ESA) near the start of 2020 as a proof of concept for this new technology.
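To see where a reduction of about two orders of magnitude could come from, the sketch below compares the size of raw multispectral pixels with a packed three-class mask. The abstract does not describe the on-board encoding, so the 2-bits-per-pixel packing is an assumption, and the band count and bit depth are hypothetical illustration values.

```python
def pack_mask(labels):
    """Pack class labels (0 = land, 1 = water, 2 = cloud) into 2 bits each,
    four pixels per byte."""
    packed = bytearray((len(labels) + 3) // 4)
    for i, label in enumerate(labels):
        if not 0 <= label <= 3:
            raise ValueError("label does not fit in 2 bits")
        packed[i // 4] |= label << (2 * (i % 4))
    return bytes(packed)


def unpack_mask(packed, count):
    """Recover `count` class labels from the packed bytes."""
    return [(packed[i // 4] >> (2 * (i % 4))) & 0b11 for i in range(count)]


def downlink_ratio(bands, bits_per_sample, mask_bits_per_pixel=2):
    """Raw bits per pixel divided by mask bits per pixel."""
    return (bands * bits_per_sample) / mask_bits_per_pixel


# Hypothetical sensor: 13 bands stored at 16 bits per sample.
ratio = downlink_ratio(bands=13, bits_per_sample=16)

# Round trip on a tiny mask of six pixels.
labels = [0, 1, 2, 1, 0, 2]
packed = pack_mask(labels)
restored = unpack_mask(packed, len(labels))
```

With these assumed figures the mask is roughly one hundred times smaller than the raw pixels, before any further compression.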
