Genghang Zhuang

Optimizing Dynamic Balance in a Rat Robot via the Lateral Flexion of a Soft Actuated Spine

Mar 01, 2024

Abstract: For mammals, balancing with the spine is the physiological alignment of body posture by muscular forces in the most efficient manner. For this reason, many disabled quadruped animals can still stand or walk with only three limbs. This paper investigates the optimization of dynamic balance during trot gait based on the spatial relationship between the center of mass (CoM) and the support area, as influenced by spinal flexion. During trotting, the robot's balance is significantly influenced by the distance from the CoM to the support area formed by the diagonal footholds. In this context, lateral spinal flexion, which can modify the position of the footholds, holds promise for optimizing balance during trotting. This paper explores this phenomenon using a rat robot equipped with a soft actuated spine. Based on the lateral flexion of the spine, we establish a kinematic model to quantify the impact of spinal flexion on robot balance during trot gait. Subsequently, we develop an optimized controller for spinal flexion, designed to enhance balance without altering the leg locomotion. The effectiveness of our proposed controller is evaluated through extensive simulations and physical experiments conducted on a rat robot. Compared to both a non-spine-based trot gait controller and a trot gait controller with lateral spinal flexion, our proposed optimized controller effectively improves the dynamic balance of the robot and retains the desired locomotion during trotting.
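To make the balance quantity in this abstract concrete, the following is a minimal sketch of one way to measure the distance from the ground projection of the CoM to the support line between two diagonal footholds. It is an illustration only and not the paper's kinematic model: the function name `com_to_support_line_distance`, the 2D ground-plane coordinates, and the treatment of the support area as a line segment are all assumptions made here.

```python
import math


def com_to_support_line_distance(com_xy, foot_a_xy, foot_b_xy):
    """Distance from the CoM ground projection to the segment foot_a-foot_b.

    Hypothetical helper: treats the trot support area as the line segment
    joining the two diagonal footholds (an assumption, not the paper's model).
    """
    px, py = com_xy
    ax, ay = foot_a_xy
    bx, by = foot_b_xy
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate case: both footholds coincide.
        return math.hypot(px - ax, py - ay)
    # Projection parameter of the CoM onto the segment, clamped to [0, 1].
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    closest_x, closest_y = ax + t * dx, ay + t * dy
    return math.hypot(px - closest_x, py - closest_y)
```

Under these assumptions, a smaller distance means the CoM projection lies closer to the diagonal support line. Shifting the footholds, for example through lateral spinal flexion, changes the segment endpoints and therefore the distance.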

Learning from Symmetry: Meta-Reinforcement Learning with Symmetric Data and Language Instructions

Sep 21, 2022

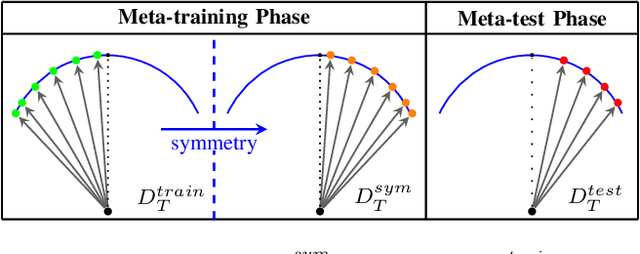

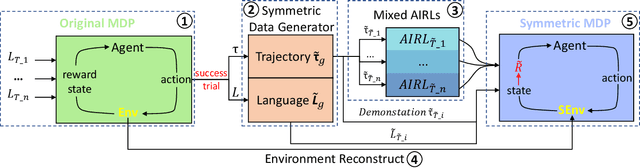

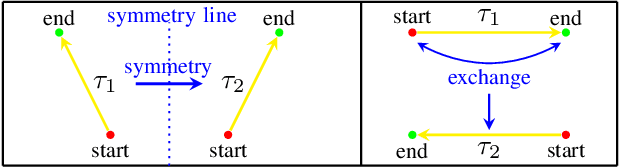

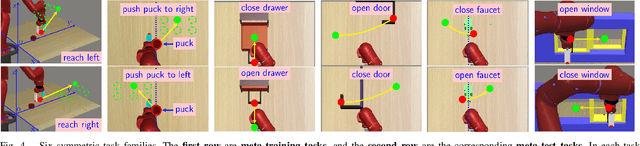

Abstract: Meta-reinforcement learning (meta-RL) is a promising approach that enables the agent to learn new tasks quickly. However, most meta-RL algorithms show poor generalization in multiple-task scenarios because rewards alone provide insufficient task information. Language-conditioned meta-RL improves generalization by matching language instructions with the agent's behaviors. Learning from symmetry is an important form of human learning; therefore, combining symmetry and language instructions in meta-RL can help improve the algorithm's generalization and learning efficiency. We thus propose a dual-MDP meta-reinforcement learning method that enables learning new tasks efficiently with symmetric data and language instructions. We evaluate our method on multiple challenging manipulation tasks, and experimental results show that our method can greatly improve the generalization and efficiency of meta-reinforcement learning.

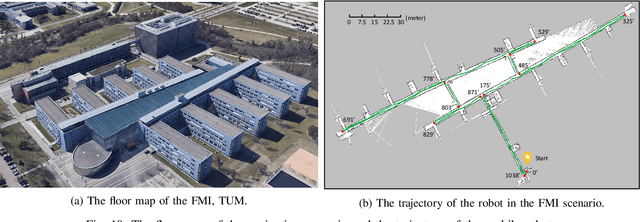

A Biologically Inspired Global Localization System for Mobile Robots Using LiDAR Sensor

Sep 27, 2021

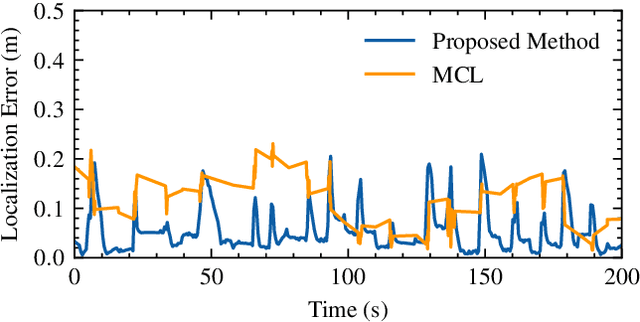

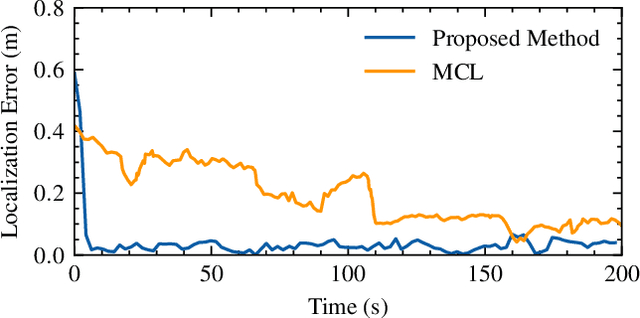

Abstract: Localization in the environment is an essential navigational capability for animals and mobile robots. In indoor environments, the global localization problem remains challenging to solve perfectly with probabilistic methods. However, animals are able to instinctively localize themselves with much less effort. Therefore, it is intriguing and promising to seek biological inspiration from animals. In this paper, we present a biologically inspired global localization system using a LiDAR sensor that utilizes a hippocampal model and a landmark-based re-localization approach. The experimental results show that the proposed method is competitive with Monte Carlo Localization, and they demonstrate the high accuracy, applicability, and reliability of the proposed biologically inspired localization system in different localization scenarios.

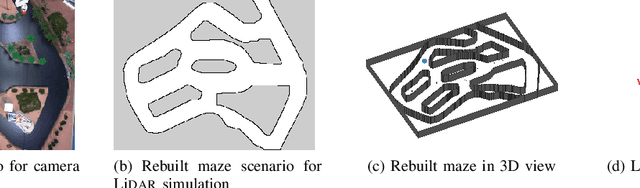

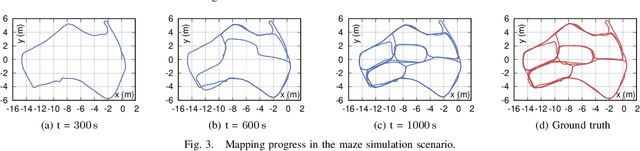

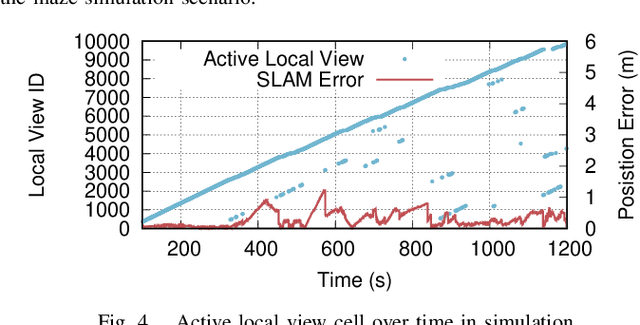

A Biologically Inspired Simultaneous Localization and Mapping System Based on LiDAR Sensor

Sep 27, 2021

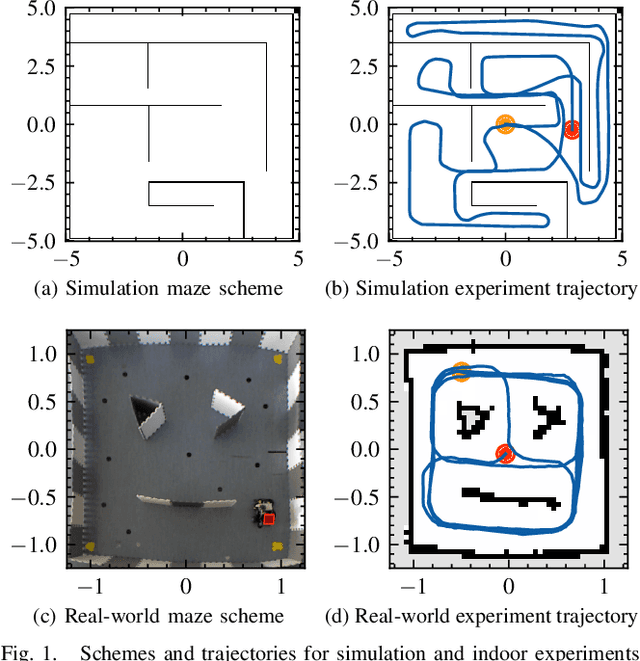

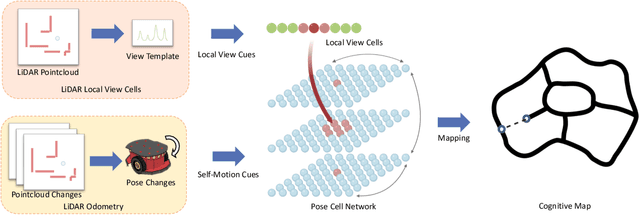

Abstract: Simultaneous localization and mapping (SLAM) is one of the essential techniques and functionalities used by robots to perform autonomous navigation tasks. Inspired by the rodent hippocampus, this paper presents a biologically inspired SLAM system based on a LiDAR sensor, using a hippocampal model to build a cognitive map and estimate the robot pose in indoor environments. Based on the biologically inspired model, the SLAM system uses point cloud data from the LiDAR sensor and leverages the self-motion cues from the LiDAR odometry and the local view cues from the LiDAR local view cells to build the cognitive map and estimate the robot pose. Experimental results show that the proposed SLAM system is highly applicable and sufficiently accurate for LiDAR-based SLAM tasks in both simulation and indoor environments.

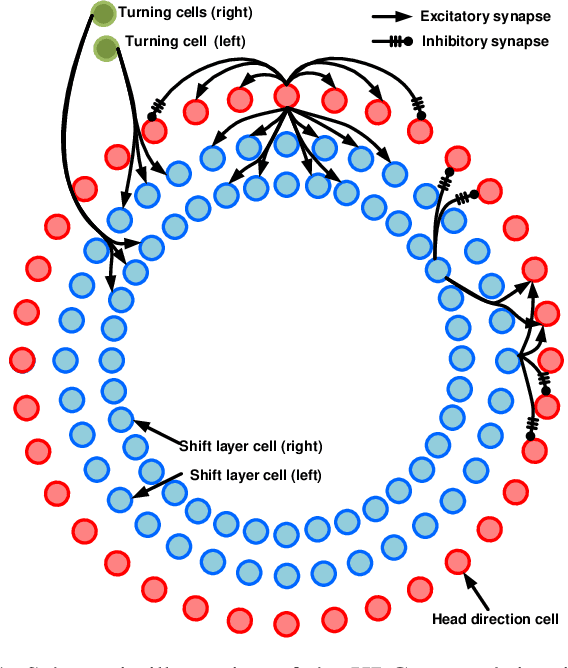

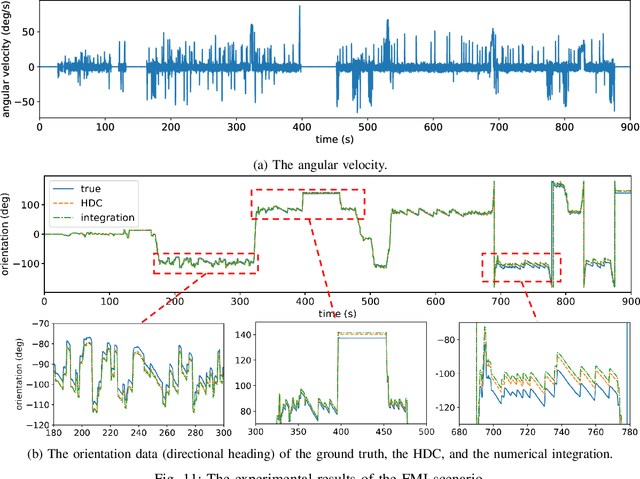

Towards Cognitive Navigation: Design and Implementation of a Biologically Inspired Head Direction Cell Network

Sep 22, 2021

Abstract: As a vital cognitive function of animals, the navigation skill is first built on the accurate perception of the directional heading in the environment. Head direction cells (HDCs), found in the limbic system of animals, have been shown to play an important role in identifying the directional heading allocentrically in the horizontal plane, independent of the animal's location and the ambient conditions of the environment. However, practical HDC models that can be implemented in robotic applications are rarely investigated, especially those that are biologically plausible and yet applicable to the real world. In this paper, we propose a computational HDC network that is consistent with several neurophysiological findings concerning biological HDCs, and then implement it in robotic navigation tasks. The HDC network maintains a representation of the directional heading, relying only on the angular velocity as input. We examine the proposed HDC model in extensive simulations and real-world experiments and demonstrate its excellent performance in terms of accuracy and real-time capability.
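As a point of reference for what "relying only on the angular velocity as input" means, the sketch below integrates angular velocity samples into a wrapped heading estimate. This is plain dead-reckoning and not the HDC network proposed in the paper; the function names `wrap_angle` and `integrate_heading`, the fixed time step `dt`, and the use of radians are assumptions made for illustration.

```python
import math


def wrap_angle(theta):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi


def integrate_heading(initial_heading, angular_velocities, dt):
    """Integrate angular velocity samples (rad/s) into heading estimates.

    Hypothetical baseline: simple Euler integration with a fixed step dt (s).
    Returns the heading after each step, starting with the initial heading.
    """
    heading = wrap_angle(initial_heading)
    history = [heading]
    for omega in angular_velocities:
        heading = wrap_angle(heading + omega * dt)
        history.append(heading)
    return history
```

A baseline of this kind accumulates drift from sensor noise, since nothing corrects the estimate once an error enters the sum.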
