Erfan Aasi

See Less, Drive Better: Generalizable End-to-End Autonomous Driving via Foundation Models Stochastic Patch Selection

Jan 15, 2026

Abstract:Recent advances in end-to-end autonomous driving show that policies trained on patch-aligned features extracted from foundation models generalize better to Out-of-Distribution (OOD) scenarios. We hypothesize that, due to the self-attention mechanism, each patch feature implicitly embeds information from all other patches, represented in a different way and with a different intensity, making these descriptors highly redundant. We quantify redundancy in such (BLIP2) features via PCA and cross-patch similarity: $90$% of the variance is captured by $17/64$ principal components, and strong inter-token correlations are pervasive. Training on such overlapping information leads the policy to overfit spurious correlations, hurting OOD robustness. We present Stochastic-Patch-Selection (SPS), a simple yet effective approach for learning policies that are more robust, generalizable, and efficient. For every frame, SPS randomly masks a fraction of patch descriptors, not feeding them to the policy model, while preserving the spatial layout of the remaining patches. Thus, the policy is provided with different stochastic but complete views of the (same) scene: every random subset of patches acts like a different, yet still sensible, coherent projection of the world. The policy thus bases its decisions on features that are invariant to which specific tokens survive. Extensive experiments confirm that across all OOD scenarios, our method outperforms the state of the art (SOTA), achieving a $6.2$% average improvement and up to $20.4$% in closed-loop simulations, while being $2.4\times$ faster. We conduct ablations over masking rates and patch-feature reorganization, training and evaluating 9 systems, with 8 of them surpassing prior SOTA. Finally, we show that the same learned policy transfers to a physical, real-world car without any tuning.
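The per-frame masking step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `stochastic_patch_selection`, the nested-list grid representation, and the choice to preserve spatial layout by returning row-major `(row, col)` positions alongside the surviving features are all assumptions made for illustration.

```python
import random


def stochastic_patch_selection(patch_grid, mask_ratio, rng=None):
    """Randomly drop a fraction of patch descriptors from one frame.

    patch_grid: H x W nested list; each entry is a feature vector (list of floats).
    mask_ratio: fraction of patches to drop, in [0, 1).
    Returns (kept_features, kept_positions), both in row-major order, so the
    surviving patches keep their relative spatial arrangement.
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = rng or random.Random()
    height, width = len(patch_grid), len(patch_grid[0])
    num_patches = height * width
    # Always keep at least one patch so the policy never receives an empty input.
    num_keep = max(1, num_patches - int(round(mask_ratio * num_patches)))
    # Sample surviving flat indices, then sort to restore row-major order.
    kept = sorted(rng.sample(range(num_patches), num_keep))
    kept_positions = [(i // width, i % width) for i in kept]
    kept_features = [patch_grid[r][c] for r, c in kept_positions]
    return kept_features, kept_positions


# Hypothetical 8 x 8 grid of 2-dimensional descriptors (64 patches in total).
grid = [[[float(r), float(c)] for c in range(8)] for r in range(8)]
features, positions = stochastic_patch_selection(grid, 0.5, random.Random(0))
print(len(features), positions[:3])  # 32 patches survive at a 50% masking rate
```

Calling the function again on the same frame with a different random state yields a different subset of the same scene, which is the property the abstract relies on: the policy cannot depend on any specific token surviving.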

SAFe-Copilot: Unified Shared Autonomy Framework

Nov 06, 2025

Abstract:Autonomous driving systems remain brittle in rare, ambiguous, and out-of-distribution scenarios, where human drivers succeed through contextual reasoning. Shared autonomy has emerged as a promising approach to mitigate such failures by incorporating human input when autonomy is uncertain. However, most existing methods restrict arbitration to low-level trajectories, which represent only geometric paths and therefore fail to preserve the underlying driving intent. We propose a unified shared autonomy framework that integrates human input and autonomous planners at a higher level of abstraction. Our method leverages Vision Language Models (VLMs) to infer driver intent from multi-modal cues -- such as driver actions and environmental context -- and to synthesize coherent strategies that mediate between human and autonomous control. We first study the framework in a mock-human setting, where it achieves perfect recall alongside high accuracy and precision. A human-subject survey further shows strong alignment, with participants agreeing with arbitration outcomes in 92% of cases. Finally, evaluation on the Bench2Drive benchmark demonstrates a substantial reduction in collision rate and improvement in overall performance compared to pure autonomy. Arbitration at the level of semantic, language-based representations emerges as a design principle for shared autonomy, enabling systems to exercise common-sense reasoning and maintain continuity with human intent.

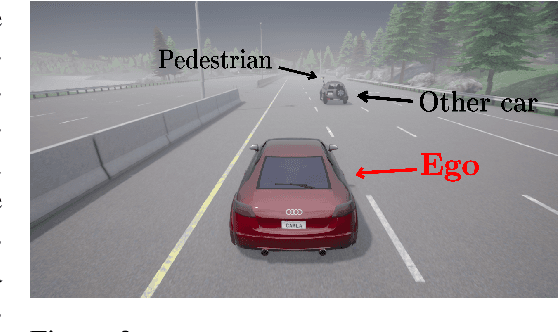

ReGen: Generative Robot Simulation via Inverse Design

Nov 06, 2025

Abstract:Simulation plays a key role in scaling robot learning and validating policies, but constructing simulations remains a labor-intensive process. This paper introduces ReGen, a generative simulation framework that automates simulation design via inverse design. Given a robot's behavior -- such as a motion trajectory or an objective function -- and its textual description, ReGen infers plausible scenarios and environments that could have caused the behavior. ReGen leverages large language models to synthesize scenarios by expanding a directed graph that encodes cause-and-effect relationships, relevant entities, and their properties. This structured graph is then translated into a symbolic program, which configures and executes a robot simulation environment. Our framework supports (i) augmenting simulations based on ego-agent behaviors, (ii) controllable, counterfactual scenario generation, (iii) reasoning about agent cognition and mental states, and (iv) reasoning with distinct sensing modalities, such as braking due to faulty GPS signals. We demonstrate ReGen in autonomous driving and robot manipulation tasks, generating more diverse, complex simulated environments compared to existing simulations with high success rates, and enabling controllable generation for corner cases. This approach enhances the validation of robot policies and supports data or simulation augmentation, advancing scalable robot learning for improved generalization and robustness. We provide code and example videos at: https://regen-sim.github.io/

Generating Out-Of-Distribution Scenarios Using Language Models

Nov 25, 2024

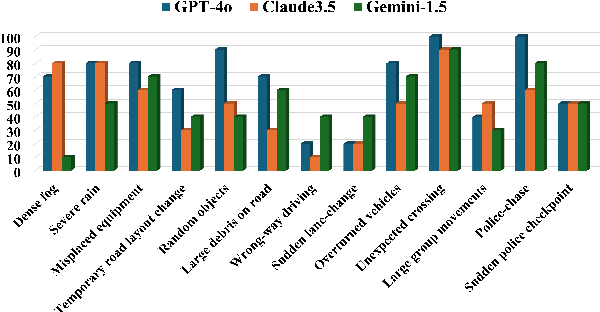

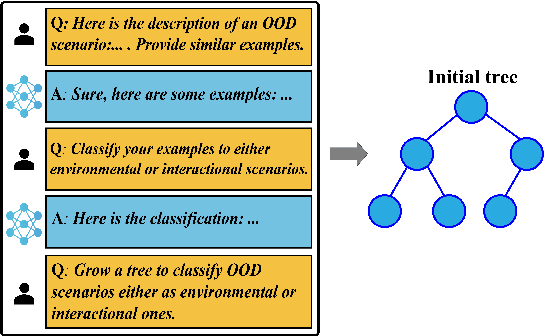

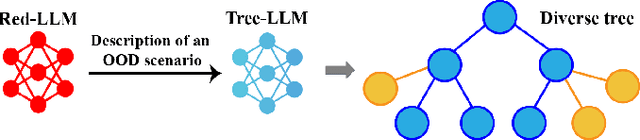

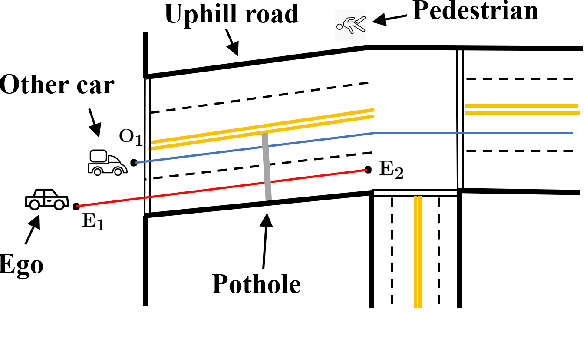

Abstract:The deployment of autonomous vehicles controlled by machine learning techniques requires extensive testing in diverse real-world environments, robust handling of edge cases and out-of-distribution scenarios, and comprehensive safety validation to ensure that these systems can navigate safely and effectively under unpredictable conditions. Addressing Out-Of-Distribution (OOD) driving scenarios is essential for enhancing safety, as OOD scenarios help validate the reliability of the models within the vehicle's autonomy stack. However, generating OOD scenarios is challenging due to their long-tailed distribution and rarity in urban driving datasets. Recently, Large Language Models (LLMs) have shown promise in autonomous driving, particularly for their zero-shot generalization and common-sense reasoning capabilities. In this paper, we leverage these LLM strengths to introduce a framework for generating diverse OOD driving scenarios. Our approach uses LLMs to construct a branching tree, where each branch represents a unique OOD scenario. These scenarios are then simulated in the CARLA simulator using an automated framework that aligns scene augmentation with the corresponding textual descriptions. We evaluate our framework through extensive simulations, and assess its performance via a diversity metric that measures the richness of the scenarios. Additionally, we introduce a new "OOD-ness" metric, which quantifies how much the generated scenarios deviate from typical urban driving conditions. Furthermore, we explore the capacity of modern Vision-Language Models (VLMs) to interpret and safely navigate through the simulated OOD scenarios. Our findings offer valuable insights into the reliability of language models in addressing OOD scenarios within the context of urban driving.

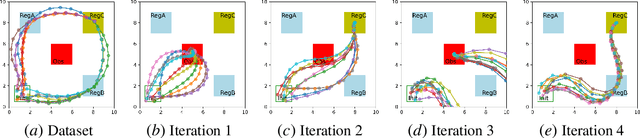

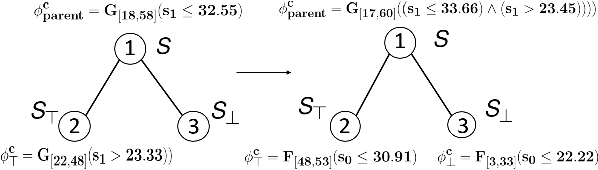

Interpretable Generative Adversarial Imitation Learning

Feb 15, 2024

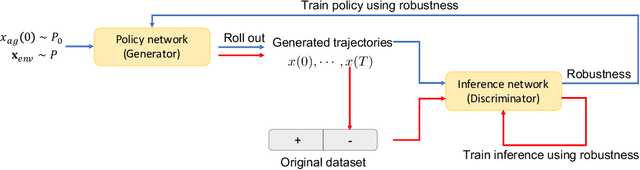

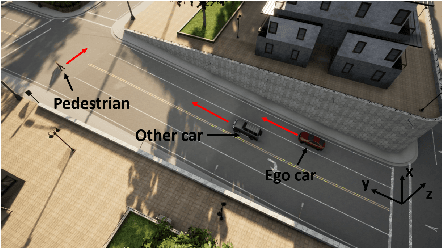

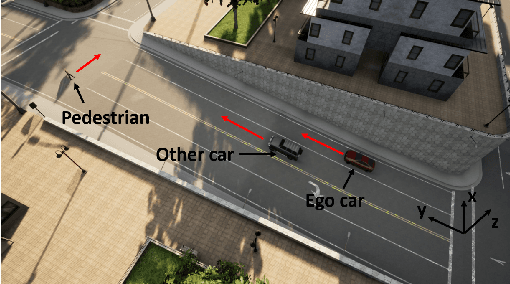

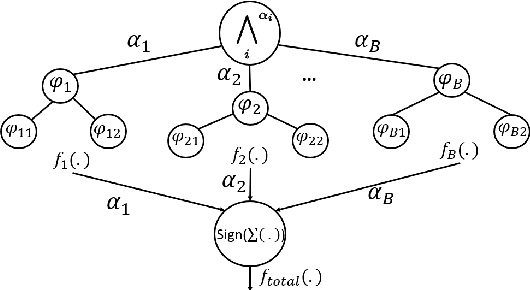

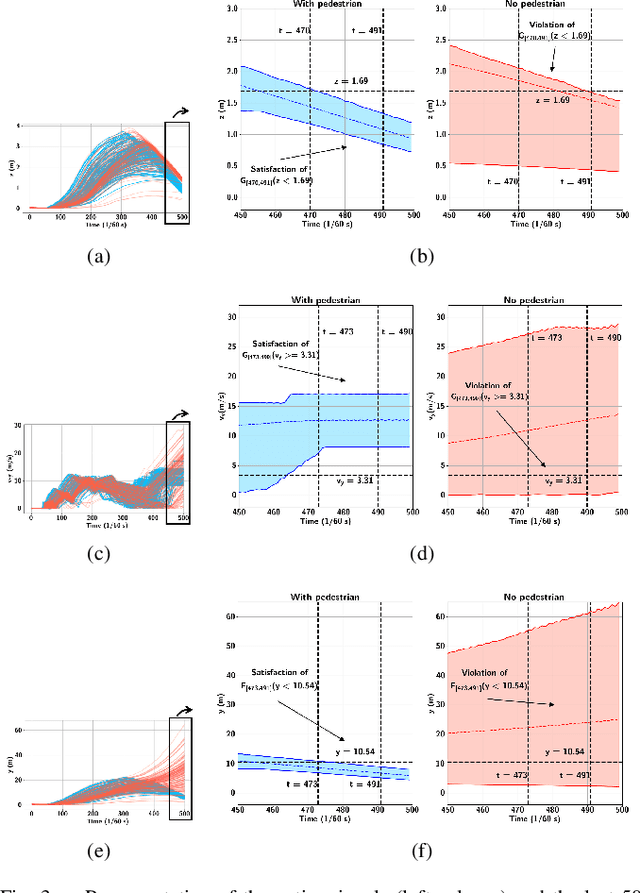

Abstract:Imitation learning methods have demonstrated considerable success in teaching autonomous systems complex tasks through expert demonstrations. However, a limitation of these methods is their lack of interpretability, particularly in understanding the specific task the learning agent aims to accomplish. In this paper, we propose a novel imitation learning method that combines Signal Temporal Logic (STL) inference and control synthesis, enabling the explicit representation of the task as an STL formula. This approach not only provides a clear understanding of the task but also allows for the incorporation of human knowledge and adaptation to new scenarios through manual adjustments of the STL formulae. Additionally, we employ a Generative Adversarial Network (GAN)-inspired training approach for both the inference and the control policy, effectively narrowing the gap between the expert and learned policies. The effectiveness of our algorithm is demonstrated through two case studies, showcasing its practical applicability and adaptability.

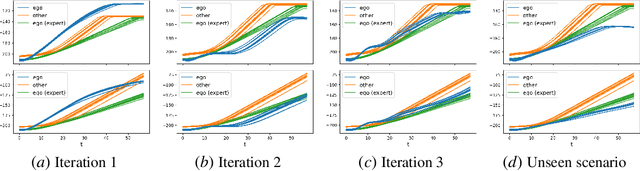

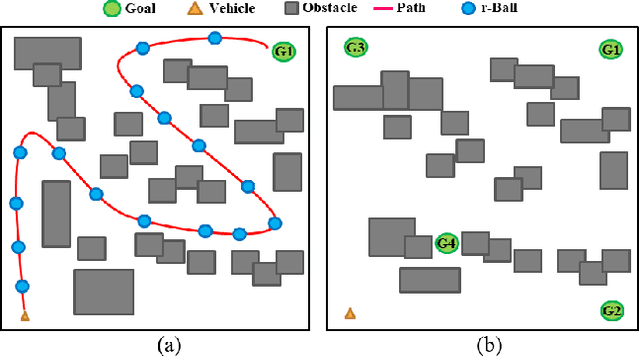

Overcoming Exploration: Deep Reinforcement Learning in Complex Environments from Temporal Logic Specifications

Feb 01, 2022

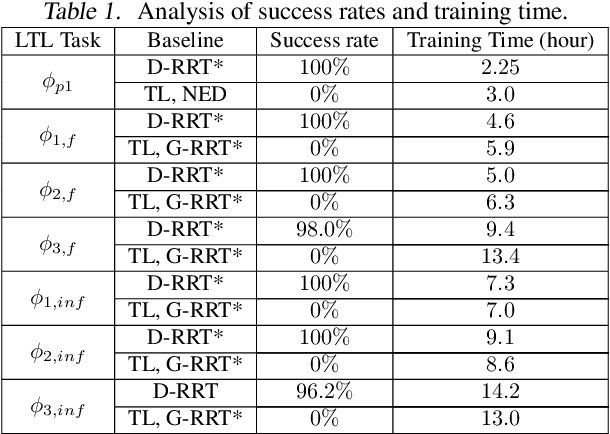

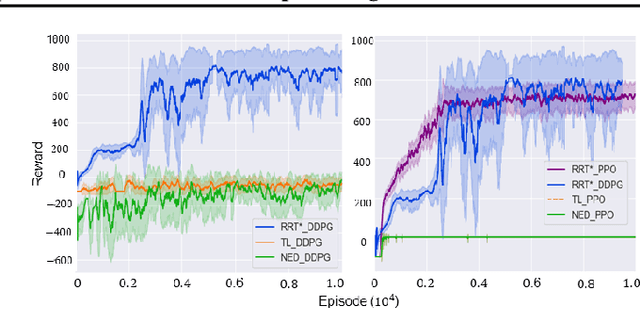

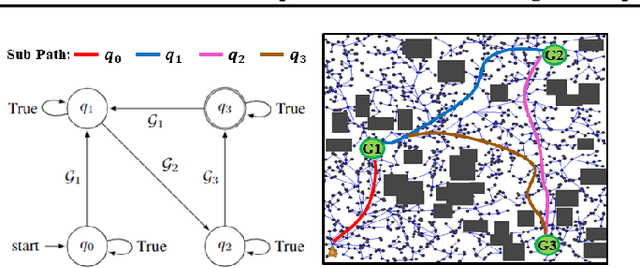

Abstract:We present a Deep Reinforcement Learning (DRL) algorithm for a task-guided robot with unknown continuous-time dynamics deployed in a large-scale complex environment. Linear Temporal Logic (LTL) is applied to express a rich robotic specification. To overcome the environmental challenge, we propose a novel path planning-guided reward scheme that is dense over the state space, and crucially, robust to infeasibility of computed geometric paths due to the unknown robot dynamics. To facilitate LTL satisfaction, our approach decomposes the LTL mission into sub-tasks that are solved using distributed DRL, where the sub-tasks are trained in parallel, using Deep Policy Gradient algorithms. Our framework is shown to significantly improve performance (effectiveness, efficiency) and exploration of robots tasked with complex missions in large-scale complex environments.

Time-Incremental Learning from Data Using Temporal Logics

Dec 28, 2021

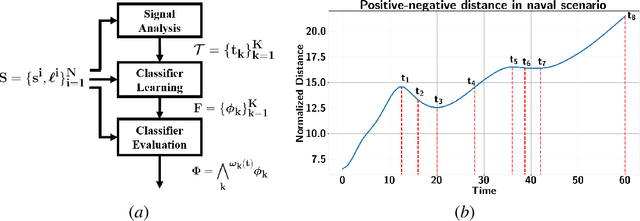

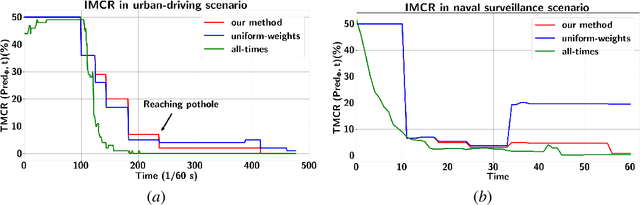

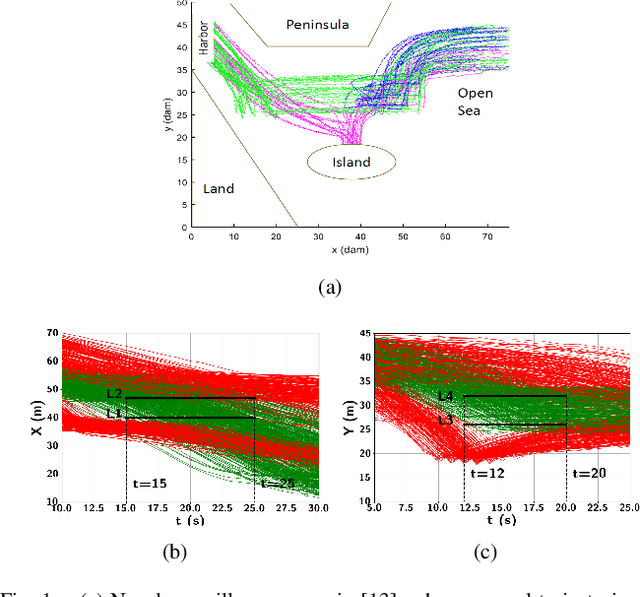

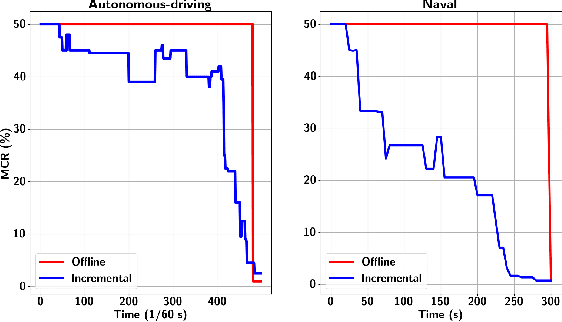

Abstract:Real-time and human-interpretable decision-making in cyber-physical systems is a significant but challenging task, which usually requires predictions of possible future events from limited data. In this paper, we introduce a time-incremental learning framework: given a dataset of labeled signal traces with a common time horizon, we propose a method to predict the label of a signal that is received incrementally over time, referred to as a prefix signal. Prefix signals are signals that are observed as they are generated, and their time length is shorter than the common horizon of the signals. We present a novel decision-tree based approach to generate a finite number of Signal Temporal Logic (STL) specifications from the given dataset, and construct a predictor based on them. Each STL specification, as a binary classifier of time-series data, captures the temporal properties of the dataset over time. The predictor is constructed by assigning time-variant weights to the STL formulas. The weights are learned by using neural networks, with the goal of minimizing the misclassification rate for the prefix signals defined over the given dataset. The learned predictor is used to predict the label of a prefix signal, by computing the weighted sum of the robustness of the prefix signal with respect to each STL formula. The effectiveness and classification performance of our algorithm are evaluated on urban-driving and naval-surveillance case studies.
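The prediction rule in this abstract, a weighted sum of robustness values with time-variant weights, can be sketched as follows. This is an illustration under several assumptions, not the paper's method: the two formula templates, the convention of returning zero robustness when the prefix has not yet reached a formula's time window, and the hand-written `weights_at` lookup (standing in for the neural network that learns the weights) are all hypothetical.

```python
def always_gt(prefix, start, end, threshold):
    """Robustness of G_[start,end] (x > threshold) on the observed samples."""
    window = prefix[start:end + 1]
    if not window:  # Assumption: no evidence yet, so contribute nothing.
        return 0.0
    return min(x - threshold for x in window)


def eventually_gt(prefix, start, end, threshold):
    """Robustness of F_[start,end] (x > threshold) on the observed samples."""
    window = prefix[start:end + 1]
    if not window:  # Assumption: no evidence yet, so contribute nothing.
        return 0.0
    return max(x - threshold for x in window)


def predict_label(prefix, formulas, weights_at):
    """Return +1 or -1 from the weighted sum of per-formula robustness."""
    weights = weights_at(len(prefix))  # Weights depend on how much is observed.
    score = sum(w * rho(prefix) for w, rho in zip(weights, formulas))
    return 1 if score >= 0 else -1


# Two hypothetical STL formulas over a scalar signal sampled at integer times.
formulas = [
    lambda s: always_gt(s, 0, 3, 1.0),
    lambda s: eventually_gt(s, 2, 5, 4.0),
]


def weights_at(length):
    # Stand-in for learned time-variant weights: trust the first formula
    # alone until the second formula's window starts being observed.
    return [1.0, 0.0] if length < 3 else [0.5, 0.5]


print(predict_label([2.0, 3.0], formulas, weights_at))
print(predict_label([2.0, 0.5, 1.0], formulas, weights_at))
```

The sign of a robustness value indicates satisfaction or violation and its magnitude indicates the margin, so the weighted sum lets formulas with larger margins and larger weights dominate the prediction for a given prefix length.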

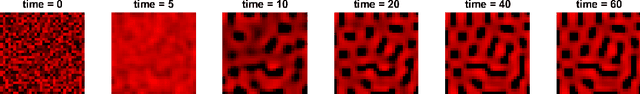

Learning Spatio-Temporal Specifications for Dynamical Systems

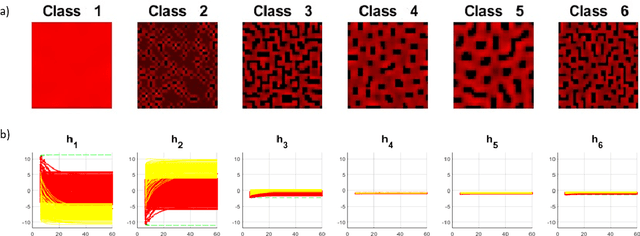

Dec 20, 2021

Abstract:Learning dynamical systems properties from data provides important insights that help us understand such systems and mitigate undesired outcomes. In this work, we propose a framework for learning spatio-temporal (ST) properties as formal logic specifications from data. We introduce SVM-STL, an extension of Signal Temporal Logic (STL), capable of specifying spatial and temporal properties of a wide range of dynamical systems that exhibit time-varying spatial patterns. Our framework utilizes machine learning techniques to learn SVM-STL specifications from system executions given by sequences of spatial patterns. We present methods to deal with both labeled and unlabeled data. In addition, given system requirements in the form of SVM-STL specifications, we provide an approach for parameter synthesis to find parameters that maximize the satisfaction of such specifications. Our learning framework and parameter synthesis approach are showcased in an example of a reaction-diffusion system.

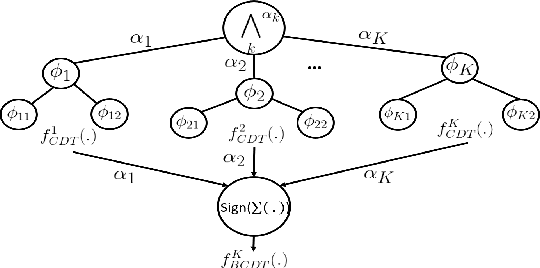

Classification of Time-Series Data Using Boosted Decision Trees

Oct 01, 2021

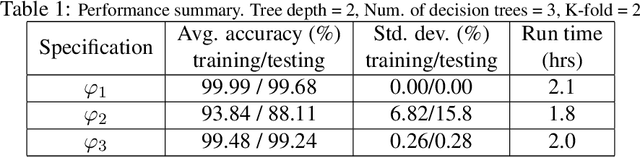

Abstract:Time-series data classification is central to the analysis and control of autonomous systems, such as robots and self-driving cars. Temporal logic-based learning algorithms have been proposed recently as classifiers of such data. However, current frameworks are either inaccurate for real-world applications, such as autonomous driving, or they generate long and complicated formulae that lack interpretability. To address these limitations, we introduce a novel learning method, called Boosted Concise Decision Trees (BCDTs), to generate binary classifiers that are represented as Signal Temporal Logic (STL) formulae. Our algorithm leverages an ensemble of Concise Decision Trees (CDTs) to improve the classification performance, where each CDT is a decision tree that is empowered by a set of techniques to generate simpler formulae and improve interpretability. The effectiveness and classification performance of our algorithm are evaluated on naval surveillance and urban-driving case studies.
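To give a feel for boosting over temporal-logic classifiers, here is a minimal AdaBoost-style sketch. It is a simplification and not the BCDT algorithm: each weak learner is a single hypothetical primitive of the form "always (x > c)" over the whole signal rather than a Concise Decision Tree, and the names `stump_predict`, `boost_fit`, and `boost_predict` are invented for this example.

```python
import math


def stump_predict(signal, threshold):
    """Label +1 if the signal satisfies G (x > threshold), else -1."""
    return 1 if min(signal) > threshold else -1


def boost_fit(signals, labels, thresholds, rounds):
    """Fit a weighted ensemble of threshold primitives with AdaBoost."""
    n = len(signals)
    sample_w = [1.0 / n] * n
    ensemble = []  # List of (alpha, threshold) pairs.
    for _ in range(rounds):
        # Pick the candidate threshold with the lowest weighted error.
        best_err, best_c = None, None
        for c in thresholds:
            err = sum(w for w, s, y in zip(sample_w, signals, labels)
                      if stump_predict(s, c) != y)
            if best_err is None or err < best_err:
                best_err, best_c = err, c
        # Clamp the error so the logarithm below stays finite.
        err = min(max(best_err, 1e-10), 1.0 - 1e-10)
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, best_c))
        # Increase the weight of misclassified signals, then renormalize.
        sample_w = [w * math.exp(-alpha * y * stump_predict(s, best_c))
                    for w, s, y in zip(sample_w, signals, labels)]
        total = sum(sample_w)
        sample_w = [w / total for w in sample_w]
    return ensemble


def boost_predict(ensemble, signal):
    """Classify a signal by the sign of the weighted vote."""
    score = sum(alpha * stump_predict(signal, c) for alpha, c in ensemble)
    return 1 if score >= 0 else -1


# Toy data: positive signals stay well above 1.0, negative ones dip below it.
signals = [[3.0, 4.0, 5.0], [2.5, 3.0, 3.5], [0.0, 1.0, 2.0], [1.0, 0.5, 3.0]]
labels = [1, 1, -1, -1]
model = boost_fit(signals, labels, thresholds=[0.0, 1.0, 2.0], rounds=2)
print([boost_predict(model, s) for s in signals])
```

Because every weak learner here is itself a temporal-logic primitive, the ensemble remains readable as a weighted collection of formulas, which is the interpretability property the abstract emphasizes.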

Inferring Temporal Logic Properties from Data using Boosted Decision Trees

May 24, 2021

Abstract:Many autonomous systems, such as robots and self-driving cars, involve real-time decision making in complex environments, and require prediction of future outcomes from limited data. Moreover, their decisions are increasingly required to be interpretable to humans for safe and trustworthy co-existence. This paper is a first step towards interpretable learning-based robot control. We introduce a novel learning problem, called incremental formula and predictor learning, to generate binary classifiers with temporal logic structure from time-series data. The classifiers are represented as pairs of Signal Temporal Logic (STL) formulae and predictors for their satisfaction. The incremental property provides prediction of labels for prefix signals that are revealed over time. We propose a boosted decision-tree algorithm that leverages weak, but computationally inexpensive, learners to increase prediction and runtime performance. The effectiveness and classification accuracy of our algorithms are evaluated on autonomous-driving and naval surveillance case studies.
