En Li

dots.llm1 Technical Report

Jun 06, 2025

Abstract:Mixture of Experts (MoE) models have emerged as a promising paradigm for scaling language models efficiently by activating only a subset of parameters for each input token. In this report, we present dots.llm1, a large-scale MoE model that activates 14B parameters out of a total of 142B parameters, delivering performance on par with state-of-the-art models while reducing training and inference costs. Leveraging our meticulously crafted and efficient data processing pipeline, dots.llm1 achieves performance comparable to Qwen2.5-72B after pretraining on 11.2T high-quality tokens and post-training to fully unlock its capabilities. Notably, no synthetic data is used during pretraining. To foster further research, we open-source intermediate training checkpoints at every one trillion tokens, providing valuable insights into the learning dynamics of large language models.
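To make "activating only a subset of parameters for each input token" concrete, here is a minimal sketch of generic top-k expert routing. It is not dots.llm1's actual router: the function names `top_k_route` and `moe_forward`, the scalar toy experts, and the choice of softmax renormalization over the selected experts are all assumptions made for illustration.

```python
import math


def top_k_route(logits, k):
    """Pick the k highest-scoring experts and renormalize their softmax weights.

    Generic illustration only; the report's abstract does not describe the router.
    """
    # Indices of the k largest router logits (ties keep the lower index first).
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax restricted to the selected experts, shifted for numerical stability.
    peak = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and mix their outputs by routing weight."""
    routes = top_k_route(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in routes)


# Hypothetical toy experts operating on a scalar "token".
toy_experts = [
    lambda x: x,
    lambda x: 2 * x,
    lambda x: -x,
    lambda x: x + 10,
]
toy_output = moe_forward(4.0, toy_experts, [0.1, 2.0, -1.0, 2.0], k=2)
```

Only two of the four toy experts are evaluated for the token, which is the source of the compute savings. For scale, the abstract's figures (14B active out of 142B total) correspond to roughly 10% of parameters being used per token.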

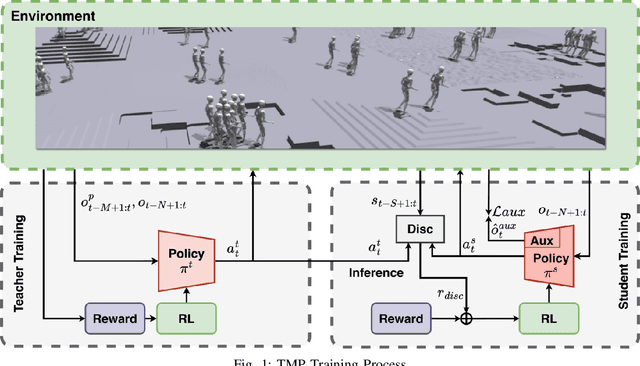

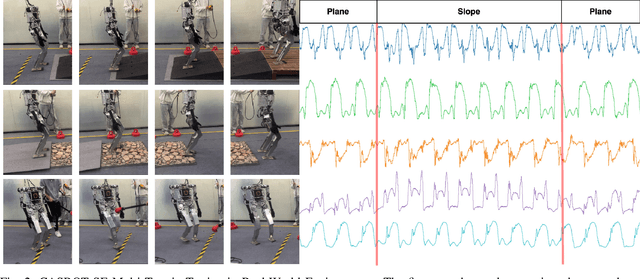

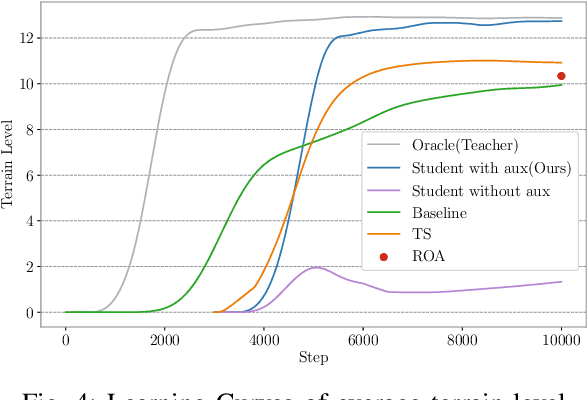

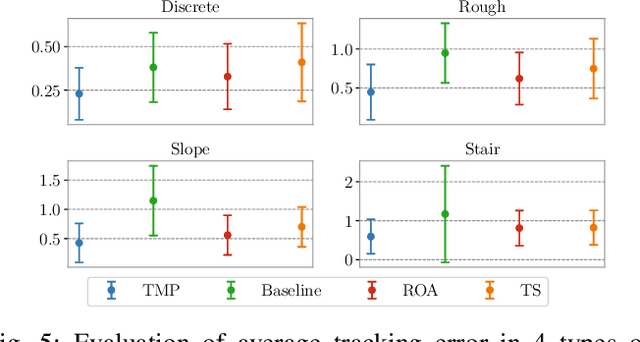

Teacher Motion Priors: Enhancing Robot Locomotion over Challenging Terrain

Apr 14, 2025

Abstract:Achieving robust locomotion on complex terrains remains a challenge due to high-dimensional control and environmental uncertainties. This paper introduces a teacher prior framework based on the teacher-student paradigm, integrating imitation and auxiliary task learning to improve learning efficiency and generalization. Unlike traditional paradigms that rely strongly on encoder-based state embeddings, our framework decouples the network design, simplifying the policy network and deployment. A high-performance teacher policy is first trained using privileged information to acquire generalizable motion skills. The teacher's motion distribution is then transferred to the student policy, which relies only on noisy proprioceptive data, via a generative adversarial mechanism to mitigate performance degradation caused by distributional shifts. Additionally, auxiliary task learning enhances the student policy's feature representation, speeding up convergence and improving adaptability to varying terrains. The framework is validated on a humanoid robot, showing a marked improvement in locomotion stability on dynamic terrains and significant reductions in development costs. This work provides a practical solution for deploying robust locomotion strategies in humanoid robots.

Robust and accurate depth estimation by fusing LiDAR and Stereo

Jul 13, 2022

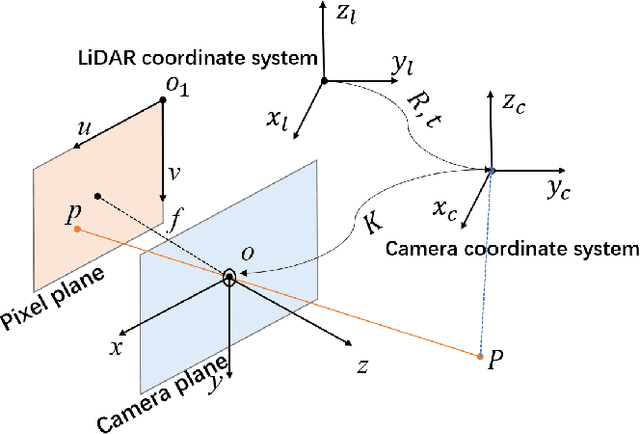

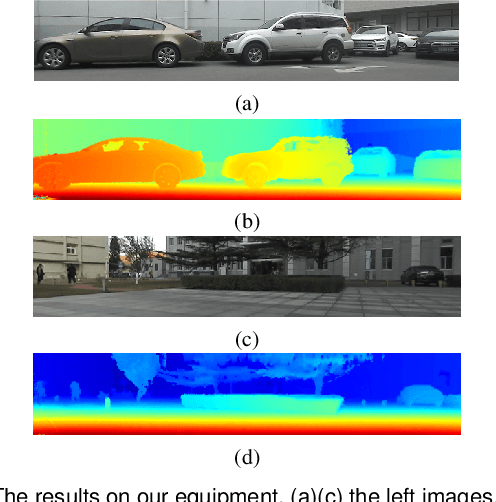

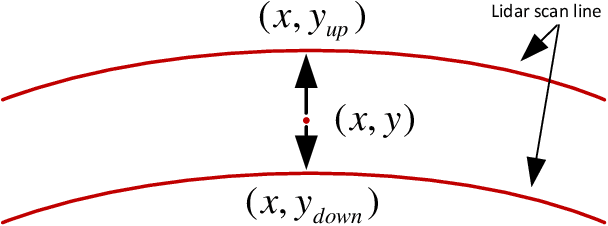

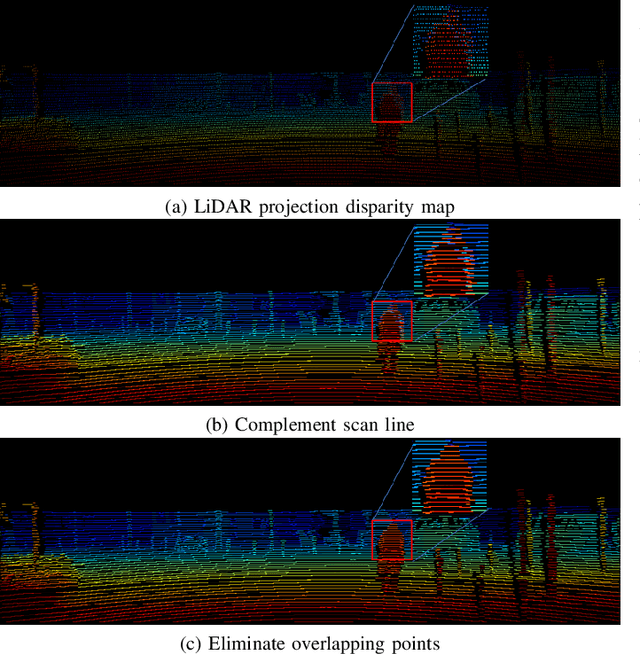

Abstract:Depth estimation is a key technology in fields such as autonomous driving and robot navigation. However, traditional single-sensor methods are inevitably limited by the performance of the sensor. Therefore, a precise and robust method for fusing LiDAR and stereo cameras is proposed. This method combines the advantages of both sensors, retaining the high precision of the LiDAR and the high resolution of the images. Compared with the traditional stereo matching method, the texture of the object and the lighting conditions have less influence on the algorithm. Firstly, the depth of the LiDAR data is converted to the disparity of the stereo camera. Because the LiDAR data is relatively sparse along the y-axis, the converted disparity map is up-sampled by interpolation. Secondly, in order to make full use of the precise disparity map, the disparity map and stereo matching are fused to propagate the accurate disparity. Finally, the disparity map is converted to the depth map. Moreover, the converted disparity map can also increase the speed of the algorithm. We evaluate the proposed pipeline on the KITTI benchmark. The experiments demonstrate that our algorithm has higher accuracy than several classic methods.
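The first and last steps of the pipeline rest on the standard rectified-stereo relation between depth and disparity, d = f·B / Z. The sketch below illustrates that conversion and a simple fill of missing values along one image column. The abstract does not say which interpolation is used, so linear interpolation is an assumption here, as are the function names and the focal length and baseline values.

```python
def depth_to_disparity(depth_m, focal_px, baseline_m):
    """Disparity in pixels for a point at depth_m, for a rectified stereo pair."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Inverse of depth_to_disparity: metric depth from disparity in pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def interpolate_column(values):
    """Linearly fill None gaps between known samples along one image column.

    Assumed interpolation scheme; entries before the first or after the last
    known sample are left as None.
    """
    known = [(i, v) for i, v in enumerate(values) if v is not None]
    filled = list(values)
    for (i0, v0), (i1, v1) in zip(known, known[1:]):
        for i in range(i0 + 1, i1):
            t = (i - i0) / (i1 - i0)
            filled[i] = v0 + t * (v1 - v0)
    return filled


# Illustrative camera parameters (not taken from the paper).
FOCAL_PX = 700.0
BASELINE_M = 0.5

sparse_depths = [10.0, None, 5.0]  # LiDAR depths along one column, in meters
sparse_disparity = [
    None if z is None else depth_to_disparity(z, FOCAL_PX, BASELINE_M)
    for z in sparse_depths
]
dense_disparity = interpolate_column(sparse_disparity)
```

Interpolating in disparity space rather than depth space keeps the densified map directly comparable with stereo matching costs, which is where the fusion step operates.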

An approach for auxiliary diagnosing and screening coronary disease based on machine learning

Jul 24, 2020

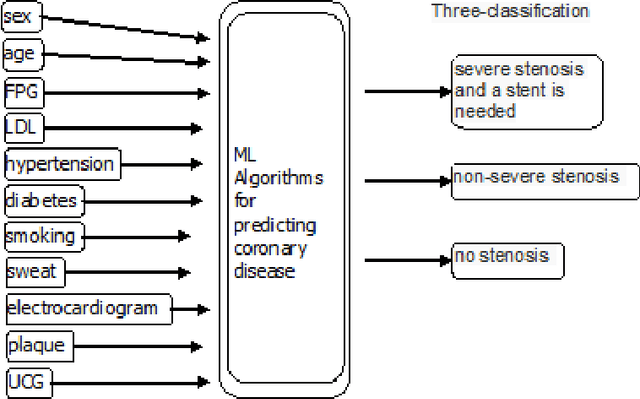

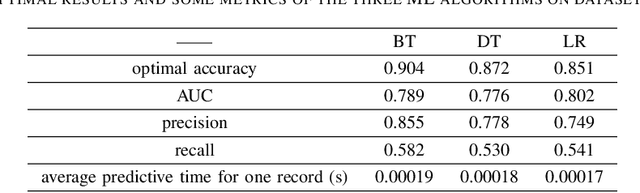

Abstract:How can we accurately classify and predict whether an individual has Coronary Artery Disease (CAD), and the degree of coronary stenosis, without using invasive examination? This problem has not been solved satisfactorily. To this end, three kinds of machine learning (ML) algorithms, i.e., Boost Tree (BT), Decision Tree (DT), and Logistic Regression (LR), are employed in this paper. First, 11 features, including basic information about an individual, symptoms, and results of routine physical examination, are selected, and one label is specified, indicating whether an individual suffers from CAD or the severity of coronary artery stenosis. On this basis, a sample set is constructed. Second, each of the three ML algorithms learns from the sample set to obtain its optimal predictive results. The experimental results show that BT predicts whether an individual has CAD with an accuracy of 94%, and predicts the degree of an individual's coronary artery stenosis with an accuracy of 90%.

Edge AI: On-Demand Accelerating Deep Neural Network Inference via Edge Computing

Oct 04, 2019

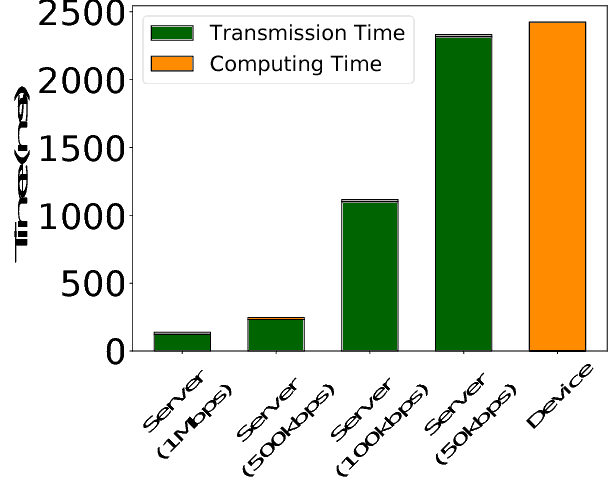

Abstract:As a key technology for enabling Artificial Intelligence (AI) applications in the 5G era, Deep Neural Networks (DNNs) have quickly attracted widespread attention. However, it is challenging to run computation-intensive DNN-based tasks on mobile devices due to their limited computation resources. What's worse, traditional cloud-assisted DNN inference is heavily hindered by significant wide-area network latency, leading to poor real-time performance as well as low quality of user experience. To address these challenges, in this paper, we propose Edgent, a framework that leverages edge computing for DNN collaborative inference through device-edge synergy. Edgent exploits two design knobs: (1) DNN partitioning that adaptively partitions computation between device and edge for the purpose of coordinating the powerful cloud resource and the proximal edge resource for real-time DNN inference; (2) DNN right-sizing that further reduces computing latency via early-exit inference at an appropriate intermediate DNN layer. In addition, considering the potential network fluctuation in real-world deployment, Edgent is designed to specialize for both static and dynamic network environments. Specifically, in a static environment where the bandwidth changes slowly, Edgent derives the best configurations with the assistance of regression-based prediction models, while in a dynamic environment where the bandwidth varies dramatically, Edgent generates the best execution plan through an online change point detection algorithm that maps the current bandwidth state to the optimal configuration. We implement an Edgent prototype based on the Raspberry Pi and a desktop PC, and extensive experimental evaluations demonstrate Edgent's effectiveness in enabling on-demand low-latency edge intelligence.
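The two design knobs can be illustrated with a small latency model. This is a sketch under stated assumptions, not Edgent's algorithm: it assumes the device runs the first p layers and the edge runs the rest, that per-layer latencies and output sizes are already known (the abstract mentions regression-based prediction for this), and that the most accurate exit branch meeting a deadline is preferred. The names `best_partition` and `choose_plan` and all numbers are hypothetical.

```python
def best_partition(device_ms, edge_ms, out_kbit, input_kbit, bandwidth_kbit_s):
    """Return (p, latency_ms) minimizing end-to-end latency.

    Assumed model: layers [0, p) run on the device, layers [p, n) on the edge.
    """
    n = len(device_ms)
    best = None
    for p in range(n + 1):
        if p == n:
            transfer_kbit = 0.0            # everything runs on the device
        elif p == 0:
            transfer_kbit = input_kbit     # raw input is shipped to the edge
        else:
            transfer_kbit = out_kbit[p - 1]  # intermediate output of layer p-1
        transfer_ms = transfer_kbit * 1000.0 / bandwidth_kbit_s
        latency = sum(device_ms[:p]) + sum(edge_ms[p:]) + transfer_ms
        if best is None or latency < best[1]:
            best = (p, latency)
    return best


def choose_plan(branches, input_kbit, bandwidth_kbit_s, deadline_ms):
    """Right-sizing: pick the most accurate exit branch that meets the deadline."""
    for branch in sorted(branches, key=lambda b: b["accuracy"], reverse=True):
        p, latency = best_partition(
            branch["device_ms"], branch["edge_ms"], branch["out_kbit"],
            input_kbit, bandwidth_kbit_s,
        )
        if latency <= deadline_ms:
            return branch["name"], p, latency
    return None  # no branch can meet the deadline at this bandwidth


# Hypothetical profile: a full model and a shorter early-exit branch.
BRANCHES = [
    {"name": "full", "accuracy": 0.80,
     "device_ms": [40.0, 60.0, 80.0], "edge_ms": [4.0, 6.0, 8.0],
     "out_kbit": [800.0, 100.0, 10.0]},
    {"name": "exit2", "accuracy": 0.70,
     "device_ms": [40.0, 60.0], "edge_ms": [4.0, 6.0],
     "out_kbit": [800.0, 100.0]},
]
plan = choose_plan(BRANCHES, input_kbit=1200.0, bandwidth_kbit_s=10000.0,
                   deadline_ms=120.0)
```

Tightening the deadline in this toy profile switches the plan from the full model to the early-exit branch, which is the accuracy-for-latency trade that right-sizing makes.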

Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing

May 24, 2019

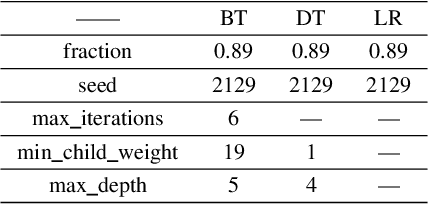

Abstract:With the breakthroughs in deep learning, recent years have witnessed a boom in artificial intelligence (AI) applications and services, spanning from personal assistants to recommendation systems to video/audio surveillance. More recently, with the proliferation of mobile computing and the Internet of Things (IoT), billions of mobile and IoT devices are connected to the Internet, generating zillions of bytes of data at the network edge. Driven by this trend, there is an urgent need to push the AI frontiers to the network edge so as to fully unleash the potential of edge big data. To meet this demand, edge computing, an emerging paradigm that pushes computing tasks and services from the network core to the network edge, has been widely recognized as a promising solution. The resulting new interdiscipline, edge AI or edge intelligence, is beginning to receive a tremendous amount of interest. However, research on edge intelligence is still in its infancy, and a dedicated venue for exchanging recent advances in edge intelligence is highly desired by both the computer systems and artificial intelligence communities. To this end, we conduct a comprehensive survey of recent research efforts on edge intelligence. Specifically, we first review the background and motivation for artificial intelligence running at the network edge. We then provide an overview of the overarching architectures, frameworks, and emerging key technologies for deep learning model training and inference at the network edge. Finally, we discuss future research opportunities on edge intelligence. We believe that this survey will attract growing attention, stimulate fruitful discussions, and inspire further research ideas on edge intelligence.

Edge Intelligence: On-Demand Deep Learning Model Co-Inference with Device-Edge Synergy

Sep 14, 2018

Abstract:As the backbone technology of machine learning, deep neural networks (DNNs) have quickly ascended to the spotlight. Running DNNs on resource-constrained mobile devices is, however, by no means trivial, since it incurs high performance and energy overhead. Offloading DNNs to the cloud for execution, meanwhile, suffers from unpredictable performance due to the uncontrolled, long wide-area network latency. To address these challenges, in this paper, we propose Edgent, a collaborative and on-demand DNN co-inference framework with device-edge synergy. Edgent pursues two design knobs: (1) DNN partitioning that adaptively partitions DNN computation between device and edge, in order to leverage hybrid computation resources in proximity for real-time DNN inference; (2) DNN right-sizing that accelerates DNN inference through early exit at a proper intermediate DNN layer to further reduce the computation latency. The prototype implementation and extensive evaluations based on Raspberry Pi demonstrate Edgent's effectiveness in enabling on-demand low-latency edge intelligence.
