Duanshun Li

Large-Scale Data-Driven Airline Market Influence Maximization

May 31, 2021

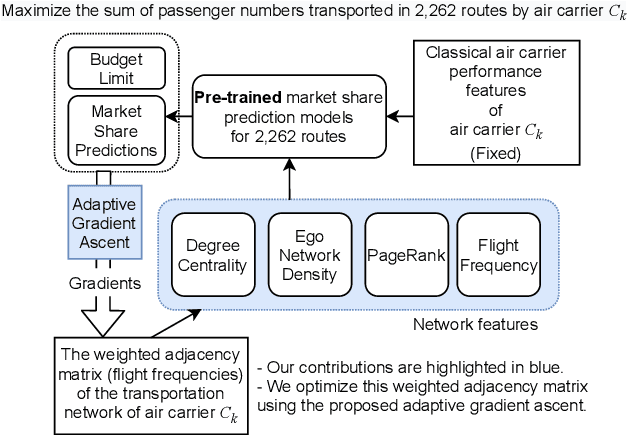

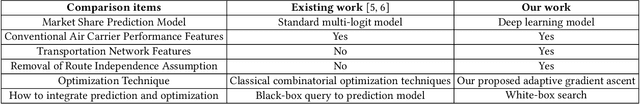

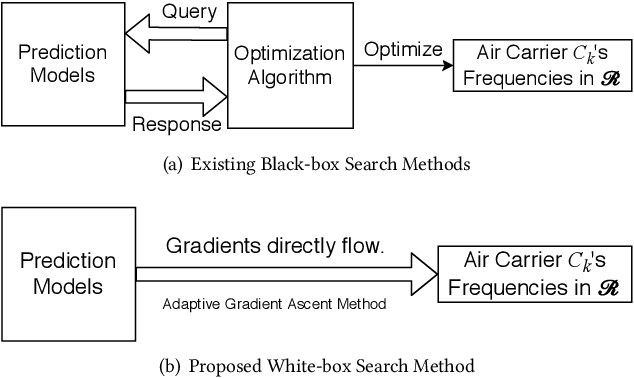

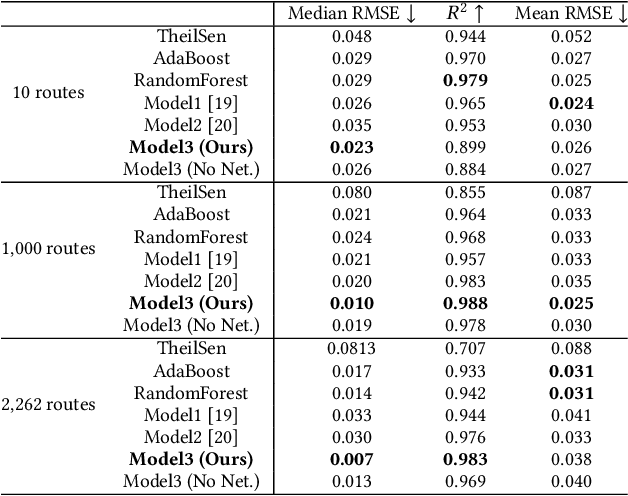

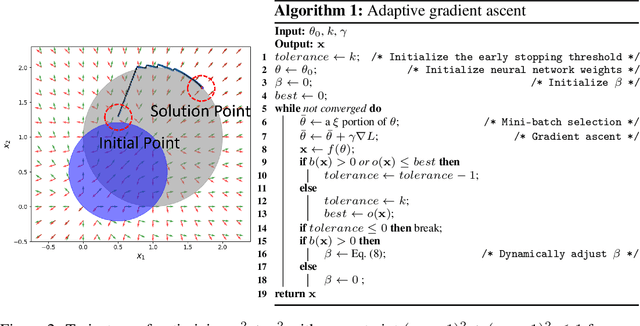

Abstract: We present a prediction-driven optimization framework that maximizes market influence in the US domestic air passenger transportation market by adjusting flight frequencies. At the lower level, our neural networks consider a wide variety of features, such as classical air carrier performance features and transportation network features, to predict market influence. On top of the prediction models, we define a budget-constrained flight frequency optimization problem to maximize market influence over 2,262 routes. This is a non-linear optimization problem that cannot be solved exactly by conventional methods. To this end, we present a novel adaptive gradient ascent (AGA) method. Our prediction models achieve two to eleven times better accuracy than the baselines in terms of the median root-mean-square error (RMSE). In addition, in one of our largest-scale experiments, our AGA optimization method runs 690 times faster than a greedy algorithm while producing a better optimization result.
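To make the budget-constrained setting concrete, the following is a minimal sketch of plain gradient ascent with a budget repair step. It is not the AGA method from the paper: the concave `influence` function is a toy stand-in for the learned prediction model, and `weights`, `costs`, `enforce_budget`, and the uniform rescaling rule are all assumptions made for illustration.

```python
import math

def influence(freqs, weights):
    # Toy stand-in for the learned prediction model (an assumption):
    # influence is concave and increasing in each route's frequency.
    return sum(w * math.log1p(f) for w, f in zip(weights, freqs))

def gradient(freqs, weights):
    # Analytic gradient of the toy influence function above.
    return [w / (1.0 + f) for w, f in zip(weights, freqs)]

def enforce_budget(freqs, costs, budget):
    # Heuristic repair (not a Euclidean projection): clip negative
    # frequencies, then scale everything down if the budget is exceeded.
    freqs = [max(0.0, f) for f in freqs]
    spent = sum(c * f for c, f in zip(costs, freqs))
    if spent > budget:
        scale = budget / spent
        freqs = [f * scale for f in freqs]
    return freqs

def gradient_ascent(weights, costs, budget, steps=500, lr=0.5):
    # Start from zero flights on every route and climb the objective,
    # repairing the budget constraint after every step.
    freqs = [0.0] * len(weights)
    for _ in range(steps):
        grad = gradient(freqs, weights)
        freqs = [f + lr * g for f, g in zip(freqs, grad)]
        freqs = enforce_budget(freqs, costs, budget)
    return freqs

if __name__ == "__main__":
    weights, costs, budget = [3.0, 1.0, 2.0], [1.0, 1.0, 1.0], 10.0
    freqs = gradient_ascent(weights, costs, budget)
    print(freqs, influence(freqs, weights))
```

In this toy setup, routes with larger weights end up with more of the budget. A real instance would replace `influence` and `gradient` with a differentiable prediction model and its automatic gradient.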

TearingNet: Point Cloud Autoencoder to Learn Topology-Friendly Representations

Jun 17, 2020

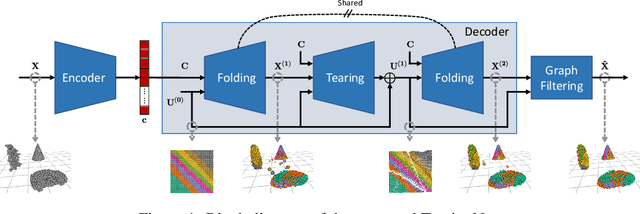

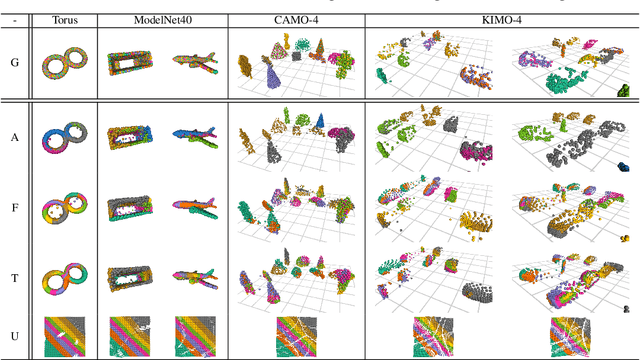

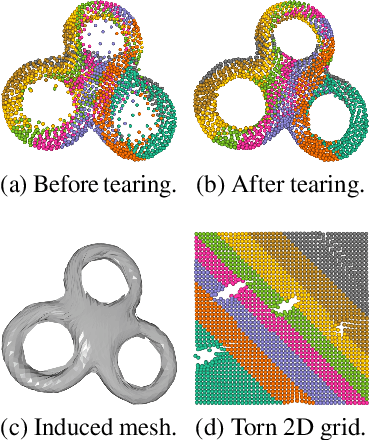

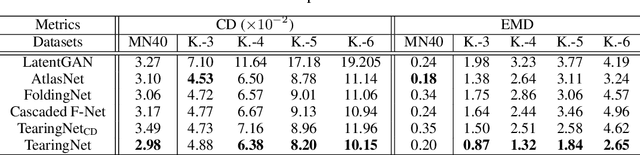

Abstract: Topology matters. Despite the recent success of point cloud processing with geometric deep learning, it remains arduous to capture the complex topologies of point cloud data with a learning model. Given a point cloud dataset containing objects with various genera or scenes with multiple objects, we propose an autoencoder, TearingNet, which tackles the challenging task of representing the point clouds using a fixed-length descriptor. Unlike existing works that deform primitives of genus zero (e.g., a 2D square patch) into an object-level point cloud, we propose a function that tears the primitive during deformation, letting it emulate the topology of a target point cloud. From the torn primitive, we construct a locally-connected graph to further enforce the learned topology via filtering. Moreover, we analyze a widespread problem, which we call point-collapse, that arises when processing point clouds with diverse topologies. Correspondingly, we propose a subtractive sculpture strategy to train our TearingNet model. Experiments show that our proposal reconstructs more faithful point clouds and generates more topology-friendly representations than the benchmarks.

Deep Learning-Based Decoding of Constrained Sequence Codes

Jun 13, 2019

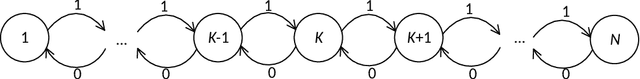

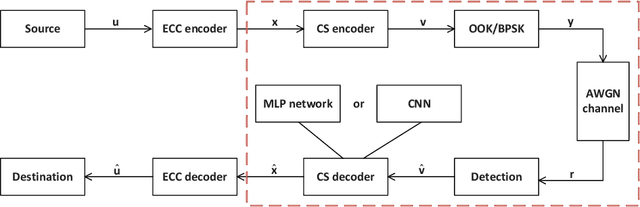

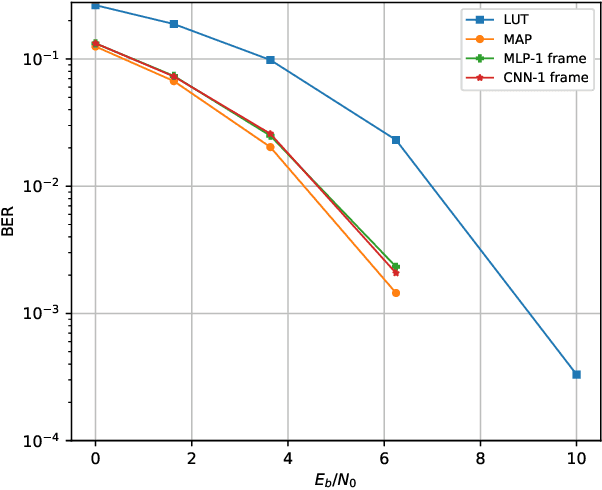

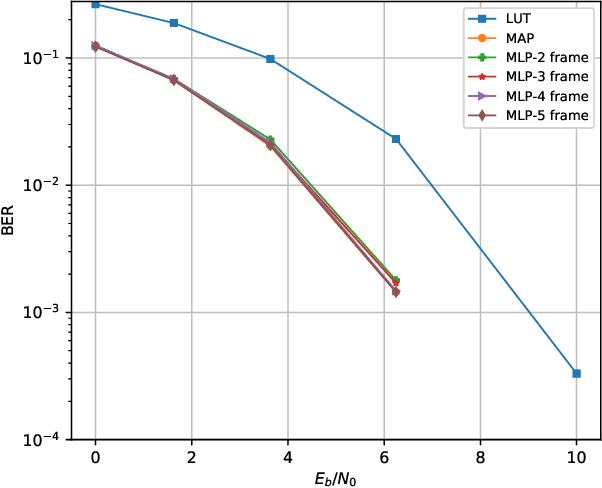

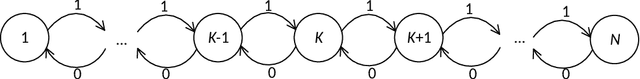

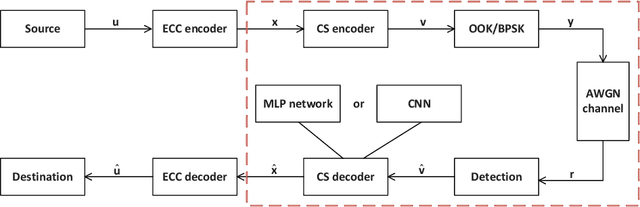

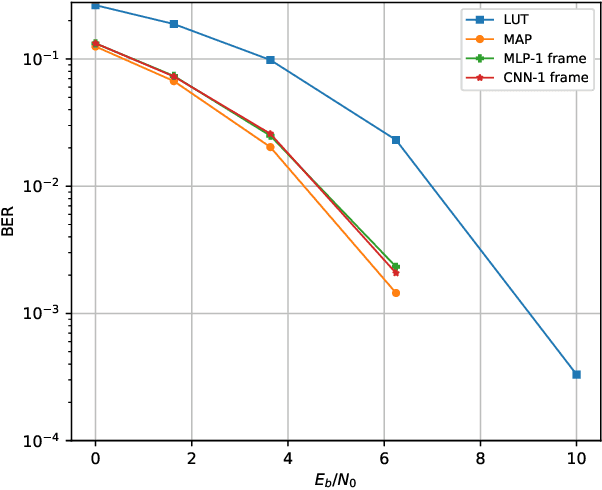

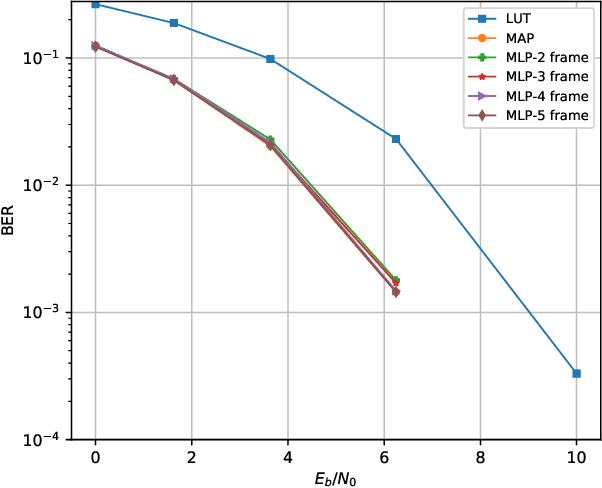

Abstract: Constrained sequence (CS) codes, including fixed-length CS codes and variable-length CS codes, have been widely used in modern wireless communication and data storage systems. Sequences encoded with constrained sequence codes satisfy constraints imposed by the physical channel to enable efficient and reliable transmission of coded symbols. In this paper, we propose using deep learning approaches to decode fixed-length and variable-length CS codes. Traditional encoding and decoding of fixed-length CS codes rely on look-up tables (LUTs), which are prone to errors that occur during transmission. We introduce fixed-length constrained sequence decoding based on multilayer perceptron (MLP) networks and convolutional neural networks (CNNs), and demonstrate that we are able to achieve low bit error rates that are close to maximum a posteriori probability (MAP) decoding as well as improve the system throughput. Further, implementation of capacity-achieving fixed-length codes, where the complexity is prohibitively high with LUT decoding, becomes practical with deep learning-based decoding. We then consider CNN-aided decoding of variable-length CS codes. In contrast to conventional decoding, where the received sequence is processed bit-by-bit, we propose using CNNs to perform one-shot batch-processing of variable-length CS codes such that an entire batch is decoded at once, which improves the system throughput. Moreover, since the CNNs can exploit global information with batch-processing instead of only making use of local information as in conventional bit-by-bit processing, the error rates can be reduced. We present simulation results that show excellent performance with both fixed-length and variable-length CS codes used at the frontiers of wireless communication systems.
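As a small illustration of why LUT decoding is fragile, the sketch below uses a toy fixed-length code that maps each source bit to a two-bit codeword. The table is a hypothetical example chosen for brevity, not one of the codes studied in the paper, and `ENCODE_TABLE`, `encode`, and `lut_decode` are illustrative names.

```python
# Toy 1-bit-to-2-bit constrained code (an assumption for illustration):
# every codeword contains exactly one 0 and one 1.
ENCODE_TABLE = {"0": "01", "1": "10"}
DECODE_TABLE = {code: bit for bit, code in ENCODE_TABLE.items()}

def encode(bits):
    # Map each source bit to its two-bit codeword.
    return "".join(ENCODE_TABLE[b] for b in bits)

def lut_decode(received):
    # Hard-decision table look-up: each two-bit word must match the table
    # exactly. A word that is not in the table cannot be decoded, so the
    # corresponding output position is reported as None.
    words = [received[i:i + 2] for i in range(0, len(received), 2)]
    return [DECODE_TABLE.get(word) for word in words]

if __name__ == "__main__":
    sent = encode("1011")                 # "10011010"
    print(lut_decode(sent))               # every word is found in the table
    corrupted = "00" + sent[2:]           # first codeword hit by a bit flip
    print(lut_decode(corrupted))          # first position cannot be decoded
```

A single flipped bit turns a valid codeword into a word the table does not contain, and the look-up has no way to weigh how likely each valid codeword was. A learned decoder that operates on soft channel outputs can, in principle, make that judgment.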

Solving Large-Scale 0-1 Knapsack Problems and its Application to Point Cloud Resampling

Jun 11, 2019

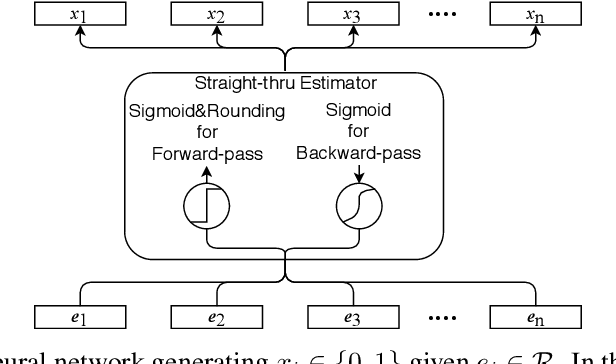

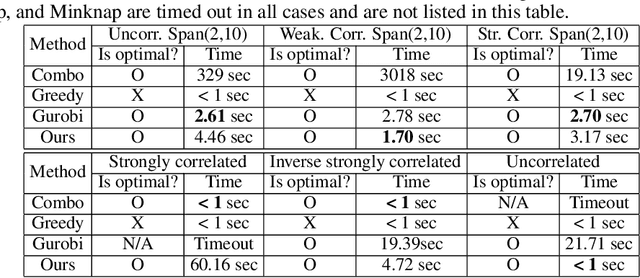

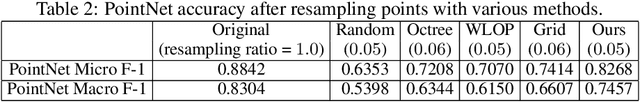

Abstract: The 0-1 knapsack problem is of fundamental importance in computer science, business, operations research, etc. In this paper, we present a deep learning-based method to solve large-scale 0-1 knapsack problems where the number of products (items) is large and/or the values of products are not necessarily predetermined but decided by an external value assignment function during the optimization process. Our solution is greatly inspired by the method of Lagrange multipliers and some recent applications of game theory to deep learning. After formally defining our proposed method based on them, we develop an adaptive gradient ascent method to stabilize its optimization process. In our experiments, the presented method solves all the large-scale benchmark KP instances within a minute, whereas existing methods show fluctuating runtimes. We also show that our method can be used for other applications, including but not limited to point cloud resampling.
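The role a Lagrange multiplier can play in the knapsack problem is illustrated by the classical Lagrangian relaxation heuristic sketched below. This is a textbook technique, not the deep learning method of the paper; `pick_items`, `lagrangian_knapsack`, and the bisection schedule are assumptions made for this example.

```python
def pick_items(values, weights, lam):
    # For a fixed multiplier lam, the relaxed objective
    # sum_i x_i * (v_i - lam * w_i) decomposes per item:
    # take an item exactly when its penalized value is positive.
    return [1 if v - lam * w > 0 else 0 for v, w in zip(values, weights)]

def lagrangian_knapsack(values, weights, capacity, iters=60):
    # Bisection on the multiplier. A larger lam penalizes weight more and
    # selects fewer items, so the used capacity is monotone in lam.
    lo = 0.0
    hi = max(v / w for v, w in zip(values, weights))
    best = pick_items(values, weights, hi)  # nothing selected: always feasible
    for _ in range(iters):
        lam = (lo + hi) / 2.0
        chosen = pick_items(values, weights, lam)
        used = sum(w * x for w, x in zip(weights, chosen))
        if used <= capacity:
            best, hi = chosen, lam   # feasible: try a smaller penalty
        else:
            lo = lam                 # infeasible: penalize weight more
    return best

if __name__ == "__main__":
    values, weights, capacity = [60, 100, 120], [10, 20, 30], 50
    print(lagrangian_knapsack(values, weights, capacity))
```

On this small instance the heuristic selects the first two items (total value 160), while the optimal selection is the last two (total value 220). The relaxation yields a feasible solution quickly but is not guaranteed to be optimal.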

Deep Unsupervised Learning of 3D Point Clouds via Graph Topology Inference and Filtering

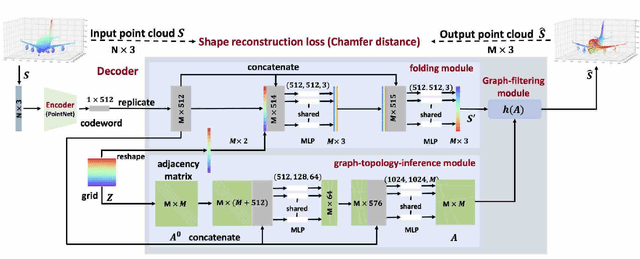

May 11, 2019

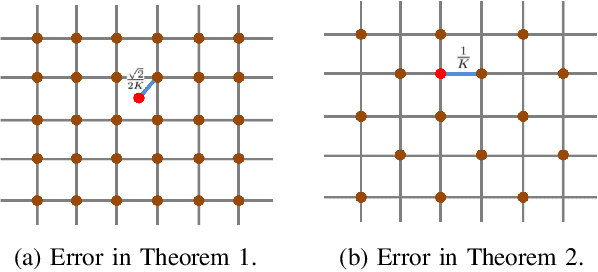

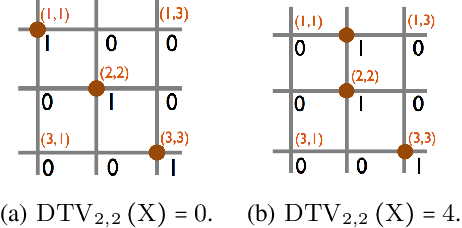

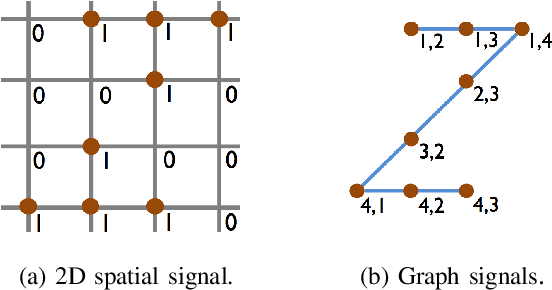

Abstract: We propose a deep autoencoder with graph topology inference and filtering to achieve compact representations of unorganized 3D point clouds in an unsupervised manner. The encoder of the proposed networks adopts an architecture similar to that of PointNet, which is a well-acknowledged method for supervised learning of 3D point clouds. The decoder of the proposed networks involves three novel modules: the folding module, the graph-topology-inference module, and the graph-filtering module. The folding module folds a canonical 2D lattice to the underlying surface of a 3D point cloud, achieving coarse reconstruction; the graph-topology-inference module learns a graph topology to represent pairwise relationships between 3D points; and the graph-filtering module designs graph filters based on the learnt graph topology to obtain the refined reconstruction. We further provide theoretical analyses of the proposed architecture: we derive an upper bound for the reconstruction loss and show the superiority of graph smoothness over spatial smoothness as a prior to model 3D point clouds. In the experiments, we validate the proposed networks in three tasks: reconstruction, visualization, and classification. The experimental results show that (1) the proposed networks outperform the state-of-the-art methods in various tasks, including reconstruction and transfer classification; (2) a graph topology can be inferred as auxiliary information without specific supervision on graph topology inference; and (3) graph filtering refines the reconstruction, leading to better performance.

Primitive Fitting Using Deep Boundary Aware Geometric Segmentation

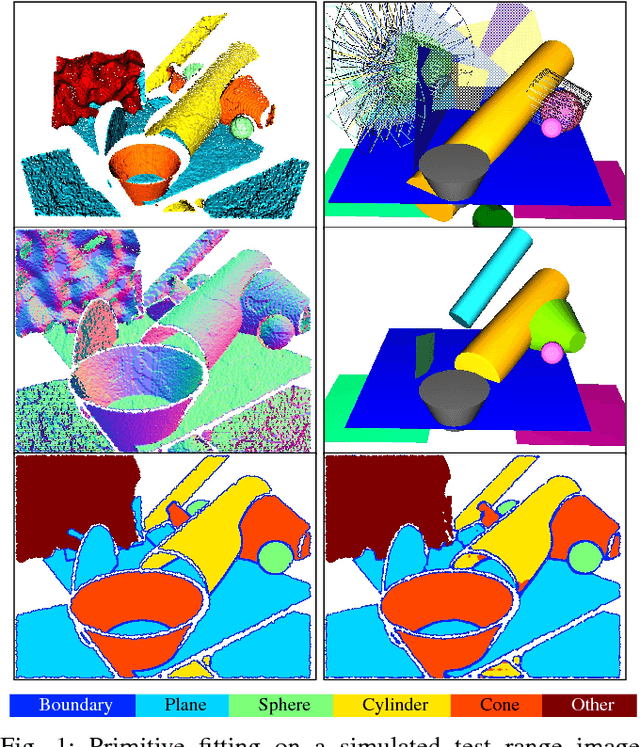

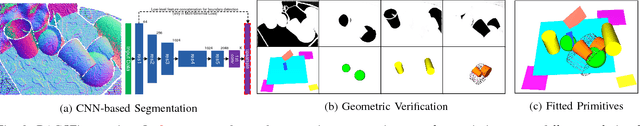

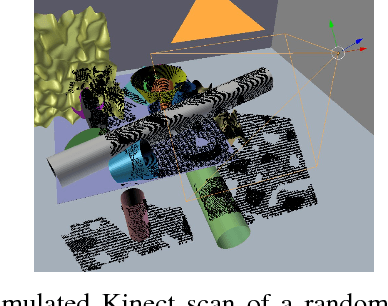

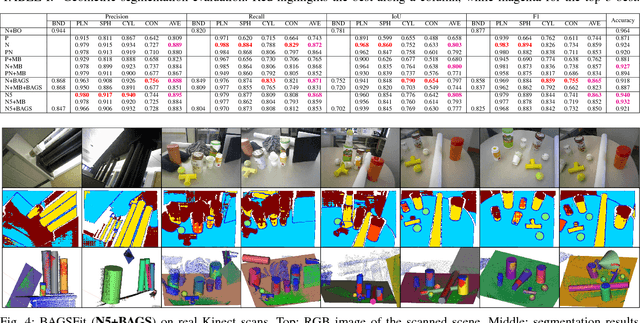

Oct 03, 2018

Abstract: Identifying and fitting geometric primitives (e.g., planes, spheres, cylinders, cones) in a noisy point cloud is a challenging yet beneficial task for fields such as robotics and reverse engineering. As a multi-model multi-instance fitting problem, it has been tackled with different approaches including RANSAC, which, however, often fits inferior models in practice when given noisy inputs from cluttered scenes. Inspired by the corresponding human recognition process, and benefiting from recent advances in image semantic segmentation using deep neural networks, we propose BAGSFit as a new framework addressing this problem. First, a fully convolutional neural network segments the input point cloud point-wise into multiple classes divided by jointly detected instance boundaries, without any geometric fitting. The resulting segments then serve as primitive hypotheses, each with a probability estimate of its associated primitive class. Finally, all hypotheses are sent through a geometric verification step that corrects any misclassification by fitting the respective primitives. We trained the network on simulated range images and tested it on both simulated and real-world point clouds. Quantitative and qualitative experiments demonstrate the superiority of BAGSFit.
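The idea of verifying a primitive hypothesis by fitting can be sketched for the simplest primitive, a plane. The example below is a generic least-squares plane fit with a residual check, not the verification procedure used in BAGSFit; `fit_plane`, `rms_residual`, `verify_plane`, and the tolerance value are assumptions made for illustration.

```python
import numpy as np

def fit_plane(points):
    # Least-squares plane through an (N, 3) array of points: the normal is
    # the right singular vector with the smallest singular value of the
    # centered point matrix.
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return centroid, normal

def rms_residual(points, centroid, normal):
    # Root-mean-square of the signed point-to-plane distances.
    distances = (points - centroid) @ normal
    return float(np.sqrt(np.mean(distances ** 2)))

def verify_plane(points, tolerance=0.01):
    # Accept the "plane" hypothesis for a segment only if the fitted plane
    # explains its points to within the (assumed) tolerance.
    centroid, normal = fit_plane(points)
    return rms_residual(points, centroid, normal) <= tolerance

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    xy = rng.uniform(-1.0, 1.0, size=(200, 2))
    planar = np.column_stack([xy, 0.5 * xy[:, 0] + 2.0])
    sphere = rng.normal(size=(200, 3))
    sphere /= np.linalg.norm(sphere, axis=1, keepdims=True)
    print(verify_plane(planar), verify_plane(sphere))
```

A segment labeled as a plane that fails this check could then be tested against other primitive classes, which is the general spirit of correcting a misclassification through fitting.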

Deep Learning-Based Decoding for Constrained Sequence Codes

Sep 06, 2018

Abstract: Constrained sequence codes have been widely used in modern communication and data storage systems. Sequences encoded with constrained sequence codes satisfy constraints imposed by the physical channel, hence enabling efficient and reliable transmission of coded symbols. Traditional encoding and decoding of constrained sequence codes rely on table look-up, which is prone to errors that occur during transmission. In this paper, we introduce constrained sequence decoding based on deep learning. With multilayer perceptron (MLP) networks and convolutional neural networks (CNNs), we are able to achieve low bit error rates that are close to maximum a posteriori probability (MAP) decoding as well as improve the system throughput. Moreover, implementation of capacity-achieving fixed-length codes, where the complexity is prohibitively high with table look-up decoding, becomes practical with deep learning-based decoding.
