Primitive Fitting Using Deep Boundary Aware Geometric Segmentation

Paper and Code

Oct 03, 2018

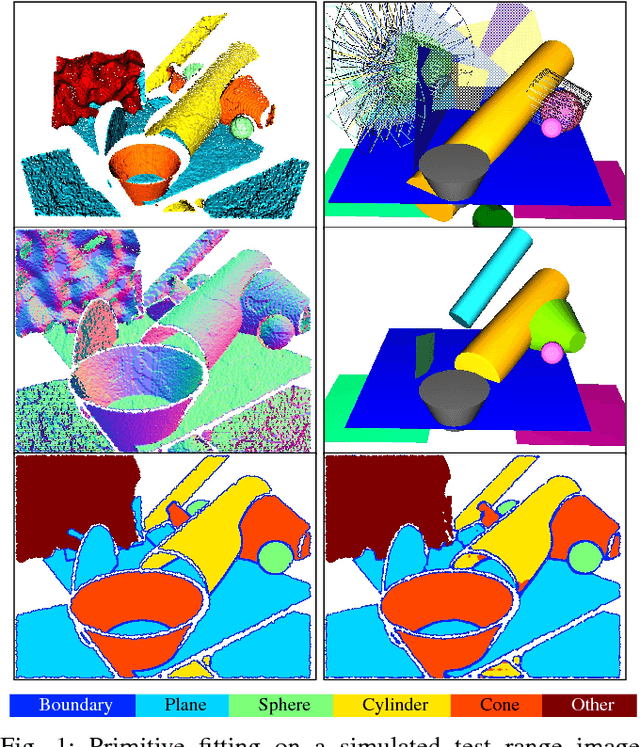

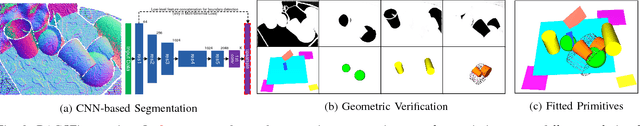

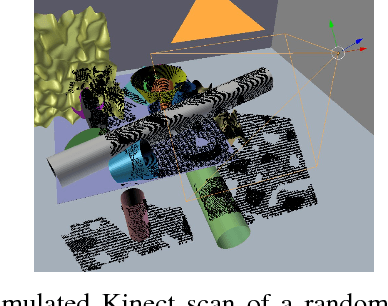

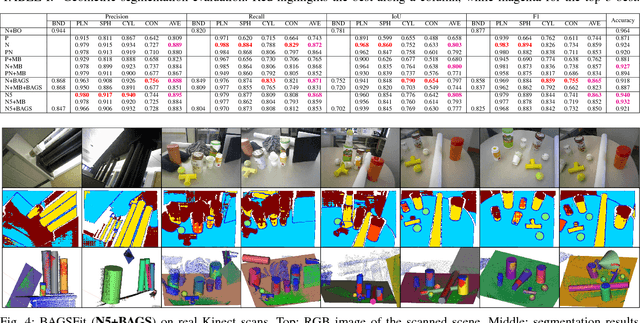

To identify and fit geometric primitives (e.g., planes, spheres, cylinders, cones) in a noisy point cloud is a challenging yet beneficial task for fields such as robotics and reverse engineering. As a multi-model multi-instance fitting problem, it has been tackled with different approaches including RANSAC, which however often fit inferior models in practice with noisy inputs of cluttered scenes. Inspired by the corresponding human recognition process, and benefiting from the recent advancements in image semantic segmentation using deep neural networks, we propose BAGSFit as a new framework addressing this problem. Firstly, through a fully convolutional neural network, the input point cloud is point-wisely segmented into multiple classes divided by jointly detected instance boundaries without any geometric fitting. Thus, segments can serve as primitive hypotheses with a probability estimation of associating primitive classes. Finally, all hypotheses are sent through a geometric verification to correct any misclassification by fitting primitives respectively. We performed training using simulated range images and tested it with both simulated and real-world point clouds. Quantitative and qualitative experiments demonstrated the superiority of BAGSFit.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge