Dongliang Chang

Towards Privacy-Preserving Fine-Grained Visual Classification via Hierarchical Learning from Label Proportions

May 29, 2025

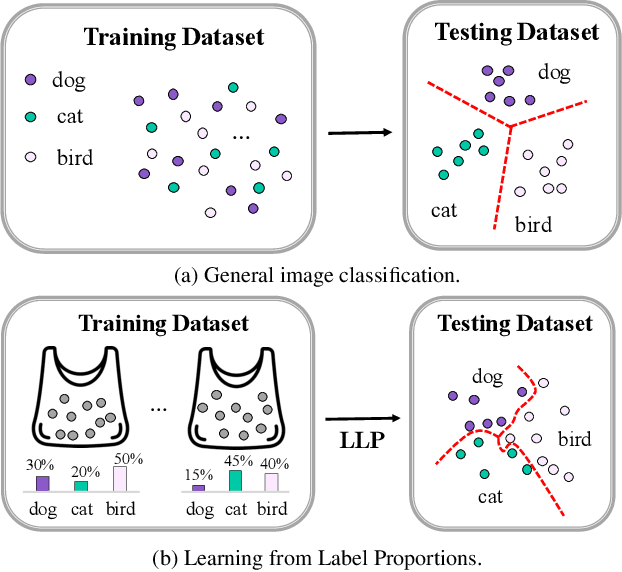

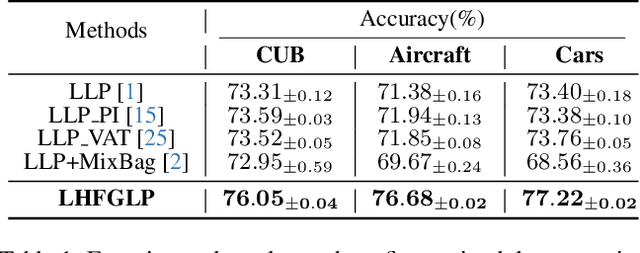

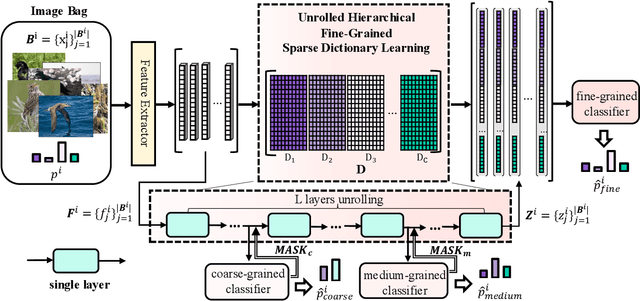

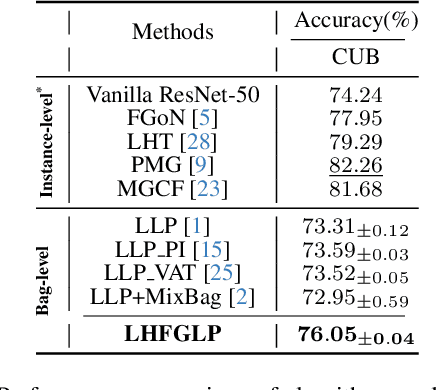

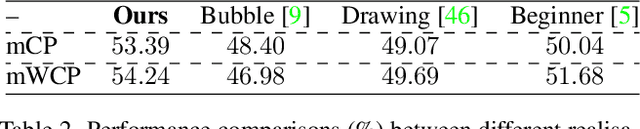

Abstract: In recent years, Fine-Grained Visual Classification (FGVC) has achieved impressive recognition accuracy, despite minimal inter-class variations. However, existing methods heavily rely on instance-level labels, making them impractical in privacy-sensitive scenarios such as medical image analysis. This paper aims to enable accurate fine-grained recognition without direct access to instance labels. To achieve this, we leverage the Learning from Label Proportions (LLP) paradigm, which requires only bag-level labels for efficient training. Unlike existing LLP-based methods, our framework explicitly exploits the hierarchical nature of fine-grained datasets, enabling progressive feature granularity refinement and improving classification accuracy. We propose Learning from Hierarchical Fine-Grained Label Proportions (LHFGLP), a framework that incorporates Unrolled Hierarchical Fine-Grained Sparse Dictionary Learning, transforming handcrafted iterative approximation into learnable network optimization. Additionally, our proposed Hierarchical Proportion Loss provides hierarchical supervision, further enhancing classification performance. Experiments on three widely used fine-grained datasets, structured in a bag-based manner, demonstrate that our framework consistently outperforms existing LLP-based methods. We will release our code and datasets to foster further research in privacy-preserving fine-grained classification.
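To make the idea of bag-level, hierarchical supervision concrete, here is a minimal sketch of a proportion loss applied at two levels of a label hierarchy. It is not the paper's Hierarchical Proportion Loss: the cross-entropy form, the `fine_to_coarse` mapping, and the `coarse_weight` parameter are all assumptions made for illustration.

```python
import math

def bag_proportions(probs):
    """Average per-instance class probabilities over one bag."""
    n, k = len(probs), len(probs[0])
    return [sum(p[c] for p in probs) / n for c in range(k)]

def coarsen(fine, fine_to_coarse, num_coarse):
    """Sum fine-class mass into parent (coarse) classes."""
    coarse = [0.0] * num_coarse
    for c, mass in enumerate(fine):
        coarse[fine_to_coarse[c]] += mass
    return coarse

def cross_entropy(target, pred, eps=1e-12):
    """Cross-entropy between two proportion vectors."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, pred))

def hierarchical_proportion_loss(probs, fine_target, fine_to_coarse,
                                 num_coarse, coarse_weight=1.0):
    """Hypothetical loss: fine-level term plus a weighted coarse-level term.

    Only bag-level proportions (fine_target) are used as supervision;
    no instance label is ever read.
    """
    fine_pred = bag_proportions(probs)
    coarse_pred = coarsen(fine_pred, fine_to_coarse, num_coarse)
    coarse_target = coarsen(fine_target, fine_to_coarse, num_coarse)
    return (cross_entropy(fine_target, fine_pred)
            + coarse_weight * cross_entropy(coarse_target, coarse_pred))

# Toy bag: 2 instances, 4 fine classes grouped into 2 coarse classes.
fine_to_coarse = [0, 0, 1, 1]
target = [0.5, 0.0, 0.5, 0.0]
good = hierarchical_proportion_loss([[1, 0, 0, 0], [0, 0, 1, 0]],
                                    target, fine_to_coarse, 2)
bad = hierarchical_proportion_loss([[0, 1, 0, 0], [0, 0, 0, 1]],
                                   target, fine_to_coarse, 2)
```

In this toy case the `bad` bag still matches the coarse proportions, so only the fine-level term penalises it, which is the kind of coarse-to-fine signal a hierarchical loss can provide.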

Multimodal Conditional Information Bottleneck for Generalizable AI-Generated Image Detection

May 21, 2025

Abstract: Although existing CLIP-based methods for detecting AI-generated images have achieved promising results, they are still limited by severe feature redundancy, which hinders their generalization ability. To address this issue, incorporating an information bottleneck network into the task presents a straightforward solution. However, relying solely on image-corresponding prompts results in suboptimal performance due to the inherent diversity of prompts. In this paper, we propose a multimodal conditional bottleneck network to reduce feature redundancy while enhancing the discriminative power of features extracted by CLIP, thereby improving the model's generalization ability. We begin with a semantic analysis experiment, where we observe that arbitrary text features exhibit lower cosine similarity with real image features than with fake image features in the CLIP feature space, a phenomenon we refer to as "bias". Therefore, we introduce InfoFD, a text-guided AI-generated image detection framework. InfoFD consists of two key components: the Text-Guided Conditional Information Bottleneck (TGCIB) and Dynamic Text Orthogonalization (DTO). TGCIB improves the generalizability of learned representations by conditioning on both text and class modalities. DTO dynamically updates weighted text features, preserving semantic information while leveraging the global "bias". Our model achieves exceptional generalization performance on the GenImage dataset and on the latest generative models. Our code is available at https://github.com/Ant0ny44/InfoFD.
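The "bias" observation above compares mean cosine similarities, which can be sketched as follows. The function names and toy vectors are hypothetical, and real use would substitute features from a CLIP encoder, which this sketch does not load.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def mean_similarity(text_feats, image_feats):
    """Mean cosine similarity over all text/image feature pairs."""
    sims = [cosine(t, x) for t in text_feats for x in image_feats]
    return sum(sims) / len(sims)

def similarity_gap(text_feats, real_feats, fake_feats):
    """Positive when text is, on average, closer to fake than to real images."""
    return (mean_similarity(text_feats, fake_feats)
            - mean_similarity(text_feats, real_feats))

# Toy 2-D stand-ins for feature vectors (assumed values, not CLIP outputs).
gap = similarity_gap([[1.0, 0.0]], [[0.0, 1.0]], [[1.0, 1.0]])
```

A positive `gap` on real features would correspond to the direction of the effect the abstract describes.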

FakeReasoning: Towards Generalizable Forgery Detection and Reasoning

Mar 27, 2025

Abstract: Accurate and interpretable detection of AI-generated images is essential for mitigating risks associated with AI misuse. However, the substantial domain gap among generative models makes it challenging to develop a generalizable forgery detection model. Moreover, since every pixel in an AI-generated image is synthesized, traditional saliency-based forgery explanation methods are not well suited for this task. To address these challenges, we propose modeling AI-generated image detection and explanation as a Forgery Detection and Reasoning task (FDR-Task), leveraging vision-language models (VLMs) to provide accurate detection through structured and reliable reasoning over forgery attributes. To facilitate this task, we introduce the Multi-Modal Forgery Reasoning dataset (MMFR-Dataset), a large-scale dataset containing 100K images across 10 generative models, with 10 types of forgery reasoning annotations, enabling comprehensive evaluation of FDR-Task. Additionally, we propose FakeReasoning, a forgery detection and reasoning framework with two key components. First, Forgery-Aligned Contrastive Learning enhances VLMs' understanding of forgery-related semantics through both cross-modal and intra-modal contrastive learning between images and forgery attribute reasoning. Second, a Classification Probability Mapper bridges the optimization gap between forgery detection and language modeling by mapping the output logits of VLMs to calibrated binary classification probabilities. Experiments across multiple generative models demonstrate that FakeReasoning not only achieves robust generalization but also outperforms state-of-the-art methods on both detection and reasoning tasks.

M4Fog: A Global Multi-Regional, Multi-Modal, and Multi-Stage Dataset for Marine Fog Detection and Forecasting to Bridge Ocean and Atmosphere

Jun 19, 2024

Abstract: Marine fog poses a significant hazard to global shipping, necessitating effective detection and forecasting to reduce economic losses. In recent years, several machine learning (ML) methods have demonstrated superior detection accuracy compared to traditional meteorological methods. However, most of these works are developed on proprietary datasets, and the few publicly accessible datasets are often limited to simplistic toy scenarios for research purposes. To advance the field, we have collected nearly a decade's worth of multi-modal data related to continuous marine fog stages from four series of geostationary meteorological satellites, along with meteorological observations and numerical analysis, covering 15 marine regions globally where maritime fog frequently occurs. Through pixel-level manual annotation by meteorological experts, we present the most comprehensive marine fog detection and forecasting dataset to date, named M4Fog, to bridge ocean and atmosphere. The dataset comprises 68,000 "super data cubes" along four dimensions: elements, latitude, longitude and time, with a temporal resolution of half an hour and a spatial resolution of 1 kilometer. Considering practical applications, we have defined and explored three meaningful tracks with multi-metric evaluation systems: static or dynamic marine fog detection, and spatio-temporal forecasting for cloud images. Extensive benchmarking and experiments demonstrate the rationality and effectiveness of the construction concept for the proposed M4Fog. The data and code are available to all researchers through cloud platforms to develop ML-driven marine fog solutions and mitigate adverse impacts on human activities.

DemoFusion: Democratising High-Resolution Image Generation With No $$$

Nov 24, 2023

Abstract: High-resolution image generation with Generative Artificial Intelligence (GenAI) has immense potential but, due to the enormous capital investment required for training, it is increasingly centralised to a few large corporations, and hidden behind paywalls. This paper aims to democratise high-resolution GenAI by advancing the frontier of high-resolution generation while remaining accessible to a broad audience. We demonstrate that existing Latent Diffusion Models (LDMs) possess untapped potential for higher-resolution image generation. Our novel DemoFusion framework seamlessly extends open-source GenAI models, employing Progressive Upscaling, Skip Residual, and Dilated Sampling mechanisms to achieve higher-resolution image generation. The progressive nature of DemoFusion requires more passes, but the intermediate results can serve as "previews", facilitating rapid prompt iteration.

Bi-directional Feature Reconstruction Network for Fine-Grained Few-Shot Image Classification

Nov 30, 2022

Abstract: The main challenge for fine-grained few-shot image classification is to learn feature representations with higher inter-class and lower intra-class variations, with a mere few labelled samples. Conventional few-shot learning methods however cannot be naively adopted for this fine-grained setting -- a quick pilot study reveals that they in fact push for the opposite (i.e., lower inter-class variations and higher intra-class variations). To alleviate this problem, prior works predominantly use a support set to reconstruct the query image and then utilize metric learning to determine its category. Upon careful inspection, we further reveal that such unidirectional reconstruction methods only help to increase inter-class variations and are not effective in tackling intra-class variations. In this paper, we for the first time introduce a bi-reconstruction mechanism that can simultaneously accommodate inter-class and intra-class variations. In addition to using the support set to reconstruct the query set for increasing inter-class variations, we further use the query set to reconstruct the support set for reducing intra-class variations. This design effectively helps the model to explore more subtle and discriminative features, which is key for the fine-grained problem at hand. Furthermore, we also construct a self-reconstruction module to work alongside the bi-directional module to make the features even more discriminative. Experimental results on three widely used fine-grained image classification datasets consistently show considerable improvements compared with other methods. Code is available at: https://github.com/PRIS-CV/Bi-FRN.
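The two reconstruction directions described above can be illustrated with a small sketch. The abstract does not say how reconstruction is computed, so the closed-form ridge regression used here, the `lam` regulariser, and both function names are assumptions rather than the released Bi-FRN implementation.

```python
import numpy as np

def reconstruct(target, basis, lam=0.1):
    """Ridge-regression reconstruction of `target` rows from `basis` rows.

    Solves min_W ||target - W @ basis||^2 + lam * ||W||^2 in closed form.
    """
    gram = basis @ basis.T
    weights = target @ basis.T @ np.linalg.inv(gram + lam * np.eye(len(basis)))
    return weights @ basis

def bidirectional_error(query, support, lam=0.1):
    """Sum of mean squared errors for support->query and query->support."""
    q_hat = reconstruct(query, support, lam)   # support reconstructs query
    s_hat = reconstruct(support, query, lam)   # query reconstructs support
    q_err = np.mean(np.sum((query - q_hat) ** 2, axis=1))
    s_err = np.mean(np.sum((support - s_hat) ** 2, axis=1))
    return q_err + s_err

# Toy features: a query that spans the same subspace as one support set
# and is orthogonal to another.
query = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
same_class = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
other_class = np.array([[0.0, 0.0, 1.0]])
err_same = bidirectional_error(query, same_class)
err_other = bidirectional_error(query, other_class)
```

A classifier built on this idea would assign the query to the class with the smallest combined error, here `same_class`.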

Multi-View Active Fine-Grained Recognition

Jun 02, 2022

Abstract: Fine-grained visual classification (FGVC) has been developed for decades, and related work has exposed a key direction -- finding discriminative local regions and revealing subtle differences. However, unlike identifying visual contents within static images, for recognizing objects in the real physical world, discriminative information is not only present within seen local regions but also hides in other unseen perspectives. In other words, in addition to focusing on the distinguishable part from the whole, for efficient and accurate recognition, it is required to infer the key perspective with a few glances, e.g., people may recognize a "Benz AMG GT" with a glance of its front and then know that taking a look at its exhaust pipe can help to tell which year's model it is. In this paper, back to reality, we put forward the problem of active fine-grained recognition (AFGR) and complete this study in three steps: (i) a hierarchical, multi-view, fine-grained vehicle dataset is collected as the testbed, (ii) a simple experiment is designed to verify that different perspectives contribute differently to FGVC and that different categories have different discriminative perspectives, (iii) a policy-gradient-based framework is adopted to achieve efficient recognition with active view selection. Comprehensive experiments demonstrate that the proposed method delivers a better performance-efficiency trade-off than previous FGVC methods and advanced neural networks.

Domain Generalization via Frequency-based Feature Disentanglement and Interaction

Jan 20, 2022

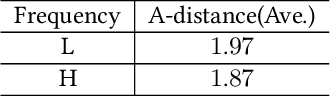

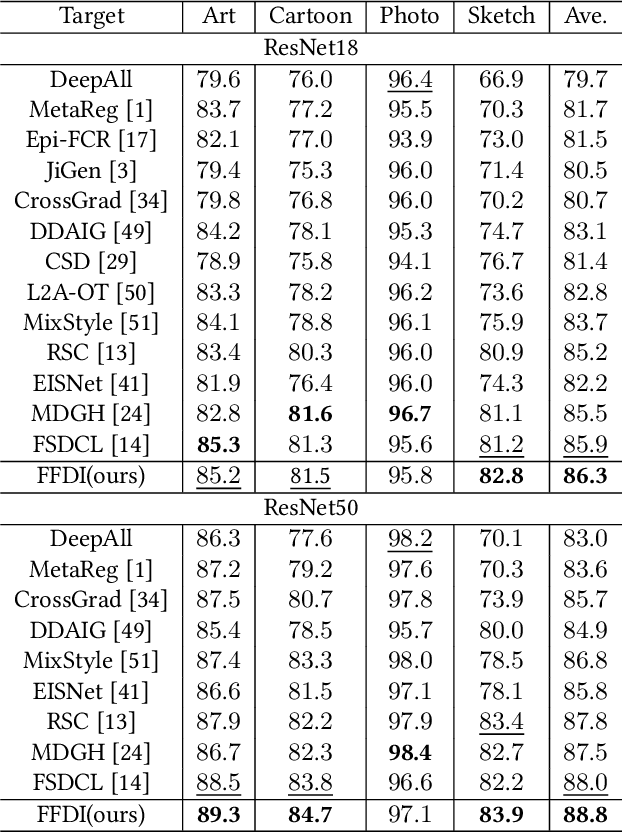

Abstract: Out-of-distribution data is a meta-challenge for all statistical learning algorithms that strongly rely on the i.i.d. assumption. It leads to unavoidable labor costs and confidence crises in realistic applications. For that, domain generalization aims at mining domain-irrelevant knowledge from multiple source domains that can generalize to unseen target domains with unknown distributions. In this paper, leveraging the image frequency domain, we uniquely work with two key observations: (i) the high-frequency information of images depicts object edge structure, which is naturally consistent across different domains, and (ii) the low-frequency component retains object smooth structure but is much more domain-specific. Motivated by these insights, we introduce (i) an encoder-decoder structure for high-frequency and low-frequency feature disentangling, (ii) an information interaction mechanism that ensures helpful knowledge from both parts can cooperate effectively, and (iii) a novel data augmentation technique that works on the frequency domain for encouraging robustness of the network. The proposed method obtains state-of-the-art results on three widely used domain generalization benchmarks (Digit-DG, Office-Home, and PACS).
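The split between high- and low-frequency image content that motivates this work can be illustrated with a fixed FFT mask. This is only a sketch of the underlying signal decomposition: the paper learns the disentanglement with an encoder-decoder, whereas the circular mask, the `radius` parameter, and the function name here are assumptions.

```python
import numpy as np

def split_frequencies(image, radius):
    """Split a 2-D grayscale image into low- and high-frequency parts.

    A circular mask of the given radius around the zero frequency keeps
    the low band; its complement keeps the high band.
    """
    h, w = image.shape
    spectrum = np.fft.fftshift(np.fft.fft2(image))  # zero frequency at centre
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    low_mask = dist <= radius
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)).real
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * ~low_mask)).real
    return low, high

# Toy 8x8 "image" (assumed values); the two bands sum back to the input.
image = np.arange(64, dtype=float).reshape(8, 8)
low, high = split_frequencies(image, radius=2)
flat_low, flat_high = split_frequencies(np.ones((8, 8)), radius=2)
```

For a constant image all energy sits at the zero frequency, so the high band is zero; edges and fine texture are what populate `high`.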

Clue Me In: Semi-Supervised FGVC with Out-of-Distribution Data

Dec 06, 2021

Abstract: Despite great strides made on fine-grained visual classification (FGVC), current methods are still heavily reliant on fully-supervised paradigms where ample expert labels are called for. Semi-supervised learning (SSL) techniques, acquiring knowledge from unlabeled data, provide a considerable means forward and have shown great promise for coarse-grained problems. However, existing SSL paradigms mostly assume in-distribution (i.e., category-aligned) unlabeled data, which hinders their effectiveness when repurposed for FGVC. In this paper, we put forward a novel design specifically aimed at making out-of-distribution data work for semi-supervised FGVC, i.e., to "clue them in". We work off an important assumption that all fine-grained categories naturally follow a hierarchical structure (e.g., the phylogenetic tree of "Aves" that covers all bird species). It follows that, instead of operating on individual samples, we can predict sample relations within this tree structure as the optimization goal of SSL. Beyond this, we further introduce two strategies uniquely brought by these tree structures to achieve inter-sample consistency regularization and reliable pseudo-relation. Our experimental results reveal that (i) the proposed method yields good robustness against out-of-distribution data, and (ii) it can be equipped with prior art, boosting its performance and thus yielding state-of-the-art results. Code is available at https://github.com/PRIS-CV/RelMatch.

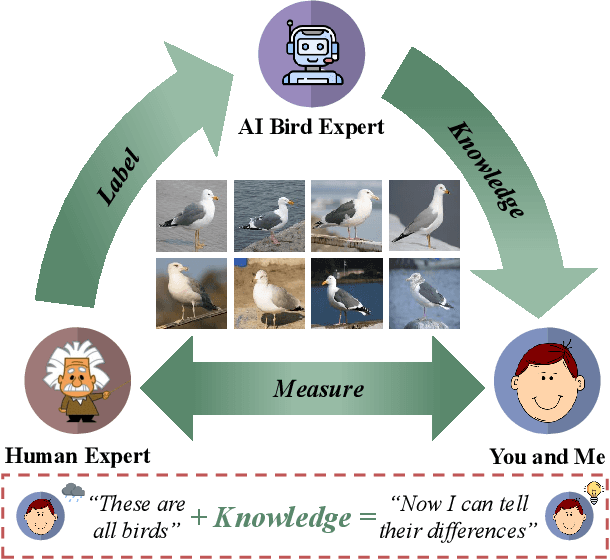

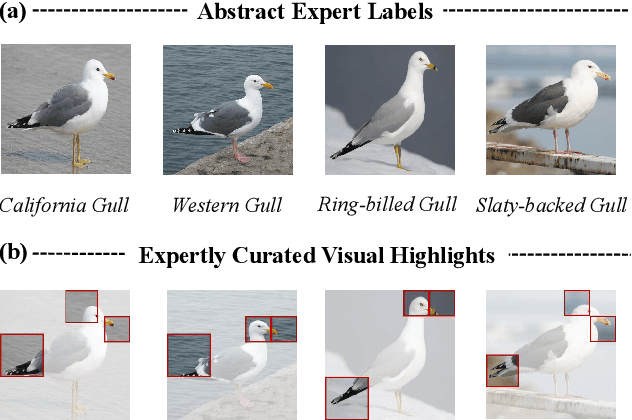

Making a Bird AI Expert Work for You and Me

Dec 06, 2021

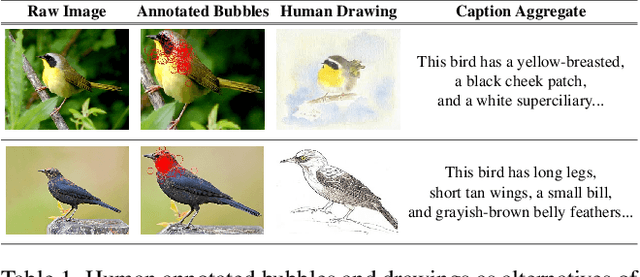

Abstract: As powerful as fine-grained visual classification (FGVC) is, responding to your query with a bird name of "Whip-poor-will" or "Mallard" probably does not make much sense. This, however commonly accepted in the literature, underlines a fundamental question interfacing AI and humans -- what constitutes transferable knowledge for humans to learn from AI? This paper sets out to answer this very question using FGVC as a test bed. Specifically, we envisage a scenario where a trained FGVC model (the AI expert) functions as a knowledge provider in enabling average people (you and me) to become better domain experts ourselves, i.e. those capable of distinguishing between "Whip-poor-will" and "Mallard". Fig. 1 lays out our approach in answering this question. Assuming an AI expert trained using expert human labels, we ask (i) what is the best transferable knowledge we can extract from AI, and (ii) what is the most practical means to measure the gains in expertise given that knowledge? On the former, we propose to represent knowledge as highly discriminative visual regions that are expert-exclusive. For that, we devise a multi-stage learning framework, which starts with modelling visual attention of domain experts and novices before discriminatively distilling their differences to acquire the expert-exclusive knowledge. For the latter, we simulate the evaluation process as a book guide to best accommodate the learning practice humans are accustomed to. A comprehensive human study of 15,000 trials shows our method is able to consistently improve the ability of people with divergent bird expertise to recognise once unrecognisable birds. Interestingly, our approach also leads to improved conventional FGVC performance when the extracted knowledge is utilised as a means to achieve discriminative localisation. Code is available at: https://github.com/PRIS-CV/Making-a-Bird-AI-Expert-Work-for-You-and-Me
