Dmitry Petrov

ShapeWords: Guiding Text-to-Image Synthesis with 3D Shape-Aware Prompts

Dec 03, 2024

Abstract: We introduce ShapeWords, an approach for synthesizing images based on 3D shape guidance and text prompts. ShapeWords incorporates target 3D shape information within specialized tokens embedded together with the input text, effectively blending 3D shape awareness with textual context to guide the image synthesis process. Unlike conventional shape guidance methods that rely on depth maps restricted to fixed viewpoints and often overlook full 3D structure or textual context, ShapeWords generates diverse yet consistent images that reflect both the target shape's geometry and the textual description. Experimental results show that ShapeWords produces images that are more text-compliant and aesthetically plausible while maintaining 3D shape awareness.

GEM3D: GEnerative Medial Abstractions for 3D Shape Synthesis

Feb 26, 2024

Abstract: We introduce GEM3D, a new deep, topology-aware generative model of 3D shapes. The key ingredient of our method is a neural skeleton-based representation encoding information on both shape topology and geometry. Through a denoising diffusion probabilistic model, our method first generates skeleton-based representations following the Medial Axis Transform (MAT), then generates surfaces through a skeleton-driven neural implicit formulation. The neural implicit takes into account the topological and geometric information stored in the generated skeleton representations to yield surfaces that are more topologically and geometrically accurate compared to previous neural field formulations. We discuss applications of our method in shape synthesis and point cloud reconstruction tasks, and evaluate our method both qualitatively and quantitatively. We demonstrate significantly more faithful surface reconstruction and more diverse shape generation results compared to the state of the art, including challenging scenarios of reconstructing and synthesizing structurally complex, high-genus shape surfaces from Thingi10K and ShapeNet.

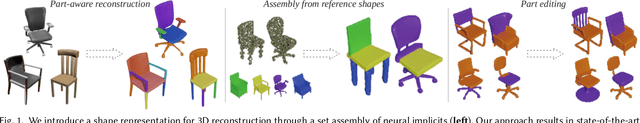

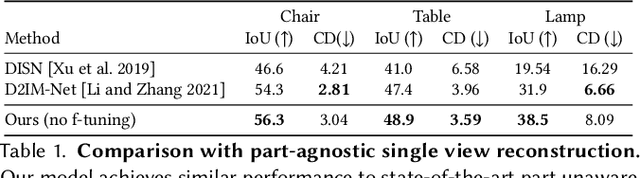

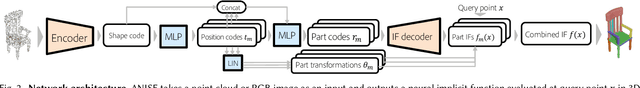

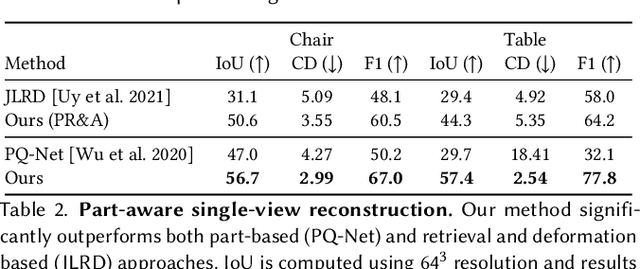

ANISE: Assembly-based Neural Implicit Surface rEconstruction

May 27, 2022

Abstract: We present ANISE, a method that reconstructs a 3D shape from partial observations (images or sparse point clouds) using a part-aware neural implicit shape representation. It is formulated as an assembly of neural implicit functions, each representing a different shape part. In contrast to previous approaches, the prediction of this representation proceeds in a coarse-to-fine manner. Our network first predicts part transformations, which are associated with part neural implicit functions conditioned on those transformations. The part implicit functions can then be combined into a single, coherent shape, enabling part-aware shape reconstructions from images and point clouds. Those reconstructions can be obtained in two ways: (i) by directly decoding and combining the refined part implicit functions; or (ii) by using part latents to query similar parts in a part database and assembling them into a single shape. We demonstrate that, when performing reconstruction by decoding part representations into implicit functions, our method achieves state-of-the-art part-aware reconstruction results from both images and sparse point clouds. When reconstructing shapes by assembling parts queried from a dataset, our approach significantly outperforms traditional shape retrieval methods, even when the size of the shape database is significantly restricted. We present our results on well-known sparse point cloud reconstruction and single-view reconstruction benchmarks.

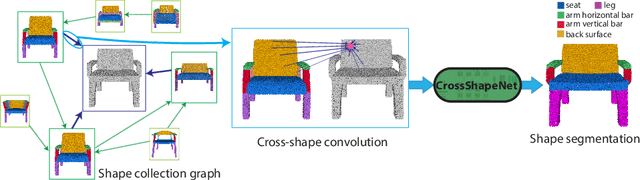

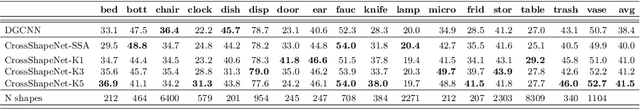

Cross-Shape Graph Convolutional Networks

Apr 06, 2020

Abstract: We present a method that processes 3D point clouds by performing graph convolution operations across shapes. In this manner, point descriptors are learned by allowing interaction and propagation of feature representations within a shape collection. To enable this form of non-local, cross-shape graph convolution, our method learns a pairwise point attention mechanism indicating the degree of interaction between points on different shapes. Our method also learns to create a graph over the shapes of an input collection, whose edges connect shapes deemed useful for performing cross-shape convolution. The edges are also equipped with learned weights indicating the compatibility of each shape pair for cross-shape convolution. Our experiments demonstrate that this interaction and propagation of point representations across shapes makes them more discriminative. In particular, our results show significantly improved performance for 3D point cloud semantic segmentation compared to conventional approaches, especially in cases with a limited number of training examples.
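The pairwise point attention described in this abstract can be illustrated with a small sketch. It is not the paper's learned mechanism: it assumes plain scaled dot-product attention with no learned projections, and the function name `cross_shape_attention` and the scalar `pair_weight` (standing in for the learned shape-pair compatibility weight) are hypothetical.

```python
import numpy as np

def cross_shape_attention(feat_a, feat_b, pair_weight=1.0):
    """Propagate point features from shape B to shape A.

    feat_a: (Na, d) point features of shape A.
    feat_b: (Nb, d) point features of shape B.
    pair_weight: hypothetical scalar standing in for a learned
        shape-pair compatibility weight.
    Returns (updated_a, weights), where weights is (Na, Nb).
    """
    feat_a = np.asarray(feat_a, dtype=float)
    feat_b = np.asarray(feat_b, dtype=float)
    # Assumption: scaled dot-product similarity between points on the two shapes.
    scores = feat_a @ feat_b.T / np.sqrt(feat_a.shape[1])
    # Row-wise softmax, shifted by the row maximum for numerical stability.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    # Each point of A receives an attention-weighted mix of B's features.
    updated_a = feat_a + pair_weight * (weights @ feat_b)
    return updated_a, weights
```

Each row of `weights` sums to one, so every point on shape A distributes its attention over the points of shape B. Setting `pair_weight` to zero leaves the features of A unchanged.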

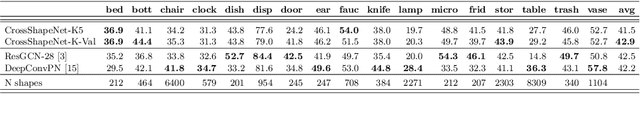

Evaluating 35 Methods to Generate Structural Connectomes Using Pairwise Classification

Jun 19, 2017

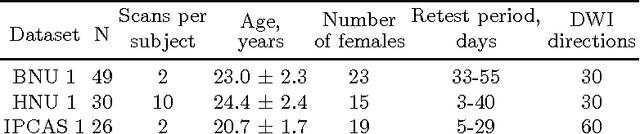

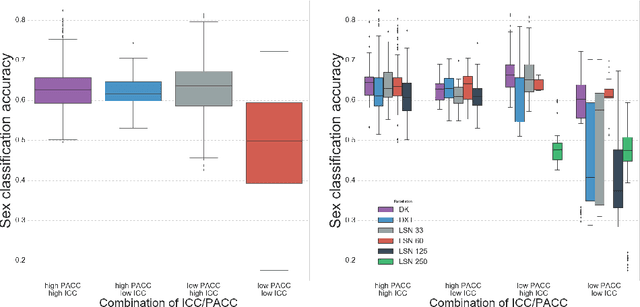

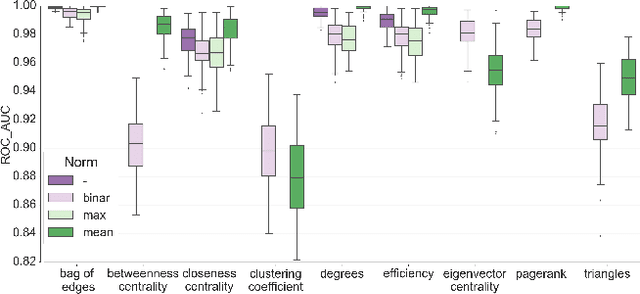

Abstract: There is no consensus on how to construct structural brain networks from diffusion MRI. How variations in pre-processing steps affect network reliability and its ability to distinguish subjects remains opaque. In this work, we address this issue by comparing 35 structural connectome-building pipelines. We vary diffusion reconstruction models, tractography algorithms and parcellations. Next, we classify structural connectome pairs as either belonging to the same individual or not. Connectome weights and eight topological derivative measures form our feature set. For experiments, we use three test-retest datasets from the Consortium for Reliability and Reproducibility (CoRR), comprising a total of 105 individuals. We also compare pairwise classification results to a commonly used parametric test-retest measure, the Intraclass Correlation Coefficient (ICC).
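The pairwise classification setup in this abstract can be sketched as follows. The abstract does not say how a pair of connectomes is turned into a single feature vector, so the element-wise absolute difference used here is an assumption, and `build_pairs` is a hypothetical helper name.

```python
from itertools import combinations

def build_pairs(features, subject_ids):
    """Turn per-scan feature vectors into a pairwise classification dataset.

    features: list of equal-length numeric feature vectors, one per scan.
    subject_ids: list of subject identifiers, one per scan.
    Returns (pair_features, labels): label 1 means both scans come from
    the same individual, 0 means they come from different individuals.
    """
    pair_features, labels = [], []
    for i, j in combinations(range(len(features)), 2):
        # Assumption: a pair is described by element-wise absolute differences.
        diff = [abs(a - b) for a, b in zip(features[i], features[j])]
        pair_features.append(diff)
        labels.append(1 if subject_ids[i] == subject_ids[j] else 0)
    return pair_features, labels
```

The resulting pairs and labels can be passed to any binary classifier. With test-retest data, same-subject pairs are much rarer than different-subject pairs, so a threshold-free metric such as area under the ROC curve is a natural choice for evaluation.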

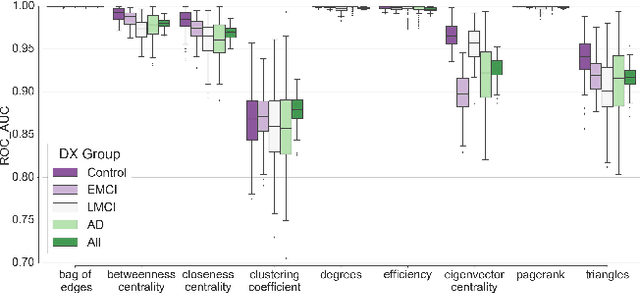

Structural Connectome Validation Using Pairwise Classification

Jan 30, 2017

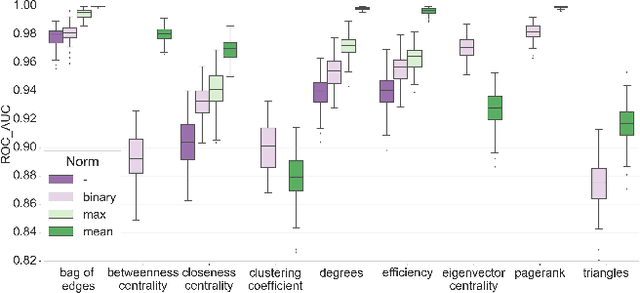

Abstract: In this work, we study the extent to which structural connectomes and topological derivative measures are unique to individual changes within human brains. To do so, we classify structural connectome pairs from two large longitudinal datasets as either belonging to the same individual or not. Our data comprise 227 individuals from the Alzheimer's Disease Neuroimaging Initiative (ADNI) and 226 from the Parkinson's Progression Markers Initiative (PPMI). We achieve an area under the ROC curve of 0.99 for features that represent either the weights or the network structure of the connectomes (node degrees, PageRank and local efficiency). Our approach may be useful for eliminating noisy features as a preprocessing step in brain aging studies and early diagnosis classification problems.

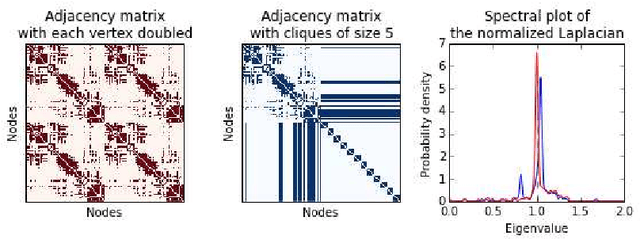

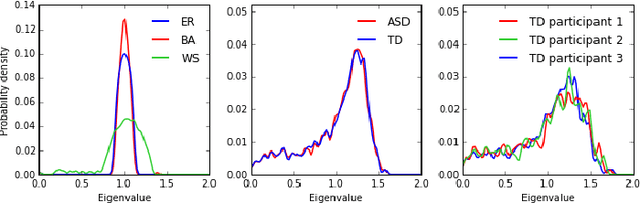

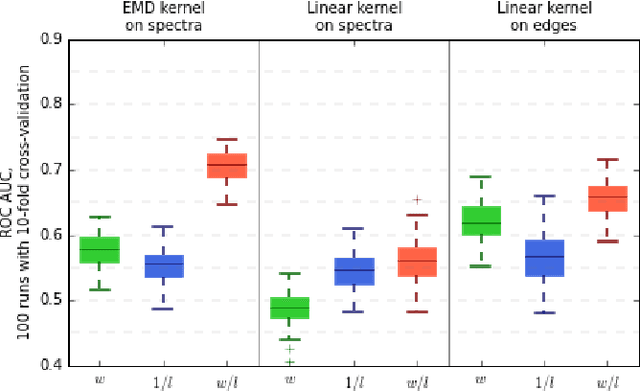

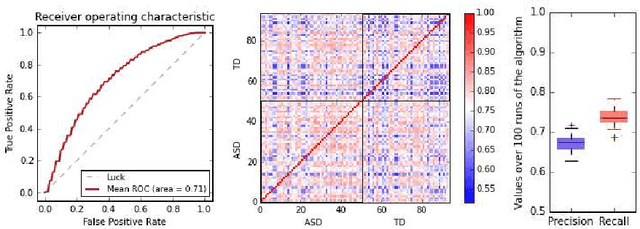

Kernel classification of connectomes based on earth mover's distance between graph spectra

Nov 27, 2016

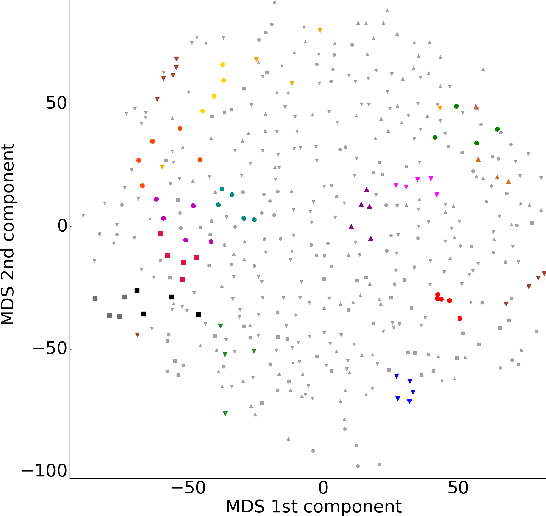

Abstract: In this paper, we tackle the problem of predicting phenotypes from structural connectomes. We propose that normalized Laplacian spectra can capture structural properties of brain networks, and hence that graph spectral distributions are useful for connectome-based classification. We introduce a kernel based on the earth mover's distance (EMD) between spectral distributions of brain networks. We assess the performance of an SVM classifier with the proposed kernel on the task of classifying autism spectrum disorder versus typical development, using a publicly available dataset. The classification quality (area under the ROC curve) obtained with the EMD-based kernel on spectral distributions is 0.71, which is higher than that obtained with simpler graph embedding methods.
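A minimal sketch of a kernel built from the EMD between normalized Laplacian spectra is given below. Several choices are assumptions rather than details from the abstract: the exponential form `exp(-gamma * EMD)`, the parameter name `gamma`, the requirement that all networks have the same number of nodes (which makes the 1D EMD a mean absolute difference of sorted eigenvalues), and the function names themselves.

```python
import numpy as np

def normalized_laplacian_spectrum(adj):
    """Sorted eigenvalues of L = I - D^{-1/2} A D^{-1/2}.

    adj: symmetric, non-negative weighted adjacency matrix.
    Convention used here: isolated nodes get a zero scaling factor.
    """
    adj = np.asarray(adj, dtype=float)
    deg = adj.sum(axis=1)
    inv_sqrt = np.zeros_like(deg)
    nonzero = deg > 0
    inv_sqrt[nonzero] = 1.0 / np.sqrt(deg[nonzero])
    lap = np.eye(len(adj)) - inv_sqrt[:, None] * adj * inv_sqrt[None, :]
    return np.sort(np.linalg.eigvalsh(lap))

def emd_1d(spec_a, spec_b):
    """EMD between two equal-size, uniformly weighted 1D samples."""
    spec_a, spec_b = np.sort(spec_a), np.sort(spec_b)
    if spec_a.shape != spec_b.shape:
        raise ValueError("this sketch assumes spectra of equal length")
    return float(np.mean(np.abs(spec_a - spec_b)))

def emd_kernel(spectra, gamma=1.0):
    """Gram matrix K[i, j] = exp(-gamma * EMD(spectra[i], spectra[j]))."""
    n = len(spectra)
    gram = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            gram[i, j] = np.exp(-gamma * emd_1d(spectra[i], spectra[j]))
    return gram
```

The resulting Gram matrix can be supplied to an SVM that accepts precomputed kernels, for example scikit-learn's `SVC(kernel="precomputed")`.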
