ANISE: Assembly-based Neural Implicit Surface rEconstruction


May 27, 2022

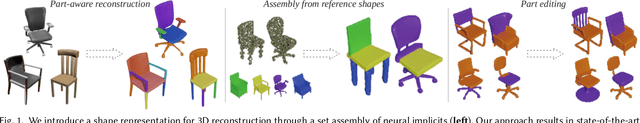

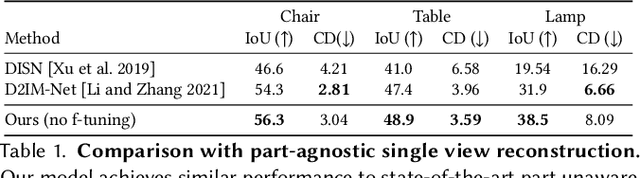

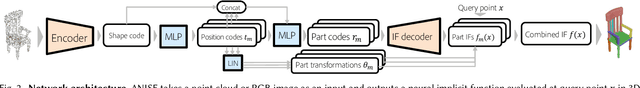

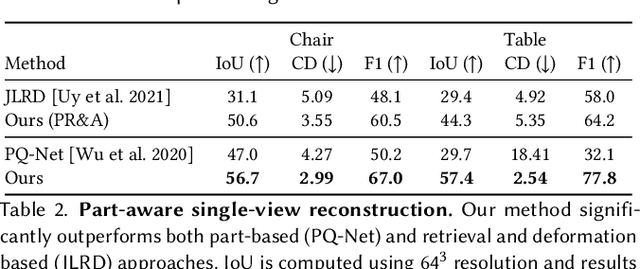

We present ANISE, a method that reconstructs a 3D shape from partial observations (images or sparse point clouds) using a part-aware neural implicit shape representation. The shape is formulated as an assembly of neural implicit functions, each representing a different shape part. In contrast to previous approaches, the prediction of this representation proceeds in a coarse-to-fine manner. Our network first predicts part transformations, and then the part neural implicit functions conditioned on those transformations. The part implicit functions can then be combined into a single, coherent shape, enabling part-aware shape reconstruction from images and point clouds. These reconstructions can be obtained in two ways: (i) by directly decoding and combining the refined part implicit functions; or (ii) by using part latents to query similar parts in a part database and assembling them into a single shape. We demonstrate that, when performing reconstruction by decoding part representations into implicit functions, our method achieves state-of-the-art part-aware reconstruction results from both images and sparse point clouds. When reconstructing shapes by assembling parts queried from a database, our approach significantly outperforms traditional shape retrieval methods, even when the size of the shape database is heavily restricted. We present our results on well-known sparse point cloud reconstruction and single-view reconstruction benchmarks.
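The two reconstruction modes described above can be illustrated with a minimal sketch. This is not the authors' implementation: the analytic sphere stands in for a learned part implicit function, the part transformation is assumed to be a translation plus a uniform scale, parts are assumed to be combined by a pointwise minimum over signed distances (a union), and retrieval is assumed to be a nearest-neighbor lookup in latent space. The names `Part`, `assemble`, and `retrieve` are hypothetical.

```python
import numpy as np


def sphere_sdf(radius):
    """Analytic stand-in for a learned part implicit function (assumption)."""
    def f(points):
        # Signed distance to a sphere centered at the part's local origin.
        return np.linalg.norm(points, axis=-1) - radius
    return f


class Part:
    """A part implicit function placed by a predicted transformation.

    The transformation is assumed to be a translation and a uniform scale.
    """

    def __init__(self, sdf, translation, scale):
        self.sdf = sdf
        self.translation = np.asarray(translation, dtype=float)
        self.scale = float(scale)

    def __call__(self, points):
        # Map world-space query points into the part's local frame.
        local = (np.asarray(points, dtype=float) - self.translation) / self.scale
        # Rescale so the values remain distances in world units.
        return self.sdf(local) * self.scale


def assemble(parts, points):
    """Mode (i): combine part functions into one shape.

    Assumes a union, i.e. the pointwise minimum of the part distances.
    """
    values = np.stack([part(points) for part in parts], axis=0)
    return values.min(axis=0)


def retrieve(query_latent, database_latents):
    """Mode (ii): index of the database part whose latent is closest.

    Assumes Euclidean distance between latent codes.
    """
    query = np.asarray(query_latent, dtype=float)
    database = np.asarray(database_latents, dtype=float)
    distances = np.linalg.norm(database - query, axis=1)
    return int(np.argmin(distances))


# Two toy parts: a unit sphere at the origin and a half-size sphere at x = 2.
parts = [
    Part(sphere_sdf(1.0), translation=[0.0, 0.0, 0.0], scale=1.0),
    Part(sphere_sdf(1.0), translation=[2.0, 0.0, 0.0], scale=0.5),
]
queries = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [5.0, 0.0, 0.0]])
shape_values = assemble(parts, queries)  # negative inside, positive outside
```

In this sketch, a query point lies inside the assembled shape when its value is negative. For the retrieval mode, the part returned by `retrieve` would be placed with the same predicted transformation before being passed to `assemble`.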
