Danny Weyns

Online ML Self-adaptation in Face of Traps

Sep 11, 2023Abstract:Online machine learning (ML) is often used in self-adaptive systems to strengthen the adaptation mechanism and improve the system utility. Despite such benefits, applying online ML for self-adaptation can be challenging, and not many papers report its limitations. Recently, we experimented with applying online ML for self-adaptation of a smart farming scenario and we had faced several unexpected difficulties -- traps -- that, to our knowledge, are not discussed enough in the community. In this paper, we report our experience with these traps. Specifically, we discuss several traps that relate to the specification and online training of the ML-based estimators, their impact on self-adaptation, and the approach used to evaluate the estimators. Our overview of these traps provides a list of lessons learned, which can serve as guidance for other researchers and practitioners when applying online ML for self-adaptation.

From Self-Adaptation to Self-Evolution Leveraging the Operational Design Domain

Mar 27, 2023

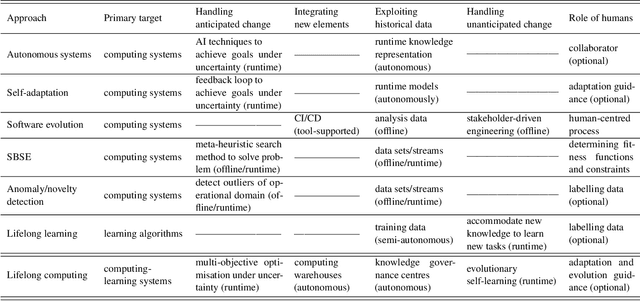

Abstract:Engineering long-running computing systems that achieve their goals under ever-changing conditions pose significant challenges. Self-adaptation has shown to be a viable approach to dealing with changing conditions. Yet, the capabilities of a self-adaptive system are constrained by its operational design domain (ODD), i.e., the conditions for which the system was built (requirements, constraints, and context). Changes, such as adding new goals or dealing with new contexts, require system evolution. While the system evolution process has been automated substantially, it remains human-driven. Given the growing complexity of computing systems, human-driven evolution will eventually become unmanageable. In this paper, we provide a definition for ODD and apply it to a self-adaptive system. Next, we explain why conditions not covered by the ODD require system evolution. Then, we outline a new approach for self-evolution that leverages the concept of ODD, enabling a system to evolve autonomously to deal with conditions not anticipated by its initial ODD. We conclude with open challenges to realise self-evolution.

Dealing with Drift of Adaptation Spaces in Learning-based Self-Adaptive Systems using Lifelong Self-Adaptation

Nov 04, 2022Abstract:Recently, machine learning (ML) has become a popular approach to support self-adaptation. ML has been used to deal with several problems in self-adaptation, such as maintaining an up-to-date runtime model under uncertainty and scalable decision-making. Yet, exploiting ML comes with inherent challenges. In this paper, we focus on a particularly important challenge for learning-based self-adaptive systems: drift in adaptation spaces. With adaptation space we refer to the set of adaptation options a self-adaptive system can select from at a given time to adapt based on the estimated quality properties of the adaptation options. Drift of adaptation spaces originates from uncertainties, affecting the quality properties of the adaptation options. Such drift may imply that eventually no adaptation option can satisfy the initial set of the adaptation goals, deteriorating the quality of the system, or adaptation options may emerge that allow enhancing the adaptation goals. In ML, such shift corresponds to novel class appearance, a type of concept drift in target data that common ML techniques have problems dealing with. To tackle this problem, we present a novel approach to self-adaptation that enhances learning-based self-adaptive systems with a lifelong ML layer. We refer to this approach as lifelong self-adaptation. The lifelong ML layer tracks the system and its environment, associates this knowledge with the current tasks, identifies new tasks based on differences, and updates the learning models of the self-adaptive system accordingly. A human stakeholder may be involved to support the learning process and adjust the learning and goal models. We present a reusable architecture for lifelong self-adaptation and apply it to the case of drift of adaptation spaces that affects the decision-making in self-adaptation. We validate the approach for a series of scenarios using the DeltaIoT exemplar.

The Vision of Self-Evolving Computing Systems

Apr 14, 2022

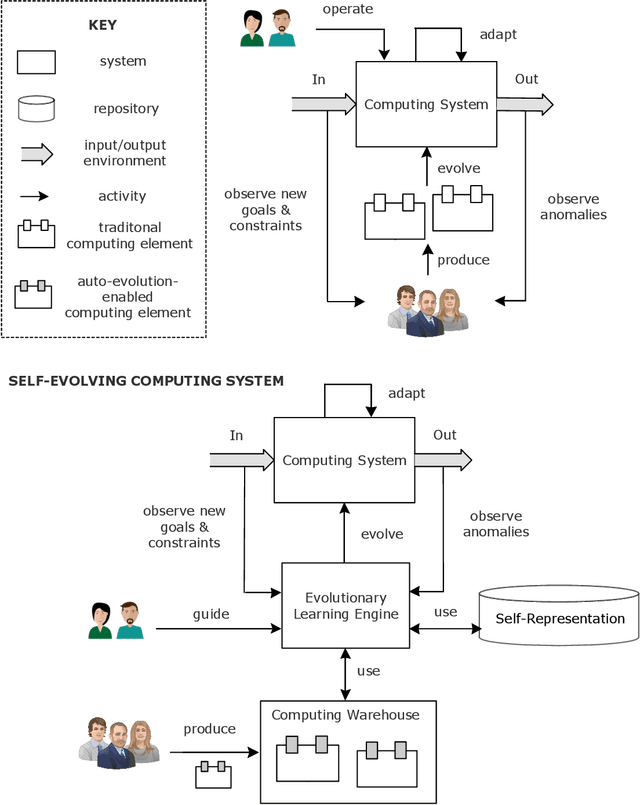

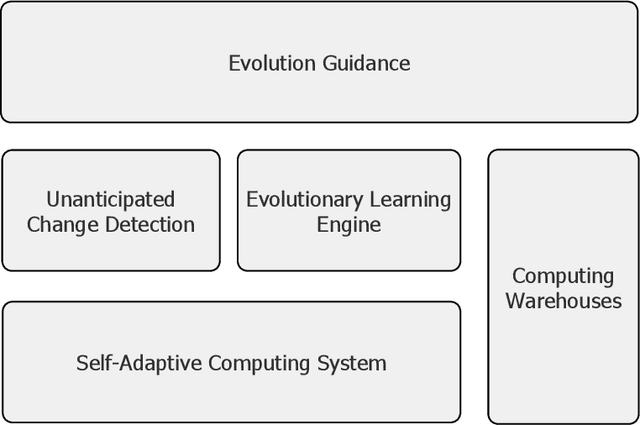

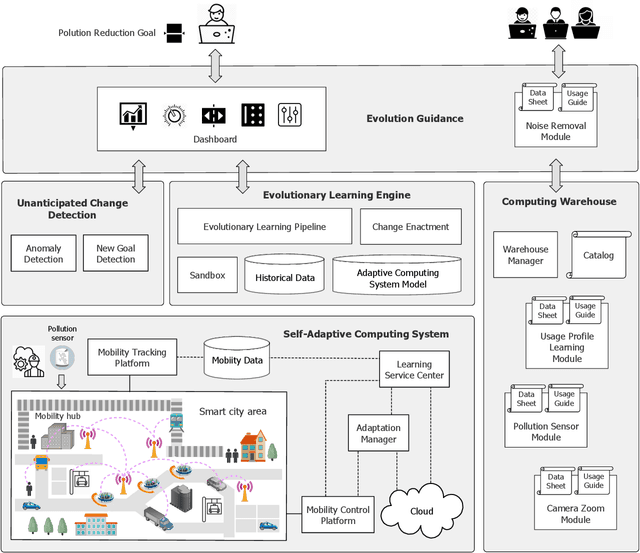

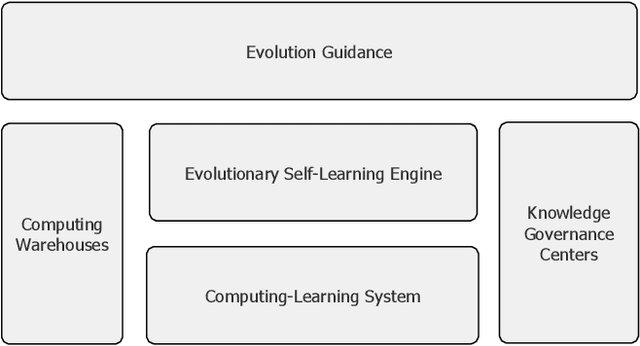

Abstract:Computing systems are omnipresent; their sustainability has become crucial for our society. A key aspect of this sustainability is the ability of computing systems to cope with the continuous change they face, ranging from dynamic operating conditions, to changing goals, and technological progress. While we are able to engineer smart computing systems that autonomously deal with various types of changes, handling unanticipated changes requires system evolution, which remains in essence a human-centered process. This will eventually become unmanageable. To break through the status quo, we put forward an arguable opinion for the vision of self-evolving computing systems that are equipped with an evolutionary engine enabling them to evolve autonomously. Specifically, when a self-evolving computing system detects conditions outside its operational domain, such as an anomaly or a new goal, it activates an evolutionary engine that runs online experiments to determine how the system needs to evolve to deal with the changes, thereby evolving its architecture. During this process the engine can integrate new computing elements that are provided by computing warehouses. These computing elements provide specifications and procedures enabling their automatic integration. We motivate the need for self-evolving computing systems in light of the state of the art, outline a conceptual architecture of self-evolving computing systems, and illustrate the architecture for a future smart city mobility system that needs to evolve continuously with changing conditions. To conclude, we highlight key research challenges to realize the vision of self-evolving computing systems.

Deep Learning for Effective and Efficient Reduction of Large Adaptation Spaces in Self-Adaptive Systems

Apr 13, 2022

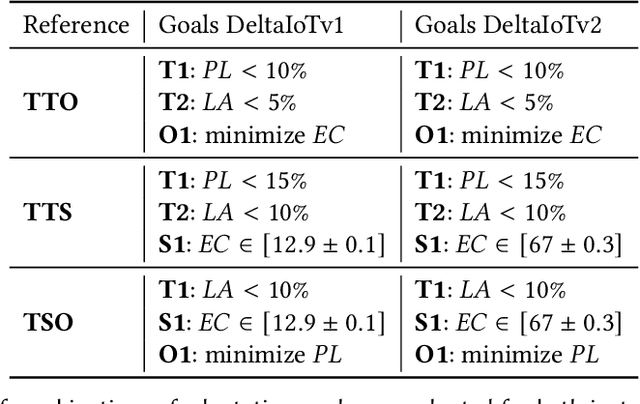

Abstract:Many software systems today face uncertain operating conditions, such as sudden changes in the availability of resources or unexpected user behavior. Without proper mitigation these uncertainties can jeopardize the system goals. Self-adaptation is a common approach to tackle such uncertainties. When the system goals may be compromised, the self-adaptive system has to select the best adaptation option to reconfigure by analyzing the possible adaptation options, i.e., the adaptation space. Yet, analyzing large adaptation spaces using rigorous methods can be resource- and time-consuming, or even be infeasible. One approach to tackle this problem is by using online machine learning to reduce adaptation spaces. However, existing approaches require domain expertise to perform feature engineering to define the learner, and support online adaptation space reduction only for specific goals. To tackle these limitations, we present 'Deep Learning for Adaptation Space Reduction Plus' -- DLASeR+ in short. DLASeR+ offers an extendable learning framework for online adaptation space reduction that does not require feature engineering, while supporting three common types of adaptation goals: threshold, optimization, and set-point goals. We evaluate DLASeR+ on two instances of an Internet-of-Things application with increasing sizes of adaptation spaces for different combinations of adaptation goals. We compare DLASeR+ with a baseline that applies exhaustive analysis and two state-of-the-art approaches for adaptation space reduction that rely on learning. Results show that DLASeR+ is effective with a negligible effect on the realization of the adaptation goals compared to an exhaustive analysis approach, and supports three common types of adaptation goals beyond the state-of-the-art approaches.

Lifelong Self-Adaptation: Self-Adaptation Meets Lifelong Machine Learning

Apr 04, 2022

Abstract:In the past years, machine learning (ML) has become a popular approach to support self-adaptation. While ML techniques enable dealing with several problems in self-adaptation, such as scalable decision-making, they are also subject to inherent challenges. In this paper, we focus on one such challenge that is particularly important for self-adaptation: ML techniques are designed to deal with a set of predefined tasks associated with an operational domain; they have problems to deal with new emerging tasks, such as concept shift in input data that is used for learning. To tackle this challenge, we present \textit{lifelong self-adaptation}: a novel approach to self-adaptation that enhances self-adaptive systems that use ML techniques with a lifelong ML layer. The lifelong ML layer tracks the running system and its environment, associates this knowledge with the current tasks, identifies new tasks based on differentiations, and updates the learning models of the self-adaptive system accordingly. We present a reusable architecture for lifelong self-adaptation and apply it to the case of concept drift caused by unforeseen changes of the input data of a learning model that is used for decision-making in self-adaptation. We validate lifelong self-adaptation for two types of concept drift using two cases.

Lifelong Computing

Aug 19, 2021

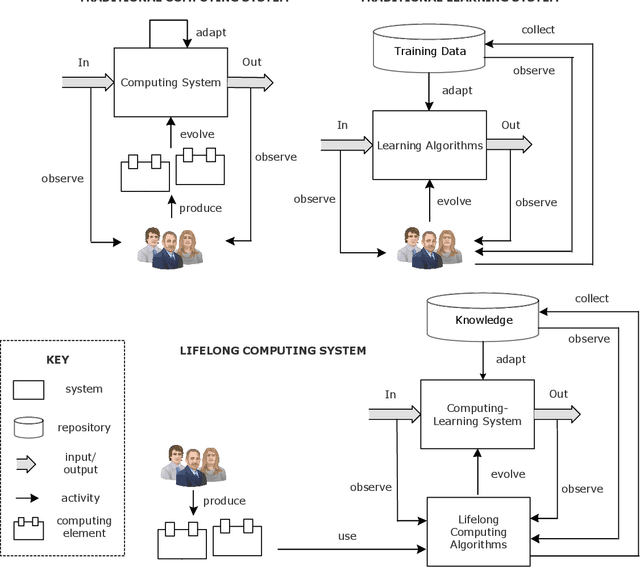

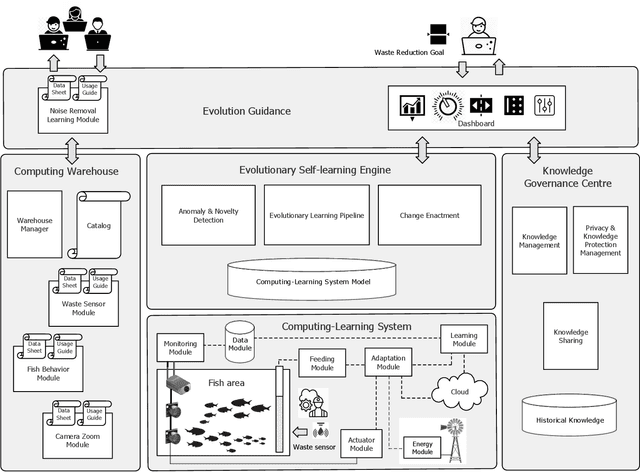

Abstract:Computing systems form the backbone of many aspects of our life, hence they are becoming as vital as water, electricity, and road infrastructures for our society. Yet, engineering long running computing systems that achieve their goals in ever-changing environments pose significant challenges. Currently, we can build computing systems that adjust or learn over time to match changes that were anticipated. However, dealing with unanticipated changes, such as anomalies, novelties, new goals or constraints, requires system evolution, which remains in essence a human-driven activity. Given the growing complexity of computing systems and the vast amount of highly complex data to process, this approach will eventually become unmanageable. To break through the status quo, we put forward a new paradigm for the design and operation of computing systems that we coin "lifelong computing." The paradigm starts from computing-learning systems that integrate computing/service modules and learning modules. Computing warehouses offer such computing elements together with data sheets and usage guides. When detecting anomalies, novelties, new goals or constraints, a lifelong computing system activates an evolutionary self-learning engine that runs online experiments to determine how the computing-learning system needs to evolve to deal with the changes, thereby changing its architecture and integrating new computing elements from computing warehouses as needed. Depending on the domain at hand, some activities of lifelong computing systems can be supported by humans. We motivate the need for lifelong computing with a future fish farming scenario, outline a blueprint architecture for lifelong computing systems, and highlight key research challenges to realise the vision of lifelong computing.

Towards Better Adaptive Systems by Combining MAPE, Control Theory, and Machine Learning

Mar 19, 2021

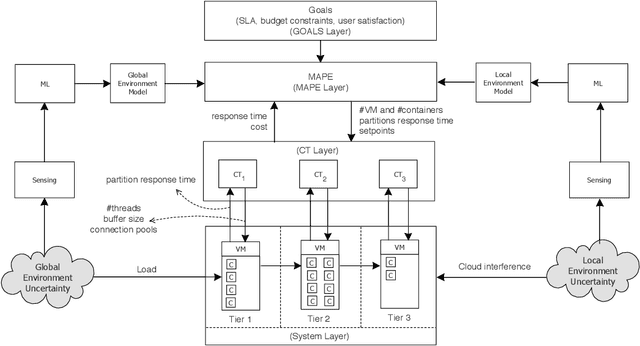

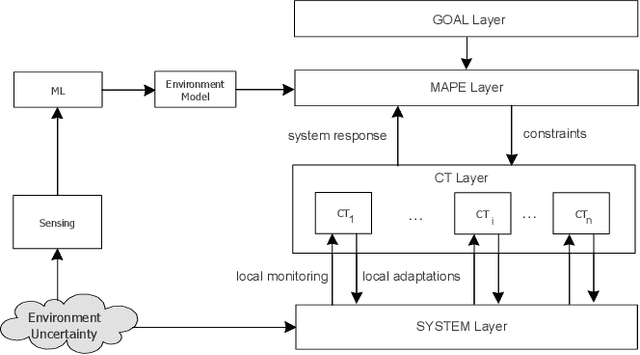

Abstract:Two established approaches to engineer adaptive systems are architecture-based adaptation that uses a Monitor-Analysis-Planning-Executing (MAPE) loop that reasons over architectural models (aka Knowledge) to make adaptation decisions, and control-based adaptation that relies on principles of control theory (CT) to realize adaptation. Recently, we also observe a rapidly growing interest in applying machine learning (ML) to support different adaptation mechanisms. While MAPE and CT have particular characteristics and strengths to be applied independently, in this paper, we are concerned with the question of how these approaches are related with one another and whether combining them and supporting them with ML can produce better adaptive systems. We motivate the combined use of different adaptation approaches using a scenario of a cloud-based enterprise system and illustrate the analysis when combining the different approaches. To conclude, we offer a set of open questions for further research in this interesting area.

On the Impact of Applying Machine Learning in the Decision-Making of Self-Adaptive Systems

Mar 18, 2021

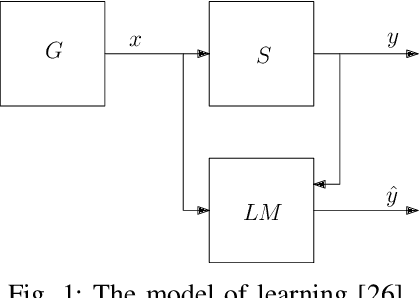

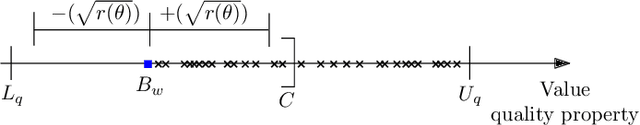

Abstract:Recently, we have been witnessing an increasing use of machine learning methods in self-adaptive systems. Machine learning methods offer a variety of use cases for supporting self-adaptation, e.g., to keep runtime models up to date, reduce large adaptation spaces, or update adaptation rules. Yet, since machine learning methods apply in essence statistical methods, they may have an impact on the decisions made by a self-adaptive system. Given the wide use of formal approaches to provide guarantees for the decisions made by self-adaptive systems, it is important to investigate the impact of applying machine learning methods when such approaches are used. In this paper, we study one particular instance that combines linear regression to reduce the adaptation space of a self-adaptive system with statistical model checking to analyze the resulting adaptation options. We use computational learning theory to determine a theoretical bound on the impact of the machine learning method on the predictions made by the verifier. We illustrate and evaluate the theoretical result using a scenario of the DeltaIoT artifact. To conclude, we look at opportunities for future research in this area.

Applying Machine Learning in Self-Adaptive Systems: A Systematic Literature Review

Mar 06, 2021

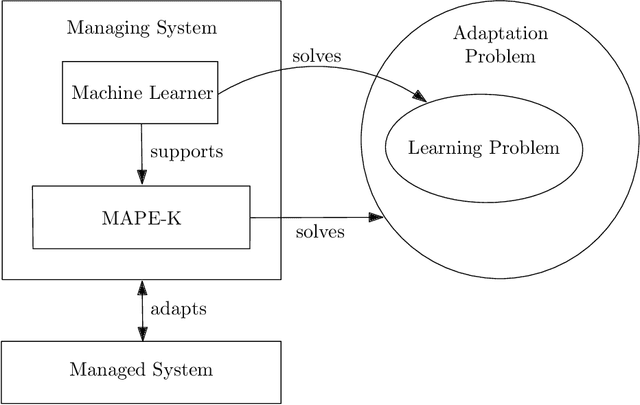

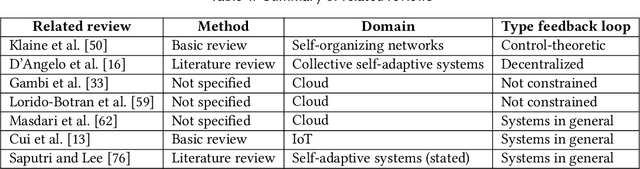

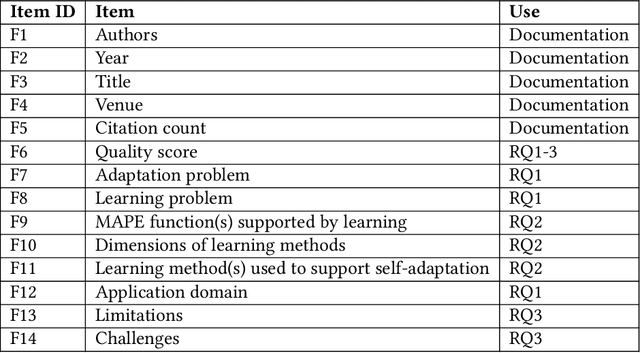

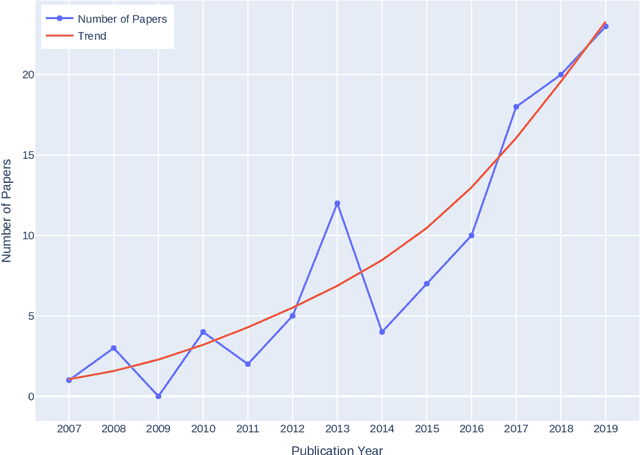

Abstract:Recently, we witness a rapid increase in the use of machine learning in self-adaptive systems. Machine learning has been used for a variety of reasons, ranging from learning a model of the environment of a system during operation to filtering large sets of possible configurations before analysing them. While a body of work on the use of machine learning in self-adaptive systems exists, there is currently no systematic overview of this area. Such overview is important for researchers to understand the state of the art and direct future research efforts. This paper reports the results of a systematic literature review that aims at providing such an overview. We focus on self-adaptive systems that are based on a traditional Monitor-Analyze-Plan-Execute feedback loop (MAPE). The research questions are centred on the problems that motivate the use of machine learning in self-adaptive systems, the key engineering aspects of learning in self-adaptation, and open challenges. The search resulted in 6709 papers, of which 109 were retained for data collection. Analysis of the collected data shows that machine learning is mostly used for updating adaptation rules and policies to improve system qualities, and managing resources to better balance qualities and resources. These problems are primarily solved using supervised and interactive learning with classification, regression and reinforcement learning as the dominant methods. Surprisingly, unsupervised learning that naturally fits automation is only applied in a small number of studies. Key open challenges in this area include the performance of learning, managing the effects of learning, and dealing with more complex types of goals. From the insights derived from this systematic literature review we outline an initial design process for applying machine learning in self-adaptive systems that are based on MAPE feedback loops.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge