On the Impact of Applying Machine Learning in the Decision-Making of Self-Adaptive Systems

Paper and Code

Mar 18, 2021

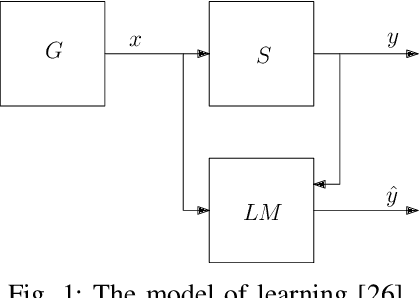

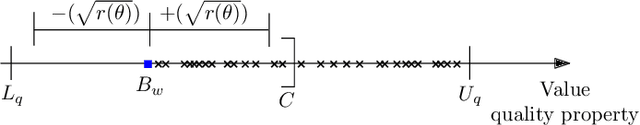

Recently, we have been witnessing an increasing use of machine learning methods in self-adaptive systems. Machine learning methods offer a variety of use cases for supporting self-adaptation, e.g., to keep runtime models up to date, reduce large adaptation spaces, or update adaptation rules. Yet, since machine learning methods apply in essence statistical methods, they may have an impact on the decisions made by a self-adaptive system. Given the wide use of formal approaches to provide guarantees for the decisions made by self-adaptive systems, it is important to investigate the impact of applying machine learning methods when such approaches are used. In this paper, we study one particular instance that combines linear regression to reduce the adaptation space of a self-adaptive system with statistical model checking to analyze the resulting adaptation options. We use computational learning theory to determine a theoretical bound on the impact of the machine learning method on the predictions made by the verifier. We illustrate and evaluate the theoretical result using a scenario of the DeltaIoT artifact. To conclude, we look at opportunities for future research in this area.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge