Dandan Zhu

Surveillance Facial Image Quality Assessment: A Multi-dimensional Dataset and Lightweight Model

Feb 07, 2026

Abstract:Surveillance facial images are often captured under unconstrained conditions, resulting in severe quality degradation due to factors such as low resolution, motion blur, occlusion, and poor lighting. Although recent face restoration techniques applied to surveillance cameras can significantly enhance visual quality, they often compromise fidelity (i.e., identity-preserving features), which directly conflicts with the primary objective of surveillance images -- reliable identity verification. Existing facial image quality assessment (FIQA) methods predominantly focus on either visual quality or recognition-oriented evaluation, thereby failing to jointly address visual quality and fidelity, both of which are critical for surveillance applications. To bridge this gap, we propose the first comprehensive study on surveillance facial image quality assessment (SFIQA), targeting the unique challenges inherent to surveillance scenarios. Specifically, we first construct SFIQA-Bench, a multi-dimensional quality assessment benchmark for surveillance facial images, which consists of 5,004 surveillance facial images captured by three widely deployed surveillance cameras in real-world scenarios. A subjective experiment is conducted to collect quality ratings along six dimensions (noise, sharpness, colorfulness, contrast, fidelity, and overall quality), covering the key aspects of SFIQA. Furthermore, we propose SFIQA-Assessor, a lightweight multi-task FIQA model that jointly exploits complementary facial views through cross-view feature interaction and employs learnable task tokens to guide the unified regression of multiple quality dimensions. Experimental results on the proposed dataset show that our method achieves the best performance compared with state-of-the-art general image quality assessment (IQA) and FIQA methods, validating its effectiveness for real-world surveillance applications.

QualiRAG: Retrieval-Augmented Generation for Visual Quality Understanding

Jan 26, 2026

Abstract:Visual quality assessment (VQA) is increasingly shifting from scalar score prediction toward interpretable quality understanding -- a paradigm that demands fine-grained spatiotemporal perception and auxiliary contextual information. Current approaches rely on supervised fine-tuning or reinforcement learning on curated instruction datasets, which involve labor-intensive annotation and are prone to dataset-specific biases. To address these challenges, we propose QualiRAG, a training-free Retrieval-Augmented Generation (RAG) framework that systematically leverages the latent perceptual knowledge of large multimodal models (LMMs) for visual quality perception. Unlike conventional RAG that retrieves from static corpora, QualiRAG dynamically generates auxiliary knowledge by decomposing questions into structured requests and constructing four complementary knowledge sources: visual metadata, subject localization, global quality summaries, and local quality descriptions, followed by relevance-aware retrieval for evidence-grounded reasoning. Extensive experiments show that QualiRAG achieves substantial improvements over open-source general-purpose LMMs and VQA-finetuned LMMs on visual quality understanding tasks, and delivers competitive performance on visual quality comparison tasks, demonstrating robust quality assessment capabilities without any task-specific training. The code will be publicly available at https://github.com/clh124/QualiRAG.

VQualA 2025 Challenge on Visual Quality Comparison for Large Multimodal Models: Methods and Results

Sep 11, 2025

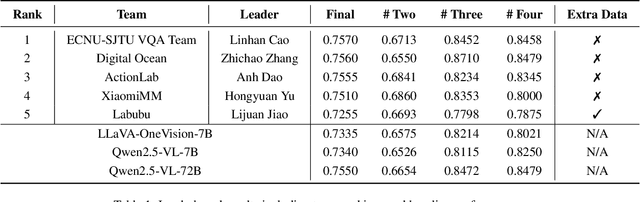

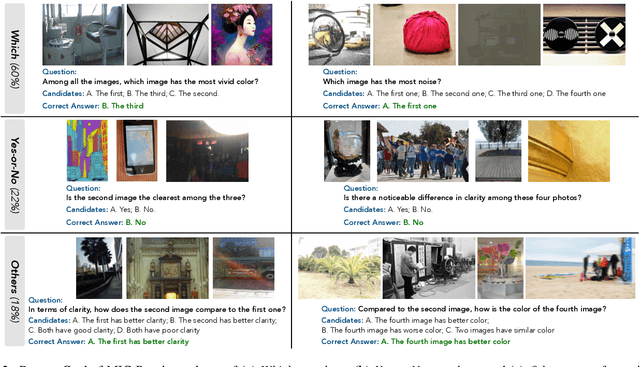

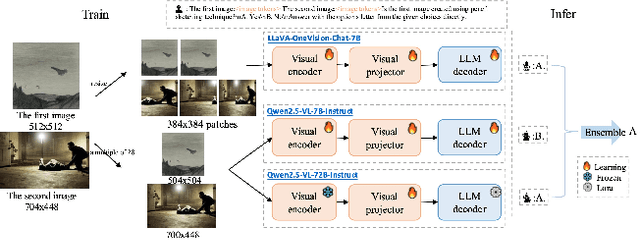

Abstract:This paper presents a summary of the VQualA 2025 Challenge on Visual Quality Comparison for Large Multimodal Models (LMMs), hosted as part of the ICCV 2025 Workshop on Visual Quality Assessment. The challenge aims to evaluate and enhance the ability of state-of-the-art LMMs to perform open-ended and detailed reasoning about visual quality differences across multiple images. To this end, the competition introduces a novel benchmark comprising thousands of coarse-to-fine grained visual quality comparison tasks, spanning single images, pairs, and multi-image groups. Each task requires models to provide accurate quality judgments. The competition emphasizes holistic evaluation protocols, including 2AFC-based binary preference and multi-choice questions (MCQs). Around 100 participants submitted entries, with five models demonstrating the emerging capabilities of instruction-tuned LMMs on quality assessment. This challenge marks a significant step toward open-domain visual quality reasoning and comparison and serves as a catalyst for future research on interpretable and human-aligned quality evaluation systems.

CompressedVQA-HDR: Generalized Full-reference and No-reference Quality Assessment Models for Compressed High Dynamic Range Videos

Jul 16, 2025

Abstract:Video compression is a standard procedure applied to all videos to minimize storage and transmission demands while preserving visual quality as much as possible. Therefore, evaluating the visual quality of compressed videos is crucial for guiding the practical usage and further development of video compression algorithms. Although numerous compressed video quality assessment (VQA) methods have been proposed, they often lack the generalization capability needed to handle the increasing diversity of video types, particularly high dynamic range (HDR) content. In this paper, we introduce CompressedVQA-HDR, an effective VQA framework designed to address the challenges of HDR video quality assessment. Specifically, we adopt the Swin Transformer and SigLip 2 as the backbone networks for the proposed full-reference (FR) and no-reference (NR) VQA models, respectively. For the FR model, we compute deep structural and textural similarities between reference and distorted frames using intermediate-layer features extracted from the Swin Transformer as its quality-aware feature representation. For the NR model, we extract the global mean of the final-layer feature maps from SigLip 2 as its quality-aware representation. To mitigate the issue of limited HDR training data, we pre-train the FR model on a large-scale standard dynamic range (SDR) VQA dataset and fine-tune it on the HDRSDR-VQA dataset. For the NR model, we employ an iterative mixed-dataset training strategy across multiple compressed VQA datasets, followed by fine-tuning on the HDRSDR-VQA dataset. Experimental results show that our models achieve state-of-the-art performance compared to existing FR and NR VQA models. Moreover, CompressedVQA-HDR-FR won first place in the FR track of the Generalizable HDR & SDR Video Quality Measurement Grand Challenge at IEEE ICME 2025. The code is available at https://github.com/sunwei925/CompressedVQA-HDR.
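The "global mean of the final-layer feature maps" used for the NR model can be illustrated with a small pooling sketch. This is not the authors' code: the function name `global_mean_pool` and the nested-list layout (channels, then rows, then columns) are assumptions made for illustration, and a real backbone would produce tensors rather than Python lists.

```python
def global_mean_pool(feature_maps):
    """Average each channel over its spatial positions.

    feature_maps: list of C channels, each an H x W nested list of numbers
    (a hypothetical stand-in for a backbone's final-layer output).
    Returns a list of C floats, one pooled value per channel.
    """
    pooled = []
    for channel in feature_maps:
        # Flatten the H x W grid, then take the arithmetic mean.
        values = [value for row in channel for value in row]
        pooled.append(sum(values) / len(values))
    return pooled


if __name__ == "__main__":
    # Two channels of size 2 x 2.
    maps = [
        [[1, 2], [3, 4]],
        [[0, 0], [0, 8]],
    ]
    print(global_mean_pool(maps))
```

Pooling this way discards spatial layout and keeps one value per channel, so the resulting vector has a fixed length regardless of frame resolution.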

Mesh Mamba: A Unified State Space Model for Saliency Prediction in Non-Textured and Textured Meshes

Apr 02, 2025

Abstract:Mesh saliency enhances the adaptability of 3D vision by identifying and emphasizing regions that naturally attract visual attention. To investigate the interaction between geometric structure and texture in shaping visual attention, we establish a comprehensive mesh saliency dataset, which is the first to systematically capture the differences in saliency distribution under both textured and non-textured visual conditions. Furthermore, we introduce Mesh Mamba, a unified saliency prediction model based on a state space model (SSM), designed to adapt across various mesh types. Mesh Mamba effectively analyzes the geometric structure of the mesh while seamlessly incorporating texture features into the topological framework, ensuring coherence throughout appearance-enhanced modeling. More importantly, through subgraph embedding and a bidirectional SSM, the model enables global context modeling for both local geometry and texture, preserving the topological structure and improving the understanding of visual details and structural complexity. Through extensive theoretical and empirical validation, our model not only improves performance across various mesh types but also demonstrates high scalability and versatility, particularly through cross validations of various visual features.

Pose as a Modality: A Psychology-Inspired Network for Personality Recognition with a New Multimodal Dataset

Mar 17, 2025

Abstract:In recent years, predicting Big Five personality traits from multimodal data has received significant attention in artificial intelligence (AI). However, existing computational models often fail to achieve satisfactory performance. Psychological research has shown a strong correlation between pose and personality traits, yet previous research has largely ignored pose data in computational models. To address this gap, we develop a novel multimodal dataset that incorporates full-body pose data. The dataset includes video recordings of 287 participants completing a virtual interview with 36 questions, along with self-reported Big Five personality scores as labels. To effectively utilize this multimodal data, we introduce the Psychology-Inspired Network (PINet), which consists of three key modules: Multimodal Feature Awareness (MFA), Multimodal Feature Interaction (MFI), and Psychology-Informed Modality Correlation Loss (PIMC Loss). The MFA module leverages the Vision Mamba Block to capture comprehensive visual features related to personality, while the MFI module efficiently fuses the multimodal features. The PIMC Loss, grounded in psychological theory, guides the model to emphasize different modalities for different personality dimensions. Experimental results show that the PINet outperforms several state-of-the-art baseline models. Furthermore, the three modules of PINet contribute almost equally to the model's overall performance. Incorporating pose data significantly enhances the model's performance, with the pose modality ranking mid-level in importance among the five modalities. These findings address the existing gap in personality-related datasets that lack full-body pose data and provide a new approach for improving the accuracy of personality prediction models, highlighting the importance of integrating psychological insights into AI frameworks.

Textured Mesh Saliency: Bridging Geometry and Texture for Human Perception in 3D Graphics

Dec 11, 2024

Abstract:Textured meshes significantly enhance the realism and detail of objects by mapping intricate texture details onto the geometric structure of 3D models. This advancement is valuable across various applications, including entertainment, education, and industry. While traditional mesh saliency studies focus on non-textured meshes, our work explores the complexities introduced by detailed texture patterns. We present a new dataset for textured mesh saliency, created through an innovative eye-tracking experiment in a six degrees of freedom (6-DOF) VR environment. This dataset addresses the limitations of previous studies by providing comprehensive eye-tracking data from multiple viewpoints, thereby advancing our understanding of human visual behavior and supporting more accurate and effective 3D content creation. Our proposed model predicts saliency maps for textured mesh surfaces by treating each triangular face as an individual unit and assigning a saliency density value to reflect the importance of each local surface region. The model incorporates a texture alignment module and a geometric extraction module, combined with an aggregation module to integrate texture and geometry for precise saliency prediction. We believe this approach will enhance the visual fidelity of geometric processing algorithms while ensuring efficient use of computational resources, which is crucial for real-time rendering and high-detail applications such as VR and gaming.

Self-Reasoning Assistant Learning for non-Abelian Gauge Fields Design

Jul 23, 2024

Abstract:Non-Abelian braiding has attracted substantial attention because of its pivotal role in describing the exchange behaviour of anyons, in which the input and outcome of non-Abelian braiding are connected by a unitary matrix. Implementing braiding in a classical system can assist the experimental investigation of non-Abelian physics. However, the design of non-Abelian gauge fields faces numerous challenges stemming from the intricate interplay of group structures, Lie algebra properties, representation theory, topology, and symmetry breaking. The extreme diversity makes it a powerful tool for the study of condensed matter physics. Although widely used data-driven artificial intelligence approaches have greatly promoted the development of physics, most works are limited to data-to-data design. Here we propose a self-reasoning assistant learning framework capable of directly generating non-Abelian gauge fields. This framework utilizes the forward diffusion process to capture and reproduce the complex patterns and details inherent in the target distribution through continuous transformation. Then the reverse diffusion process is used to bring the generated data closer to the original distribution. Thus, it has strong self-reasoning capabilities, allowing it to automatically discover feature representations and capture more subtle relationships from the dataset. Moreover, the self-reasoning eliminates the need for manual feature engineering and simplifies the process of model building. Our framework offers a disruptive paradigm shift to parse complex physical processes, automatically uncovering patterns from massive datasets.
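For readers unfamiliar with the forward diffusion process mentioned above, the following is a minimal sketch of the standard DDPM-style closed-form noising step, x_t = sqrt(ā_t) x_0 + sqrt(1 - ā_t) ε. The abstract does not specify the noise schedule or parameterization, so the linear schedule, its endpoint values, and all function names here are assumptions rather than the authors' design.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (assumed schedule, not from the paper)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Running product of (1 - beta_t), usually written alpha-bar_t."""
    products = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        products.append(running)
    return products


def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t from x_0 in one shot using the closed-form Gaussian."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    rng = random.Random(0)
    sample = [1.0, -0.5, 0.25]  # placeholder data, not a real gauge field
    print(forward_diffuse(sample, 10, alpha_bars, rng))
    print(forward_diffuse(sample, 999, alpha_bars, rng))
```

As the step index grows, the signal scale shrinks toward zero and the sample approaches pure Gaussian noise; a learned reverse process then starts from such noise and denoises step by step.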

Quaternion Generative Adversarial Neural Networks and Applications to Color Image Inpainting

Jun 17, 2024

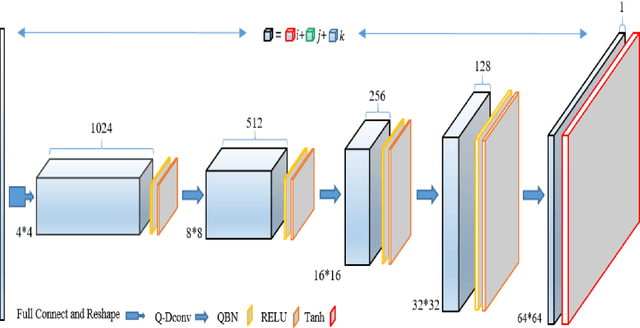

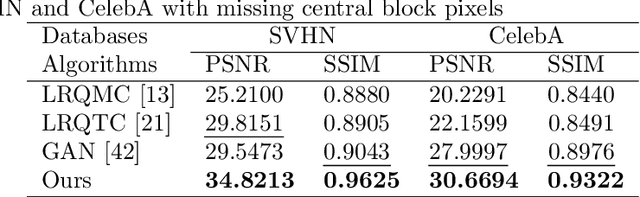

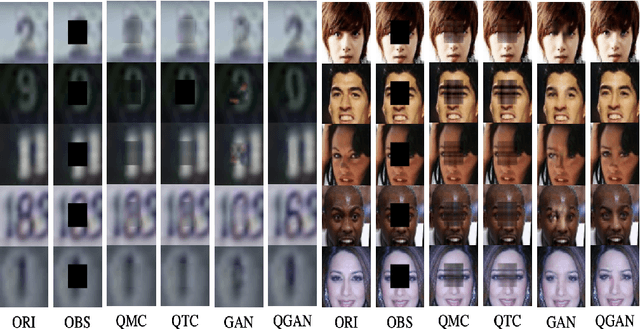

Abstract:Color image inpainting is a challenging task in imaging science. Existing methods are based on real-valued operations and process the red, green and blue channels of a color image separately, ignoring the correlation between channels. To make full use of this correlation, this paper proposes a Quaternion Generative Adversarial Neural Network (QGAN) model and related theory, and applies it to the problem of color image inpainting with large missing areas. Firstly, the definition of quaternion deconvolution is given and quaternion batch normalization is proposed. Secondly, these two modules are applied to generative adversarial networks to improve stability. Finally, QGAN is applied to color image inpainting and compared with other state-of-the-art algorithms. The experimental results show that QGAN is superior in color image inpainting with large missing areas.
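To see why quaternion operations couple the color channels, consider the Hamilton product that underlies quaternion-valued layers. The sketch below is illustrative only: encoding an RGB pixel as the pure quaternion 0 + r·i + g·j + b·k is a common convention in quaternion image processing and is assumed here, not taken from the abstract, and the function names are hypothetical.

```python
def hamilton_product(p, q):
    """Multiply two quaternions given as (w, x, y, z) tuples."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def rgb_to_quaternion(r, g, b):
    """Encode a pixel as a pure quaternion (assumed convention)."""
    return (0.0, float(r), float(g), float(b))


if __name__ == "__main__":
    pixel = rgb_to_quaternion(0.8, 0.4, 0.1)
    weight = (0.5, 0.1, -0.2, 0.3)  # arbitrary illustrative weight
    # Every output component mixes all three color channels.
    print(hamilton_product(weight, pixel))
```

Unlike three independent real-valued filters, a single quaternion weight makes each output component a combination of all three color channels, which is the cross-channel correlation the abstract refers to.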

Deep learning for the design of non-Hermitian topolectrical circuits

Feb 15, 2024

Abstract:Non-Hermitian topological phases can produce some remarkable properties, compared with their Hermitian counterparts, such as the breakdown of conventional bulk-boundary correspondence and the non-Hermitian topological edge mode. Here, we introduce several deep learning algorithms based on the multi-layer perceptron (MLP) and the convolutional neural network (CNN) to predict the winding of eigenvalues of non-Hermitian Hamiltonians. Subsequently, we use the smallest module of the periodic circuit as one unit to construct high-dimensional circuit data features. Further, we use the Dense Convolutional Network (DenseNet), a type of convolutional neural network that utilizes dense connections between layers, to design a non-Hermitian topolectrical Chern circuit, as the DenseNet algorithm is more suitable for processing high-dimensional data. Our results demonstrate the effectiveness of the deep learning network in capturing the global topological characteristics of a non-Hermitian system based on training data.
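The quantity being predicted, the winding of eigenvalues, can be computed directly for a simple case, which is roughly how training labels for such networks could be produced. The sketch below uses a single-band model with asymmetric hopping (in the spirit of the Hatano-Nelson model) as an assumed example; the abstract does not state which Hamiltonians were used, and the function and parameter names are hypothetical.

```python
import cmath
import math


def single_band_energy(k, amp_plus, amp_minus):
    """E(k) = amp_plus * e^{ik} + amp_minus * e^{-ik} (illustrative model)."""
    return amp_plus * cmath.exp(1j * k) + amp_minus * cmath.exp(-1j * k)


def spectral_winding(energy, base_energy=0j, steps=2000):
    """Count how many times E(k) encircles base_energy as k runs over [0, 2*pi].

    Sums the phase increments of E(k) - base_energy along a discretized loop.
    Assumes the curve does not pass through base_energy.
    """
    total_phase = 0.0
    previous = energy(0.0) - base_energy
    for n in range(1, steps + 1):
        k = 2.0 * math.pi * n / steps
        current = energy(k) - base_energy
        # Phase of the ratio is the small angle swept in this step.
        total_phase += cmath.phase(current / previous)
        previous = current
    return round(total_phase / (2.0 * math.pi))


if __name__ == "__main__":
    print(spectral_winding(lambda k: single_band_energy(k, 1.5, 0.5)))
    print(spectral_winding(lambda k: single_band_energy(k, 0.5, 1.5)))
```

In this model the spectrum traces an ellipse in the complex plane; the sign of the winding flips when the dominant hopping direction is reversed, and the winding vanishes for a base energy outside the ellipse.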
