Ciyuan Peng

Multimodal Visual Surrogate Compression for Alzheimer's Disease Classification

Jan 29, 2026

Abstract:High-dimensional structural MRI (sMRI) images are widely used for Alzheimer's Disease (AD) diagnosis. Most existing methods for sMRI representation learning rely on 3D architectures (e.g., 3D CNNs), slice-wise feature extraction with late aggregation, or training-free feature extraction using 2D foundation models (e.g., DINO). However, these three paradigms suffer from high computational cost, loss of cross-slice relations, and limited ability to extract discriminative features, respectively. To address these challenges, we propose Multimodal Visual Surrogate Compression (MVSC). It learns to compress and adapt large 3D sMRI volumes into compact 2D features, termed visual surrogates, which are better aligned with frozen 2D foundation models to extract powerful representations for final AD classification. MVSC has two key components: a Volume Context Encoder that captures global cross-slice context under textual guidance, and an Adaptive Slice Fusion module that aggregates slice-level information in a text-enhanced, patch-wise manner. Extensive experiments on three large-scale Alzheimer's disease benchmarks demonstrate that MVSC performs favourably on both binary and multi-class classification tasks compared against state-of-the-art methods.

BrainMAP: Multimodal Graph Learning For Efficient Brain Disease Localization

Jun 12, 2025

Abstract:Recent years have seen a surge in research focused on leveraging graph learning techniques to detect neurodegenerative diseases. However, existing graph-based approaches typically lack the ability to localize and extract the specific brain regions driving neurodegenerative pathology within the full connectome. Additionally, recent works on multimodal brain graph models often suffer from high computational complexity, limiting their practical use in resource-constrained devices. In this study, we present BrainMAP, a novel multimodal graph learning framework designed for precise and computationally efficient identification of brain regions affected by neurodegenerative diseases. First, BrainMAP utilizes an atlas-driven filtering approach guided by the AAL atlas to pinpoint and extract critical brain subgraphs. Unlike recent state-of-the-art methods, which model the entire brain network, BrainMAP achieves more than 50% reduction in computational overhead by concentrating on disease-relevant subgraphs. Second, we employ an advanced multimodal fusion process comprising cross-node attention to align functional magnetic resonance imaging (fMRI) and diffusion tensor imaging (DTI) data, coupled with an adaptive gating mechanism to blend and integrate these modalities dynamically. Experimental results demonstrate that BrainMAP outperforms state-of-the-art methods in computational efficiency, without compromising predictive accuracy.
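The adaptive gating idea mentioned in the abstract can be illustrated with a generic gated-fusion sketch. This is not BrainMAP's implementation: the abstract does not give the gate's form, so the function name `gated_fusion`, the per-dimension (diagonal) gate, and the parameters `w_f`, `w_d`, and `bias` are all assumptions chosen for illustration. The gate is a sigmoid of a weighted sum of the two inputs, and the output is a convex blend of the fMRI and DTI features.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(fmri, dti, w_f, w_d, bias):
    """Blend two equal-length feature vectors with a per-dimension gate.

    Hypothetical form (an assumption, not taken from the paper):
        g_i     = sigmoid(w_f[i] * fmri[i] + w_d[i] * dti[i] + bias[i])
        fused_i = g_i * fmri[i] + (1 - g_i) * dti[i]
    """
    lengths = {len(fmri), len(dti), len(w_f), len(w_d), len(bias)}
    if len(lengths) != 1:
        raise ValueError("all inputs must have the same length")

    fused = []
    for f, d, wf, wd, b in zip(fmri, dti, w_f, w_d, bias):
        gate = sigmoid(wf * f + wd * d + b)  # how much to trust the fMRI feature
        fused.append(gate * f + (1.0 - gate) * d)
    return fused


if __name__ == "__main__":
    # With zero weights and bias the gate is 0.5, so the result is a plain average.
    print(gated_fusion([1.0, 2.0], [3.0, 4.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]))
```

In a trained model the gate parameters would be learned, so the blend can lean toward whichever modality is more informative for a given feature; the fixed values here only show the mechanics.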

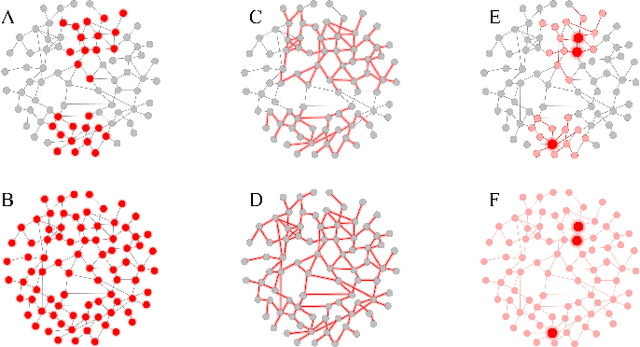

Biologically Plausible Brain Graph Transformer

Feb 13, 2025

Abstract:State-of-the-art brain graph analysis methods fail to fully encode the small-world architecture of brain graphs (accompanied by the presence of hubs and functional modules), and therefore lack biological plausibility to some extent. This limitation hinders their ability to accurately represent the brain's structural and functional properties, thereby restricting the effectiveness of machine learning models in tasks such as brain disorder detection. In this work, we propose a novel Biologically Plausible Brain Graph Transformer (BioBGT) that encodes the small-world architecture inherent in brain graphs. Specifically, we present a network entanglement-based node importance encoding technique that captures the structural importance of nodes in global information propagation during brain graph communication, highlighting the biological properties of the brain structure. Furthermore, we introduce a functional module-aware self-attention to preserve the functional segregation and integration characteristics of brain graphs in the learned representations. Experimental results on three benchmark datasets demonstrate that BioBGT outperforms state-of-the-art models, enhancing biologically plausible brain graph representations for various brain graph analytical tasks.

GraphDART: Graph Distillation for Efficient Advanced Persistent Threat Detection

Jan 06, 2025

Abstract:Cyber-physical-social systems (CPSSs) have emerged in many applications over recent decades, requiring increased attention to security concerns. The rise of sophisticated threats like Advanced Persistent Threats (APTs) makes ensuring security in CPSSs particularly challenging. Provenance graph analysis has proven effective for tracing and detecting anomalies within systems, but the sheer size and complexity of these graphs hinder the efficiency of existing methods, especially those relying on graph neural networks (GNNs). To address these challenges, we present GraphDART, a modular framework designed to distill provenance graphs into compact yet informative representations, enabling scalable and effective anomaly detection. GraphDART can take advantage of diverse graph distillation techniques, including classic and modern graph distillation methods, to condense large provenance graphs while preserving essential structural and contextual information. This approach significantly reduces computational overhead, allowing GNNs to learn from distilled graphs efficiently and enhance detection performance. Extensive evaluations on benchmark datasets demonstrate the robustness of GraphDART in detecting malicious activities across cyber-physical-social systems. By optimizing computational efficiency, GraphDART provides a scalable and practical solution to safeguard interconnected environments against APTs.

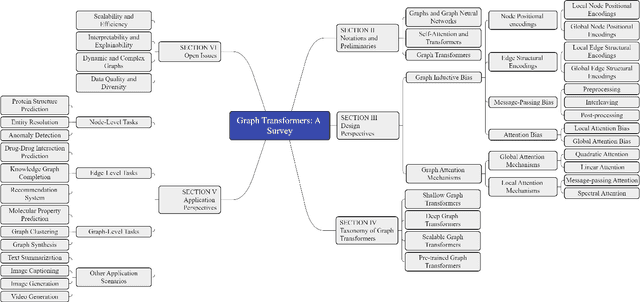

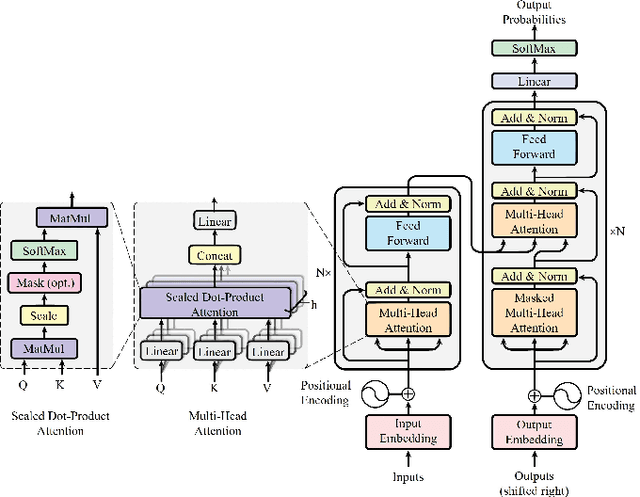

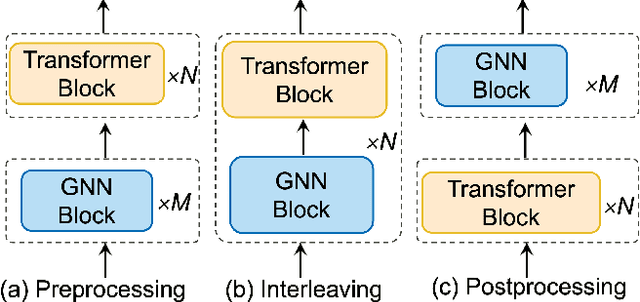

Graph Transformers: A Survey

Jul 13, 2024

Abstract:Graph transformers are a recent advancement in machine learning, offering a new class of neural network models for graph-structured data. The synergy between transformers and graph learning demonstrates strong performance and versatility across various graph-related tasks. This survey provides an in-depth review of recent progress and challenges in graph transformer research. We begin with foundational concepts of graphs and transformers. We then explore design perspectives of graph transformers, focusing on how they integrate graph inductive biases and graph attention mechanisms into the transformer architecture. Furthermore, we propose a taxonomy classifying graph transformers based on depth, scalability, and pre-training strategies, summarizing key principles for effective development of graph transformer models. Beyond technical analysis, we discuss the applications of graph transformer models for node-level, edge-level, and graph-level tasks, exploring their potential in other application scenarios as well. Finally, we identify remaining challenges in the field, such as scalability and efficiency, generalization and robustness, interpretability and explainability, dynamic and complex graphs, as well as data quality and diversity, charting future directions for graph transformer research.

Learning on Multimodal Graphs: A Survey

Feb 07, 2024

Abstract:Multimodal data pervades various domains, including healthcare, social media, and transportation, where multimodal graphs play a pivotal role. Machine learning on multimodal graphs, referred to as multimodal graph learning (MGL), is essential for successful artificial intelligence (AI) applications. The burgeoning research in this field encompasses diverse graph data types and modalities, learning techniques, and application scenarios. This survey paper conducts a comparative analysis of existing works in multimodal graph learning, elucidating how multimodal learning is achieved across different graph types and exploring the characteristics of prevalent learning techniques. Additionally, we delineate significant applications of multimodal graph learning and offer insights into future directions in this domain. Consequently, this paper serves as a foundational resource for researchers seeking to comprehend existing MGL techniques and their applicability across diverse scenarios.

Knowledge Graphs: Opportunities and Challenges

Mar 24, 2023

Abstract:With the explosive growth of artificial intelligence (AI) and big data, it has become vitally important to organize and represent the enormous volume of knowledge appropriately. As graph data, knowledge graphs accumulate and convey knowledge of the real world. It is well recognized that knowledge graphs effectively represent complex information; hence, they have rapidly gained the attention of academia and industry in recent years. Thus, to develop a deeper understanding of knowledge graphs, this paper presents a systematic overview of this field. Specifically, we focus on the opportunities and challenges of knowledge graphs. We first review the opportunities of knowledge graphs in terms of two aspects: (1) AI systems built upon knowledge graphs; (2) potential application fields of knowledge graphs. Then, we thoroughly discuss severe technical challenges in this field, such as knowledge graph embeddings, knowledge acquisition, knowledge graph completion, knowledge fusion, and knowledge reasoning. We expect that this survey will shed new light on future research and the development of knowledge graphs.

Quantum Graph Learning: Frontiers and Outlook

Feb 02, 2023

Abstract:Quantum theory has shown its superiority in enhancing machine learning. However, the use of quantum theory to enhance graph learning is in its infancy. This survey investigates the current advances in quantum graph learning (QGL) from three perspectives, i.e., underlying theories, methods, and prospects. We first look at QGL and discuss the mutualism of quantum theory and graph learning, the specificity of graph-structured data, and the bottleneck of graph learning, respectively. A new taxonomy of QGL is presented, i.e., quantum computing on graphs, quantum graph representation, and quantum circuits for graph neural networks. Pitfalls are then highlighted and explained. This survey aims to provide a brief but insightful introduction to this emerging field, along with a detailed discussion of frontiers and outlook yet to be investigated.

Physics-Informed Graph Learning: A Survey

Feb 22, 2022

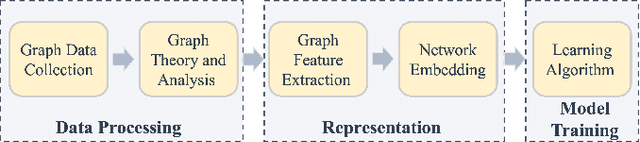

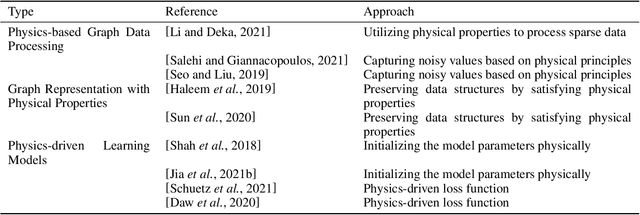

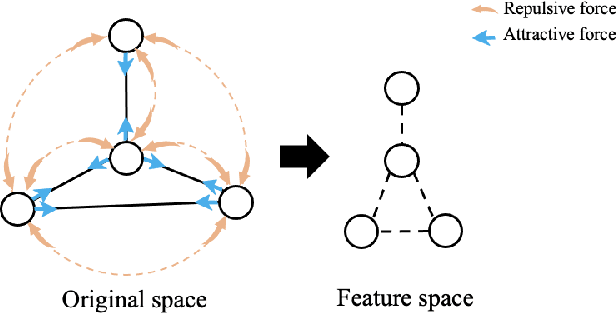

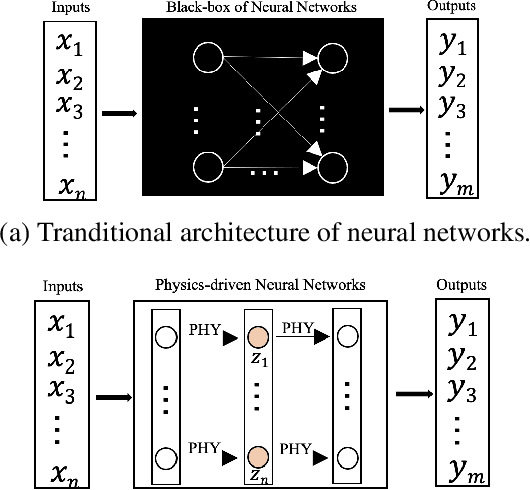

Abstract:The rapid development of graph learning in recent years has led to numerous applications across diverse fields. Among the main associated challenges are the volume and complexity of graph data. Much research has revolved around preserving graph data in a low-dimensional space. However, graph learning models suffer from the inability to maintain original graph information. To compensate for this inability, physics-informed graph learning (PIGL) is emerging. PIGL incorporates physics rules while performing graph learning, which opens up considerable potential. This paper presents a systematic review of PIGL methods. We begin by introducing a unified framework of graph learning models, and then examine existing PIGL methods in relation to the unified framework. We also discuss several future challenges for PIGL. This survey paper is expected to stimulate innovative research and development activities pertaining to PIGL.
