Fengling Han

GraphDART: Graph Distillation for Efficient Advanced Persistent Threat Detection

Jan 06, 2025

Abstract: Cyber-physical-social systems (CPSSs) have emerged in many applications over recent decades, requiring increased attention to security concerns. The rise of sophisticated threats like Advanced Persistent Threats (APTs) makes ensuring security in CPSSs particularly challenging. Provenance graph analysis has proven effective for tracing and detecting anomalies within systems, but the sheer size and complexity of these graphs hinder the efficiency of existing methods, especially those relying on graph neural networks (GNNs). To address these challenges, we present GraphDART, a modular framework designed to distill provenance graphs into compact yet informative representations, enabling scalable and effective anomaly detection. GraphDART can take advantage of diverse graph distillation techniques, including classic and modern graph distillation methods, to condense large provenance graphs while preserving essential structural and contextual information. This approach significantly reduces computational overhead, allowing GNNs to learn from distilled graphs efficiently and enhance detection performance. Extensive evaluations on benchmark datasets demonstrate the robustness of GraphDART in detecting malicious activities across cyber-physical-social systems. By optimizing computational efficiency, GraphDART provides a scalable and practical solution to safeguard interconnected environments against APTs.

Graph2text or Graph2token: A Perspective of Large Language Models for Graph Learning

Jan 02, 2025

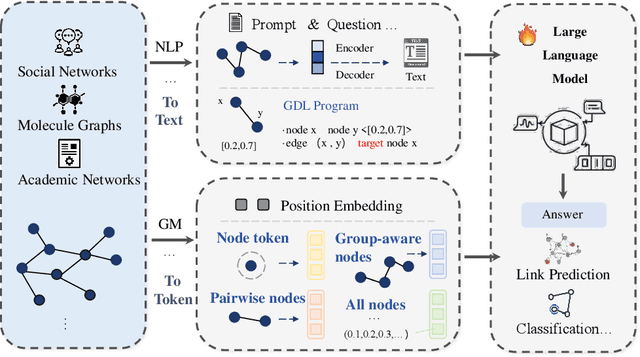

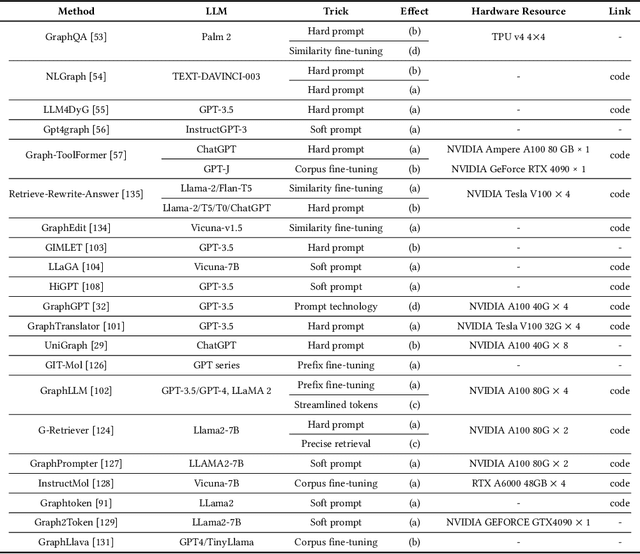

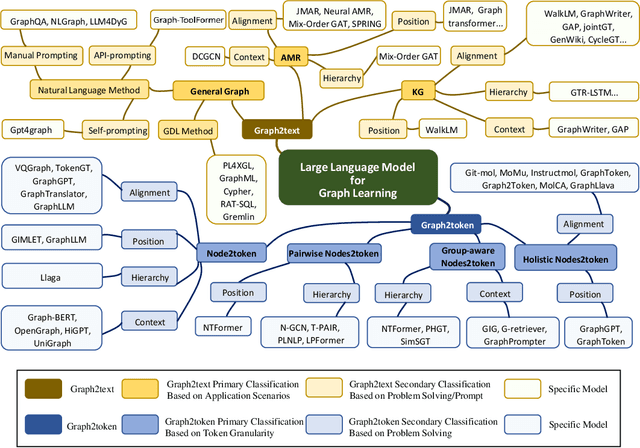

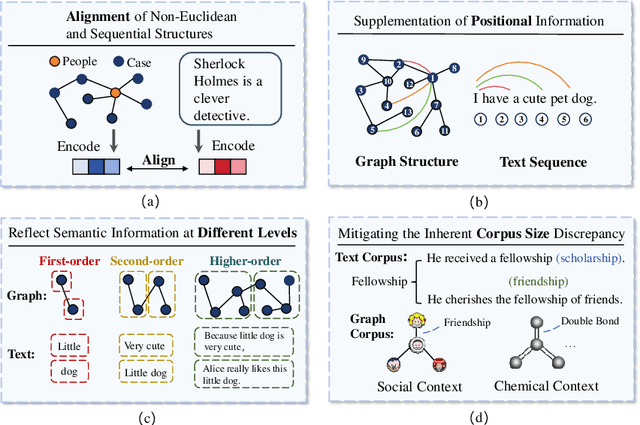

Abstract: Graphs are data structures used to represent irregular networks and are prevalent in numerous real-world applications. Previous methods directly model graph structures and achieve significant success. However, these methods encounter bottlenecks due to the inherent irregularity of graphs. An innovative solution is converting graphs into textual representations, thereby harnessing the powerful capabilities of Large Language Models (LLMs) to process and comprehend graphs. In this paper, we present a comprehensive review of methodologies for applying LLMs to graphs, termed LLM4graph. The core of LLM4graph lies in transforming graphs into texts for LLMs to understand and analyze. Thus, we propose a novel taxonomy of LLM4graph methods from the perspective of this transformation. Specifically, existing methods can be divided into two paradigms: Graph2text and Graph2token, which transform graphs into texts or tokens, respectively, as the input of LLMs. We point out four challenges that arise during the transformation and use them to present existing methods systematically from a problem-oriented perspective. For practical concerns, we provide a guideline for researchers on selecting appropriate models and LLMs for different graphs and hardware constraints. We also identify five future research directions for LLM4graph.
