Chuqin Geng

Extracting Interpretable Logic Rules from Graph Neural Networks

Mar 25, 2025

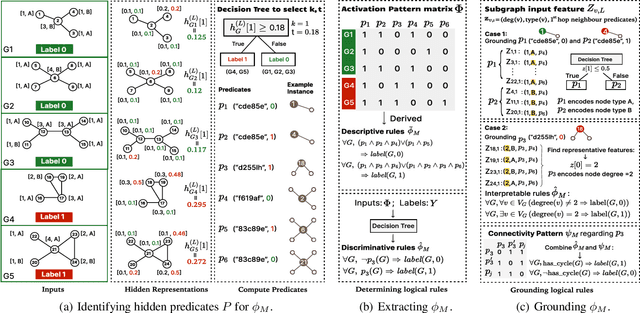

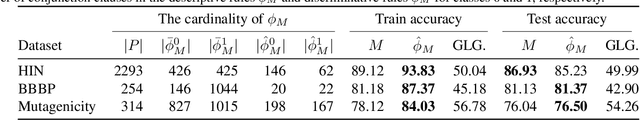

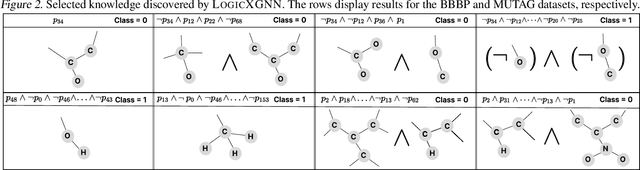

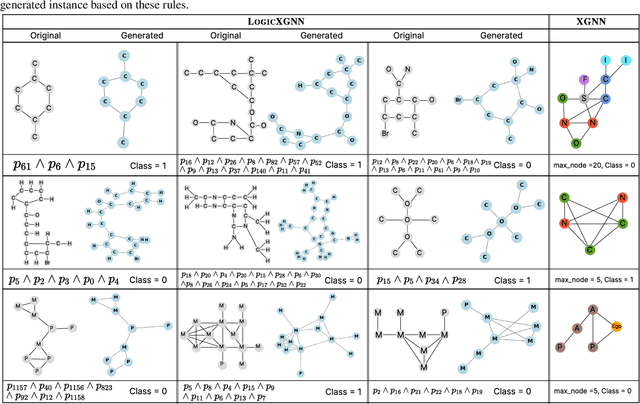

Abstract:Graph neural networks (GNNs) operate over both input feature spaces and combinatorial graph structures, making it challenging to understand the rationale behind their predictions. As GNNs gain widespread popularity and demonstrate success across various domains, such as drug discovery, studying their interpretability has become a critical task. To address this, many explainability methods have been proposed, with recent efforts shifting from instance-specific explanations to global concept-based explainability. However, these approaches face several limitations, such as relying on predefined concepts and explaining only a limited set of patterns. To address this, we propose a novel framework, LOGICXGNN, for extracting interpretable logic rules from GNNs. LOGICXGNN is model-agnostic, efficient, and data-driven, eliminating the need for predefined concepts. More importantly, it can serve as a rule-based classifier and even outperform the original neural models. Its interpretability facilitates knowledge discovery, as demonstrated by its ability to extract detailed and accurate chemistry knowledge that is often overlooked by existing methods. Another key advantage of LOGICXGNN is its ability to generate new graph instances in a controlled and transparent manner, offering significant potential for applications such as drug design. We empirically demonstrate these merits through experiments on real-world datasets such as MUTAG and BBBP.

Learning Interpretable Logic Rules from Deep Vision Models

Mar 13, 2025

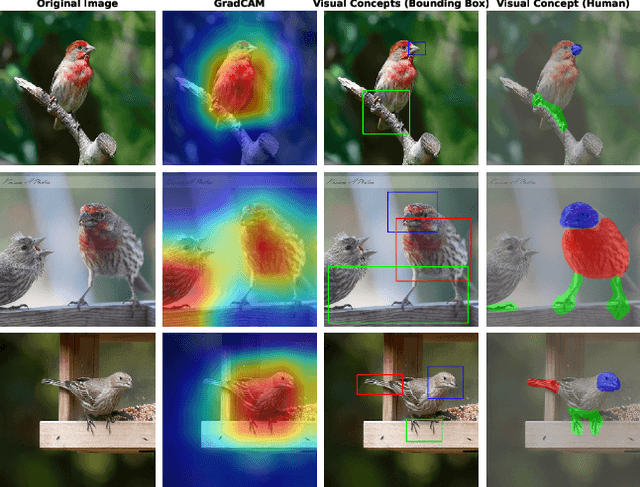

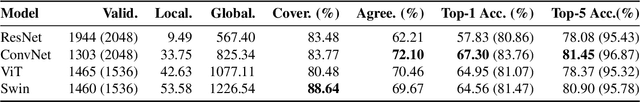

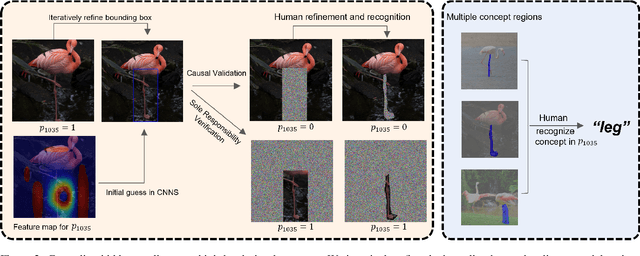

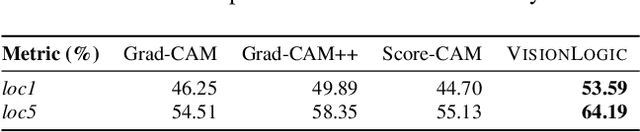

Abstract:We propose a general framework called VisionLogic to extract interpretable logic rules from deep vision models, with a focus on image classification tasks. Given any deep vision model that uses a fully connected layer as the output head, VisionLogic transforms neurons in the last layer into predicates and grounds them into vision concepts using causal validation. In this way, VisionLogic can provide local explanations for single images and global explanations for specific classes in the form of logic rules. Compared to existing interpretable visualization tools such as saliency maps, VisionLogic addresses several key challenges, including the lack of causal explanations, overconfidence in visualizations, and ambiguity in interpretation. VisionLogic also facilitates the study of visual concepts encoded by predicates, particularly how they behave under perturbation -- an area that remains underexplored in the field of hidden semantics. Apart from providing better visual explanations and insights into the visual concepts learned by the model, we show that VisionLogic retains most of the neural network's discriminative power in an interpretable and transparent manner. We envision it as a bridge between complex model behavior and human-understandable explanations, providing trustworthy and actionable insights for real-world applications.

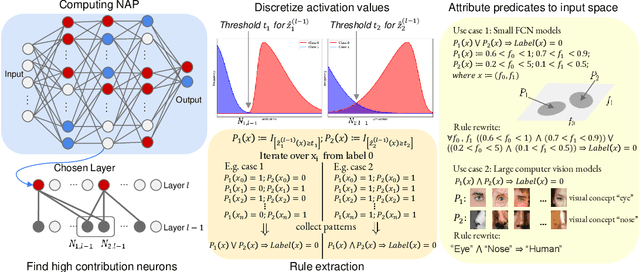

Decoding Interpretable Logic Rules from Neural Networks

Jan 14, 2025

Abstract:As deep neural networks continue to excel across various domains, their black-box nature has raised concerns about transparency and trust. In particular, interpretability has become increasingly essential for applications that demand high safety and knowledge rigor, such as drug discovery, autonomous driving, and genomics. However, progress in understanding even the simplest deep neural networks - such as fully connected networks - has been limited, despite their role as foundational elements in state-of-the-art models like ResNet and Transformer. In this paper, we address this challenge by introducing NeuroLogic, a novel approach for decoding interpretable logic rules from neural networks. NeuroLogic leverages neural activation patterns to capture the model's critical decision-making processes, translating them into logical rules represented by hidden predicates. Thanks to its flexible design in the grounding phase, NeuroLogic can be adapted to a wide range of neural networks. For simple fully connected neural networks, hidden predicates can be grounded in certain split patterns of original input features to derive decision-tree-like rules. For large, complex vision neural networks, NeuroLogic grounds hidden predicates into high-level visual concepts that are understandable to humans. Our empirical study demonstrates that NeuroLogic can extract global and interpretable rules from state-of-the-art models such as ResNet, a task at which existing work struggles. We believe NeuroLogic can help pave the way for understanding the black-box nature of neural networks.

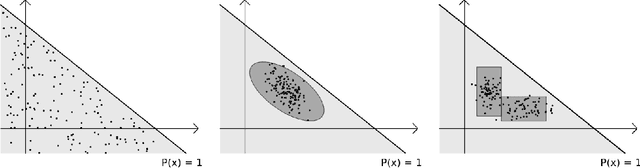

Learning Minimal NAP Specifications for Neural Network Verification

Apr 06, 2024

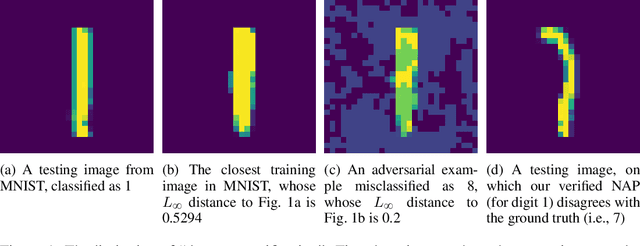

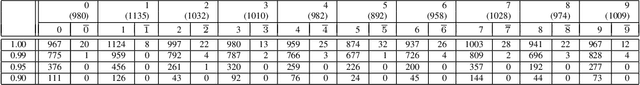

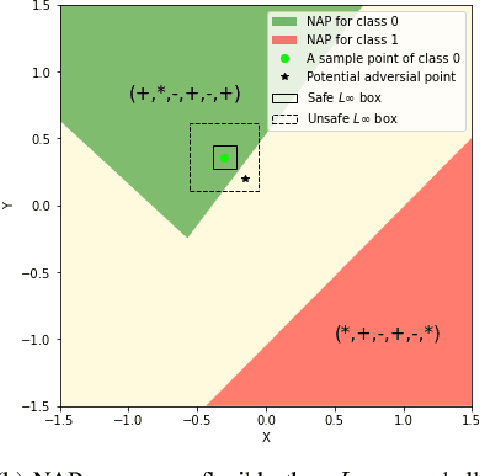

Abstract:Specifications play a crucial role in neural network verification. They define the precise input regions we aim to verify, typically represented as L-infinity norm balls. While recent research suggests using neural activation patterns (NAPs) as specifications for verifying unseen test set data, it focuses on computing the most refined NAPs, often limited to very small regions in the input space. In this paper, we study the following problem: Given a neural network, find a minimal (coarsest) NAP that is sufficient for formal verification of the network's robustness. Finding the minimal NAP specification not only expands verifiable bounds but also provides insights into which neurons contribute to the model's robustness. To address this problem, we propose several exact and approximate approaches. Our exact approaches leverage the verification tool to find minimal NAP specifications in either a deterministic or statistical manner. Whereas the approximate methods efficiently estimate minimal NAPs using adversarial examples and local gradients, without making calls to the verification tool. This allows us to inspect potential causal links between neurons and the robustness of state-of-the-art neural networks, a task for which existing verification frameworks fail to scale. Our experimental results suggest that minimal NAP specifications require much smaller fractions of neurons compared to the most refined NAP specifications, yet they can significantly expand the verifiable boundaries to several orders of magnitude larger.

Can ChatGPT Pass An Introductory Level Functional Language Programming Course?

May 04, 2023

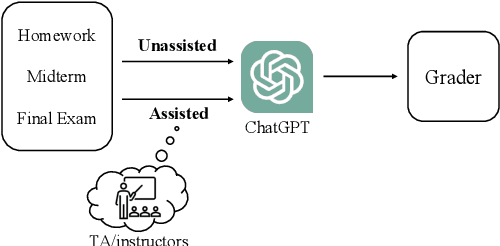

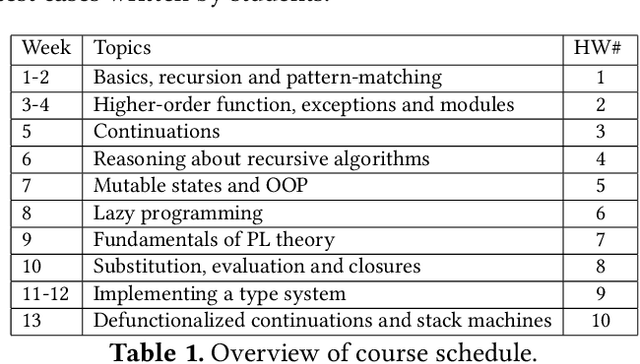

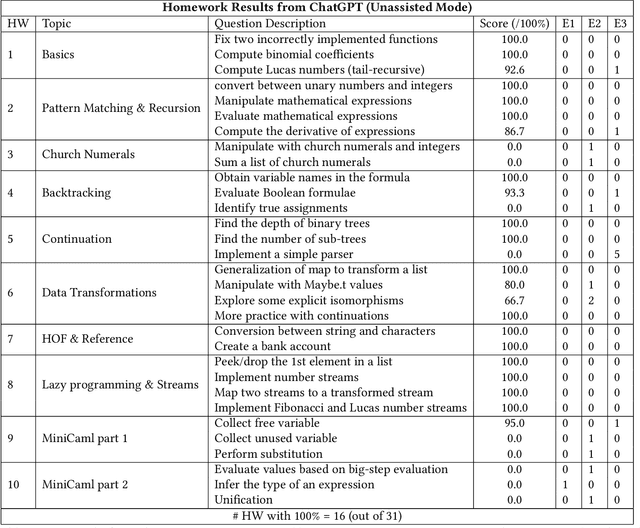

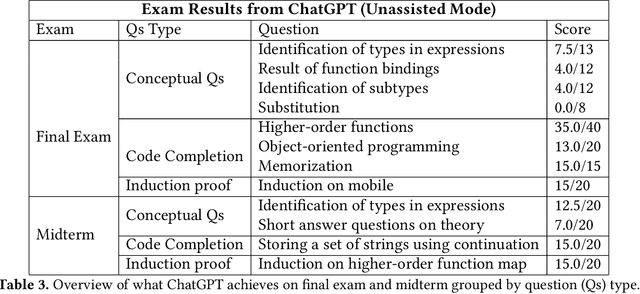

Abstract:The recent introduction of ChatGPT has drawn significant attention from both industry and academia due to its impressive capabilities in solving a diverse range of tasks, including language translation, text summarization, and computer programming. Its capability for writing, modifying, and even correcting code together with its ease of use and access is already dramatically impacting computer science education. This paper aims to explore how well ChatGPT can perform in an introductory-level functional language programming course. In our systematic evaluation, we treated ChatGPT as one of our students and demonstrated that it can achieve a grade B- and its rank in the class is 155 out of 314 students overall. Our comprehensive evaluation provides valuable insights into ChatGPT's impact from both student and instructor perspectives. Additionally, we identify several potential benefits that ChatGPT can offer to both groups. Overall, we believe that this study significantly clarifies and advances our understanding of ChatGPT's capabilities and potential impact on computer science education.

Scalar Invariant Networks with Zero Bias

Nov 15, 2022

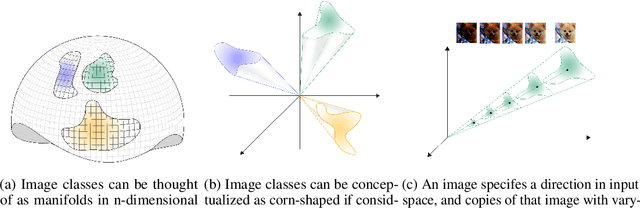

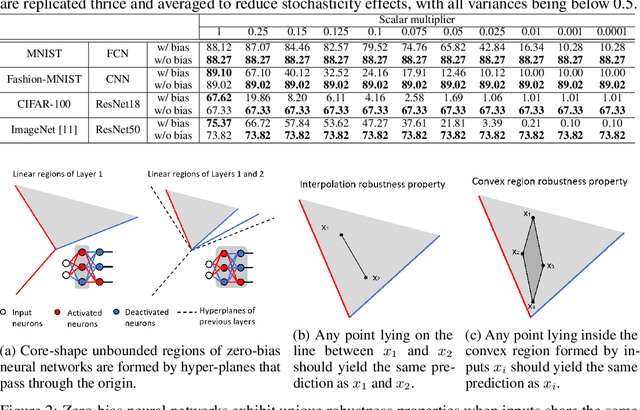

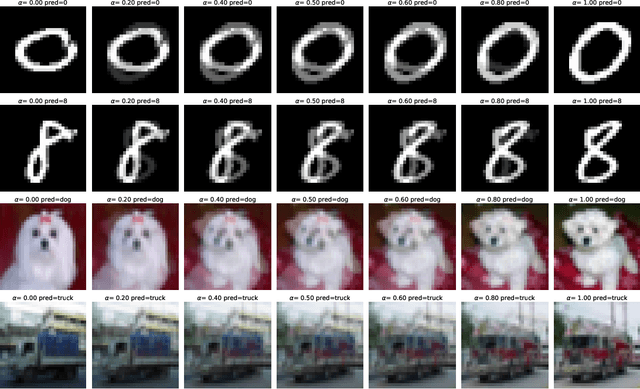

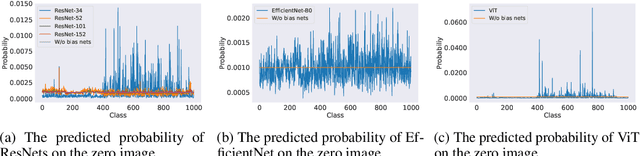

Abstract:Just like weights, bias terms are the learnable parameters of many popular machine learning models, including neural networks. Biases are believed to effectively increase the representational power of neural networks to solve a wide range of tasks in computer vision. However, we argue that if we consider the intrinsic distribution of images in the input space as well as some desired properties a model should have from the first principles, biases can be completely ignored in addressing many image-related tasks, such as image classification. Our observation indicates that zero-bias neural networks could perform comparably to neural networks with bias at least on practical image classification tasks. In addition, we prove that zero-bias neural networks possess a nice property called scalar (multiplication) invariance, which has great potential in learning and understanding images captured under poor illumination conditions. We then extend scalar invariance to more general cases that allow us to verify certain convex regions of the input space. Our experimental results show that zero-bias models could outperform the state-of-art models by a very large margin (over 60%) when predicting images under a low illumination condition (multiplying a scalar of 0.01); while achieving the same-level performance as normal models.

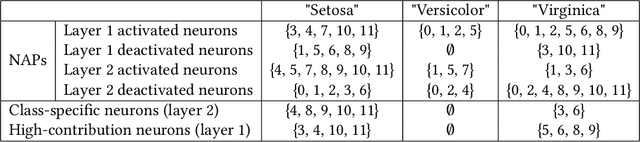

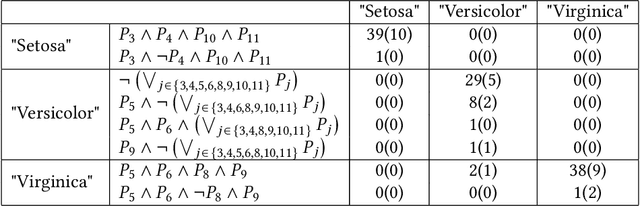

Toward Reliable Neural Specifications

Nov 14, 2022

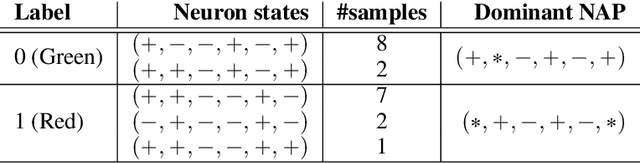

Abstract:Having reliable specifications is an unavoidable challenge in achieving verifiable correctness, robustness, and interpretability of AI systems. Existing specifications for neural networks are in the paradigm of data as specification. That is, the local neighborhood centering around a reference input is considered to be correct (or robust). However, our empirical study shows that such a specification is extremely overfitted since usually no data points from the testing set lie in the certified region of the reference input, making them impractical for real-world applications. We propose a new family of specifications called neural representation as specification, which uses the intrinsic information of neural networks - neural activation patterns (NAP), rather than input data to specify the correctness and/or robustness of neural network predictions. We present a simple statistical approach to mining dominant neural activation patterns. We analyze NAPs from a statistical point of view and find that a single NAP can cover a large number of training and testing data points whereas ad hoc data-as-specification only covers the given reference data point. To show the effectiveness of discovered NAPs, we formally verify several important properties, such as various types of misclassifications will never happen for a given NAP, and there is no-ambiguity between different NAPs. We show that by using NAP, we can verify the prediction of the entire input space, while still recalling 84% of the data. Thus, we argue that using NAPs is a more reliable and extensible specification for neural network verification.

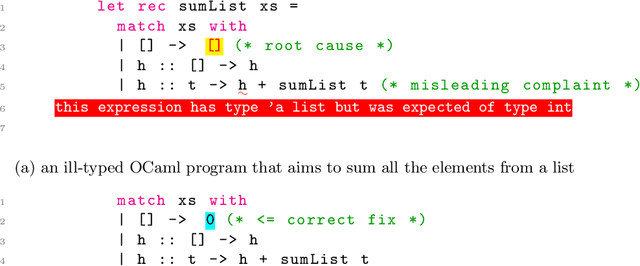

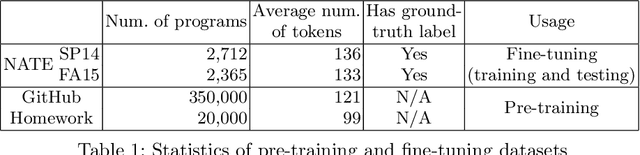

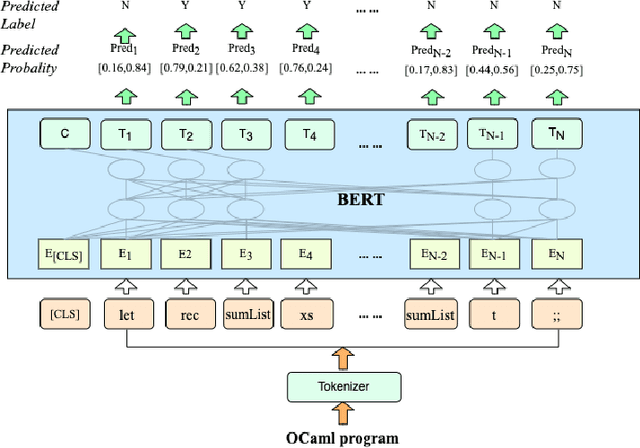

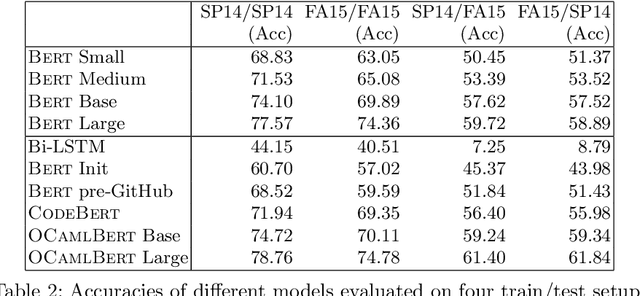

Novice Type Error Diagnosis with Natural Language Models

Oct 07, 2022

Abstract:Strong static type systems help programmers eliminate many errors without much burden of supplying type annotations. However, this flexibility makes it highly non-trivial to diagnose ill-typed programs, especially for novice programmers. Compared to classic constraint solving and optimization-based approaches, the data-driven approach has shown great promise in identifying the root causes of type errors with higher accuracy. Instead of relying on hand-engineered features, this work explores natural language models for type error localization, which can be trained in an end-to-end fashion without requiring any features. We demonstrate that, for novice type error diagnosis, the language model-based approach significantly outperforms the previous state-of-the-art data-driven approach. Specifically, our model could predict type errors correctly 62% of the time, outperforming the state-of-the-art Nate's data-driven model by 11%, in a more rigorous accuracy metric. Furthermore, we also apply structural probes to explain the performance difference between different language models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge